将 Azure AI 服务与 Microsoft Fabric 中的 SynapseML 配合使用

Azure AI 服务通过现成的预生成可定制 API 和模型,帮助开发人员和组织快速创建智能、前沿、面向市场且负责任的应用程序。 在本文中,你将使用 Azure AI 服务中提供的多项服务来执行以下任务:文本分析、翻译、文档智能、计算机视觉、图像搜索、语音转文本与文本转语音、异常情况检测,以及从 Web API 中提取数据。

Azure AI 服务的目标是帮助开发人员创建可以看、听、说、理解甚至开始推理的应用程序。 Azure AI 服务中的服务目录可分为五大主要支柱类别:视觉、语音、语言、Web 搜索和决策。

先决条件

获取 Microsoft Fabric 订阅。 或者注册免费的 Microsoft Fabric 试用版。

登录 Microsoft Fabric。

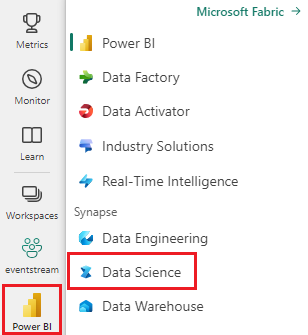

使用主页左侧的体验切换器切换到 Synapse 数据科学体验。

- 创建新的笔记本。

- 将笔记本附加到湖屋。 在笔记本左侧,选择“添加”以添加现有的湖屋或创建新湖屋。

- 按照快速入门:为 Azure AI 服务创建多服务资源获取 Azure AI 服务密钥。 复制要在以下代码示例中使用的密钥值。

准备你的系统

首先,导入所需的库并初始化 Spark 会话。

from pyspark.sql.functions import udf, col

from synapse.ml.io.http import HTTPTransformer, http_udf

from requests import Request

from pyspark.sql.functions import lit

from pyspark.ml import PipelineModel

from pyspark.sql.functions import col

import os

from pyspark.sql import SparkSession

from synapse.ml.core.platform import *

# Bootstrap Spark Session

spark = SparkSession.builder.getOrCreate()

导入 Azure AI 服务库,并将以下代码片段中的密钥和位置替换为 Azure AI 服务密钥和位置。

from synapse.ml.cognitive import *

# A general Azure AI services key for Text Analytics, Vision and Document Intelligence (or use separate keys that belong to each service)

service_key = "<YOUR-KEY-VALUE>" # Replace <YOUR-KEY-VALUE> with your Azure AI service key, check prerequisites for more details

service_loc = "eastus"

# A Bing Search v7 subscription key

bing_search_key = "<YOUR-KEY-VALUE>" # Replace <YOUR-KEY-VALUE> with your Bing v7 subscription key, check prerequisites for more details

# An Anomaly Detector subscription key

anomaly_key = <"YOUR-KEY-VALUE"> # Replace <YOUR-KEY-VALUE> with your anomaly service key, check prerequisites for more details

anomaly_loc = "westus2"

# A Translator subscription key

translator_key = "<YOUR-KEY-VALUE>" # Replace <YOUR-KEY-VALUE> with your translator service key, check prerequisites for more details

translator_loc = "eastus"

# An Azure search key

search_key = "<YOUR-KEY-VALUE>" # Replace <YOUR-KEY-VALUE> with your search key, check prerequisites for more details

对文本执行情绪分析

文本分析服务提供了几种从文本中提取智能见解的算法。 例如,可以使用该服务发现一些输入文本当中的情绪。 服务将返回介于 0.0 和 1.0 之间的分数,其中低分数表示负面情绪,高分表示正面情绪。

下面的代码示例返回三个简单句子的情绪。

# Create a dataframe that's tied to it's column names

df = spark.createDataFrame(

[

("I am so happy today, its sunny!", "en-US"),

("I am frustrated by this rush hour traffic", "en-US"),

("The cognitive services on spark aint bad", "en-US"),

],

["text", "language"],

)

# Run the Text Analytics service with options

sentiment = (

TextSentiment()

.setTextCol("text")

.setLocation(service_loc)

.setSubscriptionKey(service_key)

.setOutputCol("sentiment")

.setErrorCol("error")

.setLanguageCol("language")

)

# Show the results of your text query in a table format

display(

sentiment.transform(df).select(

"text", col("sentiment.document.sentiment").alias("sentiment")

)

)

对健康状况数据执行文本分析

健康服务文本分析从非结构化文本(如医生处方、出院小结、临床文档和电子健康记录)中提取和标记相关的医疗信息。

以下代码示例分析医生笔记中的文本并将其转换为结构化数据。

df = spark.createDataFrame(

[

("20mg of ibuprofen twice a day",),

("1tsp of Tylenol every 4 hours",),

("6-drops of Vitamin B-12 every evening",),

],

["text"],

)

healthcare = (

AnalyzeHealthText()

.setSubscriptionKey(service_key)

.setLocation(service_loc)

.setLanguage("en")

.setOutputCol("response")

)

display(healthcare.transform(df))

将文本翻译成其他语言

Azure AI 翻译是一种基于云的机器翻译服务,是用于构建智能应用的 Azure AI 服务系列认知 API 的一部分。 “翻译”可以轻松地集成到应用程序、网站、工具和解决方案中。 通过它,您可以在 90 种语言和方言中添加多语言用户体验,并且可以用任何操作系统进行文本翻译。

下面的代码示例中,通过提供要翻译的句子和要翻译到的目标语言来执行简单文本翻译。

from pyspark.sql.functions import col, flatten

# Create a dataframe including sentences you want to translate

df = spark.createDataFrame(

[(["Hello, what is your name?", "Bye"],)],

[

"text",

],

)

# Run the Translator service with options

translate = (

Translate()

.setSubscriptionKey(translator_key)

.setLocation(translator_loc)

.setTextCol("text")

.setToLanguage(["zh-Hans"])

.setOutputCol("translation")

)

# Show the results of the translation.

display(

translate.transform(df)

.withColumn("translation", flatten(col("translation.translations")))

.withColumn("translation", col("translation.text"))

.select("translation")

)

将文档中的信息提取到结构化数据中

Azure AI 文档智能是 Azure AI 服务的一部分,可让用户使用机器学习技术构建自动化数据处理软件。 借助 Azure AI 文档智能,可以标识并提取文档中的文本、键/值对、选择标记、表和结构。 该服务会输出结构化数据,其中包含原始文件中的关系、边界框、置信度,等等。

下面的代码示例分析名片图像,并将上面的信息提取到结构化数据中。

from pyspark.sql.functions import col, explode

# Create a dataframe containing the source files

imageDf = spark.createDataFrame(

[

(

"https://mmlspark.blob.core.windows.net/datasets/FormRecognizer/business_card.jpg",

)

],

[

"source",

],

)

# Run the Form Recognizer service

analyzeBusinessCards = (

AnalyzeBusinessCards()

.setSubscriptionKey(service_key)

.setLocation(service_loc)

.setImageUrlCol("source")

.setOutputCol("businessCards")

)

# Show the results of recognition.

display(

analyzeBusinessCards.transform(imageDf)

.withColumn(

"documents", explode(col("businessCards.analyzeResult.documentResults.fields"))

)

.select("source", "documents")

)

分析和标记图像

计算机视觉会分析图像以识别结构,例如人脸、对象和自然语言说明。

以下代码示例分析图像并使用标签标记图像。 标记是对图像中可识别的对象、人物、风景和动作等事物的单个词说明。

# Create a dataframe with the image URLs

base_url = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-sample-data-files/master/ComputerVision/Images/"

df = spark.createDataFrame(

[

(base_url + "objects.jpg",),

(base_url + "dog.jpg",),

(base_url + "house.jpg",),

],

[

"image",

],

)

# Run the Computer Vision service. Analyze Image extracts information from/about the images.

analysis = (

AnalyzeImage()

.setLocation(service_loc)

.setSubscriptionKey(service_key)

.setVisualFeatures(

["Categories", "Color", "Description", "Faces", "Objects", "Tags"]

)

.setOutputCol("analysis_results")

.setImageUrlCol("image")

.setErrorCol("error")

)

# Show the results of what you wanted to pull out of the images.

display(analysis.transform(df).select("image", "analysis_results.description.tags"))

搜索与自然语言查询相关的图像

必应图像搜索在 Web 中搜索以检索与用户自然语言查询相关的图像。

下面的代码示例使用文本查询来查找带引号的图像。 代码的输出是包含与查询相关的照片的图像 URL 列表。

# Number of images Bing will return per query

imgsPerBatch = 10

# A list of offsets, used to page into the search results

offsets = [(i * imgsPerBatch,) for i in range(100)]

# Since web content is our data, we create a dataframe with options on that data: offsets

bingParameters = spark.createDataFrame(offsets, ["offset"])

# Run the Bing Image Search service with our text query

bingSearch = (

BingImageSearch()

.setSubscriptionKey(bing_search_key)

.setOffsetCol("offset")

.setQuery("Martin Luther King Jr. quotes")

.setCount(imgsPerBatch)

.setOutputCol("images")

)

# Transformer that extracts and flattens the richly structured output of Bing Image Search into a simple URL column

getUrls = BingImageSearch.getUrlTransformer("images", "url")

# This displays the full results returned, uncomment to use

# display(bingSearch.transform(bingParameters))

# Since we have two services, they are put into a pipeline

pipeline = PipelineModel(stages=[bingSearch, getUrls])

# Show the results of your search: image URLs

display(pipeline.transform(bingParameters))

将语音转换为文本

语音转文本服务将语音音频的流或文件转换为文本。 以下代码示例将一个音频文件转录为文本。

# Create a dataframe with our audio URLs, tied to the column called "url"

df = spark.createDataFrame(

[("https://mmlspark.blob.core.windows.net/datasets/Speech/audio2.wav",)], ["url"]

)

# Run the Speech-to-text service to translate the audio into text

speech_to_text = (

SpeechToTextSDK()

.setSubscriptionKey(service_key)

.setLocation(service_loc)

.setOutputCol("text")

.setAudioDataCol("url")

.setLanguage("en-US")

.setProfanity("Masked")

)

# Show the results of the translation

display(speech_to_text.transform(df).select("url", "text.DisplayText"))

将文本转换为语音

文本转语音服务允许用户从 119 种语言和变体的 270 多种神经语音中进行选择,以构建讲述自然语言的应用和服务。

下面的代码示例将文本转换为包含文本内容的音频文件。

from synapse.ml.cognitive import TextToSpeech

fs = ""

if running_on_databricks():

fs = "dbfs:"

elif running_on_synapse_internal():

fs = "Files"

# Create a dataframe with text and an output file location

df = spark.createDataFrame(

[

(

"Reading out loud is fun! Check out aka.ms/spark for more information",

fs + "/output.mp3",

)

],

["text", "output_file"],

)

tts = (

TextToSpeech()

.setSubscriptionKey(service_key)

.setTextCol("text")

.setLocation(service_loc)

.setVoiceName("en-US-JennyNeural")

.setOutputFileCol("output_file")

)

# Check to make sure there were no errors during audio creation

display(tts.transform(df))

检测时序数据中存在的异常

异常检测器对于检测时间序列数据中的不规则性非常有用。 下面的代码示例使用异常检测器服务来查找整个时序数据中存在的异常。

# Create a dataframe with the point data that Anomaly Detector requires

df = spark.createDataFrame(

[

("1972-01-01T00:00:00Z", 826.0),

("1972-02-01T00:00:00Z", 799.0),

("1972-03-01T00:00:00Z", 890.0),

("1972-04-01T00:00:00Z", 900.0),

("1972-05-01T00:00:00Z", 766.0),

("1972-06-01T00:00:00Z", 805.0),

("1972-07-01T00:00:00Z", 821.0),

("1972-08-01T00:00:00Z", 20000.0),

("1972-09-01T00:00:00Z", 883.0),

("1972-10-01T00:00:00Z", 898.0),

("1972-11-01T00:00:00Z", 957.0),

("1972-12-01T00:00:00Z", 924.0),

("1973-01-01T00:00:00Z", 881.0),

("1973-02-01T00:00:00Z", 837.0),

("1973-03-01T00:00:00Z", 9000.0),

],

["timestamp", "value"],

).withColumn("group", lit("series1"))

# Run the Anomaly Detector service to look for irregular data

anamoly_detector = (

SimpleDetectAnomalies()

.setSubscriptionKey(anomaly_key)

.setLocation(anomaly_loc)

.setTimestampCol("timestamp")

.setValueCol("value")

.setOutputCol("anomalies")

.setGroupbyCol("group")

.setGranularity("monthly")

)

# Show the full results of the analysis with the anomalies marked as "True"

display(

anamoly_detector.transform(df).select("timestamp", "value", "anomalies.isAnomaly")

)

从任意 Web API 获取信息

借助 Spark 上的 HTTP,可以在大数据管道中使用任何 Web 服务。 以下代码示例中,使用 World Bank API 获取有关全球各个国家/地区的信息。

# Use any requests from the python requests library

def world_bank_request(country):

return Request(

"GET", "http://api.worldbank.org/v2/country/{}?format=json".format(country)

)

# Create a dataframe with specifies which countries we want data on

df = spark.createDataFrame([("br",), ("usa",)], ["country"]).withColumn(

"request", http_udf(world_bank_request)(col("country"))

)

# Much faster for big data because of the concurrency :)

client = (

HTTPTransformer().setConcurrency(3).setInputCol("request").setOutputCol("response")

)

# Get the body of the response

def get_response_body(resp):

return resp.entity.content.decode()

# Show the details of the country data returned

display(

client.transform(df).select(

"country", udf(get_response_body)(col("response")).alias("response")

)

)