
Fine-tune models using managed compute (preview)

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

This article explains how to use managed compute to fine-tune a foundation model in the Azure AI Foundry portal. Fine-tuning involves adapting a pretrained model to a new, related task or domain. When you fine-tune with managed compute, the job runs on your own compute resources, and you can adjust training parameters such as learning rate, batch size, and number of training epochs to optimize the model's performance for a specific task.

Fine-tuning a pretrained model to use for a related task is more efficient than building a new model, as fine-tuning builds upon the pretrained model's existing knowledge and reduces the time and data needed for training.

To improve model performance, you might consider fine-tuning a foundation model with your training data. You can easily fine-tune foundation models by using the fine-tune settings in the Azure AI Foundry portal.

Prerequisites

An Azure subscription with a valid payment method. Free or trial Azure subscriptions won't work. If you don't have an Azure subscription, create a paid Azure account to begin.

Azure role-based access control (Azure RBAC) is used to grant access to operations in the Azure AI Foundry portal. To perform the steps in this article, your user account must be assigned the Owner or Contributor role for the Azure subscription. For more information on permissions, see Role-based access control in Azure AI Foundry portal.

Fine-tune a foundation model using managed compute

Sign in to Azure AI Foundry.

If you're not already in your project, select it.

Select Fine-tuning from the left navigation pane.

- Select Fine-tune model and add the model that you want to fine-tune. This article uses Phi-3-mini-4k-instruct for illustration.

- Select Next to see the available fine-tune options. Some foundation models support only the Managed compute option.

Alternatively, you could select Model catalog from the left sidebar of your project and find the model card of the foundation model that you want to fine-tune.

- Select Fine-tune on the model card to see the available fine-tune options. Some foundation models support only the Managed compute option.

Select Managed compute to use your own compute resources. This action opens a window for specifying the fine-tuning settings, starting on the "Basic settings" page.

Configure fine-tune settings

In this section, you go through the steps to configure fine-tuning for your model, using a managed compute.

Provide a name for the fine-tuned model on the "Basic settings" page, and select Next to go to the "Compute" page.

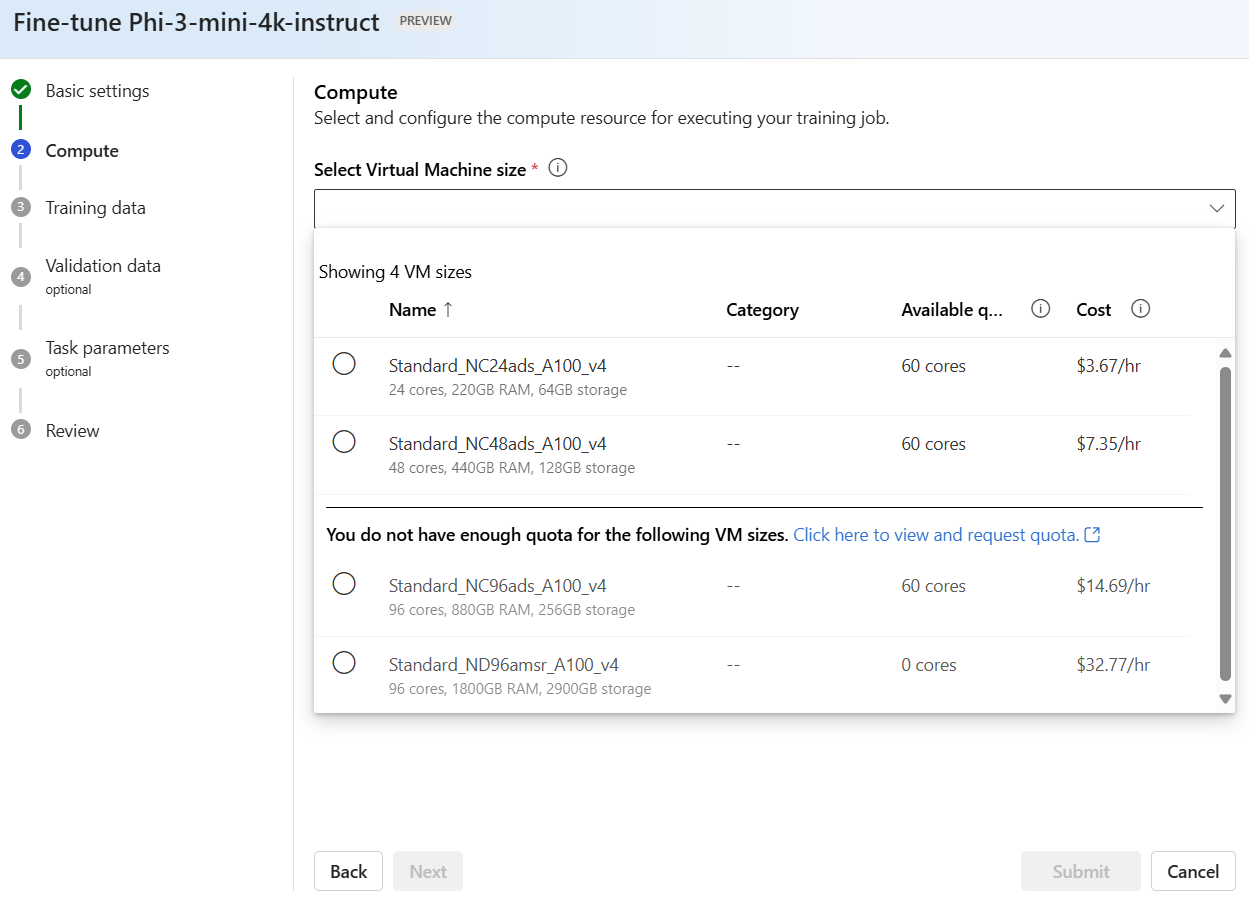

Select the Azure Machine Learning compute cluster to use for fine-tuning the model. Fine-tuning runs on GPU compute. Ensure that you have sufficient compute quota for the compute SKUs you plan to use.

Select Next to go to the "Training data" page. On this page, the "Task type" is preselected as Chat completion.

Provide the training data to use to fine-tune your model. You can choose to either upload a local file (in JSONL, CSV or TSV format) or select an existing registered dataset from your project.
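If you prepare a JSONL file locally, it can help to check it before uploading. The following is a minimal sketch, not the portal's own validation. It assumes that each line of a chat completion training file is a JSON object with a `messages` list of `role`/`content` pairs. This article doesn't specify that schema, so confirm the expected format for your model. The helper names `to_jsonl` and `check_jsonl` are hypothetical.

```python
import json

# Hypothetical example record. The "messages" schema (role/content pairs)
# is an assumption; this article does not specify the expected format.
examples = [
    {"messages": [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is fine-tuning?"},
        {"role": "assistant", "content": "Adapting a pretrained model to a new task."},
    ]},
]


def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"


def check_jsonl(text):
    """Return (line_number, problem) pairs for lines that fail the assumed schema."""
    problems = []
    for n, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((n, f"invalid JSON: {exc.msg}"))
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((n, "missing or empty 'messages' list"))
            continue
        for message in messages:
            if not isinstance(message, dict) or "role" not in message or "content" not in message:
                problems.append((n, "message lacks 'role' or 'content'"))
                break
    return problems
```

To use the sketch, write `to_jsonl(examples)` to a `.jsonl` file and upload the file only when `check_jsonl` returns an empty list for its contents.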

Select Next to go to the "Validation data" page. Keep the Automatic split of training data selection to have a portion of the training data held out automatically for validation. Alternatively, you could provide a different validation dataset by uploading a local file (in JSONL, CSV or TSV format) or selecting an existing registered dataset from your project.
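If you prefer to supply your own validation file, you can split your data locally before uploading. The following sketch illustrates one way to hold out a validation set. The 20% fraction, the fixed seed, and the function name `split_train_validation` are assumptions for illustration. This article doesn't state how the portal's automatic split is performed.

```python
import random


def split_train_validation(records, validation_fraction=0.2, seed=0):
    """Shuffle a copy of records and hold out a fraction for validation.

    Returns (train, validation). The default fraction and seed are
    illustrative assumptions, not values used by the portal.
    """
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    if len(records) < 2:
        raise ValueError("need at least two records to split")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]
```

Each record lands in exactly one of the two returned lists, so the validation examples are never seen during training.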

Select Next to go to the "Task parameters" page. Hyperparameters such as the number of epochs and the learning rate affect both the performance of the fine-tuned model and the compute resources that the job uses. You can keep the default settings or customize these parameters.
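To get a rough sense of how the epochs setting interacts with batch size, you can estimate the number of optimization steps a job performs. This is a back-of-the-envelope sketch that assumes one optimizer step per batch and no gradient accumulation. The function name `training_steps` and the example numbers are hypothetical, not values from the portal.

```python
import math


def training_steps(num_examples, batch_size, epochs):
    """Estimate total optimizer steps, assuming one step per batch.

    A final partial batch still counts as a step, hence the ceiling.
    """
    if num_examples <= 0 or batch_size <= 0 or epochs <= 0:
        raise ValueError("all arguments must be positive")
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs
```

For example, under these assumptions 1,000 training examples with a batch size of 8 and 3 epochs give 125 steps per epoch and 375 steps in total. Doubling the epochs doubles the step count, and roughly doubles the training time.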

Select Next to go to the "Review" page and check that all the settings look good.

Select Submit to submit your fine-tuning job. Once the job completes, you can view evaluation metrics for the fine-tuned model. You can then deploy this model to an endpoint for inferencing.