Microsoft SQL Server meets Redis: an elegant logging platform at your doorstep - Day 1

Microsoft officially announced Microsoft Azure support for Redis a little while ago. I was particularly excited about this since Redis is a very elegant software that allows multiple interesting recipes. In a nutshell, Redis is a key-value store optimized for fast modification and retrieval. It’s widely used in web sites as hot (and warm) cache, particulary since OAuth has become so common. You can read more about it and get it here https://redis.io/. If you have a spare linux machine you can play with it now. Or you can just as easily use the hassle-free Azure one. Here is how to do it: https://azure.microsoft.com/en-us/documentation/articles/cache-dotnet-how-to-use-azure-redis-cache/ .

While these key-value store features alone are worth using this software, I especially like an overlooked functionality: publish/subscribe. With redis you can implement the pub/sub pattern easily (if you don’t know what pub/sub is the Wikipedia explains it very well: https://en.wikipedia.org/wiki/Pub/sub).

All you have to do is to open a redis connection and start publishing messages in a topic. In a very redis way the topic doesn’t have to be create beforehand. You can even subscribe to a non-existent topic! This is very loosely coupled and gives you complete freedom.

Let’s try it: fire up a redis server somewhere:

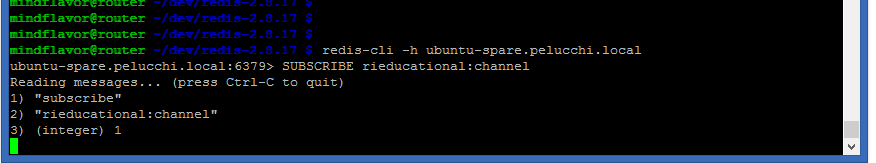

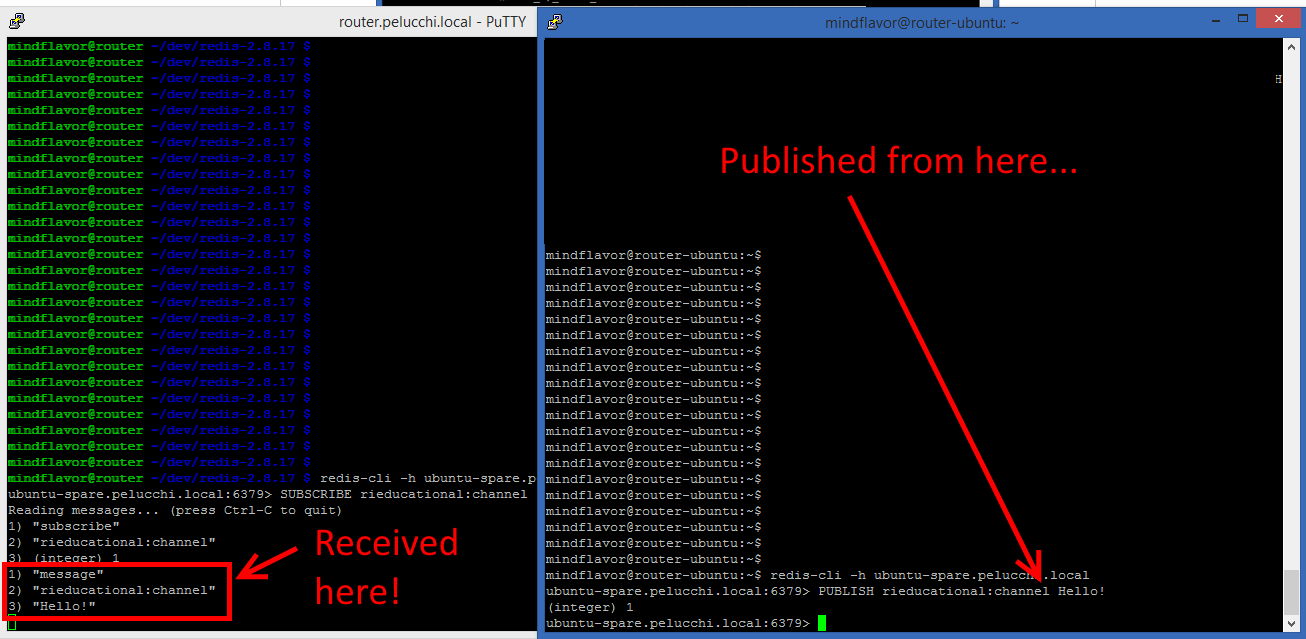

Now open a connection and subscribe to a topic with SUBSCRIBE <topic> command. For testing we can use the redis-cli application

Now if we open a new connection we can publish messages in our topic with PUBLISH <topic> <message> command:

Of course we can add subscribers at will.

With so many as 2 statements we have created a loosely coupled, efficient, multi-destination logging platform!

Now let’s get back to SQL Server for a moment. One of the most daunting challenges of TSQL developer is how to instrument the T-SQL code. SQL Server offers a lot of tools for batch/statement inspection such as SQL Server Profiler (deprecated) or, better yet, eXtended Events. eXtended Events have the capability to correlate different events with each other allowing you to better understand what’s going on. Still, those tools are an overkill most of the times. Also, the need to take care in advance on where to store the data. Moreover, DBA tend to dislike if you ask them to keep an ever-running eXtended Events session for you application :). You might have more luck with SQL Auditing – it supports the audit/audit specification “disconnected” approach that is similar to pub/sub but the purpose of SQL Auditing is for security reasons, not exactly what we are looking for. Also, SQL Audit tend to generate entries for indirect operations: while this is a requirement for security it would make our instrumentation a nightmare to follow.

As developer you might end up creating a log table and just inserting INSERT INTO Log… between the rows of your stored procedures. While easy this approach is simple – and doesn’t require you to mess with the DBA – is a very poor choice. What happens if the log table is locked? Or if the log table is full? Will you go for an heap table or not? And if you are in transaction, do you want to pay the transaction log price for your logging?

While we have SQL Server Service broker that would help a lot in this situation, it’s hard to implement and to maintain. Not to mention that it will use the same resources of your SQL Server instance. We don’t want our logging to slow down the application (it will, but we should make every effort to minimize this).

Your best bet would probably be to use the sp_trace_generateevent (https://msdn.microsoft.com/en-us/library/ms177548.aspx). It will be fast, customizable and will work even if no one is listening (no subscriber). You still have to create a trace to capture it, though, but it’s easy and you can tune it to capture only the events you want.

Now image how would be cool to replace the redis-cli tool as source for events with you SQL Server instance. You would be able to instrument your code without even thinking of where to store the logs. The pub/sub pattern will take care of this. All you have to do is to write EXEC SendToRedis <topic> <message> and be done. You want to use multiple topic? Just do it. Your redis server will be separated from your SQL Instance so you will not slow much your application. You can even find in the web a Node.js based implementation that will subscribe to your topic and publish them to HTML5 enabled browsers.

Sounds too good to be true? I will show you how to do it, just keep in mind that is just a proof of concept, if you want to use this code in production you should enhance it with safety features (ie error checking, transient error handling, etc…) and test it thoroughly. In my sample I’ve not used the nuget packages available on the web: the RESP protocol (REdis Serialization Protocol, see https://redis.io/topics/protocol) is easy and clean so I’ve opted to compose the TCP messages by hand.

If we want to publish a message to a redis server, all we have to do is to:

- Connect to the redis TCP port.

- Compose the PUBLISH message and send it.

- Read the server answer.

The PUBLISH message is a RESP array of bulk strings. Bulk strings are just strings – with whatever content – prefixed by a number specifying its length in bytes (that reminds me of good old Visual Basic strings for some reason :)). RESP require you to separate the statements with the carriage return + line feed combo (also known as \r\n). So each bulk string will be

$1400\r\n

This is your string that will be 1400 bytes long excluding the required line separator.\r\n

Array of bulk strings are just linear arrays prefixed by the number of elements in the array. So if you need to send an array of 3 bulk string you will create something like:

This can be achieved with two simple methods:

protected string SerializeArray(IEnumerable<RedisObject> rObjects)

{

StringBuilder sb = new StringBuilder();

int iCount = 0;

foreach (RedisObject ro in rObjects)

{

sb.Append(SerializeObject(ro));

iCount++;

}

string sCount = "*" + iCount + LINE_SEPARATOR;

string sArray = sb.ToString();

string sReturn = sCount + sArray;

return sReturn;

}

And:

protected string SerializeObject(RedisObject value)

{

StringBuilder sb = new StringBuilder();

string strToOutput = null;

if (value is RedisString)

{

strToOutput = value.ToString();

}

else if (value is RedisInt)

{

strToOutput = ":" + value.ToString();

}

else

{

throw new Exception("Unsupported type");

}

sb.Append("$" + StringLenInBytes(strToOutput) + LINE_SEPARATOR);

sb.Append(strToOutput.ToString() + LINE_SEPARATOR);

string s = sb.ToString();

return s;

}

Now that we have the C# methods required to connect and publish to redis all we have to do is to wrap them in a proper SQLCLR procedure:

[SqlProcedure]

public static void Publish(SqlString redisServerAddress, SqlInt32 redisServerPort, SqlString channel, SqlString message)

{

using (RedisConnection rc = GetRedisConnection(redisServerAddress.Value, redisServerPort.Value))

{

rc.Publish(channel.Value, message.Value);

}

}

The GetRedisConnection method is just a DNS resolver helper method:

private static RedisConnection GetRedisConnection(string address, int port)

{

RedisConnection redisConnection = null;

System.Net.IPAddress ip;

if (!System.Net.IPAddress.TryParse(address, out ip))

{

ip = System.Net.Dns.GetHostEntry(address).AddressList[0];

}

System.Net.IPEndPoint ie = new System.Net.IPEndPoint(ip, port);

redisConnection = new RedisConnection(ie);

redisConnection.Open();

return redisConnection;

}

The SQLCRL installation T-SQL can be just like this:

CREATE PROCEDURE Redis.Publish(@RedisServerAddress NVARCHAR(1024), @RedisServerPort INT, @Channel NVARCHAR(1024), @Message NVARCHAR(MAX))

AS EXTERNAL NAME [MindFlavor.RESP.SQLCLR].[MindFlavor.RESP.SQLCLR.Redis].Publish;

GO

That’s it! Now we can call the Redis.Publish stored procedure from within our T-SQL code and be done with it.

Just to give you a meaningful demo suppose we have a long running batch, such as batch that backups up all your databases. You might want to instrument the batch to be informed of its status. With redis is just simple as:

DECLARE pippo CURSOR FOR

SELECT name FROM sys.databases

OPEN pippo;

DECLARE @name NVARCHAR(255);

DECLARE @stmt NVARCHAR(4000);

DECLARE @txt NVARCHAR(MAX);

FETCH NEXT FROM pippo INTO @name;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @stmt = N'BACKUP DATABASE ' + QUOTENAME(@name) + N' TO DISK=''C:\temp\backup\' + @name + N'.bak'' WITH INIT, COMPRESSION';

SET @txt = N'Statement ' + @stmt + ' completed. ' + @@SERVERNAME + ', ' + USER_NAME() + ' (' + CONVERT(NVARCHAR, @@SPID) + ').';

EXEC [MindFlavor].[Redis].[Publish] N'ubuntu-spare.pelucchi.local', 6379, N'msbackup:sql', @txt;

EXEC sp_ExecuteSQL @stmt;

FETCH NEXT FROM pippo INTO @name;

END

CLOSE pippo;

DEALLOCATE pippo;

SET @txt = N'All backups completed!';

EXEC [MindFlavor].[Redis].[Publish] N'ubuntu-spare.pelucchi.local', 6379, N'msbackup:sql', @txt;

<

If we were to SUBSCRIBE to the msbackup:sql topic:

we would see something like:

Just as expected. From here you can proceed to subscribe with your tool of choice. There are some excellent samples around (for example https://github.com/ohadinho/chat-sample) on how to show entries in a dynamic web page but you can easily dump them in a file or, even better, instrument an alerting system.

Happy Coding,

Francesco Cogno

Comments

Anonymous

November 26, 2014

Very interesting! ThanksAnonymous

June 28, 2016

Hi,Thanks for this. Do you know if SQL Server tabular 2016 can replace the capabilities of Redis, ie in memory cache. Of course different technologies but if I can cache my data within SQL then I prefer this option..Thanks.Panos.Anonymous

September 27, 2016

What namespace/nuGet packages are required to achieve this, can I have code demo anywhere. I am trying to use Install-Package ServiceStack.Redis but not able to add Redis or dependent dll's in SQL catalog.- Anonymous

September 27, 2016

Hi Ezharul,I've published the code here: https://github.com/MindFlavor/MindFlavor.RESP/tree/master/MindFlavor.RESP (MIT license). It's a proof-of-concept but if you're interested you can pick up from there.Cheers,Francesco

- Anonymous