How to add custom Hive UDFs to HDInsight

I recently had a need to add a UDF to Hive on HDInsight. I thought that it would be good to share that experience on a blog post. Hive provides a library of built-in functions to achieve the most common needs. The cool thing is that it also provides the framework to create your own UDF. I had a recent need to extract a string from a column if the string matches a Java RegEx pattern. For those of us coming from a SQL Server background, we can extract a pattern from a string using a combination of the PATINDEX and SUBSTRING functions. The idea was to provide that same functionality with Hive.

When an HDInsight cluster is provisioned (created), you can pass in customizations to suit specific needs. Behind the scenes an HDInsight cluster is built on a series of virtual machine images. Those images sometimes move to a new host or are reimaged for various reasons –customizations passed in when you provision the cluster will survive this process and stay available in the HDInsight cluster. Therefore we recommend passing in custom JARs, such as additions to the Hive function library, at the time you provision your HDInsight cluster.

You can find details on setting up the system for using PowerShell with HDInsight here. Let me walk you through the process that I used to deploy the custom UDF on Azure HDInsight.

Compile your customized code into JAR

The first thing we need to do here is to encapsulate the logic for the functionality within a class. For this example, I had a piece of Java code that I could re-use and I decided to call my class – FindPattern. So, let us encapsulate those details here within a source file called FindPattern.java. I created a Java project and called it HiveUDF. I then created a package called HiveUDF and started adding my custom functionality there. So, one of the classes there is the FindPattern class.

In order to implement a Hive UDF, you would need to extend the class "UDF" available in org.apache.hadoop.hive.ql.exec.UDF. I am showing the code snippet here –

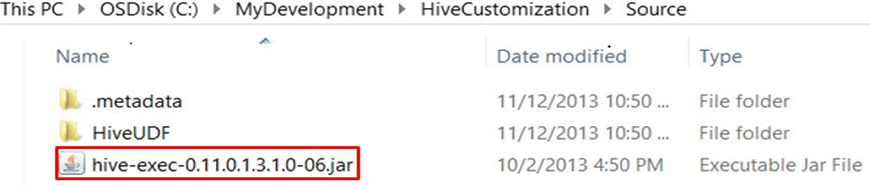

The overall layout of the project on Eclipse IDE is shown below – notice how I have referenced the jar that I downloaded from HDInsight. This will help with resolution of the package org.apache.hadoop.hive.ql.exec.UDF.

Notice how I have the classes separated by the encapsulated functionality within a package: HiveUDF. I have the JAR file - hive-exec-0.11.0.1.3.1.0-06.jar - downloaded from my HDInsight installation. This JAR file is available on the default container of the primary storage account of any existing Azure HDInsight cluster and can be reused for this project as long as the versions of the HDInsight clusters match. You can find a lot more information on provisioning the HDInsight cluster on my previous blog post.

I have taken the quick and easy approach of compiling this Java code with javac and packaging the class file with jar, as shown below. You can find a bit more detailed write up on this here.

Now, it is time for us to compile our code. I am using javac for the sake of this example –

Javac -cp <Path to your JARs separated by ;> -d <Output location> <Source file>

c:\MyDevelopment\HiveCustomization\Classes\target\classes> javac -cp "c:\MyDevelopment\HiveCustomization\Source\hive-exec-0.11.0.1.3.1.0-06.jar" -d "C:\MyDevelopment\HiveCustomization\Classes\target\classes" "c:\MyDevelopment\HiveCustomization\Source\HiveUDF\src\HiveUDF\FindPattern.java"

As a result of the above compilation, you will notice the FindPattern.class file generated within target\classes. Let us go ahead and package this into a JAR file, to upload into the HDInsight cluster!

Switch to: C:\MyDevelopment\HiveCustomization\Classes\target\classes and execute the following jar command -

Jar cvf HiveUDF.jar .

As you can see from the screenshot below, a manifest file and the class files are packaged into a jar file. There are two other class files that are part of my HiveUDF project that exist for other UDFs.

A Java Project can be used to add Java classes to encapsulate the different Hive UDF functionality and built like this and pre-provisioned into the cluster when the cluster is built. Once the rhythm of development is established, the build process, provisioning and creation of cluster can be automated with ease. A copy of HiveUDF.jar developed using the above steps is also attached to this post.

I pre-provisioned the Azure BLOB storage layout as described on my previous post and uploaded this custom JAR on the "myhivelibs" storage account as shown below – use the same location in the $HiveLibStorageAccount

and $HiveLibStorageContainer variables in PowerShell. You can use a tool like Visual Studio Server Explorer to explore Windows Azure Storage resources as described here

Now, it is time to create a customized HDInsight Cluster, adding this JAR to the Hive Configuration! I will demonstrate it with PowerShell – detailed reference here.

You can populate the parameters with your Subscription information and it will prompt for Credentials twice – the first time for creating the admin credentials for the HDInsight cluster that is being provisioned and the second time for the credentials for the MetaStore database. The PowerShell script assumes that the PrimaryStorageAccount, PrimaryStorageContainer, HiveLibStorageAccount, HiveLibStorageContainer, and HiveMetaStoreDB, indicated by respective variables on the PowerShell script below, have been pre-provisioned prior to execution of the script. The credentials for the MetaStore database will need to be the same as the one that is used to connect to the SQL Azure server. The authentication to SQL Azure server can also be independently validated through the Azure management portal.

The code snippet that I used to create the customized HDInsight Cluster is here -

If the script is successful, it will show the details of the cluster that it created for us – snippet below –

Name : MyHDICluster

HttpUserName : admin

HttpPassword : Mypass123!

Version : 2.1.3.0.432823

VersionStatus : Compatible

ConnectionUrl : https://MyHDICluster.azurehdinsight.net

State : Running

CreateDate : 1/14/2014 5:21:51 AM

UserName : admin

Location : West US

ClusterSizeInNodes : 4

DefaultStorageAccount : myprimarystorage.blob.core.windows.net

SubscriptionId : Your Subscription ID

StorageAccounts : {}

What we have done above is created a nice customized HDInsight cluster, which deploys our additional library – HiveUDF.jar - for us! This specific configuration change will survive any background node re-images and so it is important to keep the content on the HiveLibStorageAccount intact so that any re-images that happen in the background can still fetch the library files from this location. Now that we have the JAR deployed, we don't need to do any ADD JAR and can directly create a temporary function and start using our UDF!

With our pattern matching UDF, we can extract a given pattern from the message. Let us see an example where we would like to extract embedded phone numbers from messages – just for the sake of demonstration J For the sake of this demonstration, I am creating a Hive table called WebFeeds and loading it with some data as shown below.

Test Data:

1, "Call me at 123-1234567"

2, "Talk to you later"

3, "You have reached the voicemail for 111-2223344. Please leave a message"

4, "Have a good day"

You can create a file holding the above text data using any text editor like Notepad and save that file as MyUDFTestData.txt. Next using a storage explorer like Visual Studio Server Explorer, you can attach to the storage account "myprimarystorage" and upload this data file on the container "install" as "webfeeds/MyUDFTestData.txt"

Then, let us create a file called MyUDFQuery.hql and save the below Hive code in there and upload that to the storage account "myprimarystorage" on the container "install". The code for MyUDFQuery.hql can be found here -

I am just choosing to do a quick test here as you can see above. The Invoke-Hive cmdlet can take a HQL file as a parameter, but it needs to reside on the BLOB storage account. Notice how the HQL file that has been uploaded to a BLOB storage as shown above is referenced in the code snippet below -

If everything has been set correctly and if a phone pattern is embedded on the message, you will see the UserID and the extracted phone number from the message as the output now!

This is a simple example of how you can customize your HDInsight Hadoop cluster. When you add your own Hive UDFs you can simplify and standardize Hive queries. This is simple and easy to do when you provision your HDInsight cluster. Happy customizing!

@Dharshana (MSFT)

Thanks to Cindy Gross | @SQLCindy, Rick_H, Azim, Farooq and Jason for reviewing this!

Comments

- Anonymous

March 08, 2014

The comment has been removed - Anonymous

March 12, 2014

Thanks! Hive UDFs when used with Hive queries get submitted to the cluster as part of the MapReduce job for the Hive query. It is not necessary to code the UDF with a driver, mapper and reducer. UDFs generally work with one row at a time on the mapper phase. However, you can use distribute by, cluster by clauses to have the UDF evaluate in the reduce phase.Hope this helps!