Configure a distributed Always On availability group

Applies to:

SQL Server

To create a distributed availability group, you must create two availability groups each with its own listener. You then combine these availability groups into a distributed availability group. The following steps provide a basic example in Transact-SQL. This example doesn't cover all of the details of creating availability groups and listeners; instead, it focuses on highlighting the key requirements.

For a technical overview of distributed availability groups, see Distributed availability groups.

Prerequisites

To configure a distributed availability group, you must have the following:

- A supported version of SQL Server.

Note

If you configured the listener for your availability group on your SQL Server on Azure VM by using a distributed network name (DNN), then configuring a distributed availability group on top of your availability group isn't supported. To learn more, see SQL Server on Azure VM feature interoperability with AG and DNN listener.

Set the endpoint listeners to listen to all IP addresses

Make sure the endpoints can communicate between the different availability groups in the distributed availability group. If one availability group is set to a specific network on the endpoint, the distributed availability group doesn't work properly. On each server that hosts a replica in the distributed availability group, set the listener to listen on all IP addresses (LISTENER_IP = ALL).

Create an endpoint to listen to all IP addresses

For example, the following script creates a listener endpoint on TCP port 5022 that listens on all IP addresses.

CREATE ENDPOINT [aodns-hadr]

STATE = STARTED

AS TCP

(

LISTENER_PORT = 5022,

LISTENER_IP = ALL

)

FOR DATABASE_MIRRORING

(

ROLE = ALL,

AUTHENTICATION = WINDOWS NEGOTIATE,

ENCRYPTION = REQUIRED ALGORITHM AES

);

GO

Alter an endpoint to listen to all IP addresses

For example, the following script changes a listener endpoint to listen on all IP addresses.

ALTER ENDPOINT [aodns-hadr]

AS TCP

(

LISTENER_IP = ALL

);

GO

Create first availability group

Create the primary availability group on the first cluster

Create an availability group on the first Windows Server Failover Cluster (WSFC). In this example, the availability group is named ag1 for the database db1. The primary replica of the primary availability group is known as the global primary in a distributed availability group. Server1 is the global primary in this example.

CREATE AVAILABILITY GROUP [ag1]

FOR DATABASE db1

REPLICA ON N'server1' WITH (ENDPOINT_URL = N'TCP://server1.contoso.com:5022',

FAILOVER_MODE = AUTOMATIC,

AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,

BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO),

SEEDING_MODE = AUTOMATIC),

N'server2' WITH (ENDPOINT_URL = N'TCP://server2.contoso.com:5022',

FAILOVER_MODE = AUTOMATIC,

AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,

BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO),

SEEDING_MODE = AUTOMATIC);

GO

Note

The preceding example uses automatic seeding, where SEEDING_MODE is set to AUTOMATIC for both the replicas and the distributed availability group. This configuration sets the secondary replicas and secondary availability group to be automatically populated without requiring a manual backup and restore of primary database.

Join the secondary replicas to the primary availability group

Any secondary replicas must be joined to the availability group with ALTER AVAILABILITY GROUP with the JOIN option. Because automatic seeding is used in this example, you must also call ALTER AVAILABILITY GROUP with the GRANT CREATE ANY DATABASE option. This setting allows the availability group to create the database and begin seeding it automatically from the primary replica.

In this example, the following commands are run on the secondary replica, server2, to join the ag1 availability group. The availability group is then permitted to create databases on the secondary.

ALTER AVAILABILITY GROUP [ag1] JOIN

ALTER AVAILABILITY GROUP [ag1] GRANT CREATE ANY DATABASE

GO

Note

When the availability group creates a database on a secondary replica, it sets the database owner as the account that ran the ALTER AVAILABILITY GROUP statement to grant permission to create any database. For complete information, see Grant create database permission on secondary replica to availability group.

Create a listener for the primary availability group

Next add a listener for the primary availability group on the first WSFC. In this example, the listener is named ag1-listener. For detailed instructions on creating a listener, see Create or Configure an Availability Group Listener (SQL Server).

ALTER AVAILABILITY GROUP [ag1]

ADD LISTENER 'ag1-listener' (

WITH IP ( ('2001:db88:f0:f00f::cf3c'),('2001:4898:e0:f213::4ce2') ) ,

PORT = 60173);

GO

Create second availability group

Then on the second WSFC, create a second availability group, ag2. In this case, the database isn't specified, because it's automatically seeded from the primary availability group. The primary replica of the secondary availability group is known as the forwarder in a distributed availability group. In this example, server3 is the forwarder.

CREATE AVAILABILITY GROUP [ag2]

FOR

REPLICA ON N'server3' WITH (ENDPOINT_URL = N'TCP://server3.contoso.com:5022',

FAILOVER_MODE = MANUAL,

AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,

BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO),

SEEDING_MODE = AUTOMATIC),

N'server4' WITH (ENDPOINT_URL = N'TCP://server4.contoso.com:5022',

FAILOVER_MODE = MANUAL,

AVAILABILITY_MODE = SYNCHRONOUS_COMMIT,

BACKUP_PRIORITY = 50,

SECONDARY_ROLE(ALLOW_CONNECTIONS = NO),

SEEDING_MODE = AUTOMATIC);

GO

Note

The secondary availability group must use the same database mirroring endpoint (in this example port 5022). Otherwise, replication will stop after a local failover.

Join the secondary replicas to the secondary availability group

In this example, the following commands are run on the secondary replica, server4, to join the ag2 availability group. The availability group is then permitted to create databases on the secondary to support automatic seeding.

ALTER AVAILABILITY GROUP [ag2] JOIN

ALTER AVAILABILITY GROUP [ag2] GRANT CREATE ANY DATABASE

GO

Create a listener for the secondary availability group

Next add a listener for the secondary availability group on the second WSFC. In this example, the listener is named ag2-listener. For detailed instructions on creating a listener, see Create or Configure an Availability Group Listener (SQL Server).

ALTER AVAILABILITY GROUP [ag2]

ADD LISTENER 'ag2-listener' ( WITH IP ( ('2001:db88:f0:f00f::cf3c'),('2001:4898:e0:f213::4ce2') ) , PORT = 60173);

GO

Create distributed availability group on first cluster

On the first WSFC, create a distributed availability group (named distributedAG in this example). Use the CREATE AVAILABILITY GROUP command with the DISTRIBUTED option. The AVAILABILITY GROUP ON parameter specifies the member availability groups ag1 and ag2.

To create your distributed availability group using automatic seeding, use the following Transact-SQL code:

CREATE AVAILABILITY GROUP [distributedAG]

WITH (DISTRIBUTED)

AVAILABILITY GROUP ON

'ag1' WITH

(

LISTENER_URL = 'tcp://ag1-listener.contoso.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

),

'ag2' WITH

(

LISTENER_URL = 'tcp://ag2-listener.contoso.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

);

GO

Note

The LISTENER_URL specifies the listener for each availability group along with the database mirroring endpoint of the availability group. In this example, that is port 5022 (not port 60173 used to create the listener). If you're using a load balancer, for instance in Azure, add a load balancing rule for the distributed availability group port. Add the rule for the listener port, in addition to the SQL Server instance port.

Cancel automatic seeding to forwarder

If, for whatever reason, it becomes necessary to cancel the initialization of the forwarder before the two availability groups are synchronized, ALTER the distributed availability group by setting the forwarder's SEEDING_MODE parameter to MANUAL and immediately cancel the seeding. Run the command on the global primary:

-- Cancel automatic seeding. Connect to global primary but specify DAG AG2

ALTER AVAILABILITY GROUP [distributedAG]

MODIFY

AVAILABILITY GROUP ON

'ag2' WITH

( SEEDING_MODE = MANUAL );

Join distributed availability group on second cluster

Then join the distributed availability group on the second WSFC.

To join your distributed availability group using automatic seeding, use the following Transact-SQL code:

ALTER AVAILABILITY GROUP [distributedAG]

JOIN

AVAILABILITY GROUP ON

'ag1' WITH

(

LISTENER_URL = 'tcp://ag1-listener.contoso.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

),

'ag2' WITH

(

LISTENER_URL = 'tcp://ag2-listener.contoso.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

);

GO

Join the database on the secondary of the second availability group

If the second availability group was set up to use automatic seeding, then move to step 2.

If the second availability group is using manual seeding, then restore the backup you took on the global primary to the secondary of the second availability group:

RESTORE DATABASE [db1] FROM DISK = '<full backup location>' WITH NORECOVERY; RESTORE LOG [db1] FROM DISK = '<log backup location>' WITH NORECOVERY;After the database on the secondary replica of the second availability group is in a restoring state, you have to manually join it to the availability group.

ALTER DATABASE [db1] SET HADR AVAILABILITY GROUP = [ag2];

Fail over a distributed availability group

Since SQL Server 2022 (16.x) introduced distributed availability group support for the REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT setting, instructions to fail over a distributed availability are different for SQL Server 2022 and later versions than for SQL Server 2019 and earlier versions.

For a distributed availability group, the only supported failover type is a manual user-initiated FORCE_FAILOVER_ALLOW_DATA_LOSS. Therefore, to prevent data loss, you must take extra steps (described in detail in this section) to ensure data is synchronized between the two replicas before initiating the failover.

In the event of an emergency where data loss is acceptable, you can initiate a failover without ensuring data synchronization by running:

ALTER AVAILABILITY GROUP distributedAG FORCE_FAILOVER_ALLOW_DATA_LOSS;

You can use the same command to fail over to the forwarder, as well as fail back to the global primary.

On SQL Server 2022 (16.x) and later you can configure the REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT setting for a distributed availability group, which is designed to guarantee no data loss when a distributed availability group fails over. If this setting is configured, follow the steps in this section to fail over your distributed availability group. If you don't want to use the REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT setting, then follow the instructions to fail over a distributed availability group in SQL Server 2019 and earlier.

Note

Setting REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT to 1 means the primary replica waits for transactions to commit on the secondary replica before they're committed on the primary replica, which can degrade performance. While limiting or stopping transactions on the global primary isn't required for the distributed availability group to synchronize in SQL Server 2022 (16.x), doing so can improve performance for both user transactions and distributed availability group synchronization with REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT set to 1.

Steps to ensure there's no data loss

To ensure there's no data loss, you must first configure the distributed availability group to support no data loss by following these steps:

- To prepare for failover, verify the global primary and global forwarder are in

SYNCHRONOUS_COMMITmode. If not, set them toSYNCHRONOUS_COMMITthrough ALTER AVAILABILITY GROUP. - Set the distributed availability group to synchronous commit on both the global primary and the forwarder.

- Wait until the distributed availability group is synchronized.

- On the global primary, set the distributed availability group

REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMITsetting to 1 by using ALTER AVAILABILITY GROUP. - Verify all replicas in the local AGs and distributed availability group are healthy and the distributed availability group is SYNCHRONIZED.

- On the global primary replica, set the distributed availability group role to

SECONDARY, which makes the distributed availability group unavailable. - On the forwarder (the intended new primary), fail over the distributed availability group by using ALTER AVAILABILITY GROUP with

FORCE_FAILOVER_ALLOW_DATA_LOSS. - On the new secondary (the previous global primary replica), set the distributed availability group

REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMITto 0. - Optional: If the availability groups are across a geographical distance that causes latency, change the availability mode to

ASYNCHRONOUS_COMMIT. This reverts the change from the first step, if necessary.

T-SQL example

This section provides the steps in a detailed example to fail over the distributed availability group named distributedAG by using Transact-SQL. The example environment has a total of 4 nodes for the distributed availability group. The global primary N1 and N2 host availability group ag1 while the global forwarder N3 and N4 host availability group ag2. The distributed availability group distributedAG pushes changes from ag1 to ag2.

Query to verify

SYNCHRONOUS_COMMITon the primaries of the local availability groups that form the distributed availability group. Run the following T-SQL directly on the global forwarder and global primary:SELECT DISTINCT ag.name AS [Availability Group], ar.replica_server_name AS [Replica], ar.availability_mode_desc AS [Availability Mode] FROM sys.availability_replicas AS ar INNER JOIN sys.availability_groups AS ag ON ar.group_id = ag.group_id INNER JOIN sys.dm_hadr_database_replica_states AS rs ON ar.group_id = rs.group_id AND ar.replica_id = rs.replica_id WHERE ag.name IN ('ag1', 'ag2') AND rs.is_primary_replica = 1 ORDER BY [Availability Group]; --if needed, to set a given replica to SYNCHRONOUS for node N1, default instance. If named, change from N1 to something like N1\SQL22 ALTER AVAILABILITY GROUP [testag] MODIFY REPLICA ON N'N1\SQL22' WITH (AVAILABILITY_MODE = SYNCHRONOUS_COMMIT);Set the distributed availability group to synchronous commit by running the following code on both the global primary and the forwarder:

-- sets the distributed availability group to synchronous commit ALTER AVAILABILITY GROUP [distributedAG] MODIFY AVAILABILITY GROUP ON 'ag1' WITH (AVAILABILITY_MODE = SYNCHRONOUS_COMMIT), 'ag2' WITH (AVAILABILITY_MODE = SYNCHRONOUS_COMMIT);Note

In a distributed availability group, the synchronization status between the two availability groups depends on the availability mode of both replicas. For synchronous commit mode, both the current primary availability group and the current secondary availability group must have

SYNCHRONOUS_COMMITavailability mode. For this reason, you must run this script on both the global primary replica and the forwarder.Wait until the status of the distributed availability group changes to

SYNCHRONIZED. Run the following query on the global primary:-- Run this query on the Global Primary and the forwarder -- Check the results to see if synchronization_state_desc is SYNCHRONIZED SELECT ag.name, drs.database_id AS [Availability Group], db_name(drs.database_id) AS database_name, drs.synchronization_state_desc, drs.last_hardened_lsn FROM sys.dm_hadr_database_replica_states AS drs INNER JOIN sys.availability_groups AS ag ON drs.group_id = ag.group_id WHERE ag.name = 'distributedAG' ORDER BY [Availability Group];Proceed after the availability group synchronization_state_desc is

SYNCHRONIZED.For SQL Server 2022 (16.x) and later, on the global primary, set

REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMITto 1 by using the following T-SQL:ALTER AVAILABILITY GROUP distributedAG SET (REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT = 1);Verify your availability groups are healthy on all replicas by querying the global primary and the forwarder:

SELECT ag.name AS [AG Name], db_name(drs.database_id) AS database_name, ar.replica_server_name AS [replica], drs.synchronization_state_desc, drs.last_hardened_lsn FROM sys.dm_hadr_database_replica_states AS drs INNER JOIN sys.availability_groups AS ag ON drs.group_id = ag.group_id INNER JOIN sys.availability_replicas AS ar ON drs.replica_id = ar.replica_id AND drs.replica_id = ar.replica_id WHERE ag.name IN ('ag1', 'ag2', 'distributedAG');On the global primary, set the distributed availability group role to

SECONDARY. At this point, the distributed availability group is not available. After this step completes, you can't fail back until the rest of the steps are performed.ALTER AVAILABILITY GROUP distributedAG SET (ROLE = SECONDARY);Fail over from the global primary by running the following query on the forwarder to transition the availability groups and bring the distributed availability group back online:

-- Run the following command on the forwarder, the SQL Server instance that hosts the primary replica of the secondary availability group. ALTER AVAILABILITY GROUP distributedAG FORCE_FAILOVER_ALLOW_DATA_LOSS;After this step:

- The global primary transitions from

N1toN3. - The global forwarder transitions from

N3toN1. - The distributed availability group is available.

- The global primary transitions from

On the new forwarder (previous global primary,

N1), clear the distributed availability group propertyREQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMITby setting it to 0:ALTER AVAILABILITY GROUP distributedAG SET (REQUIRED_SYNCHRONIZED_SECONDARIES_TO_COMMIT = 0);OPTIONAL: If the availability groups are across a geographical distance that causes latency, consider changing the availability mode back to

ASYNCHRONOUS_COMMITon both the global primary and the forwarder. This reverts the change made in the first step, if it's needed.-- If applicable: sets the distributed availability group to asynchronous commit: ALTER AVAILABILITY GROUP distributedAG MODIFY AVAILABILITY GROUP ON 'ag1' WITH (AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT), 'ag2' WITH (AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT);

Remove a distributed availability group

The following Transact-SQL statement removes a distributed availability group named distributedAG:

DROP AVAILABILITY GROUP distributedAG;

Create distributed availability group on failover cluster instances

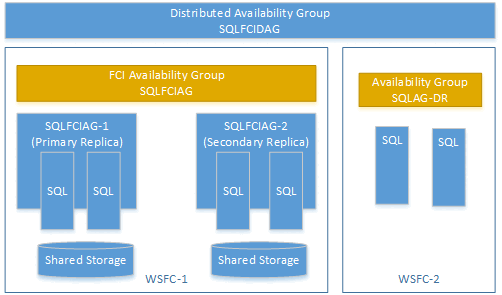

You can create a distributed availability group using an availability group on a failover cluster instance (FCI). In this case, you don't need an availability group listener. Use the virtual network name (VNN) for the primary replica of the FCI instance. The following example shows a distributed availability group called SQLFCIDAG. One availability group is SQLFCIAG. SQLFCIAG has two FCI replicas. The VNN for the primary FCI replica is SQLFCIAG-1, and the VNN for the secondary FCI replica is SQLFCIAG-2. The distributed availability group also includes SQLAG-DR, for disaster recovery.

The following DDL creates this distributed availability group:

CREATE AVAILABILITY GROUP [SQLFCIDAG]

WITH (DISTRIBUTED)

AVAILABILITY GROUP ON

'SQLFCIAG' WITH

(

LISTENER_URL = 'tcp://SQLFCIAG-1.contoso.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

),

'SQLAG-DR' WITH

(

LISTENER_URL = 'tcp://SQLAG-DR.contoso.com:5022',

AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT,

FAILOVER_MODE = MANUAL,

SEEDING_MODE = AUTOMATIC

);

The listener URL is the VNN of the primary FCI instance.

Manually fail over FCI in distributed availability group

To manually fail over the FCI availability group, update the distributed availability group to reflect the change of listener URL. For example, run the following DDL on both the global primary of the distributed AG and the forwarder of the distributed AG of SQLFCIDAG:

ALTER AVAILABILITY GROUP [SQLFCIDAG]

MODIFY AVAILABILITY GROUP ON

'SQLFCIAG' WITH

(

LISTENER_URL = 'tcp://SQLFCIAG-2.contoso.com:5022'

)