Set up your own infrastructure for Standard logic apps using hybrid deployment (Preview)

Applies to: Azure Logic Apps (Standard)

Note

This capability is in preview, incurs charges for usage, and is subject to the Supplemental Terms of Use for Microsoft Azure Previews.

Sometimes you have to set up and manage your own infrastructure to meet specific needs for regulatory compliance, data privacy, or network restrictions. Azure Logic Apps offers a hybrid deployment model so that you can deploy and host Standard logic app workflows in on-premises, private cloud, or public cloud scenarios. This model gives you the capabilities to host integration solutions in partially connected environments when you need to use local processing, data storage, and network access. With the hybrid option, you have the freedom and flexibility to choose the best environment for your workflows.

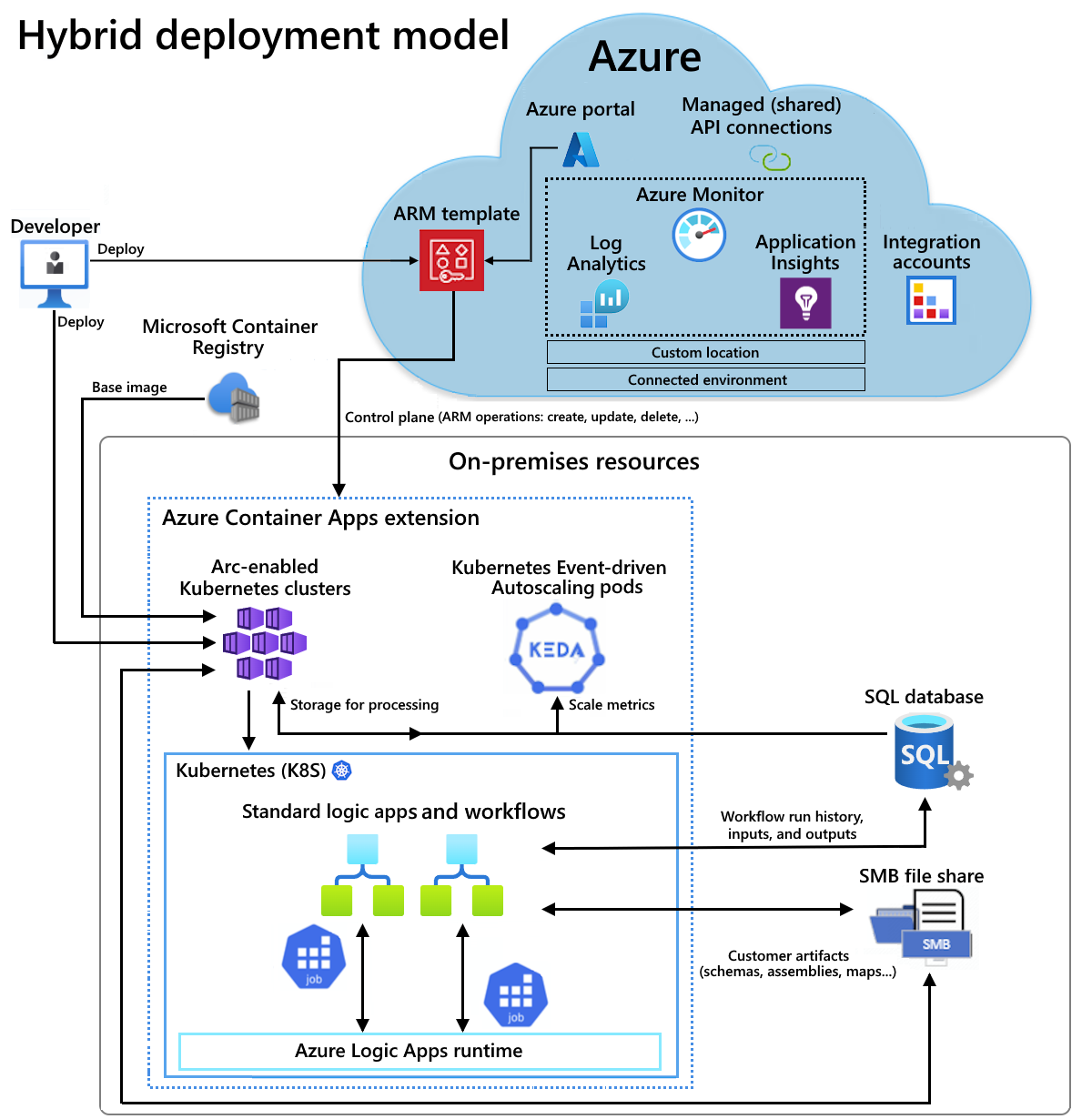

How hybrid deployment works

Standard logic app workflows with the hybrid deployment option are powered by an Azure Logic Apps runtime that is hosted in an Azure Container Apps extension. In your workflow, any built-in operations run locally with the runtime so that you get higher throughput for access to local data sources. If you need access to non-local data resources, for example, cloud-based services such as Microsoft Office 365, Microsoft Teams, Salesforce, GitHub, LinkedIn, or ServiceNow, you can choose operations from 1,000+ connectors hosted in Azure to include in your workflows. For more information, see Managed (shared) connectors. Although you need to have internet connectivity to manage your logic app in the Azure portal, the semi-connected nature of this platform lets you absorb any temporary internet connectivity issues.

For example, if you have an on-premises scenario, the following architectural overview shows where Standard logic app workflows are hosted and run in the hybrid model. The partially connected environment includes the following resources for hosting and working with your Standard logic apps, which deploy as Azure Container Apps resources:

- Azure Arc-enabled Azure Kubernetes Service (AKS) clusters

- A SQL database to locally store workflow run history, inputs, and outputs for processing

- A Server Message Block (SMB) file share to locally store artifacts used by your workflows

For hosting, you can also set up and use Azure Arc-enabled Kubernetes clusters on Azure Stack hyperconverged infrastructure (HCI) or Azure Arc-enabled Kubernetes clusters on Windows Server.

For more information, see the following documentation:

- What is Azure Kubernetes Service?

- Core concepts for Azure Kubernetes Service (AKS)

- Custom locations for Azure Arc-enabled Kubernetes clusters

- What is Azure Container Apps?

- Azure Container Apps on Azure Arc

This how-to guide shows how to set up the necessary on-premises resources in your infrastructure so that you can create, deploy, and host a Standard logic app workflow using the hybrid deployment model.

How billing works

With the hybrid option, you're responsible for the following items:

- Your Azure Arc-enabled Kubernetes infrastructure

- Your SQL Server license

- A billing charge of $0.18 USD per vCPU/hour to support Standard logic app workloads

In this billing model, you pay only for what you need and scale resources for dynamic workloads without having to buy for peak usage. For workflows that use Azure-hosted connector operations, such as Microsoft Teams or Microsoft Office 365, existing Standard (single-tenant) pricing applies to these operation executions.
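As a rough illustration of the vCPU/hour charge, the following sketch multiplies a vCPU count, a number of hours, and the $0.18 rate quoted in the list above. The function name and the sample figures (4 vCPUs for 730 hours) are hypothetical. The estimate leaves out your Kubernetes infrastructure, your SQL Server license, and any Azure-hosted connector executions.

```shell
# Estimate the hybrid vCPU/hour charge: vCPUs x hours x rate.
# The rate comes from the billing list in this guide; the function name
# and the sample numbers are illustrative only.
RATE_USD_PER_VCPU_HOUR="0.18"

estimate_hybrid_charge() {
  # $1 = number of vCPUs, $2 = number of hours
  awk -v v="$1" -v h="$2" -v r="$RATE_USD_PER_VCPU_HOUR" \
    'BEGIN { printf "%.2f\n", v * h * r }'
}

estimate_hybrid_charge 4 730   # prints 525.60
```

The calculation uses awk because POSIX shell arithmetic handles only integers.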

Limitations

Hybrid deployment is currently available and supported only for the following Azure Arc-enabled Kubernetes clusters:

- Azure Arc-enabled Kubernetes clusters

- Azure Arc-enabled Kubernetes clusters on Azure Stack HCI

- Azure Arc-enabled Kubernetes clusters on Windows Server

Prerequisites

- An Azure account and subscription. If you don't have a subscription, sign up for a free Azure account.

- Basic understanding of core AKS concepts

- Technical requirements for Azure Container Apps on Azure Arc-enabled Kubernetes, including access to a public or private container registry, such as the Azure Container Registry.

Create a Kubernetes cluster

Before you can deploy your Standard logic app as an on-premises resource to an Azure Arc-enabled Kubernetes cluster in an Azure Container Apps connected environment, you first need a Kubernetes cluster. You'll later connect this cluster to Azure Arc so that you have an Azure Arc-enabled Kubernetes cluster.

Your Kubernetes cluster requires inbound and outbound connectivity with the SQL database that you later create as the storage provider and with the Server Message Block file share that you later create for artifacts storage. These resources must exist within the same network.

Note

You can also create a Kubernetes cluster on Azure Stack HCI infrastructure or a Kubernetes cluster on Windows Server, and then apply the steps in this guide to connect your cluster to Azure Arc and set up your connected environment.

Set the following environment variables for the Kubernetes cluster that you want to create:

SUBSCRIPTION="<Azure-subscription-ID>"
AKS_CLUSTER_GROUP_NAME="<aks-cluster-resource-group-name>"
AKS_NAME="<aks-cluster-name>"
LOCATION="eastus"

| Parameter | Required | Value | Description |
|---|---|---|---|
| SUBSCRIPTION | Yes | <Azure-subscription-ID> | The ID for your Azure subscription |
| AKS_CLUSTER_GROUP_NAME | Yes | <aks-cluster-resource-group-name> | The name for the Azure resource group to use with your Kubernetes cluster. This name must be unique across regions and can contain only letters, numbers, hyphens (-), underscores (_), parentheses (()), and periods (.). This example uses Hybrid-RG. |
| AKS_NAME | Yes | <aks-cluster-name> | The name for your Kubernetes cluster. |
| LOCATION | Yes | <Azure-region> | An Azure region that supports Azure container apps on Azure Arc-enabled Kubernetes. This example uses eastus. |

Run the following commands either by using the Bash environment in Azure Cloud Shell or locally using Azure CLI installed on your computer:

Note

Make sure to change the max-count and min-count node values based on your load requirements.

az login
az account set --subscription $SUBSCRIPTION
az provider register --namespace Microsoft.KubernetesConfiguration --wait
az extension add --name k8s-extension --upgrade --yes
az group create --name $AKS_CLUSTER_GROUP_NAME --location $LOCATION
az aks create \
  --resource-group $AKS_CLUSTER_GROUP_NAME \
  --name $AKS_NAME \
  --enable-aad \
  --generate-ssh-keys \
  --enable-cluster-autoscaler \
  --max-count 6 \
  --min-count 1

| Parameter | Required | Value | Description |
|---|---|---|---|
| max-count | No | <max-nodes-value> | The maximum number of nodes to use for the autoscaler when you include the enable-cluster-autoscaler option. This value ranges from 1 to 1000. |
| min-count | No | <min-nodes-value> | The minimum number of nodes to use for the autoscaler when you include the enable-cluster-autoscaler option. This value ranges from 1 to 1000. |
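Before you change the autoscaler node counts, it can help to check them against the documented range. The following sketch checks that both values are whole numbers from 1 to 1000. The function name is hypothetical, and the check that the minimum doesn't exceed the maximum is an added sanity check, not a rule stated in this guide.

```shell
# Check autoscaler bounds before passing them to az aks create.
# Range rule (1 to 1000) comes from the parameter table in this guide.
# The min <= max comparison is an assumption added for safety.
valid_autoscaler_bounds() {
  # $1 = min-count, $2 = max-count
  for value in "$1" "$2"; do
    case "$value" in
      ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
    esac
    [ "$value" -ge 1 ] && [ "$value" -le 1000 ] || return 1
  done
  [ "$1" -le "$2" ]
}

# Usage:
# valid_autoscaler_bounds 1 6 && echo "bounds look valid"
```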

Connect Kubernetes cluster to Azure Arc

To create your Azure Arc-enabled Kubernetes cluster, connect your Kubernetes cluster to Azure Arc.

Note

The steps from this section through creating your connected environment are also available in a script named EnvironmentSetup.ps1 in the GitHub repo named Azure/logicapps. You can modify and use this script to meet your requirements and scenarios.

The script is unsigned, so before you run the script, run the following Azure PowerShell command as an administrator to set the execution policy:

Set-ExecutionPolicy -ExecutionPolicy Unrestricted

For more information, see Set-ExecutionPolicy.

Install the following Azure CLI extensions:

az extension add --name connectedk8s --upgrade --yes
az extension add --name k8s-extension --upgrade --yes
az extension add --name customlocation --upgrade --yes
az extension add --name containerapp --upgrade --yes

Register the following required namespaces:

az provider register --namespace Microsoft.ExtendedLocation --wait
az provider register --namespace Microsoft.KubernetesConfiguration --wait
az provider register --namespace Microsoft.App --wait
az provider register --namespace Microsoft.OperationalInsights --wait

Install the Kubernetes command line interface (CLI) named kubectl:

Set-ExecutionPolicy Bypass -Scope Process -Force; [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))
choco install kubernetes-cli -y

Install the Kubernetes package manager named Helm:

choco install kubernetes-helm

Install the SMB driver using the following Helm commands:

Add the specified chart repository, get the latest information for available charts, and install the specified chart archive.

helm repo add csi-driver-smb https://raw.githubusercontent.com/kubernetes-csi/csi-driver-smb/master/charts
helm repo update
helm install csi-driver-smb csi-driver-smb/csi-driver-smb --namespace kube-system --version v1.15.0

Confirm that the SMB driver is installed by running the following kubectl command, which should list smb.csi.k8s.io:

kubectl get csidriver

For more information, see kubectl get.
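To script this confirmation rather than read the output by eye, you could pipe the command output through a small filter. The following sketch assumes that the driver name appears in the first column of the output. The function name is hypothetical.

```shell
# Return success if the CSI driver list on stdin contains smb.csi.k8s.io.
# Compares the first whitespace-separated column of each line exactly,
# so similarly named drivers don't produce a false match.
has_smb_csi_driver() {
  awk '$1 == "smb.csi.k8s.io" { found = 1 } END { exit !found }'
}

# Usage (requires kubectl and cluster access, so it stays commented out):
# kubectl get csidriver | has_smb_csi_driver && echo "SMB driver installed"
```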

Connect your Kubernetes cluster to Azure Arc

Test your connection to your cluster by getting the kubeconfig file:

az aks get-credentials \
  --resource-group $AKS_CLUSTER_GROUP_NAME \
  --name $AKS_NAME \
  --admin
kubectl get ns

By default, the kubeconfig file is saved to the path ~/.kube/config. This command applies to our example Kubernetes cluster and differs for other kinds of Kubernetes clusters.

Based on your Kubernetes cluster deployment, set the following environment variable to provide a name to use for the Azure resource group that contains your Azure Arc-enabled cluster and resources:

GROUP_NAME="<Azure-Arc-cluster-resource-group-name>"

| Parameter | Required | Value | Description |
|---|---|---|---|
| GROUP_NAME | Yes | <Azure-Arc-cluster-resource-group-name> | The name for the Azure resource group to use with your Azure Arc-enabled cluster and other resources, such as your Azure Container Apps extension, custom location, and Azure Container Apps connected environment. This name must be unique across regions and can contain only letters, numbers, hyphens (-), underscores (_), parentheses (()), and periods (.). This example uses Hybrid-Arc-RG. |

Create the Azure resource group for your Azure Arc-enabled cluster and resources:

az group create \
  --name $GROUP_NAME \
  --location $LOCATION
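The character rule for resource group names can be checked locally before you create the group. The following sketch tests only the character set that this guide lists, and it approximates "letters" as ASCII A-Z and a-z, which is an assumption. The function name is hypothetical, and the sketch doesn't check uniqueness or any other naming limits.

```shell
# Check that a name uses only the characters this guide lists for resource
# group names: letters, numbers, hyphens, underscores, parentheses, periods.
# Assumption: "letters" is approximated as ASCII A-Z and a-z.
valid_group_name() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[A-Za-z0-9_.()-]+$'
}

# Usage:
# valid_group_name "$GROUP_NAME" || echo "Unexpected characters in name" >&2
```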

Set the following environment variable to provide a name for your Azure Arc-enabled Kubernetes cluster:

CONNECTED_CLUSTER_NAME="$GROUP_NAME-cluster"

| Parameter | Required | Value | Description |
|---|---|---|---|
| CONNECTED_CLUSTER_NAME | Yes | <Azure-Arc-cluster-resource-group-name>-cluster | The name to use for your Azure Arc-enabled cluster. This name must be unique across regions and can contain only letters, numbers, hyphens (-), underscores (_), parentheses (()), and periods (.). This example uses Hybrid-Arc-RG-cluster. |

Connect your previously created Kubernetes cluster to Azure Arc:

az connectedk8s connect \
  --resource-group $GROUP_NAME \
  --name $CONNECTED_CLUSTER_NAME

Validate the connection between Azure Arc and your Kubernetes cluster:

az connectedk8s show \
  --resource-group $GROUP_NAME \
  --name $CONNECTED_CLUSTER_NAME

If the output shows that the provisioningState property value isn't set to Succeeded, run the command again after one minute.
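Rather than rerunning the command by hand, you could wrap the check in a retry loop. The following sketch reruns any status command until it prints Succeeded or the attempts run out. The function name and the attempt count are hypothetical, and the commented usage assumes that the standard Azure CLI --query and --output options return only the provisioningState value.

```shell
# Re-run a status command until it prints "Succeeded" or attempts run out.
# Arguments: max attempts, delay in seconds, then the command to run.
wait_until_succeeded() {
  attempts="$1"; delay="$2"; shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Treat a failing command the same as a state that isn't ready yet.
    state=$("$@" 2>/dev/null) || state=""
    [ "$state" = "Succeeded" ] && return 0
    # Sleep between attempts, but not after the last one.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage (requires Azure CLI and a signed-in session, so it stays commented out):
# wait_until_succeeded 10 60 az connectedk8s show \
#   --resource-group "$GROUP_NAME" --name "$CONNECTED_CLUSTER_NAME" \
#   --query provisioningState --output tsv
```

The same function can wrap the later check on the custom location, because that step gives the same retry guidance.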


Create an Azure Log Analytics workspace

You can create an optional, but recommended, Azure Log Analytics workspace, which provides access to logs for apps that run in your Azure Arc-enabled Kubernetes cluster.

Set the following environment variable to provide a name for your Log Analytics workspace:

WORKSPACE_NAME="$GROUP_NAME-workspace"

| Parameter | Required | Value | Description |
|---|---|---|---|
| WORKSPACE_NAME | Yes | <Azure-Arc-cluster-resource-group-name>-workspace | The name to use for your Log Analytics workspace. This name must be unique within your resource group. This example uses Hybrid-Arc-RG-workspace. |

Create the Log Analytics workspace:

az monitor log-analytics workspace create \
  --resource-group $GROUP_NAME \
  --workspace-name $WORKSPACE_NAME

Get the base64-encoded ID and shared key for your Log Analytics workspace. You need these values for a later step.

LOG_ANALYTICS_WORKSPACE_ID=$(az monitor log-analytics workspace show \
  --resource-group $GROUP_NAME \
  --workspace-name $WORKSPACE_NAME \
  --query customerId \
  --output tsv)
LOG_ANALYTICS_WORKSPACE_ID_ENC=$(printf '%s' "$LOG_ANALYTICS_WORKSPACE_ID" | base64 | tr -d '\n')
LOG_ANALYTICS_KEY=$(az monitor log-analytics workspace get-shared-keys \
  --resource-group $GROUP_NAME \
  --workspace-name $WORKSPACE_NAME \
  --query primarySharedKey \
  --output tsv)
LOG_ANALYTICS_KEY_ENC=$(printf '%s' "$LOG_ANALYTICS_KEY" | base64 | tr -d '\n')

| Parameter | Required | Value | Description |
|---|---|---|---|
| LOG_ANALYTICS_WORKSPACE_ID | Yes | | The ID for your Log Analytics workspace. |
| LOG_ANALYTICS_WORKSPACE_ID_ENC | Yes | | The base64-encoded ID for your Log Analytics workspace. |
| LOG_ANALYTICS_KEY | Yes | | The shared key for your Log Analytics workspace. |
| LOG_ANALYTICS_KEY_ENC | Yes | | The base64-encoded shared key for your Log Analytics workspace. |
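If you run these steps in Bash, the base64 utility can produce the encoded values. The following sketch encodes a value without a trailing newline or line wrapping, and then decodes the result to confirm the round trip. The function names are hypothetical, and the sketch assumes a base64 implementation that accepts the -d option for decoding.

```shell
# Base64-encode a value without a trailing newline or line wrapping.
# printf '%s' avoids encoding a newline that echo would append.
b64_encode() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Decode an encoded value and compare it against the original.
# $1 = original value, $2 = base64-encoded value
b64_roundtrip_ok() {
  [ "$(printf '%s' "$2" | base64 -d)" = "$1" ]
}

# Usage:
# enc=$(b64_encode "$LOG_ANALYTICS_KEY")
# b64_roundtrip_ok "$LOG_ANALYTICS_KEY" "$enc" || echo "Encoding mismatch" >&2
```

Removing newlines matters because some base64 implementations wrap long output, and a wrapped value would no longer be a single setting value.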

Create and install the Azure Container Apps extension

Now, create and install the Azure Container Apps extension with your Azure Arc-enabled Kubernetes cluster as an on-premises resource.

Important

If you want to deploy to AKS on Azure Stack HCI, before you create and install the Azure Container Apps extension, make sure that you set up HAProxy or a custom load balancer.

Set the following environment variables to the following values:

EXTENSION_NAME="logicapps-aca-extension"
NAMESPACE="logicapps-aca-ns"
CONNECTED_ENVIRONMENT_NAME="<connected-environment-name>"

| Parameter | Required | Value | Description |
|---|---|---|---|
| EXTENSION_NAME | Yes | logicapps-aca-extension | The name for the Azure Container Apps extension. |
| NAMESPACE | Yes | logicapps-aca-ns | The cluster namespace where you want to provision resources. |
| CONNECTED_ENVIRONMENT_NAME | Yes | <connected-environment-name> | A unique name to use for the Azure Container Apps connected environment. This name becomes part of the domain name for the Standard logic app that you create, deploy, and host in the Azure Container Apps connected environment. |

Create and install the extension with Log Analytics enabled for your Azure Arc-enabled Kubernetes cluster. You can't later add Log Analytics to the extension.

az k8s-extension create \
  --resource-group $GROUP_NAME \
  --name $EXTENSION_NAME \
  --cluster-type connectedClusters \
  --cluster-name $CONNECTED_CLUSTER_NAME \
  --extension-type 'Microsoft.App.Environment' \
  --release-train stable \
  --auto-upgrade-minor-version true \
  --scope cluster \
  --release-namespace $NAMESPACE \
  --configuration-settings "Microsoft.CustomLocation.ServiceAccount=default" \
  --configuration-settings "appsNamespace=${NAMESPACE}" \
  --configuration-settings "keda.enabled=true" \
  --configuration-settings "keda.logicAppsScaler.enabled=true" \
  --configuration-settings "keda.logicAppsScaler.replicaCount=1" \
  --configuration-settings "containerAppController.api.functionsServerEnabled=true" \
  --configuration-settings "envoy.externalServiceAzureILB=false" \
  --configuration-settings "functionsProxyApiConfig.enabled=true" \
  --configuration-settings "clusterName=${CONNECTED_ENVIRONMENT_NAME}" \
  --configuration-settings "envoy.annotations.service.beta.kubernetes.io/azure-load-balancer-resource-group=${GROUP_NAME}" \
  --configuration-settings "logProcessor.appLogs.destination=log-analytics" \
  --configuration-protected-settings "logProcessor.appLogs.logAnalyticsConfig.customerId=${LOG_ANALYTICS_WORKSPACE_ID_ENC}" \
  --configuration-protected-settings "logProcessor.appLogs.logAnalyticsConfig.sharedKey=${LOG_ANALYTICS_KEY_ENC}"

| Parameter | Required | Description |
|---|---|---|
| Microsoft.CustomLocation.ServiceAccount | Yes | The service account created for the custom location. Recommendation: Set the value to default. |
| appsNamespace | Yes | The namespace to use for creating app definitions and revisions. This value must match the release namespace for the Azure Container Apps extension. |
| clusterName | Yes | The name for the Azure Container Apps extension Kubernetes environment to create for the extension. |
| keda.enabled | Yes | Enable Kubernetes Event-driven Autoscaling (KEDA). This value is required and must be set to true. |
| keda.logicAppsScaler.enabled | Yes | Enable the Azure Logic Apps scaler in KEDA. This value is required and must be set to true. |
| keda.logicAppsScaler.replicaCount | Yes | The initial number of logic app scalers to start. The default value is 1. This value scales up or scales down to 0, if no logic apps exist in the environment. |
| containerAppController.api.functionsServerEnabled | Yes | Enable the service responsible for converting logic app workflow triggers to KEDA-scaled objects. This value is required and must be set to true. |
| envoy.externalServiceAzureILB | Yes | Determines whether the envoy acts as an internal load balancer or a public load balancer. true: The envoy acts as an internal load balancer, and the Azure Logic Apps runtime is accessible only within the private network. false: The envoy acts as a public load balancer, and the Azure Logic Apps runtime is accessible over the public network. |
| functionsProxyApiConfig.enabled | Yes | Enable the proxy service that facilitates API access to the Azure Logic Apps runtime from the Azure portal. This value is required and must be set to true. |
| envoy.annotations.service.beta.kubernetes.io/azure-load-balancer-resource-group | Yes, but only when the underlying cluster is Azure Kubernetes Service. | The name for the resource group where the Kubernetes cluster exists. |
| logProcessor.appLogs.destination | No | The destination to use for application logs. The value is either log-analytics or none, which disables logging. |
| logProcessor.appLogs.logAnalyticsConfig.customerId | Yes, but only when logProcessor.appLogs.destination is set to log-analytics. | The base64-encoded ID for your Log Analytics workspace. Make sure to configure this parameter as a protected setting. |
| logProcessor.appLogs.logAnalyticsConfig.sharedKey | Yes, but only when logProcessor.appLogs.destination is set to log-analytics. | The base64-encoded shared key for your Log Analytics workspace. Make sure to configure this parameter as a protected setting. |
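Because the extension command reads many variables set in earlier steps, an empty variable can produce a confusing failure. The following sketch reports any named variables that are unset or empty before you run the command. The function name is hypothetical, and the check assumes that you pass only trusted variable names, because it uses eval for the lookup.

```shell
# Report any named variables that are unset or empty; return 1 if any are.
# Pass variable names without the leading dollar sign.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name-}"
    if [ -z "$value" ]; then
      echo "Missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# require_vars GROUP_NAME EXTENSION_NAME NAMESPACE CONNECTED_CLUSTER_NAME \
#   CONNECTED_ENVIRONMENT_NAME LOG_ANALYTICS_WORKSPACE_ID_ENC \
#   LOG_ANALYTICS_KEY_ENC || exit 1
```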

Save the ID value for the Azure Container Apps extension to use later:

EXTENSION_ID=$(az k8s-extension show \
  --cluster-type connectedClusters \
  --cluster-name $CONNECTED_CLUSTER_NAME \
  --resource-group $GROUP_NAME \
  --name $EXTENSION_NAME \
  --query id \
  --output tsv)

| Parameter | Required | Value | Description |
|---|---|---|---|
| EXTENSION_ID | Yes | <extension-ID> | The ID for the Azure Container Apps extension. |

Before you continue, wait for the extension to fully install. To have your terminal session wait until the installation completes, run the following command:

az resource wait \
  --ids $EXTENSION_ID \
  --custom "properties.provisioningState!='Pending'" \
  --api-version "2020-07-01-preview"

Create your custom location

Set the following environment variables to the specified values:

CUSTOM_LOCATION_NAME="my-custom-location"
CONNECTED_CLUSTER_ID=$(az connectedk8s show \
  --resource-group $GROUP_NAME \
  --name $CONNECTED_CLUSTER_NAME \
  --query id \
  --output tsv)

| Parameter | Required | Value | Description |
|---|---|---|---|
| CUSTOM_LOCATION_NAME | Yes | my-custom-location | The name to use for your custom location. |
| CONNECTED_CLUSTER_ID | Yes | <Azure-Arc-cluster-ID> | The ID for the Azure Arc-enabled Kubernetes cluster. |

Create the custom location:

az customlocation create \
  --resource-group $GROUP_NAME \
  --name $CUSTOM_LOCATION_NAME \
  --host-resource-id $CONNECTED_CLUSTER_ID \
  --namespace $NAMESPACE \
  --cluster-extension-ids $EXTENSION_ID \
  --location $LOCATION

Note

If you experience issues creating a custom location on your cluster, you might have to enable the custom location feature on your cluster. This step is required if you signed in to Azure CLI using a service principal, or if you signed in as a Microsoft Entra user with restricted permissions on the cluster resource.

Validate that the custom location is successfully created:

az customlocation show \
  --resource-group $GROUP_NAME \
  --name $CUSTOM_LOCATION_NAME

If the output shows that the provisioningState property value isn't set to Succeeded, run the command again after one minute.

Save the custom location ID for use in a later step:

CUSTOM_LOCATION_ID=$(az customlocation show \
  --resource-group $GROUP_NAME \
  --name $CUSTOM_LOCATION_NAME \
  --query id \
  --output tsv)

| Parameter | Required | Value | Description |
|---|---|---|---|
| CUSTOM_LOCATION_ID | Yes | <my-custom-location-ID> | The ID for your custom location. |

Create the Azure Container Apps connected environment

Now, create your Azure Container Apps connected environment for your Standard logic app to use.

az containerapp connected-env create \
  --resource-group $GROUP_NAME \
  --name $CONNECTED_ENVIRONMENT_NAME \
  --custom-location $CUSTOM_LOCATION_ID \
  --location $LOCATION

Create SQL Server storage provider

Standard logic app workflows in the hybrid deployment model use a SQL database as the storage provider for the data used by workflows and the Azure Logic Apps runtime, for example, workflow run history, inputs, outputs, and so on.

Your SQL database requires inbound and outbound connectivity with your Kubernetes cluster, so these resources must exist in the same network.

Set up any of the following SQL Server editions:

- SQL Server on premises

- Azure SQL Database

- Azure SQL Managed Instance

- SQL Server enabled by Azure Arc

For more information, see Set up SQL database storage for Standard logic app workflows.

Confirm that your SQL database is in the same network as your Arc-enabled Kubernetes cluster and SMB file share.

Find and save the connection string for the SQL database that you created.

Set up SMB file share for artifacts storage

To store artifacts such as maps, schemas, and assemblies for your logic app (container app) resource, you need to have a file share that uses the Server Message Block (SMB) protocol.

You need administrator access to set up your SMB file share.

Your SMB file share must exist in the same network as your Kubernetes cluster and SQL database.

Your SMB file share requires inbound and outbound connectivity with your Kubernetes cluster. If you enabled Azure virtual network restrictions, make sure that your file share exists in the same virtual network as your Kubernetes cluster or in a peered virtual network.

Don't use the same exact file share path for multiple logic apps.

You can use separate SMB file shares for each logic app, or you can use different folders in the same SMB file share as long as those folders aren't nested. For example, don't have a logic app use the root path, and then have another logic app use a subfolder.
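The rule against identical or nested share paths can be checked with a small path comparison before you assign a folder to a logic app. The following sketch treats two paths as conflicting when they're equal or when one is a parent folder of the other. The function name and the sample paths are hypothetical, and the comparison assumes forward-slash paths that are already written in the same form.

```shell
# Return success (0) if two share paths are identical or one contains the other.
# A single trailing slash is ignored on each path.
smb_paths_conflict() {
  a="${1%/}"; b="${2%/}"
  case "$a" in "$b"|"$b"/*) return 0 ;; esac   # same path, or a is inside b
  case "$b" in "$a"/*) return 0 ;; esac        # b is inside a
  return 1
}

# Usage:
# smb_paths_conflict //fileserver/share //fileserver/share/app1 \
#   && echo "Paths overlap; choose sibling folders instead" >&2
```

Matching on the path separator, rather than on a plain string prefix, keeps sibling folders such as app1 and app10 from being reported as nested.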

To deploy your logic app using Visual Studio Code, make sure that the local computer with Visual Studio Code can access the file share.

Set up your SMB file share on Windows

Make sure that your SMB file share exists in the same virtual network as the cluster where you mount your file share.

In Windows, go to the folder that you want to share, open the shortcut menu, and select Properties.

On the Sharing tab, select Share.

In the box that opens, select a person who you want to have access to the file share.

Select Share, and copy the link for the network path.

If your local computer isn't connected to a domain, replace the computer name in the network path with the IP address.

Save the IP address to use later as the host name.

Set up Azure Files as your SMB file share

Alternatively, for testing purposes, you can use Azure Files as an SMB file share. Make sure that your SMB file share exists in the same virtual network as the cluster where you mount your file share.

From the storage account menu, under Data storage, select File shares.

From the File shares page toolbar, select + File share, and provide the required information for your SMB file share.

After deployment completes, select Go to resource.

On the file share menu, select Overview, if not selected.

On the Overview page toolbar, select Connect. On the Connect pane, select Show script.

Copy the following values and save them somewhere safe for later use:

- File share's host name, for example, mystorage.file.core.windows.net

- File share path

- Username without localhost\

- Password

On the Overview page toolbar, select + Add directory, and provide a name to use for the directory. Save this name to use later.

You need these saved values to provide your SMB file share information when you deploy your logic app resource.

For more information, see Create an SMB Azure file share.

Confirm SMB file share connection

To test the connection between your Arc-enabled Kubernetes cluster and your SMB file share, and to check that your file share is correctly set up, follow these steps:

If your SMB file share isn't on the same cluster, confirm that the ping operation works from your Arc-enabled Kubernetes cluster to the virtual machine that has your SMB file share. To check that the ping operation works, follow these steps:

In your Arc-enabled Kubernetes cluster, create a test pod that runs any Linux image, such as BusyBox or Ubuntu.

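Go to the container in your pod, and install the iputils-ping package by running the following Linux commands. These commands require a Debian-based image, such as Ubuntu, because the BusyBox image doesn't include apt-get:

apt-get update
apt-get install iputils-ping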

To confirm that your SMB file share is correctly set up, follow these steps:

In your test pod with the same Linux image, create a folder that has the path named mnt/smb.

Go to the root or home directory that contains the mnt folder.

Run the following command:

mount -t cifs //{ip-address-smb-computer}/{file-share-name} mnt/smb -o username={user-name},password={password}

To confirm that artifacts correctly upload, connect to the SMB file share path, and check whether artifact files exist in the correct folder that you specify during deployment.

Next steps

Create Standard logic app workflows for hybrid deployment on your own infrastructure