Tutorial: Migrate Event Hubs captured data from Azure Storage to Azure Synapse Analytics using Azure Event Grid and Azure Functions

In this tutorial, you'll migrate Event Hubs captured data from Azure Blob Storage to Azure Synapse Analytics, specifically a dedicated SQL pool, using Azure Event Grid and Azure Functions.

This diagram depicts the workflow of the solution you build in this tutorial:

- Data sent to an Azure event hub is captured in an Azure blob storage.

- When the data capture is complete, an event is generated and sent to Azure Event Grid.

- Azure Event Grid forwards this event data to an Azure function app.

- The function app uses the blob URL in the event data to retrieve the blob from the storage.

- The function app migrates the blob data to an Azure Synapse Analytics.

In this article, you take the following steps:

- Deploy the required infrastructure for the tutorial

- Publish code to a Functions App

- Create an Event Grid subscription

- Stream sample data into Event Hubs

- Verify captured data in Azure Synapse Analytics

Prerequisites

To complete this tutorial, you must have:

- This article assumes that you are familiar with Event Grid and Event Hubs (especially the Capture feature). If you aren't familiar with Azure Event Grid, see Introduction to Azure Event Grid. To learn about the Capture feature of Azure Event Hubs, see Capture events through Azure Event Hubs in Azure Blob Storage or Azure Data Lake Storage.

- An Azure subscription. If you don't have an Azure subscription, create a free account before you begin.

- Visual Studio with workloads for: .NET desktop development, Azure development, ASP.NET and web development, Node.js development, and Python development.

- Download the EventHubsCaptureEventGridDemo sample project to your computer.

- WindTurbineDataGenerator – A simple publisher that sends sample wind turbine data to an event hub with the Capture feature enabled.

- FunctionDWDumper – An Azure function that receives a notification from Azure Event Grid when an Avro file is captured to the Azure Storage blob. It receives the blob’s URI path, reads its contents, and pushes this data to Azure Synapse Analytics (dedicated SQL pool).

Deploy the infrastructure

In this step, you deploy the required infrastructure with a Resource Manager template. When you deploy the template, the following resources are created:

- Event hub with the Capture feature enabled.

- Storage account for the captured files.

- App service plan for hosting the function app

- Function app for processing the event

- SQL Server for hosting the data warehouse

- Azure Synapse Analytics (dedicated SQL pool) for storing the migrated data

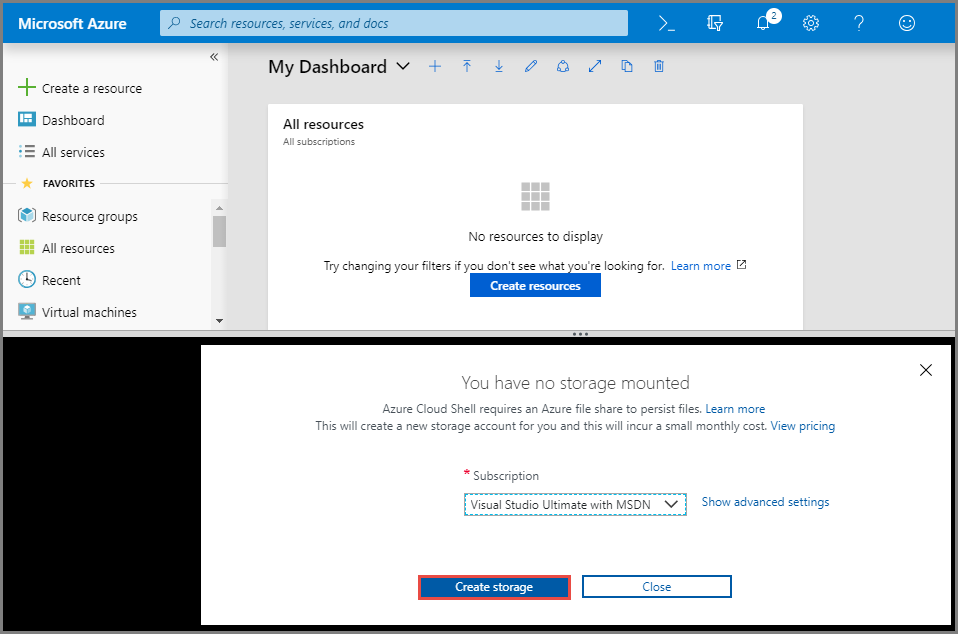

Use Azure CLI to deploy the infrastructure

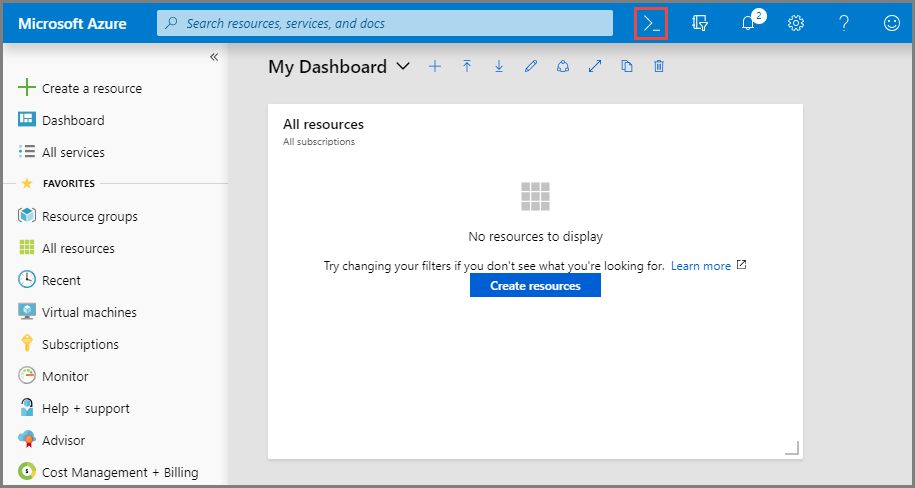

Sign in to the Azure portal.

Select Cloud Shell button at the top.

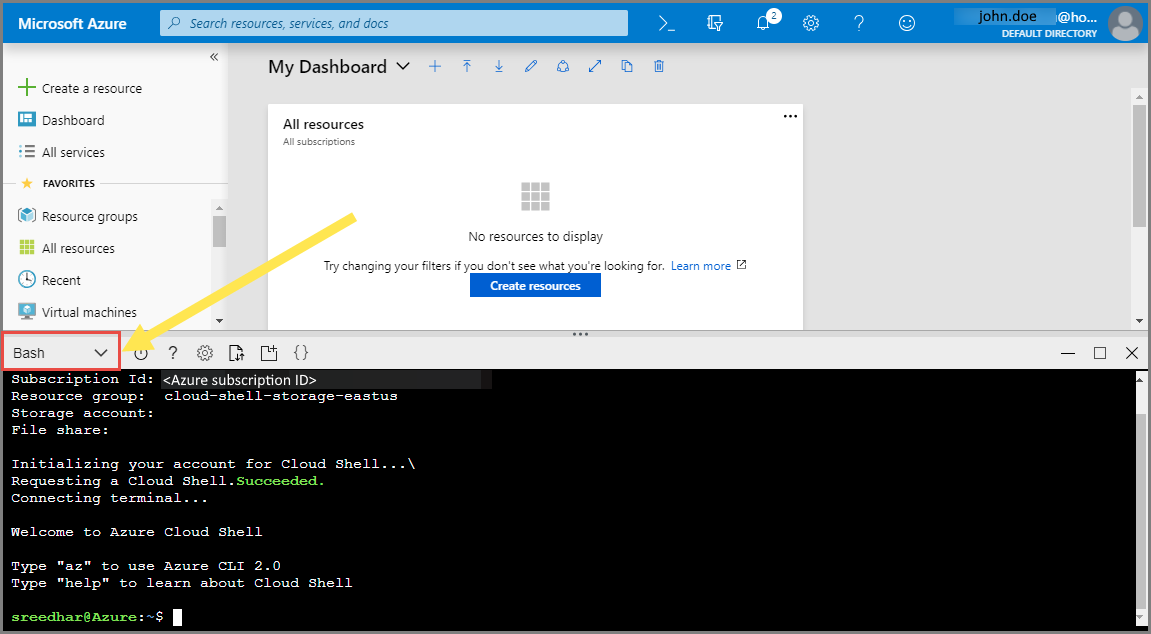

You see the Cloud Shell opened at the bottom of the browser.

In the Cloud Shell, select Bash as shown in the above image, if it isn't already selected.

Create an Azure resource group by running the following CLI command:

Copy and paste the following command into the Cloud Shell window. Change the resource group name and location if you want.

az group create -l eastus -n rgDataMigrationPress ENTER.

Here's an example:

user@Azure:~$ az group create -l eastus -n rgDataMigration { "id": "/subscriptions/00000000-0000-0000-0000-0000000000000/resourceGroups/rgDataMigration", "location": "eastus", "managedBy": null, "name": "rgDataMigration", "properties": { "provisioningState": "Succeeded" }, "tags": null }

Deploy all the resources mentioned in the previous section (event hub, storage account, functions app, Azure Synapse Analytics) by running the following CLI command:

Copy and paste the command into the Cloud Shell window. Alternatively, you can copy/paste into an editor of your choice, set values, and then copy the command to the Cloud Shell. If you see an error due to an Azure resource name, delete the resource group, fix the name, and retry the command again.

Important

Specify values for the following entities before running the command:

- Name of the resource group you created earlier.

- Name for the event hub namespace.

- Name for the event hub. You can leave the value as it is (hubdatamigration).

- Name for the SQL server.

- Name of the SQL user and password.

- Name for the database.

- Name of the storage account.

- Name for the function app.

az deployment group create \ --resource-group rgDataMigration \ --template-uri https://raw.githubusercontent.com/Azure/azure-docs-json-samples/master/event-grid/EventHubsDataMigration.json \ --parameters eventHubNamespaceName=<event-hub-namespace> eventHubName=hubdatamigration sqlServerName=<sql-server-name> sqlServerUserName=<user-name> sqlServerPassword=<password> sqlServerDatabaseName=<database-name> storageName=<unique-storage-name> functionAppName=<app-name>Press ENTER in the Cloud Shell window to run the command. This process might take a while since you're creating a bunch of resources. In the result of the command, ensure that there have been no failures.

Close the Cloud Shell by selecting the Cloud Shell button in the portal (or) X button in the top-right corner of the Cloud Shell window.

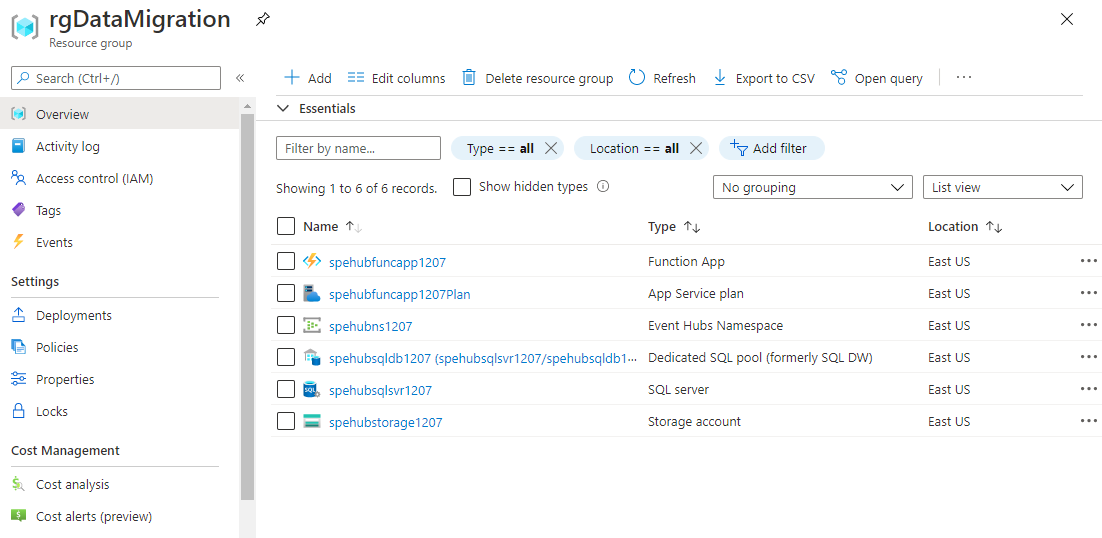

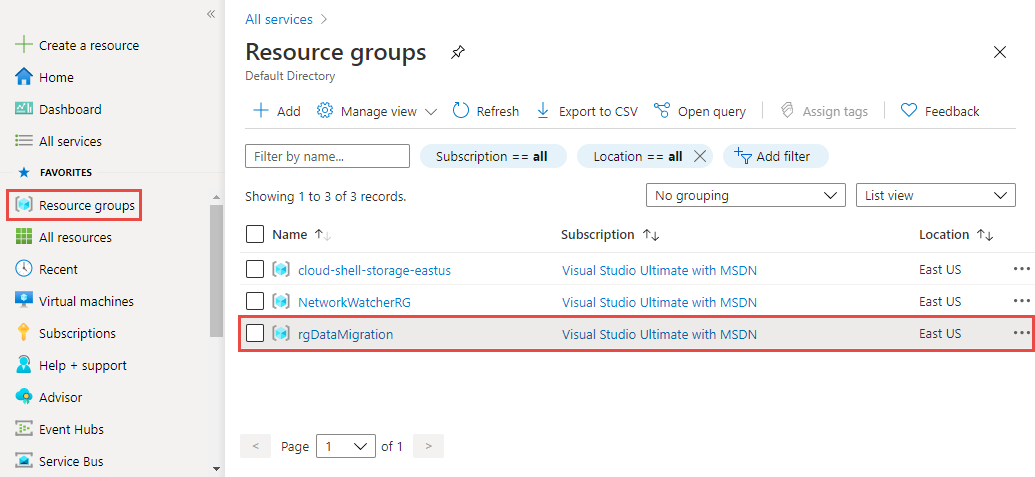

Verify that the resources are created

In the Azure portal, select Resource groups on the left menu.

Filter the list of resource groups by entering the name of your resource group in the search box.

Select your resource group in the list.

Confirm that you see the following resources in the resource group:

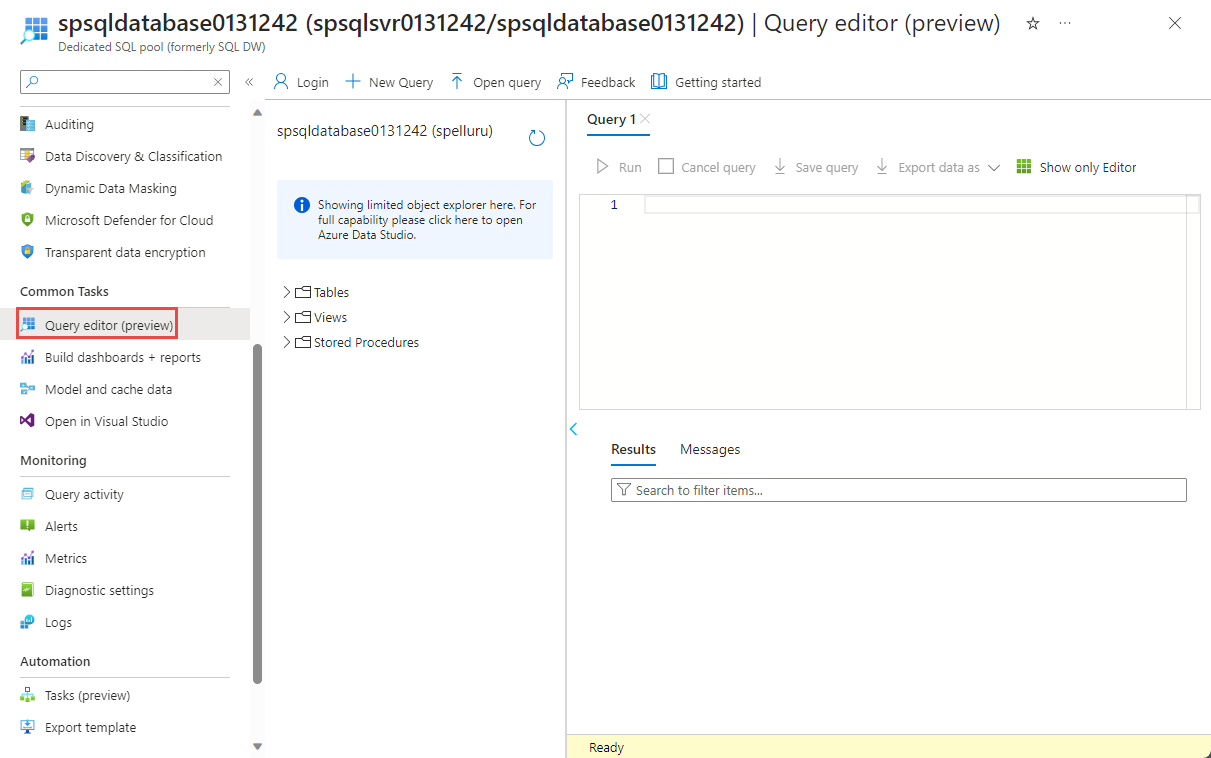

Create a table in Azure Synapse Analytics

In this section, you create a table in the dedicated SQL pool you created earlier.

In the list of resources in the resource group, select your dedicated SQL pool.

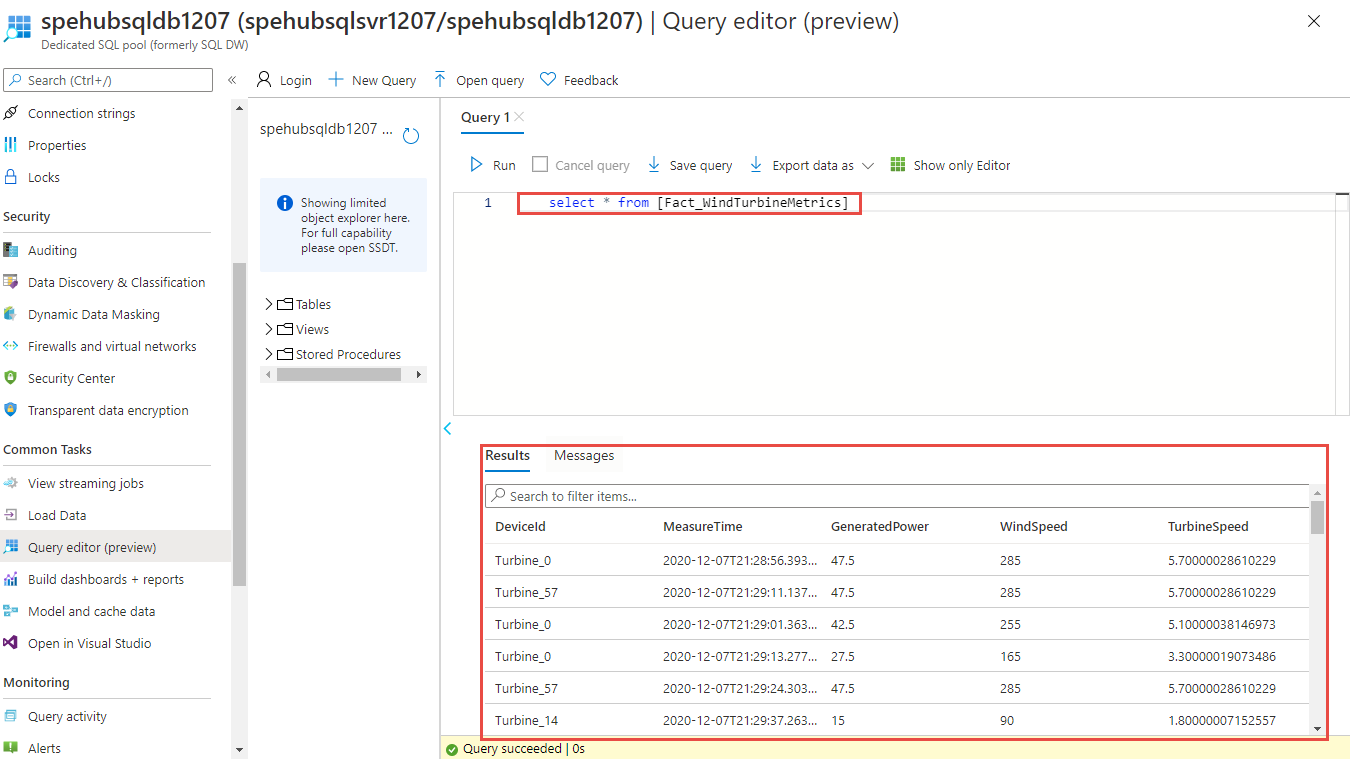

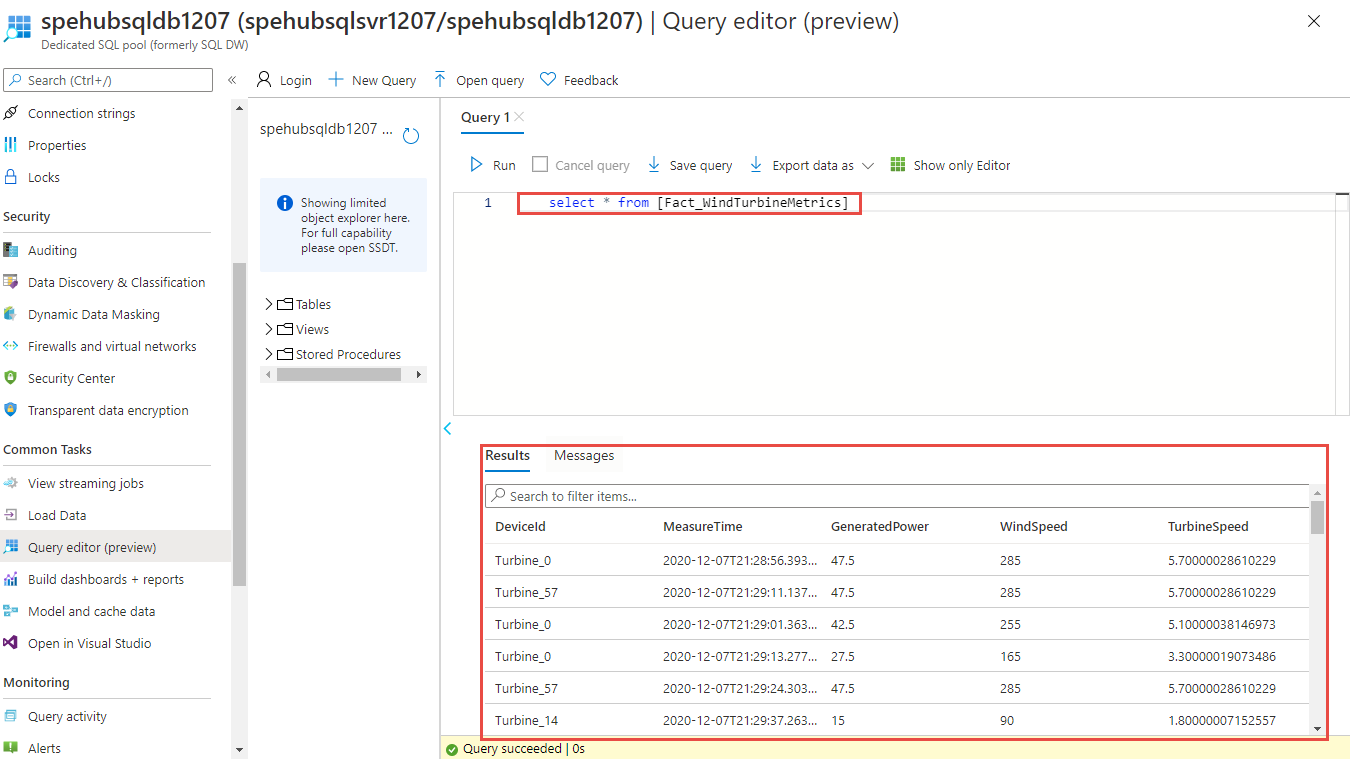

On the Dedicated SQL pool page, in the Common Tasks section on the left menu, select Query editor (preview).

Enter the name of user and password for the SQL server, and select OK. If you see a message about allowing your client to access the SQL server, select Allowlist IP <your IP Address> on server <your SQL server>, and then select OK.

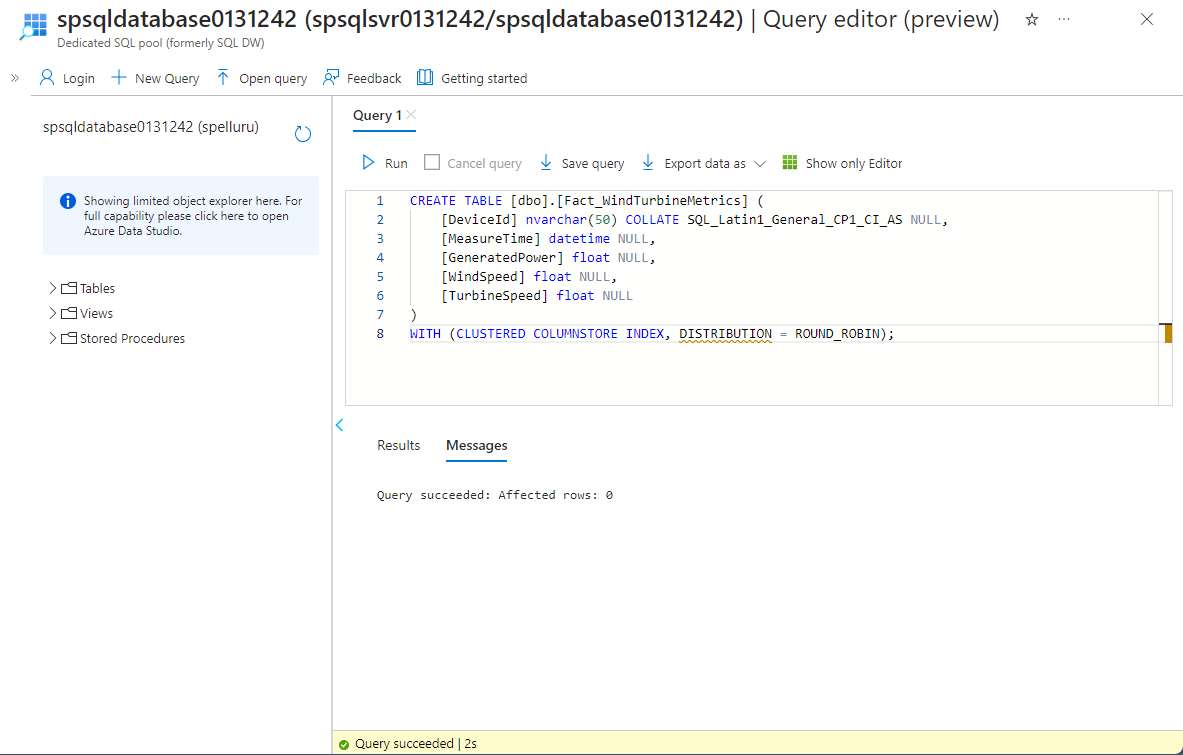

In the query window, copy and run the following SQL script:

CREATE TABLE [dbo].[Fact_WindTurbineMetrics] ( [DeviceId] nvarchar(50) COLLATE SQL_Latin1_General_CP1_CI_AS NULL, [MeasureTime] datetime NULL, [GeneratedPower] float NULL, [WindSpeed] float NULL, [TurbineSpeed] float NULL ) WITH (CLUSTERED COLUMNSTORE INDEX, DISTRIBUTION = ROUND_ROBIN);

Keep this tab or window open so that you can verify that the data is created at the end of the tutorial.

Publish the Azure Functions app

First, get the publish profile for the Functions app from the Azure portal. Then, use the publish profile to publish the Azure Functions project or app from Visual Studio.

Get the publish profile

On the Resource Group page, select the Azure Functions app in the list of resources.

On the Function App page for your app, select Get publish profile on the command bar.

Download and save the file into the FunctionEGDDumper subfolder of the EventHubsCaptureEventGridDemo folder.

Use the publish profile to publish the Functions app

Launch Visual Studio.

Open the EventHubsCaptureEventGridDemo.sln solution that you downloaded from the GitHub as part of the prerequisites. You can find it in the

/samples/e2e/EventHubsCaptureEventGridDemofolder.In Solution Explorer, right-click FunctionEGDWDumper project, and select Publish.

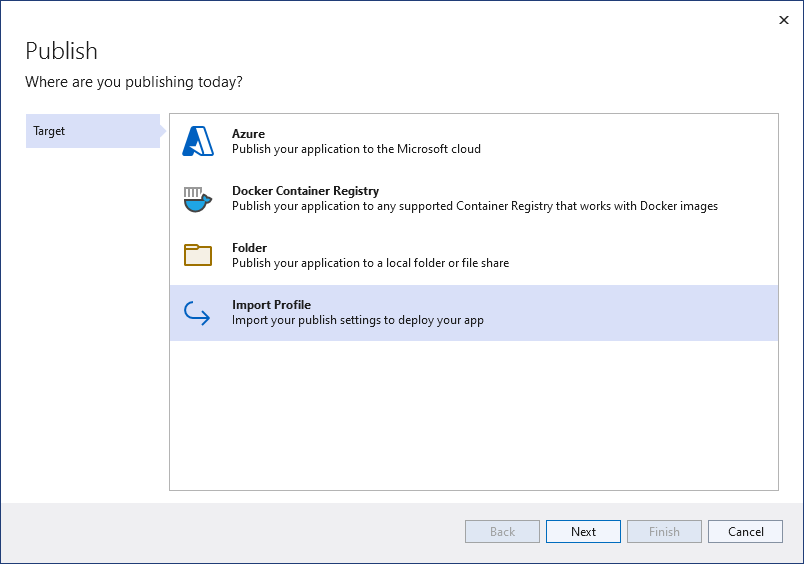

In the following screen, select Start or Add a publish profile.

In the Publish dialog box, select Import Profile for Target, and select Next.

On the Import profile tab, select the publish settings file that you saved earlier in the FunctionEGDWDumper folder, and then select Finish.

When Visual Studio has configured the profile, select Publish. Confirm that the publishing succeeded.

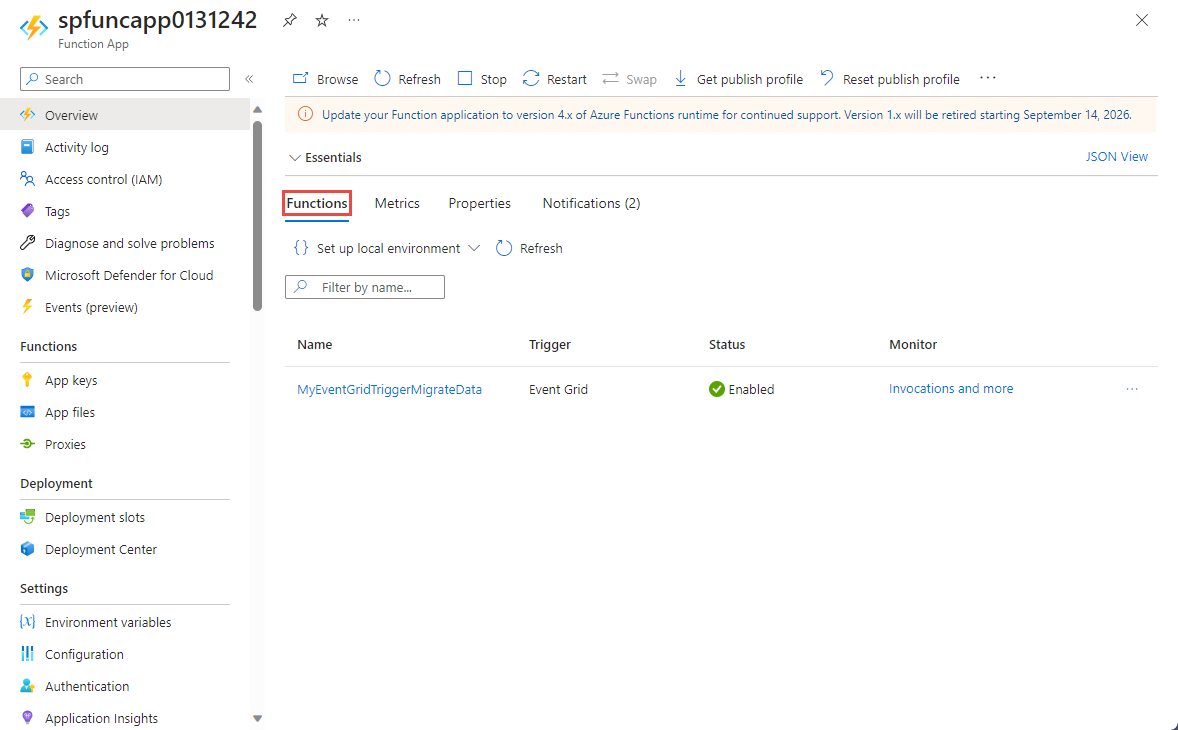

In the web browser that has the Azure Function page open, select Functions in the middle pane. Confirm that the EventGridTriggerMigrateData function shows up in the list. If you don't see it, try publishing from Visual Studio again, and then refresh the page in the portal.

After publishing the function, you're ready to subscribe to the event.

Subscribe to the event

In a new tab or new window of a web browser, sign in to the Azure portal.

In the Azure portal, select Resource groups on the left menu.

Filter the list of resource groups by entering the name of your resource group in the search box.

Select your resource group in the list.

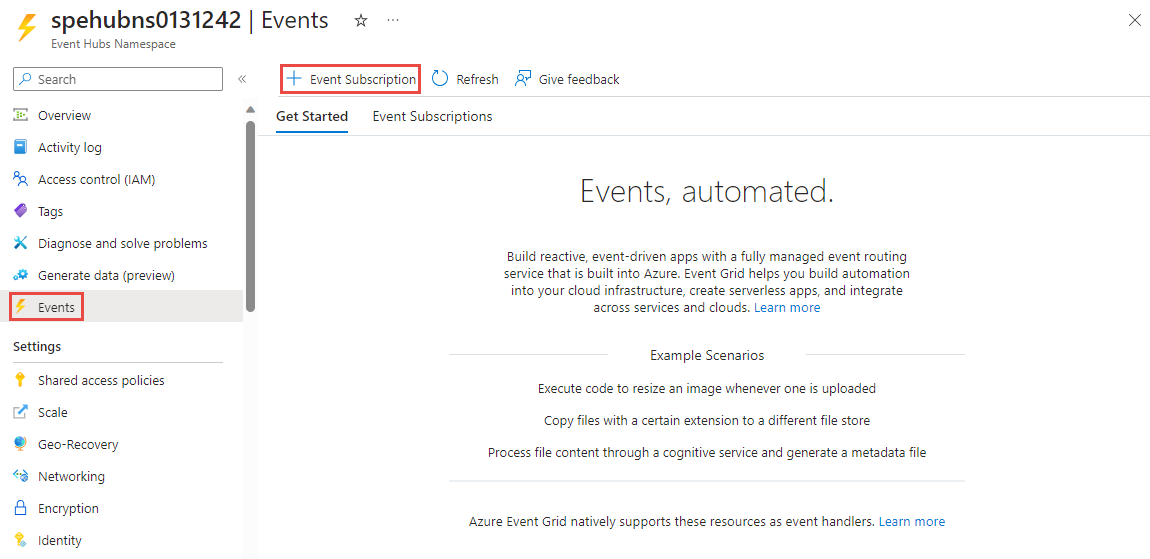

Select the Event Hubs namespace from the list of resources.

On the Event Hubs Namespace page, select Events on the left menu, and then select + Event Subscription on the toolbar.

On the Create Event Subscription page, follow these steps:

Enter a name for the event subscription.

Enter a name for the system topic. A system topic provides an endpoint for the sender to send events. For more information, see System topics

For Endpoint Type, select Azure Function.

For Endpoint, select the link.

On the Select Azure Function page, follow these steps if they aren't automatically filled.

- Select the Azure subscription that has the Azure function.

- Select the resource group for the function.

- Select the function app.

- Select the deployment slot.

- Select the function EventGridTriggerMigrateData.

On the Select Azure Function page, select Confirm Selection.

Then, back on the Create Event Subscription page, select Create.

Verify that the event subscription is created. Switch to the Event Subscriptions tab on the Events page for the Event Hubs namespace.

Run the app to generate data

You've finished setting up your event hub, dedicate SQL pool (formerly SQL Data Warehouse), Azure function app, and event subscription. Before running an application that generates data for event hub, you need to configure a few values.

In the Azure portal, navigate to your resource group as you did earlier.

Select the Event Hubs namespace.

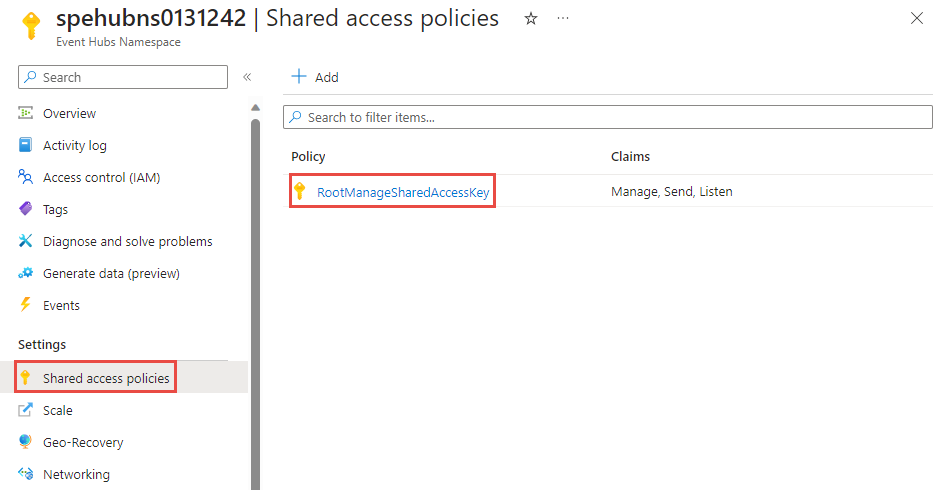

In the Event Hubs Namespace page, select Shared access policies on the left menu.

Select RootManageSharedAccessKey in the list of policies.

Select the copy button next to the Connection string-primary key text box.

Go back to your Visual Studio solution.

Right-click WindTurbineDataGenerator project, and select Set as Startup project.

In the WindTurbineDataGenerator project, open program.cs.

Replace

<EVENT HUBS NAMESPACE CONNECTION STRING>with the connection string you copied from the portal.If you've used a different name for the event hub other than

hubdatamigration, replace<EVENT HUB NAME>with the name of the event hub.private const string EventHubConnectionString = "Endpoint=sb://demomigrationnamespace.servicebus.windows.net/..."; private const string EventHubName = "hubdatamigration";Build the solution. Run the WindTurbineGenerator.exe application.

After a couple of minutes, in the other browser tab where you have the query window open, query the table in your data warehouse for the migrated data.

select * from [dbo].[Fact_WindTurbineMetrics]

Important

We use connection string to authenticate to Azure Event Hubs namespace to keep the tutorial simple. We recommend that you use Microsoft Entra ID authentication in production environments. When using an application, you can enable managed identity for the application and assign the identity an appropriate role (Azure Event Hubs Owner, Azure Event Hubs Data Sender, or Azure Event Hubs Data Receiver) on the Event Hubs namespace. For more information, see Authorize access to Event Hubs using Microsoft Entra ID.

Monitor the solution

This section helps you with monitoring or troubleshooting the solution.

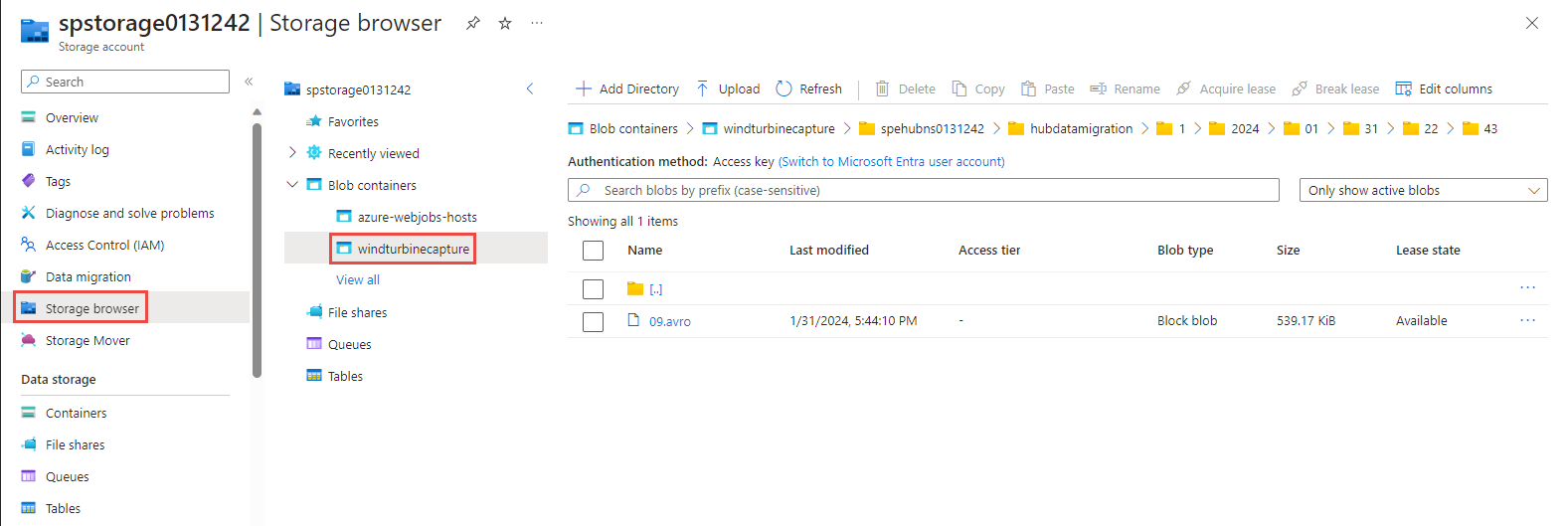

View captured data in the storage account

Navigate to the resource group and select the storage account used for capturing event data.

On the Storage account page, select Storage browser on the left menu.

Expand BLOB CONTAINERS, and select windturbinecapture.

Open the folder named same as your Event Hubs namespace in the right pane.

Open the folder named same as your event hub (hubdatamigration).

Drill through the folders and you see the AVRO files. Here's an example:

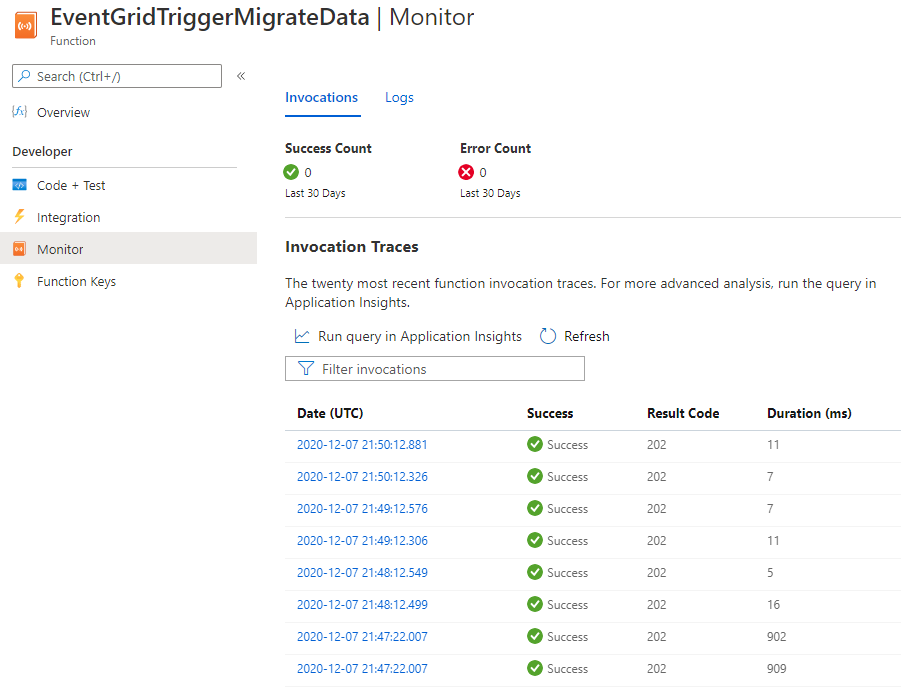

Verify that the Event Grid trigger invoked the function

Navigate to the resource group and select the function app.

Select Functions tab in the middle pane.

Select the EventGridTriggerMigrateData function from the list.

On the Function page, select Monitor on the left menu.

Select Configure to configure application insights to capture invocation logs.

Create a new Application Insights resource or use an existing resource.

Navigate back to the Monitor page for the function.

Confirm that the client application (WindTurbineDataGenerator) that's sending the events is still running. If not, run the app.

Wait for a few minutes (5 minutes or more) and select the Refresh button to see function invocations.

Select an invocation to see details.

Event Grid distributes event data to the subscribers. The following example shows event data generated when data streaming through an event hub is captured in a blob. In particular, notice the

fileUrlproperty in thedataobject points to the blob in the storage. The function app uses this URL to retrieve the blob file with captured data.{ "topic": "/subscriptions/<AZURE SUBSCRIPTION ID>/resourcegroups/rgDataMigration/providers/Microsoft.EventHub/namespaces/spehubns1207", "subject": "hubdatamigration", "eventType": "Microsoft.EventHub.CaptureFileCreated", "id": "4538f1a5-02d8-4b40-9f20-36301ac976ba", "data": { "fileUrl": "https://spehubstorage1207.blob.core.windows.net/windturbinecapture/spehubns1207/hubdatamigration/0/2020/12/07/21/49/12.avro", "fileType": "AzureBlockBlob", "partitionId": "0", "sizeInBytes": 473444, "eventCount": 2800, "firstSequenceNumber": 55500, "lastSequenceNumber": 58299, "firstEnqueueTime": "2020-12-07T21:49:12.556Z", "lastEnqueueTime": "2020-12-07T21:50:11.534Z" }, "dataVersion": "1", "metadataVersion": "1", "eventTime": "2020-12-07T21:50:12.7065524Z" }

Verify that the data is stored in the dedicated SQL pool

In the browser tab where you have the query window open, query the table in your dedicated SQL pool for the migrated data.

Next steps

- For more information about setting up and running the sample, see Event Hubs Capture and Event Grid sample.

- In this tutorial, you created an event subscription for the

CaptureFileCreatedevent. For more information about this event and all the events supported by Azure Blob Storage, see Azure Event Hubs as an Event Grid source. - To learn more about the Event Hubs Capture feature, see Capture events through Azure Event Hubs in Azure Blob Storage or Azure Data Lake Storage.