Configure key-less authentication with Microsoft Entra ID

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Models deployed to Azure AI model inference in Azure AI Services support key-less authorization using Microsoft Entra ID. Key-less authorization enhances security, simplifies the user experience, reduces operational complexity, and provides robust compliance support for modern development. This makes it a strong choice for organizations adopting secure and scalable identity management solutions.

This article explains how to configure Microsoft Entra ID for inference in Azure AI model inference.

Understand roles in the context of resources in Azure

Microsoft Entra ID uses the idea of Role-based Access Control (RBAC) for authorization. Roles are central to managing access to your cloud resources. A role is essentially a collection of permissions that define what actions can be performed on specific Azure resources. By assigning roles to users, groups, service principals, or managed identities—collectively known as security principals—you control their access within your Azure environment to specific resources.

When you assign a role, you specify the security principal, the role definition, and the scope. This combination is known as a role assignment. Azure AI model inference is a capability of the Azure AI Services resources, and hence, roles assigned to that particular resource control the access for inference.
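The three elements of a role assignment can be sketched as a small data structure. This is only an illustration of the concept, not an Azure SDK type: the `RoleAssignment` class and the `ai_services_scope` helper are hypothetical names, and the placeholder values must be replaced with your own. The role ID is the one this article's Bicep sample uses for Cognitive Services User.

```python
from dataclasses import dataclass

# Role definition ID for Cognitive Services User, as used in this article's Bicep sample.
COGNITIVE_SERVICES_USER_ROLE_ID = "a97b65f3-24c7-4388-baec-2e87135dc908"


def ai_services_scope(subscription_id: str, resource_group: str, account_name: str) -> str:
    """Build the ARM resource ID that acts as the scope of the assignment."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.CognitiveServices/accounts/{account_name}"
    )


@dataclass(frozen=True)
class RoleAssignment:
    """Hypothetical container: who (principal), what (role), where (scope)."""

    principal_id: str
    role_definition_id: str
    scope: str


assignment = RoleAssignment(
    principal_id="00000000-0000-0000-0000-000000000000",  # placeholder object ID
    role_definition_id=COGNITIVE_SERVICES_USER_ROLE_ID,
    scope=ai_services_scope("<subscription-id>", "<resource-group>", "<ai-services-resource-name>"),
)
print(assignment.scope)
```

Scoping the assignment to the single Azure AI Services resource, rather than to the resource group or subscription, keeps the grant as narrow as the inference use case requires.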

You can identify two different types of access to the resources:

Administration access: The actions related to the administration of the resource. They usually change the state of the resource and its configuration. In Azure, those operations are control-plane operations and can be executed using the Azure portal, the Azure CLI, or infrastructure as code. Examples include creating new model deployments, changing content filtering configurations, changing the version of the model served, or changing the SKU of a deployment.

Developer access: The actions related to the consumption of the resources, for example, invoking the chat completions API. With this access, the user can't change the state of the resource or its configuration.

In Azure, administration operations are always performed using Microsoft Entra ID. Roles like Cognitive Services Contributor allow you to perform those operations. Developer operations, on the other hand, can be performed using access keys, Microsoft Entra ID, or both. Roles like Cognitive Services User allow you to perform those operations.

Important

Having administration access to a resource doesn't necessarily grant developer access to it. You still need to grant access explicitly by assigning roles. It's analogous to how database servers work: having administrator access to the database server doesn't mean you can read the data inside a database.

Follow these steps to configure developer access to Azure AI model inference in the Azure AI Services resource.

Prerequisites

To complete this article, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

An Azure AI services resource. For more information, see Create an Azure AI Services resource.

An account with Microsoft.Authorization/roleAssignments/write and Microsoft.Authorization/roleAssignments/delete permissions, such as the Role Based Access Control Administrator role.

To assign a role, you must specify three elements:

- Security principal: e.g. your user account.

- Role definition: the Cognitive Services User role.

- Scope: the Azure AI Services resource.

If you want to create a custom role definition instead of using Cognitive Services User role, ensure the role has the following permissions:

    {
      "permissions": [
        {
          "dataActions": [
            "Microsoft.CognitiveServices/accounts/MaaS/*"
          ]
        }
      ]
    }
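As a minimal sketch, the following builds a complete custom role definition document around that data action, in the JSON shape that `az role definition create --role-definition` accepts. The role name, the description, and the `build_custom_role` helper are hypothetical, and the assignable scope is a placeholder you would replace with your own subscription.

```python
import json


def build_custom_role(name: str, subscription_id: str) -> dict:
    """Return a custom role definition that only allows inference data actions."""
    return {
        "Name": name,
        "IsCustom": True,
        "Description": "Inference-only access to Azure AI model inference.",
        "Actions": [],  # no control-plane (administration) permissions
        "NotActions": [],
        "DataActions": ["Microsoft.CognitiveServices/accounts/MaaS/*"],
        "NotDataActions": [],
        "AssignableScopes": [f"/subscriptions/{subscription_id}"],
    }


role = build_custom_role("AI Model Inference User (custom)", "<subscription-id>")
print(json.dumps(role, indent=2))
```

Leaving `Actions` empty mirrors the distinction made earlier in this article: the role grants developer (data-plane) access without any administration access.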

Configure Microsoft Entra ID for inference

Follow these steps to configure Microsoft Entra ID for inference:

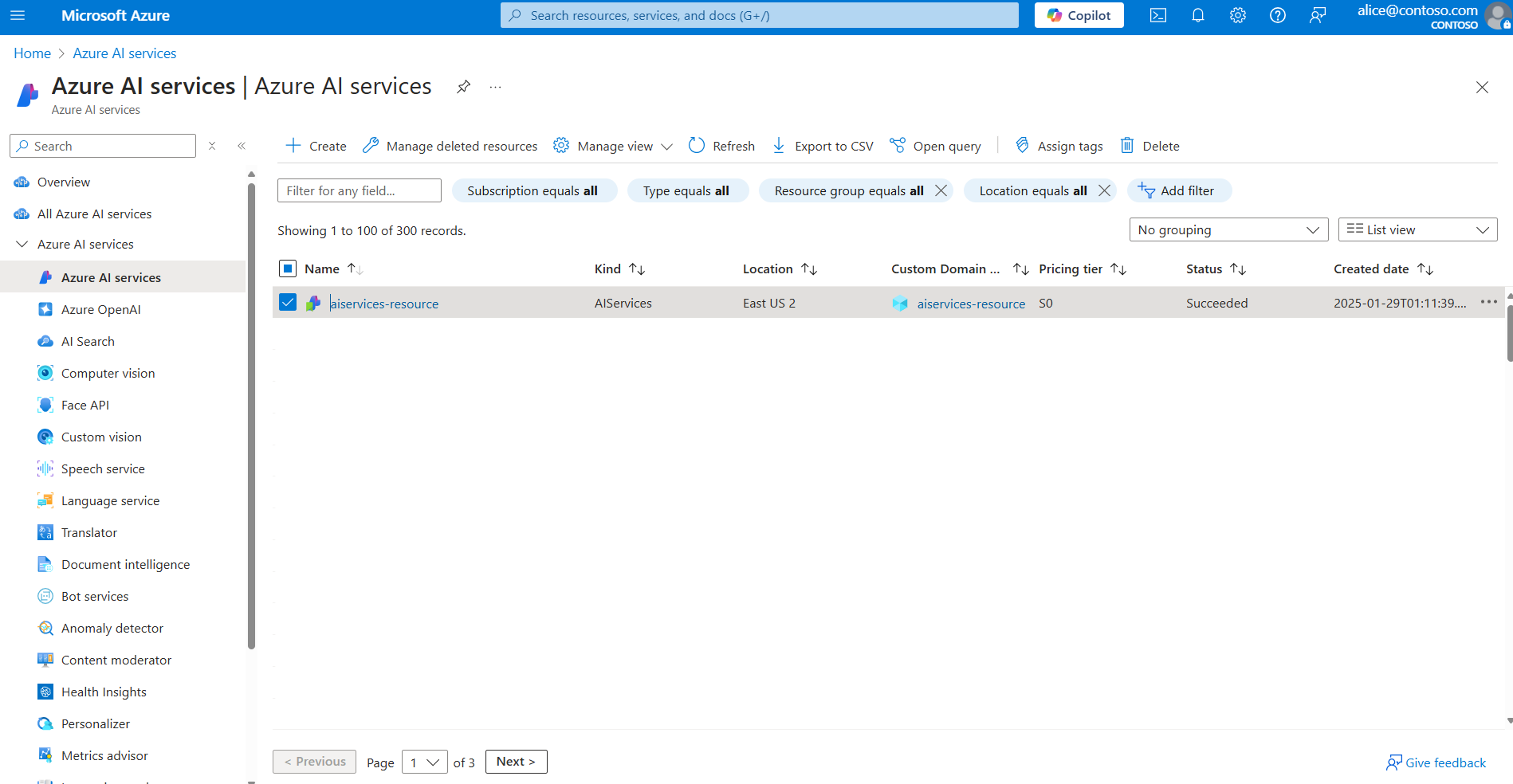

Go to the Azure portal and locate the Azure AI Services resource you're using. If you're using Azure AI Foundry with projects or hubs, you can navigate to it by:

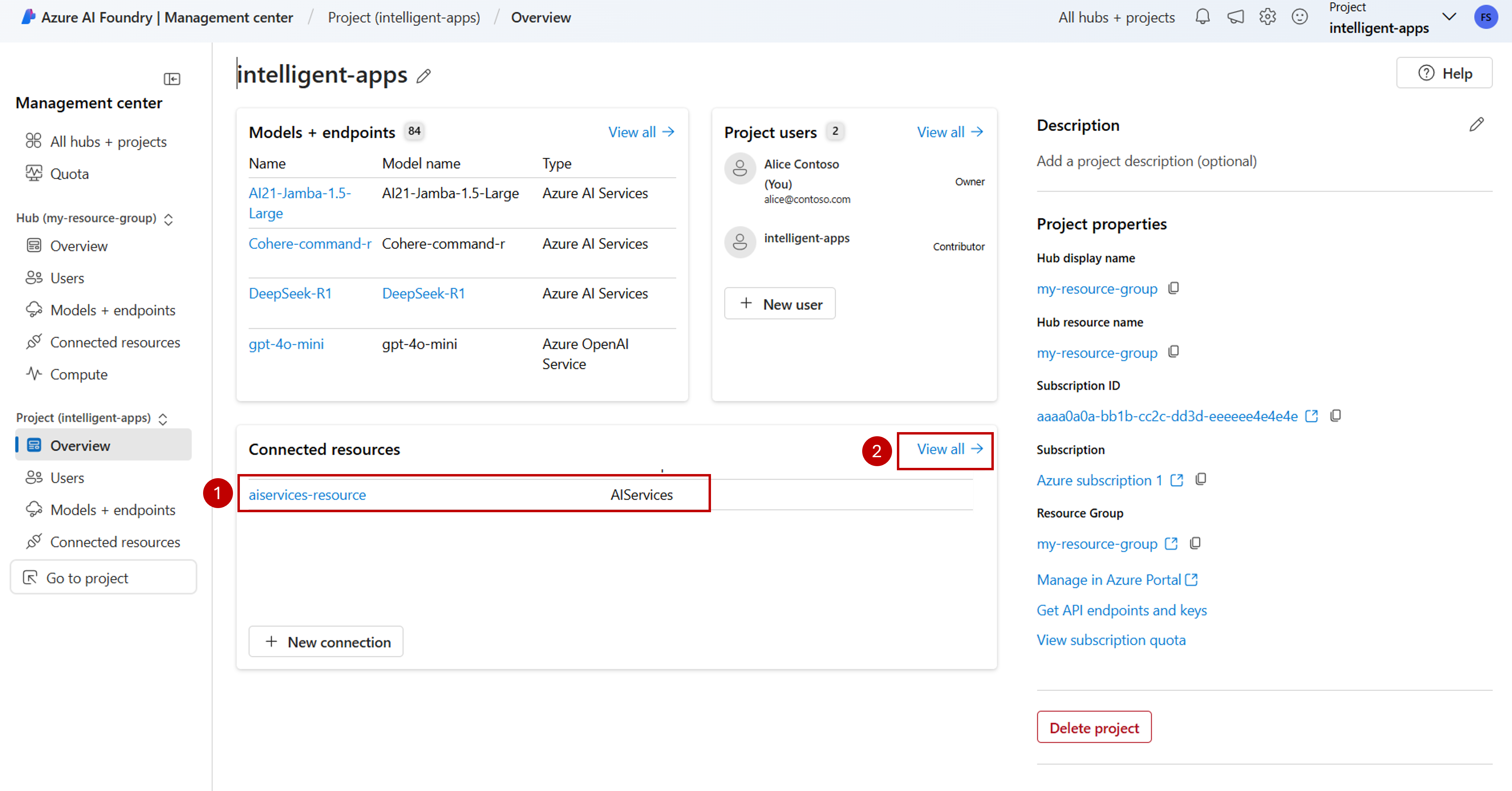

Go to Azure AI Foundry portal.

On the landing page, select Open management center.

Go to the section Connected resources and select the connection to the Azure AI Services resource that you want to configure. If it isn't listed, select View all to see the full list.

On the Connection details section, under Resource, select the name of the Azure resource. A new page opens.

You're now in the Azure portal, where you can manage all aspects of the resource itself.

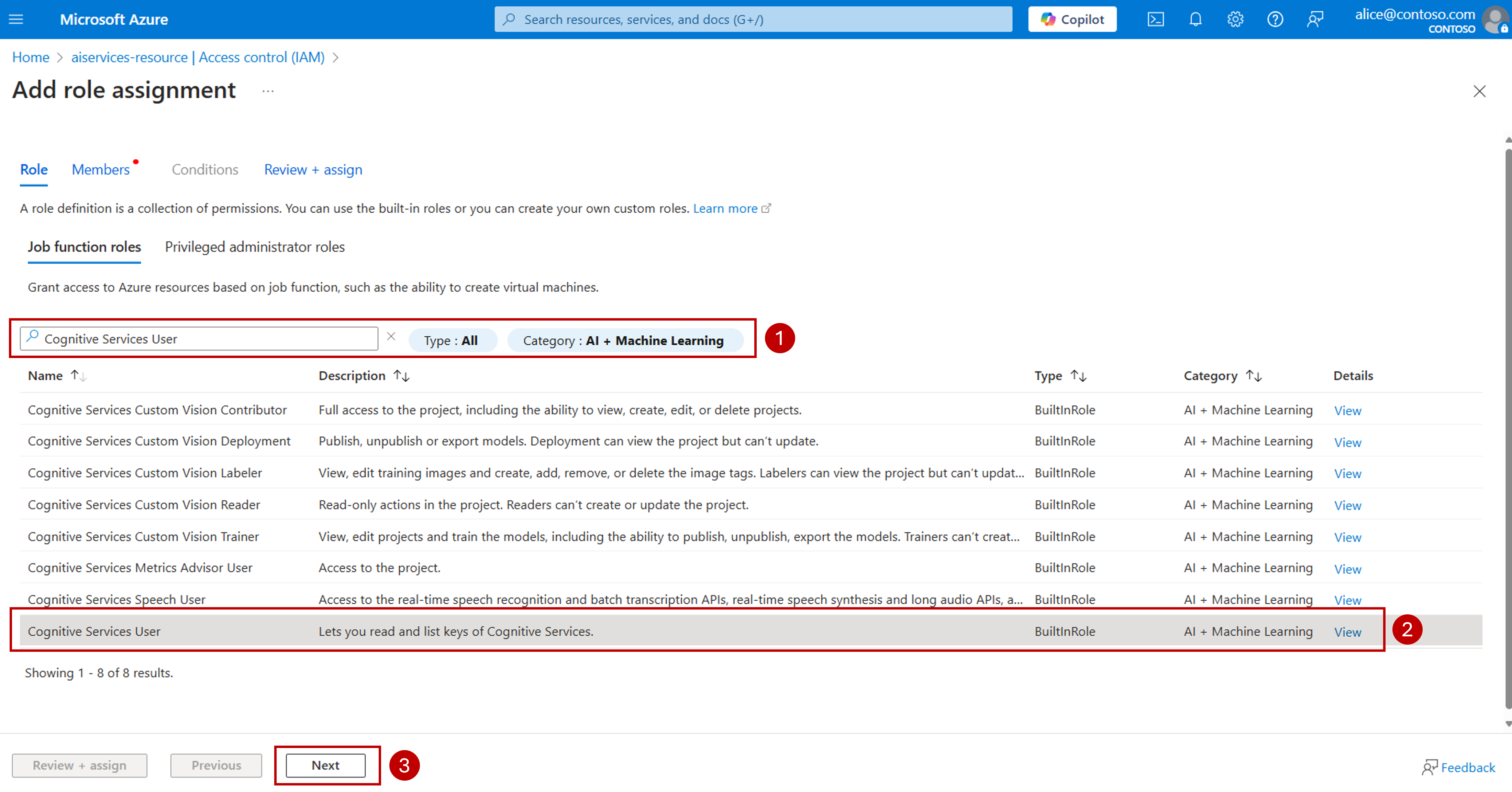

On the left navigation bar, select Access control (IAM) and then select Add > Add role assignment.

Tip

Use the View my access option to verify which roles are already assigned to you.

On Job function roles, type Cognitive Services User. The list of roles is filtered.

Select the role and select Next.

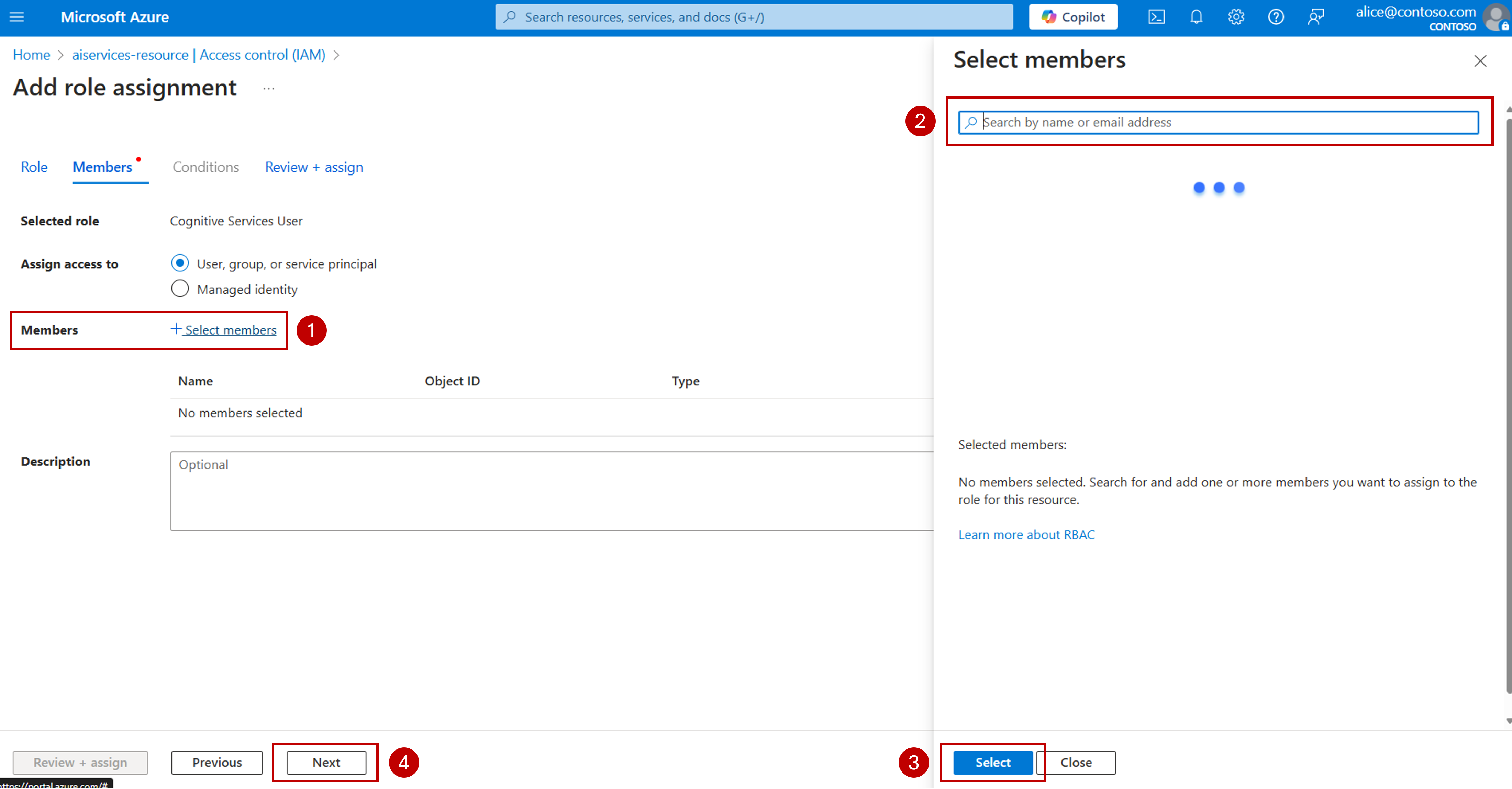

On Members, select the user or group you want to grant access to. We recommend using security groups whenever possible as they are easier to manage and maintain.

Select Next and finish the wizard.

The selected user can now use Microsoft Entra ID for inference.

Tip

Keep in mind that Azure role assignments may take up to five minutes to propagate. When working with security groups, adding or removing users from the security group propagates immediately.

Note that key-based access is still possible for users who already have keys available to them. If you want to revoke the keys, in the Azure portal, on the left navigation, select Resource Management > Keys and Endpoints > Regenerate Key1 and Regenerate Key2.
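Because role assignments can take up to five minutes to propagate, the first calls made right after an assignment may fail with an authorization error. The following is a minimal sketch of a retry helper for that window. The function name, its parameters, and the 15-second interval are assumptions made for illustration; how you detect an authorization failure depends on the client library you use.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_propagation_retry(
    call: Callable[[], T],
    is_auth_error: Callable[[Exception], bool],
    timeout_s: float = 300.0,  # five minutes, the propagation window mentioned above
    interval_s: float = 15.0,  # assumed polling interval
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Retry `call` while it fails with an authorization error, until the timeout."""
    deadline = clock() + timeout_s
    while True:
        try:
            return call()
        except Exception as exc:
            # Re-raise unrelated errors, or give up once another wait would pass the deadline.
            if not is_auth_error(exc) or clock() + interval_s > deadline:
                raise
            sleep(interval_s)
```

The `sleep` and `clock` parameters exist only so the helper can be exercised in tests without waiting; in normal use the defaults are enough.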

Use Microsoft Entra ID in your code

Once you've configured Microsoft Entra ID in your resource, you need to update your code to use it when consuming the inference endpoint. The following example shows how to use a chat completions model:

Install the packages azure-ai-inference and azure-identity using your package manager, like pip:

pip install azure-ai-inference azure-identity

Then, you can use the package to consume the model. The following example shows how to create a client to consume chat completions with Entra ID:

    import os

    from azure.ai.inference import ChatCompletionsClient
    from azure.identity import DefaultAzureCredential

    client = ChatCompletionsClient(
        endpoint="https://<resource>.services.ai.azure.com/models",
        credential=DefaultAzureCredential(),
        credential_scopes=["https://cognitiveservices.azure.com/.default"],
    )
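If you call the endpoint over REST instead of through the SDK, the same Microsoft Entra ID token travels as a bearer token in the Authorization header. The following standard-library sketch only builds such a request and doesn't send it. The `/chat/completions` route, the `api-version` value, and the `build_chat_request` helper are assumptions for illustration; the token string would come from a credential, for example `DefaultAzureCredential().get_token("https://cognitiveservices.azure.com/.default").token`.

```python
import json
import urllib.request

API_VERSION = "2024-05-01-preview"  # assumption: use the API version your resource supports


def build_chat_request(endpoint: str, token: str, deployment: str, prompt: str) -> urllib.request.Request:
    """Build (but don't send) a chat completions request authenticated with a bearer token."""
    body = {
        "model": deployment,  # name of the model deployment
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/chat/completions?api-version={API_VERSION}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    "https://<resource>.services.ai.azure.com/models",
    "<access-token>",
    "<model-deployment-name>",
    "Hello",
)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; that step is left out here so the sketch runs without network access or credentials.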

Options for credential when using Microsoft Entra ID

DefaultAzureCredential is an opinionated, ordered sequence of mechanisms for authenticating to Microsoft Entra ID. Each authentication mechanism is a class derived from the TokenCredential class and is known as a credential. At runtime, DefaultAzureCredential attempts to authenticate using the first credential. If that credential fails to acquire an access token, the next credential in the sequence is attempted, and so on, until an access token is successfully obtained. In this way, your app can use different credentials in different environments without writing environment-specific code.

When the preceding code runs on your local development workstation, it looks in the environment variables for an application service principal or at locally installed developer tools, such as Visual Studio, for a set of developer credentials. Either approach can be used to authenticate the app to Azure resources during local development.

When deployed to Azure, this same code can also authenticate your app to other Azure resources. DefaultAzureCredential can retrieve environment settings and managed identity configurations to authenticate to other services automatically.
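The fallback behavior described in this section can be sketched without the Azure SDK. The following is a conceptual illustration only, not how azure-identity is implemented: `CredentialUnavailable`, `first_available_token`, and the provider functions are hypothetical names standing in for real credential classes.

```python
from typing import Callable, List, Sequence, Tuple


class CredentialUnavailable(Exception):
    """Raised by a provider that can't supply a token in this environment."""


def first_available_token(providers: Sequence[Tuple[str, Callable[[], str]]]) -> Tuple[str, str]:
    """Try each provider in order and return (provider name, token) from the first that succeeds."""
    errors: List[str] = []
    for name, get_token in providers:
        try:
            return name, get_token()
        except CredentialUnavailable as exc:
            errors.append(f"{name}: {exc}")  # remember why it failed, then fall through
    raise CredentialUnavailable("; ".join(errors))


def from_environment() -> str:
    raise CredentialUnavailable("no service principal variables set")


def from_developer_tools() -> str:
    return "token-from-developer-tools"  # stand-in for a locally cached developer login


used, token = first_available_token(
    [("environment", from_environment), ("developer tools", from_developer_tools)]
)
print(used)
```

The order of the sequence is what makes the chain "opinionated": the same list yields a different winner on a workstation than on an Azure host with a managed identity.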

Best practices

Use deterministic credentials in production environments: Strongly consider moving from DefaultAzureCredential to one of the following deterministic solutions in production environments:

- A specific TokenCredential implementation, such as ManagedIdentityCredential. See the Derived list for options.

- A pared-down ChainedTokenCredential implementation optimized for the Azure environment in which your app runs. ChainedTokenCredential essentially creates a specific allowlist of acceptable credential options, such as ManagedIdentity for production and VisualStudioCredential for development.

Configure system-assigned or user-assigned managed identities to the Azure resources where your code is running if possible. Configure Microsoft Entra ID access to those specific identities.

Troubleshooting

Before troubleshooting, verify that you have the right permissions assigned:

Go to the Azure portal and locate the Azure AI Services resource you're using.

On the left navigation bar, select Access control (IAM) and then select Check access.

Type the name of the user or identity you are using to connect to the service.

Verify that the role Cognitive Services User is listed (or a role that contains the required permissions as explained in Prerequisites).

Important

Roles like Owner or Contributor don't provide access via Microsoft Entra ID.

If not listed, follow the steps in this guide before continuing.

The following table contains multiple scenarios that can help you troubleshoot Microsoft Entra ID:

| Error / Scenario | Root cause | Solution |
|---|---|---|
| You're using an SDK. | Known issues. | Before troubleshooting further, install the latest version of the software you're using to connect to the service. Authentication bugs may have been fixed in a newer version. |
| 401 Principal does not have access to API/Operation | The request is authenticated correctly; however, the user principal doesn't have the required permissions to use the inference endpoint. | Ensure you have: 1. Assigned the role Cognitive Services User to your principal in the Azure AI Services resource. 2. Waited at least 5 minutes before making the first call. |
| 401 HTTP/1.1 401 PermissionDenied | The request is authenticated correctly; however, the user principal doesn't have the required permissions to use the inference endpoint. | Assign the role Cognitive Services User to your principal in the Azure AI Services resource. Roles like Administrator or Contributor don't grant inference access. Wait at least 5 minutes before making the first call. |
| You're using REST API calls and you get 401 Unauthorized. Access token is missing, invalid, audience is incorrect, or have expired. | The request is failing to perform authentication with Entra ID. | Ensure the Authorization header contains a valid token with the scope https://cognitiveservices.azure.com/.default. |
| You're using the AzureOpenAI class and you get 401 Unauthorized. Access token is missing, invalid, audience is incorrect, or have expired. | The request is failing to perform authentication with Entra ID. | Ensure that you're using an OpenAI model connected to the endpoint https://<resource>.openai.azure.com. You can't use the OpenAI class or a Models-as-a-Service model. If your model isn't from OpenAI, use the Azure AI Inference SDK. |
| You're using the Azure AI Inference SDK and you get 401 Unauthorized. Access token is missing, invalid, audience is incorrect, or have expired. | The request is failing to perform authentication with Entra ID. | Ensure you're connected to the endpoint https://<resource>.services.ai.azure.com/models and that you indicated the right scope for Entra ID (https://cognitiveservices.azure.com/.default). |
| 404 Not found | The endpoint URL is incorrect for the SDK you're using, or the model deployment doesn't exist. | Ensure you're using the right SDK connected to the right endpoint: 1. If you're using the Azure AI Inference SDK, ensure the endpoint is https://<resource>.services.ai.azure.com/models with model="<model-deployment-name>" in the payloads, or the endpoint is https://<resource>.openai.azure.com/deployments/<model-deployment-name>. 2. If you're using the AzureOpenAI class, ensure the endpoint is https://<resource>.openai.azure.com. |
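When a 401 response mentions an incorrect audience, it can help to look at the `aud` claim of the access token you're sending. The following diagnostic sketch decodes the token payload with the standard library. It doesn't verify the signature, so use it for troubleshooting only, never for authorization decisions. The expected audience value is an assumption derived from the scope used in this article, and the function names are hypothetical.

```python
import base64
import json

# Assumption: tokens requested with https://cognitiveservices.azure.com/.default carry this audience.
EXPECTED_AUDIENCE = "https://cognitiveservices.azure.com"


def token_claims(token: str) -> dict:
    """Decode the (unverified) payload segment of a JWT access token."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the base64 padding that JWTs strip
    return json.loads(base64.urlsafe_b64decode(payload))


def has_expected_audience(token: str, expected: str = EXPECTED_AUDIENCE) -> bool:
    """Return True when the token's aud claim matches the expected audience."""
    audience = str(token_claims(token).get("aud", ""))
    return audience.rstrip("/") == expected.rstrip("/")
```

A token whose audience is a different service, such as the Azure Resource Manager endpoint, was requested with the wrong scope and needs to be acquired again with the scope shown in the table.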

Use Microsoft Entra ID in your project

Even when your resource has Microsoft Entra ID configured, your projects may still be using keys to consume predictions from the resource. When using the Azure AI Foundry playground, the credentials associated with the connection your project has are used.

To change this behavior, you have to update the connections from your projects to use Microsoft Entra ID. Follow these steps:

Go to Azure AI Foundry portal.

Navigate to the projects or hubs that are using the Azure AI Services resource through a connection.

Select Management center.

Go to the section Connected resources and select the connection to the Azure AI Services resource that you want to configure. If it's not listed, select View all to see the full list.

On the Connection details section, next to Access details, select the edit icon.

Under Authentication, change the value to Microsoft Entra ID.

Select Update.

Your connection is configured to work with Microsoft Entra ID now.

Disable key-based authentication in the resource

Disabling key-based authentication is advisable once you've implemented Microsoft Entra ID and fully addressed compatibility or fallback concerns in all the applications that consume the service. Disabling key-based authentication is only available when deploying using Bicep/ARM.


Prerequisites

To complete this article, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

An Azure AI services resource. For more information, see Create an Azure AI Services resource.

An account with Microsoft.Authorization/roleAssignments/write and Microsoft.Authorization/roleAssignments/delete permissions, such as the Role Based Access Control Administrator role.

To assign a role, you must specify three elements:

- Security principal: e.g. your user account.

- Role definition: the Cognitive Services User role.

- Scope: the Azure AI Services resource.

If you want to create a custom role definition instead of using Cognitive Services User role, ensure the role has the following permissions:

    {
      "permissions": [
        {
          "dataActions": [
            "Microsoft.CognitiveServices/accounts/MaaS/*"
          ]
        }
      ]
    }

Install the Azure CLI.

Identify the following information:

Your Azure subscription ID.

Your Azure AI Services resource name.

The resource group where the Azure AI Services resource is deployed.

Configure Microsoft Entra ID for inference

Follow these steps to configure Microsoft Entra ID for inference in your Azure AI Services resource:

Log in to your Azure subscription:

    az login

If you have more than one subscription, select the subscription where your resource is located:

    az account set --subscription "<subscription-id>"

Set the following environment variables with the name of the Azure AI Services resource you plan to use and its resource group:

    ACCOUNT_NAME="<ai-services-resource-name>"
    RESOURCE_GROUP="<resource-group>"

Get the full resource ID of your resource:

    RESOURCE_ID=$(az resource show -g $RESOURCE_GROUP -n $ACCOUNT_NAME --resource-type "Microsoft.CognitiveServices/accounts" --query id --output tsv)

Get the object ID of the security principal you want to assign permissions to. The following examples show how to get the object ID associated with:

Your own logged-in account:

    OBJECT_ID=$(az ad signed-in-user show --query id --output tsv)

A security group:

    OBJECT_ID=$(az ad group show --group "<group-name>" --query id --output tsv)

A service principal:

    OBJECT_ID=$(az ad sp show --id "<service-principal-guid>" --query id --output tsv)

Assign the Cognitive Services User role to the security principal (scoped to the resource). By assigning a role, you're granting the security principal access to this resource.

    az role assignment create --assignee-object-id $OBJECT_ID --role "Cognitive Services User" --scope $RESOURCE_ID

The selected user can now use Microsoft Entra ID for inference.
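If you automate the role assignment from a script, for example to grant access to several principals, you might assemble the same command programmatically. The following sketch only builds the argument list for the `az role assignment create` command shown in the steps above and prints it; the `role_assignment_command` helper is a hypothetical name, and the IDs are placeholders.

```python
import shlex
from typing import List


def role_assignment_command(
    object_id: str,
    resource_id: str,
    role: str = "Cognitive Services User",
) -> List[str]:
    """Return the argument list for the role assignment command used in this article."""
    return [
        "az", "role", "assignment", "create",
        "--assignee-object-id", object_id,
        "--role", role,
        "--scope", resource_id,
    ]


command = role_assignment_command(
    "00000000-0000-0000-0000-000000000000",  # placeholder object ID
    "/subscriptions/sub-id/resourceGroups/my-rg/providers/Microsoft.CognitiveServices/accounts/my-account",
)
print(shlex.join(command))
```

Building the command as a list and passing it to `subprocess.run(command, check=True)` avoids shell quoting problems with the role name, which contains spaces.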

Tip

Keep in mind that Azure role assignments may take up to five minutes to propagate. Adding or removing users from a security group propagates immediately.



Prerequisites

To complete this article, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

An Azure AI services resource. For more information, see Create an Azure AI Services resource.

An account with Microsoft.Authorization/roleAssignments/write and Microsoft.Authorization/roleAssignments/delete permissions, such as the Role Based Access Control Administrator role.

To assign a role, you must specify three elements:

- Security principal: e.g. your user account.

- Role definition: the Cognitive Services User role.

- Scope: the Azure AI Services resource.

If you want to create a custom role definition instead of using Cognitive Services User role, ensure the role has the following permissions:

    {
      "permissions": [
        {
          "dataActions": [
            "Microsoft.CognitiveServices/accounts/MaaS/*"
          ]
        }
      ]
    }

Install the Azure CLI.

Identify the following information:

- Your Azure subscription ID.

About this tutorial

The example in this article is based on code samples contained in the Azure-Samples/azureai-model-inference-bicep repository. To run the commands locally without having to copy or paste file content, use the following commands to clone the repository and go to the folder for your coding language:

git clone https://github.com/Azure-Samples/azureai-model-inference-bicep

The files for this example are in:

cd azureai-model-inference-bicep/infra

Understand the resources

The tutorial helps you create:

- An Azure AI Services resource with key access disabled. For simplicity, this template doesn't deploy models.

- A role-assignment for a given security principal with the role Cognitive Services User.

You are using the following assets to create those resources:

Use the template modules/ai-services-template.bicep to describe your Azure AI Services resource:

modules/ai-services-template.bicep

    @description('Location of the resource.')
    param location string = resourceGroup().location

    @description('Name of the Azure AI Services account.')
    param accountName string

    @description('The resource model definition representing SKU')
    param sku string = 'S0'

    @description('Whether or not to allow keys for this account.')
    param allowKeys bool = true

    @allowed([
      'Enabled'
      'Disabled'
    ])
    @description('Whether or not public endpoint access is allowed for this account.')
    param publicNetworkAccess string = 'Enabled'

    @allowed([
      'Allow'
      'Deny'
    ])
    @description('The default action for network ACLs.')
    param networkAclsDefaultAction string = 'Allow'

    resource account 'Microsoft.CognitiveServices/accounts@2023-05-01' = {
      name: accountName
      location: location
      identity: {
        type: 'SystemAssigned'
      }
      sku: {
        name: sku
      }
      kind: 'AIServices'
      properties: {
        customSubDomainName: accountName
        publicNetworkAccess: publicNetworkAccess
        networkAcls: {
          defaultAction: networkAclsDefaultAction
        }
        // Key-based (local) authentication is disabled when keys aren't allowed.
        disableLocalAuth: !allowKeys
      }
    }

    output endpointUri string = 'https://${account.name}.services.ai.azure.com/models'
    output id string = account.id

Tip

Notice that this template can take the parameter allowKeys which, when false, disables the use of keys in the resource. This configuration is optional.

Use the template modules/role-assignment-template.bicep to describe a role assignment in Azure:

modules/role-assignment-template.bicep

    @description('Specifies the role definition ID used in the role assignment.')
    param roleDefinitionID string

    @description('Specifies the principal ID assigned to the role.')
    param principalId string

    @description('Specifies the resource ID of the resource to assign the role to.')
    param scopeResourceId string = resourceGroup().id

    var roleAssignmentName = guid(principalId, roleDefinitionID, scopeResourceId)

    resource roleAssignment 'Microsoft.Authorization/roleAssignments@2022-04-01' = {
      name: roleAssignmentName
      properties: {
        roleDefinitionId: resourceId('Microsoft.Authorization/roleDefinitions', roleDefinitionID)
        principalId: principalId
      }
    }

    output name string = roleAssignment.name
    output resourceId string = roleAssignment.id

Create the resources

In your console, follow these steps:

Define the main deployment:

deploy-entra-id.bicep

@description('Location to create the resources in')
param location string = resourceGroup().location

@description('Name of the resource group to create the resources in')
param resourceGroupName string = resourceGroup().name

@description('Name of the AI Services account to create')
param accountName string = 'azurei-models-dev'

@description('ID of the developers to assign the user role to')
param securityPrincipalId string

module aiServicesAccount 'modules/ai-services-template.bicep' = {
  name: 'aiServicesAccount'
  scope: resourceGroup(resourceGroupName)
  params: {
    accountName: accountName
    location: location
    allowKeys: false
  }
}

module roleAssignmentDeveloperAccount 'modules/role-assignment-template.bicep' = {
  name: 'roleAssignmentDeveloperAccount'
  scope: resourceGroup(resourceGroupName)
  params: {
    roleDefinitionID: 'a97b65f3-24c7-4388-baec-2e87135dc908' // Azure Cognitive Services User
    principalId: securityPrincipalId
  }
}

output endpoint string = aiServicesAccount.outputs.endpointUri

Log into Azure:

az login

Ensure you are in the right subscription:

az account set --subscription "<subscription-id>"

Run the deployment:

RESOURCE_GROUP="<resource-group-name>"
SECURITY_PRINCIPAL_ID="<your-security-principal-id>"

az deployment group create \
  --resource-group $RESOURCE_GROUP \
  --template-file deploy-entra-id.bicep \
  --parameters securityPrincipalId=$SECURITY_PRINCIPAL_ID

The template outputs the Azure AI model inference endpoint that you can use to consume any of the model deployments you have created.

Use Microsoft Entra ID in your code

After you configure Microsoft Entra ID in your resource, update your code to use it when consuming the inference endpoint. The following example shows how to use a chat completions model:

Install the packages azure-ai-inference and azure-identity using your package manager, like pip:

pip install azure-ai-inference azure-identity

Then, you can use the package to consume the model. The following example shows how to create a client to consume chat completions with Entra ID:

import os
from azure.ai.inference import ChatCompletionsClient
from azure.identity import DefaultAzureCredential

client = ChatCompletionsClient(
    endpoint="https://<resource>.services.ai.azure.com/models",
    credential=DefaultAzureCredential(),
    credential_scopes=["https://cognitiveservices.azure.com/.default"],
)
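With the client created, sending a request might look like the following minimal sketch. The helper name ask and the deployment name passed to it are illustrative, not part of the SDK. The sketch assumes a recent azure-ai-inference version in which complete accepts messages as plain dictionaries and a model argument that names the deployment.

```python
def ask(client, deployment_name, question):
    """Send one user question and return the text of the first choice.

    `client` is assumed to be a ChatCompletionsClient authenticated with
    Microsoft Entra ID; `deployment_name` is a placeholder for the name of
    your model deployment.
    """
    response = client.complete(
        model=deployment_name,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": question},
        ],
    )
    # The generated text lives on the message of the first returned choice.
    return response.choices[0].message.content
```

For example, print(ask(client, "<model-deployment-name>", "How many languages are in the world?")) would print the model's answer if the principal has the required role on the resource.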

Options for credential when using Microsoft Entra ID

DefaultAzureCredential is an opinionated, ordered sequence of mechanisms for authenticating to Microsoft Entra ID. Each authentication mechanism is a class derived from the TokenCredential class and is known as a credential. At runtime, DefaultAzureCredential attempts to authenticate using the first credential. If that credential fails to acquire an access token, the next credential in the sequence is attempted, and so on, until an access token is successfully obtained. In this way, your app can use different credentials in different environments without writing environment-specific code.

When the preceding code runs on your local development workstation, it looks in the environment variables for an application service principal or at locally installed developer tools, such as Visual Studio, for a set of developer credentials. Either approach can be used to authenticate the app to Azure resources during local development.

When deployed to Azure, this same code can also authenticate your app to other Azure resources. DefaultAzureCredential can retrieve environment settings and managed identity configurations to authenticate to other services automatically.
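The fallback behavior described above can be pictured with a small, self-contained sketch. It is not the azure-identity implementation: the classes FirstAvailableCredential, FakeEnvironmentCredential, FakeCliCredential, and the CredentialUnavailable exception are hypothetical stand-ins that only illustrate how an ordered chain tries each credential until one returns a token.

```python
class CredentialUnavailable(Exception):
    """Raised by a credential that can't produce a token in this environment."""


class FirstAvailableCredential:
    """Illustrative chain: tries each credential in order (hypothetical class)."""

    def __init__(self, *credentials):
        self._credentials = credentials

    def get_token(self, scope):
        errors = []
        for credential in self._credentials:
            try:
                # The first credential that succeeds wins; the rest are skipped.
                return credential.get_token(scope)
            except CredentialUnavailable as exc:
                errors.append(f"{type(credential).__name__}: {exc}")
        # Every credential failed: report all reasons together.
        raise CredentialUnavailable("; ".join(errors))


class FakeEnvironmentCredential:
    """Stand-in for a credential that is not configured on this machine."""

    def get_token(self, scope):
        raise CredentialUnavailable("environment variables not set")


class FakeCliCredential:
    """Stand-in for a developer-tool credential that is signed in."""

    def get_token(self, scope):
        return f"token-for-{scope}"


chain = FirstAvailableCredential(FakeEnvironmentCredential(), FakeCliCredential())
token = chain.get_token("https://cognitiveservices.azure.com/.default")
```

Here the first credential fails and the second one supplies the token, which is why the same code can work unchanged on a workstation and in Azure.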

Best practices

Use deterministic credentials in production environments: Strongly consider moving from DefaultAzureCredential to one of the following deterministic solutions on production environments:

- A specific TokenCredential implementation, such as ManagedIdentityCredential. See the Derived list for options.

- A pared-down ChainedTokenCredential implementation optimized for the Azure environment in which your app runs. ChainedTokenCredential essentially creates a specific allowlist of acceptable credential options, such as ManagedIdentity for production and VisualStudioCredential for development.

Configure system-assigned or user-assigned managed identities to the Azure resources where your code is running if possible. Configure Microsoft Entra ID access to those specific identities.
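One way to apply these practices is sketched below, using the azure-identity classes ManagedIdentityCredential, AzureCliCredential, and ChainedTokenCredential. The environment variable APP_ENVIRONMENT and the helper names are assumptions made for illustration, and AzureCliCredential stands in here for the development credential.

```python
import os


def resolve_environment(env=None):
    """Return "production" or "development" from a hypothetical APP_ENVIRONMENT variable."""
    env = os.environ if env is None else env
    value = env.get("APP_ENVIRONMENT", "").lower()
    return "production" if value == "production" else "development"


def build_credential(environment):
    """Build a deterministic credential for the given environment."""
    # Imported lazily so the helper above works without azure-identity installed.
    from azure.identity import (
        AzureCliCredential,
        ChainedTokenCredential,
        ManagedIdentityCredential,
    )

    if environment == "production":
        # Only the managed identity of the hosting resource is accepted.
        return ManagedIdentityCredential()
    # Pared-down chain for development: managed identity first, then `az login`.
    return ChainedTokenCredential(ManagedIdentityCredential(), AzureCliCredential())
```

The result of build_credential(resolve_environment()) can be passed as the credential argument of ChatCompletionsClient in place of DefaultAzureCredential().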

Troubleshooting

Before troubleshooting, verify that you have the right permissions assigned:

Go to the Azure portal and locate the Azure AI Services resource you're using.

On the left navigation bar, select Access control (IAM) and then select Check access.

Type the name of the user or identity you are using to connect to the service.

Verify that the role Cognitive Services User is listed (or a role that contains the required permissions as explained in Prerequisites).

Important

Roles like Owner or Contributor don't provide access via Microsoft Entra ID.

If not listed, follow the steps in this guide before continuing.

The following table contains multiple scenarios that can help you troubleshoot Microsoft Entra ID:

| Error / Scenario | Root cause | Solution |
|---|---|---|
| You're using an SDK. | Known issues. | Before troubleshooting further, install the latest version of the software you're using to connect to the service. Authentication bugs may have been fixed in a newer version. |
| 401 Principal does not have access to API/Operation | The request indicates authentication in the correct way; however, the user principal doesn't have the required permissions to use the inference endpoint. | Ensure you have: 1. Assigned the role Cognitive Services User to your principal on the Azure AI Services resource. 2. Waited at least 5 minutes before making the first call. |
| 401 HTTP/1.1 401 PermissionDenied | The request indicates authentication in the correct way; however, the user principal doesn't have the required permissions to use the inference endpoint. | Assign the role Cognitive Services User to your principal on the Azure AI Services resource. Roles like Administrator or Contributor don't grant inference access. Wait at least 5 minutes before making the first call. |
| You're using REST API calls and you get 401 Unauthorized. Access token is missing, invalid, audience is incorrect, or have expired. | The request is failing to perform authentication with Entra ID. | Ensure the Authorization header contains a valid token with the scope https://cognitiveservices.azure.com/.default. |
| You're using the AzureOpenAI class and you get 401 Unauthorized. Access token is missing, invalid, audience is incorrect, or have expired. | The request is failing to perform authentication with Entra ID. | Ensure that you are using an OpenAI model connected to the endpoint https://<resource>.openai.azure.com. You can't use the OpenAI class or a Models-as-a-Service model. If your model is not from OpenAI, use the Azure AI Inference SDK. |
| You're using the Azure AI Inference SDK and you get 401 Unauthorized. Access token is missing, invalid, audience is incorrect, or have expired. | The request is failing to perform authentication with Entra ID. | Ensure you're connected to the endpoint https://<resource>.services.ai.azure.com/models and that you indicated the right scope for Entra ID (https://cognitiveservices.azure.com/.default). |
| 404 Not found | The endpoint URL is incorrect based on the SDK you are using, or the model deployment doesn't exist. | Ensure you are using the right SDK connected to the right endpoint: 1. If you are using the Azure AI Inference SDK, ensure the endpoint is https://<resource>.services.ai.azure.com/models with model="<model-deployment-name>" in the payloads, or the endpoint is https://<resource>.openai.azure.com/deployments/<model-deployment-name>. 2. If you are using the AzureOpenAI class, ensure the endpoint is https://<resource>.openai.azure.com. |
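For the REST scenario in the table, the following sketch shows how a request with a bearer token might be assembled using only the Python standard library. The function name build_chat_request is illustrative, and the api-version value 2024-05-01-preview and the chat/completions route are assumptions that you should check against the REST reference for your resource. The token itself is assumed to have been obtained separately for the scope https://cognitiveservices.azure.com/.default.

```python
import json
import urllib.request


def build_chat_request(resource, token, deployment_name, question,
                       api_version="2024-05-01-preview"):
    """Build (but don't send) a chat completions request that uses Entra ID.

    The api-version default and the route are assumptions for illustration.
    """
    url = (
        f"https://{resource}.services.ai.azure.com/models"
        f"/chat/completions?api-version={api_version}"
    )
    body = {
        "model": deployment_name,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The token goes in the Authorization header as a bearer token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to urllib.request.urlopen sends the request. A 401 response at that point suggests checking the token's scope and the role assignment as described in the table.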

Disable key-based authentication in the resource

Disabling key-based authentication is advisable after you have implemented Microsoft Entra ID and fully addressed compatibility or fallback concerns in all the applications that consume the service. You can achieve it by setting the property disableLocalAuth to true:

modules/ai-services-template.bicep

@description('Location of the resource.')
param location string = resourceGroup().location

@description('Name of the Azure AI Services account.')
param accountName string

@description('The resource model definition representing SKU')
param sku string = 'S0'

@description('Whether or not to allow keys for this account.')
param allowKeys bool = true

@allowed([
  'Enabled'
  'Disabled'
])
@description('Whether or not public endpoint access is allowed for this account.')
param publicNetworkAccess string = 'Enabled'

@allowed([
  'Allow'
  'Deny'
])
@description('The default action for network ACLs.')
param networkAclsDefaultAction string = 'Allow'

resource account 'Microsoft.CognitiveServices/accounts@2023-05-01' = {
  name: accountName
  location: location
  identity: {
    type: 'SystemAssigned'
  }
  sku: {
    name: sku
  }
  kind: 'AIServices'
  properties: {
    customSubDomainName: accountName
    publicNetworkAccess: publicNetworkAccess
    networkAcls: {
      defaultAction: networkAclsDefaultAction
    }
    // Local (key-based) authentication is disabled when keys are not allowed.
    disableLocalAuth: !allowKeys
  }
}

output endpointUri string = 'https://${account.name}.services.ai.azure.com/models'
output id string = account.id