DSL 2010 Feature Dives: T4 Preprocessing - Part Two - Basic Design

Last time we looked at the "why" of T4 preprocessing - now let's look at an overview of the how.

To explain the new features, it's helpful to have a look at the current way data flows through T4 2008. Let's suppose you have a trivial template generating some trivial output:

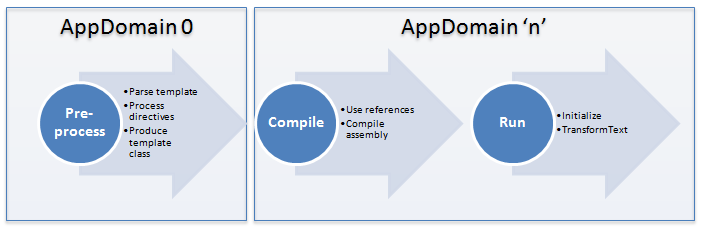

Now, to get from the template to its output, the T4 engine goes through three stages:

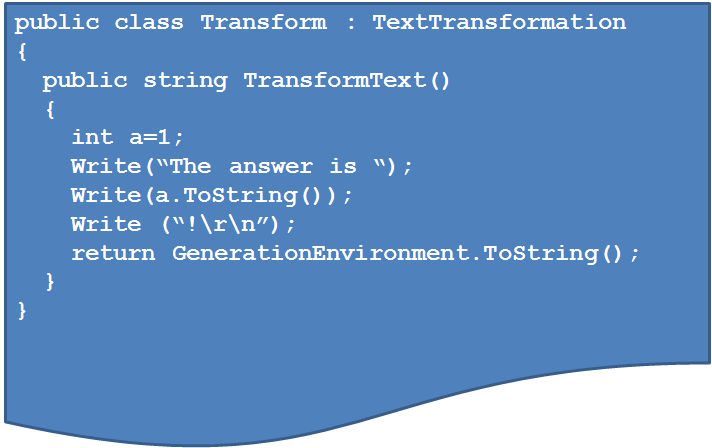

In the first stage, Preprocess, the template is parsed into a series of blocks and any directives found within it are acted on (for example, to set the output extension or to include a sub-template). A "template class" is then generated, containing the code that produces the final output of the template. In this case, the template class would look something like the following (I've simplified a bit):
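As a minimal sketch, assuming the template contains only the literal text `Hello World`, the generated class might take roughly this shape. The namespace `Sketch` and the class name `GeneratedTextTransformation` are illustrative assumptions, and the `TextTransformation` shown here is a tiny stand-in for the real T4 base class, included only so that the example compiles on its own.

```csharp
using System.Text;

namespace Sketch
{
    // Stand-in for the T4-supplied base class (assumption: the real class
    // offers more helpers than the two shown here).
    public abstract class TextTransformation
    {
        private readonly StringBuilder generationEnvironment = new StringBuilder();

        // The buffer that every Write call appends to.
        protected StringBuilder GenerationEnvironment
        {
            get { return generationEnvironment; }
        }

        public void Write(string text) { GenerationEnvironment.Append(text); }
        public void WriteLine(string text) { GenerationEnvironment.AppendLine(text); }

        public abstract string TransformText();
    }

    // Roughly what the Preprocess stage would emit for the trivial template.
    public class GeneratedTextTransformation : TextTransformation
    {
        public override string TransformText()
        {
            GenerationEnvironment.Clear();   // start from an empty buffer
            Write("Hello World");            // one Write call per text block
            return GenerationEnvironment.ToString();
        }
    }
}
```

Each block of literal text in the template becomes a `Write` call, so the shape of `TransformText` mirrors the shape of the template itself.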

T4 supplies the base class TextTransformation, which contains the Write family of helpers; these simply append to a StringBuilder exposed by the GenerationEnvironment property.

The second stage takes the text of this class and compiles it using the CodeDOM to produce a temporary assembly.

The third stage loads the temp assembly, creates an instance of the template class and calls the TransformText method on it to produce the final output of the template.

So that's what happens at present. What do we need to change if we want to run the template in arbitrary applications?

Firstly, we need to split up the stages. Indeed, if the template is going to be built as part of some application, T4 doesn't need to get involved in Stage Two at all - the user will presumably have their build environment set up for compiling and deploying application code, and the template class will now just become part of that code. So we'll need new APIs and tools for Stage One that return the code for the template class, and we can ignore Stage Two.

For Stage Three, the host application will need to run the template. Clearly it can just call the TransformText method, as it has a trivial API, although it may have to do so via reflection if the set of templates is in any way dynamic: there is no interface implemented here, just a standard signature. (Actually, the new dynamic feature in C# 4.0 should make this a breeze.) Either way, the host has to know which class to instantiate, which tells us we'll need a way for the new Stage One API to specify more detail (such as the name and namespace) about the template class to be generated.
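To make the two invocation routes concrete, here is a hedged sketch of a host calling TransformText by reflection and via `dynamic`. Everything named here is hypothetical: `MyApp.Templates.GreetingTemplate` stands in for a preprocessed template class, and `TemplateRunner` is an illustrative helper, not part of T4.

```csharp
using System;
using System.Reflection;

namespace MyApp.Templates
{
    // Hypothetical preprocessed template class; name and namespace are assumptions.
    public class GreetingTemplate
    {
        public string TransformText() { return "Hello World"; }
    }
}

public static class TemplateRunner
{
    // Runs any template type that has a public, parameterless TransformText method.
    public static string RunByReflection(Type templateType)
    {
        object template = Activator.CreateInstance(templateType);
        MethodInfo method = templateType.GetMethod("TransformText", Type.EmptyTypes);
        if (method == null)
        {
            throw new MissingMethodException(templateType.FullName, "TransformText");
        }
        return (string)method.Invoke(template, null);
    }

    // The same call using C# 4.0 dynamic binding: the method is resolved at run time.
    public static string RunDynamically(object template)
    {
        dynamic d = template;
        return d.TransformText();
    }
}
```

A host would typically obtain the `Type` from wherever it discovers its templates, for example `typeof(MyApp.Templates.GreetingTemplate)` when the class is known at compile time.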

Finally, for Stage Three, the base class, TextTransformation, becomes a problem. This class lives in the T4 assembly, which only ships in Visual Studio, so you will likely not have it available in your deployment environment. Luckily, the contents of this base class are, on the whole, rather trivial helper methods. To address this, if you don't specify a base class, we'll make T4 generate the helpers directly into your template class, relieving you of the dependency. Alternatively, it's pretty easy to add a set of helpers to any base class in your own deployable code, so long as they provide the signatures that the template class needs.
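A rough sketch of the first option, with the helpers generated straight into the template class, might look like this. The class name `ReportTemplate`, its namespace, and the exact set of helpers are assumptions for illustration; the real generated code may differ.

```csharp
using System.Text;

namespace MyApp.Templates
{
    // Hypothetical template class with no T4 base class: the helpers that
    // TextTransformation would have supplied are members of the class itself.
    public partial class ReportTemplate
    {
        private readonly StringBuilder generationEnvironment = new StringBuilder();

        // The buffer that the Write helpers append to.
        protected StringBuilder GenerationEnvironment
        {
            get { return generationEnvironment; }
        }

        public void Write(string text) { GenerationEnvironment.Append(text); }
        public void WriteLine(string text) { GenerationEnvironment.AppendLine(text); }

        public virtual string TransformText()
        {
            GenerationEnvironment.Clear();   // start from an empty buffer
            Write("Hello ");
            WriteLine("World");
            return GenerationEnvironment.ToString();
        }
    }
}
```

The only types referenced come from the core .NET libraries, so the class can ship with the application without any Visual Studio assembly. For the second option, the same members would simply move into a base class of your own.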

Next time we'll go into detail on what APIs will be available and then fill in some details where I've oversimplified in this explanation.


Comments

Anonymous

November 13, 2008

With the pre-processed templates and WinForms binding of models, do you see VS 2010 enabling scenarios where the models can be reused outside of VS for interrogation, modification and transformation? In other words, will similar decoupling from the VS core be occurring in the modeling namespaces?

Anonymous

November 13, 2008

I guess you could say that the equivalent decoupling has always been there for modeling. If you look at this work as an exercise in using the pieces without any reference to the VS product assemblies, then the equivalent is the fact that the models serialize as easily parseable XML that can be read with regular .NET XML code. Now T4 will also spit out code that relies only on regular .NET code.