SQL Server 2014 – Updated VLF creation algorithm

Update: Someone just pointed out that Paul Randal beat me to posting on this exact topic by 2 days! Check out his post here: https://www.sqlskills.com/blogs/paul/important-change-vlf-creation-algorithm-sql-server-2014/

Something that I come across in many SQL Server environments is a high number of Virtual Log Files (VLFs) in database transaction logs. Just Bing the subject and you will find dozens of blogs & articles going back almost a decade describing why this is bad, how to determine how many you have and how many is too many. See below for a few good examples.

- Certain database operations take a very long duration to complete or encounter errors when the transaction log has numerous virtual log files

- High Virtual Log Files (VLF) Count

- Performance impact: a large number of virtual log files – Part I

In summary, the impact of a high number of VLFs can be:

- Slow database start up

- Slow database restores

- Slow update/insert performance

If there are so many articles on the subject, why create a new one?

I wanted to create a script that could be used to demonstrate the impacts of too many VLFs. The script would create three databases with their transaction logs having 16, 1000 and 10000 VLFs respectively which could then have a series of tests performed against them.

So I spun up my SQL Server 2014 instance (12.0.2456.0) to start testing.

Setting up the databases using the known VLF creation algorithm. Is my script broken?

VLFs are created when a transaction log grows (either manually or through auto-growth) and I was using the formula that Kimberly Tripp posted, which seems to be the most 'official' documentation I can find on this. Even our own Microsoft KB has a link to the SQLSkills blog! The formula is:

chunks less than 64MB and up to 64MB = 4 VLFs

chunks larger than 64MB and up to 1GB = 8 VLFs

chunks larger than 1GB = 16 VLFs

To create a database with a transaction log with 1000 VLFs should be simple then! I just need to grow the transaction log 249 times (my initial size is 10MB which means it starts with 4 VLFs to begin) in 10MB increments.

4 VLFS + (249 x 4 VLFs) = 1000 VLFs

10MB + (249 x 10MB) = 2500MB

I run the script and…. 277 VLFs. The size was as expected but why so few VLFs? Is my script broken? I am confused...

So I then ran the script against my SQL 2012 instance and got exactly 1000 VLFs as initially expecting. Now I am confused and suspicious.

What is going on?

After running numerous tests it became clear that at some point, 1 VLF would be created instead of the expected 4.

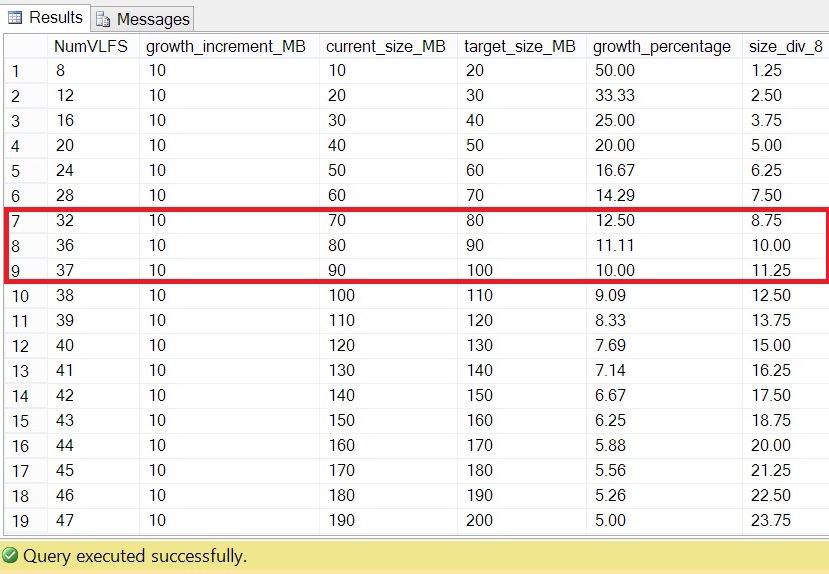

Below are the results of a test which show what is going on. Looks what happens between rows 7 to 9.

After extensive testing, I found that if the growth amount is less than the current size of the transaction log divided by 8, it will create 1 VLF. Further testing showed that this new algorithm is applied before reaching the old one

What is the new algorithm in SQL Server 2014?

If

growth < current size / 8 = 1 VLF

else

growth <= 64MB = 4 VLFs

growth > 64MB AND <= 1024MB = 8 VLFs

growth > 1024MB = 16 VLFS

Final thoughts

I suspect this change is to reduce the number of VLFs in databases that are created with default values which is great. By default, a transaction log has an initial size of 1MB and growth rate of 10%. If it were to auto grow to 1051MB it would have the below results

SQL 2012 and below = 416 VLFS

SQL 2014 = 77 VLFS

That is a lot less in SQL Server 2014 and should mean we should see fewer customer databases with VLF problems in the future.

I never got around to finishing my demo script after finding this change but will return to it one day soon.