Azure Batch for the IT Pro - Part 1

I spent some time working with Azure Batch for a customer, and what struck me was that it is not so easy for an IT Pro to create a meaningful test setup. The stumbling block is that you need an application that does meaningful work.

So what is Azure Batch? It is the PaaS version of High Performance Computing (HPC). Azure gives you the infrastructure; you supply the application and define the jobs and tasks that tell Azure what to do. Azure Batch is suited to intrinsically parallel workloads, such as scientific model calculations, video rendering, data reduction, etc.

My goal here is to set up an example that means something to the IT Pro who, like me, is familiar with Powershell but does not write .NET code in Visual Studio every day. The project is hosted on my Azure-Batch repo on Github.

Overview: Azure Batch walkthrough based on a Powershell application

This Azure Batch walkthrough creates a Batch account, storage account, package, a pool of VMs, and executes a job with multiple tasks to generate decimal representations of Mersenne prime numbers. If you just want to do the walkthrough, do the following steps; if you also want the why and how, read the background information as well.

- Make sure you have access to an Azure subscription. You will need to log on with credentials that have at least Contributor rights.

- Install the latest Azure Powershell modules.

- Download the two top-level scripts Create-BatchAccountMersenne.ps1 and Create-BatchJobsAndTasks.ps1.

- Run Create-BatchAccountMersenne.ps1.

- Open the Azure Portal, locate the (default) resource group named "rg-batch-walkthrough", and inspect it a bit.

- Run Create-BatchJobsAndTasks.ps1. This might take a while to complete.

- Inspect the jobs, tasks, and task output.

- Locate the Storage Account, open its File service, and find the share called (default) "mersenneshare". You should have the Mersenne primes right there.

This ends the walkthrough steps. Next up is a discussion of the Powershell code needed to create the Azure Batch infrastructure.

Creating the Batch Account using Powershell

The full script to create the Azure Batch walkthrough account is Create-BatchAccountMersenne.ps1. The code below is straight from the script, stripped of comments and extras. You can either execute the script itself after downloading, or run the snippets below one by one.

First, log on and select the appropriate subscription.

Add-AzureRmAccount

# select a subscription using select-azurermsubscription, if needed.

We need some definitions to lock down the configuration. They mostly speak for themselves. Note that the name Mersenne is used everywhere and should not be changed without a thorough investigation. The variable $PackageURL is the URL from which to download the actual (zip) package containing the code to be run. In this case, the application code is a Powershell script. Feel free to host the ZIP file wherever you like. The hash table $poolWindowsVersion maps a human-readable Windows version to the OS family number used for the pool VMs.

$ResourceGroupName = "rg-batch-walkthrough"

$Region = "Central US"

$BatchAccountNamePrefix = "walkthrough"

$WindowsVersion = "2016"

$Applicationname = "Mersenne"

$PoolName = "Pool1"

$ShareName ="mersenneshare"

$Nodecount = 2

$PackageURL = "https://github.com/wkasdorp/Azure-Batch/raw/master/ZIP/MersenneV1.zip"

$poolWindowsVersion = @{

"2012" = 3

"2012R2" = 4

"2016" = 5

}
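The lookup in this table can be guarded so that a typo in $WindowsVersion fails early instead of producing an empty value later on. The following is a minimal sketch; the function name Get-PoolOsFamily is hypothetical and not part of the original script.

```powershell
# Same mapping as above: readable Windows version -> OS family number.
$poolWindowsVersion = @{
    "2012"   = 3
    "2012R2" = 4
    "2016"   = 5
}

# Hypothetical helper (not in the original script): fail early on an unknown version.
function Get-PoolOsFamily ([string] $WindowsVersion)
{
    if (-not $poolWindowsVersion.ContainsKey($WindowsVersion))
    {
        $valid = ($poolWindowsVersion.Keys | Sort-Object) -join ", "
        throw "Unknown Windows version '$WindowsVersion'. Valid values: $valid"
    }
    $poolWindowsVersion[$WindowsVersion]
}

Get-PoolOsFamily -WindowsVersion "2016"    # returns 5
```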

The next bit is interesting: we need some globally unique names, preferably without having the user specify them or try variations until one is accepted. For this, we use a function that takes the ID of a resource group and mangles it into a pseudo-random string of lowercase letters. Take a look at an earlier post that explains this function in detail.

function Get-LowerCaseUniqueID ([string]$id, $length=8)

{

$hashArray = (New-Object System.Security.Cryptography.SHA512Managed).ComputeHash($id.ToCharArray())

-join ($hashArray[1..$length] | ForEach-Object { [char]($_ % 26 + [byte][char]'a') })

}
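To get a feel for what the function produces, here is a small stand-alone sketch. It uses a variant of the function that hashes the UTF-8 bytes of the ID explicitly (my assumption; for plain ASCII resource IDs this should amount to the same input as the original). The resource ID is made up for illustration.

```powershell
# Variant of the function above. Assumption: the UTF-8 bytes of the ID are hashed explicitly.
function Get-LowerCaseUniqueID ([string] $id, [int] $length = 8)
{
    $sha = [System.Security.Cryptography.SHA512]::Create()
    try
    {
        $hashArray = $sha.ComputeHash([System.Text.Encoding]::UTF8.GetBytes($id))
    }
    finally
    {
        $sha.Dispose()
    }
    # Map each selected hash byte to a letter a-z.
    -join ($hashArray[1..$length] | ForEach-Object { [char]($_ % 26 + [byte][char]'a') })
}

# Hypothetical resource ID, for illustration only.
$sampleId = "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-batch-walkthrough"

$suffix      = Get-LowerCaseUniqueID -id $sampleId
$batchName   = "walkthrough" + $suffix
$storageName = "sa$batchName"

"Suffix:          $suffix"
"Batch account:   $batchName ($($batchName.Length) characters)"
"Storage account: $storageName ($($storageName.Length) characters)"
```

The same ID always yields the same suffix, which is what makes the script restartable. The resulting storage account name is 21 characters long, which stays within the 24-character limit for storage account names, so a longer prefix would need some care.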

The business part starts here. First, we need a Resource Group. Because I anticipate that this part of the code may need to be re-run a couple of times, I made it restartable (meaning, do the smart thing if the Resource Group exists):

$ResourceGroup = $null

$ResourceGroup = Get-AzureRmResourceGroup -Name $ResourceGroupName -ErrorAction SilentlyContinue

if ($ResourceGroup -eq $null)

{

$ResourceGroup = New-AzureRmResourceGroup -Name $ResourceGroupName -Location $Region -ErrorAction Stop

}
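The "restartable" pattern used here (look the object up first, create it only when it is missing) can be tried out without touching Azure. The sketch below uses a folder in the temp directory as a stand-in for the Resource Group; the helper name Get-OrCreate is hypothetical.

```powershell
# Hypothetical helper: return what -Get finds, otherwise run -Create.
function Get-OrCreate ([scriptblock] $Get, [scriptblock] $Create)
{
    $existing = & $Get
    if ($null -ne $existing) { return $existing }
    & $Create
}

# Local stand-in for a resource group: a folder in the temp directory.
$path = Join-Path ([System.IO.Path]::GetTempPath()) "rg-batch-walkthrough-demo"
$get  = { Get-Item -Path $path -ErrorAction SilentlyContinue }

# First call creates the folder if it does not exist yet.
$first  = Get-OrCreate -Get $get -Create { New-Item -Path $path -ItemType Directory -ErrorAction Stop }

# Second call must find the existing folder and never reach the -Create block.
$second = Get-OrCreate -Get $get -Create { throw "not reached: the folder already exists" }

"First call:  $($first.FullName)"
"Second call: $($second.FullName)"
```

Running the snippet a second time behaves the same way, which is the point of making this part of the script restartable.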

An Azure Batch account needs a Storage Account to store packages and data, so we first create the Storage Account, and then provision the Batch Account with a reference to the Storage Account. We also create an SMB share in the Storage Account, because an SMB share is easy to write to from Powershell running in the pool VMs. This part is also restartable, which is why we end up explicitly retrieving the Azure Batch context at the end. Note the use of function Get-LowerCaseUniqueID to determine the names of the Batch and Storage accounts.

$BatchAccountName = $BatchAccountNamePrefix + (Get-LowerCaseUniqueID -id $ResourceGroup.ResourceId)

$StorageAccountName = "sa$($BatchAccountName)"

$BatchAccount = $null

$BatchAccount = Get-AzureRmBatchAccount -AccountName $BatchAccountName -ResourceGroupName $ResourceGroupName -ErrorAction SilentlyContinue

if ($BatchAccount -eq $null)

{

$StorageAccount = New-AzureRmStorageAccount -ResourceGroupName $ResourceGroupName -Name $StorageAccountName -SkuName Standard_LRS -Location $Region -Kind Storage

$Share = New-AzureStorageShare -Context $StorageAccount.Context -Name $ShareName

$BatchAccount = New-AzureRmBatchAccount -AccountName $BatchAccountName -Location $Region -ResourceGroupName $ResourceGroupName -AutoStorageAccountId $StorageAccount.Id

}

$BatchContext = Get-AzureRmBatchAccountKeys -AccountName $BatchAccountName -ResourceGroupName $ResourceGroupName

Next up is to create an application generating some data. As mentioned, the application is pre-packaged as a ZIP file containing two Powershell scripts. We download this into a temporary file, and then generate a new Azure Batch application definition in the existing account. To make life easier for application management, we explicitly define a default version ("1.0").

$tempfile = [System.IO.Path]::GetTempFileName() | Rename-Item -NewName { $_ -replace 'tmp$', 'zip' } -PassThru

Invoke-WebRequest -Uri $PackageURL -OutFile $tempfile

New-AzureRmBatchApplication -AccountName $BatchAccountName -ResourceGroupName $ResourceGroupName -ApplicationId $applicationname

New-AzureRmBatchApplicationPackage -AccountName $BatchAccountName -ResourceGroupName $ResourceGroupName -ApplicationId $applicationname `
    -ApplicationVersion "1.0" -Format zip -FilePath $tempfile

Set-AzureRmBatchApplication -AccountName $BatchAccountName -ResourceGroupName $ResourceGroupName -ApplicationId $applicationname -DefaultVersion "1.0"
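If you want to build your own package instead of downloading the prepared one, a ZIP file with the scripts at its root is all that is needed. The sketch below shows one way to do that with Compress-Archive. The script contents are placeholders, the file name MersenneCustom.zip is hypothetical, and the flat layout is an assumption based on how the glue script refers to the calculation script.

```powershell
# Work in a scratch folder under the temp directory.
$workDir = Join-Path ([System.IO.Path]::GetTempPath()) ("mersenne-package-" + [guid]::NewGuid().ToString("N"))
$srcDir  = Join-Path $workDir "src"
New-Item -Path $srcDir -ItemType Directory -Force | Out-Null

# Placeholder contents; the real scripts are in the downloaded MersenneV1.zip.
foreach ($name in "calculate_print_mersenne_primes.ps1", "generate_decimal_mersenne_and_upload.ps1")
{
    Set-Content -Path (Join-Path $srcDir $name) -Value "# placeholder for $name"
}

# Put both scripts at the root of the ZIP (assumed layout).
$zipPath = Join-Path $workDir "MersenneCustom.zip"
Compress-Archive -Path (Join-Path $srcDir "*") -DestinationPath $zipPath

# List the entries to verify the layout before uploading.
Add-Type -AssemblyName System.IO.Compression.FileSystem
$zip = [System.IO.Compression.ZipFile]::OpenRead($zipPath)
$entryNames = @($zip.Entries | ForEach-Object { $_.FullName })
$zip.Dispose()
$entryNames
```

The resulting file could then be passed to New-AzureRmBatchApplicationPackage through -FilePath, in the same way as $tempfile above, preferably under a new -ApplicationVersion.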

Finally, we create a pool of VMs that will be used to run the package. I made a number of design choices here.

- I used a cloud service (PaaS) VM. This is faster to deploy, but more limited in functionality. For instance, we are limited to Windows although Azure Batch supports Linux as well.

- The pool has dedicated VMs, which run as long as the pool exists. Again, this is faster for testing, but also more expensive. The alternative is to use low-priority nodes, which are cheaper but can be preempted when Azure needs the capacity. Also, it is possible to increase or decrease the number of nodes in the pool.

$appPackageReference = New-Object Microsoft.Azure.Commands.Batch.Models.PSApplicationPackageReference

$appPackageReference.ApplicationId = $applicationname

$appPackageReference.Version = "1.0"

$PoolConfig = New-Object -TypeName "Microsoft.Azure.Commands.Batch.Models.PSCloudServiceConfiguration" -ArgumentList @($poolWindowsVersion[$WindowsVersion],"*")

New-AzureBatchPool -Id $PoolName -VirtualMachineSize "Small" -CloudServiceConfiguration $PoolConfig `
    -BatchContext $BatchContext -ApplicationPackageReferences $appPackageReference -TargetDedicatedComputeNodes $Nodecount

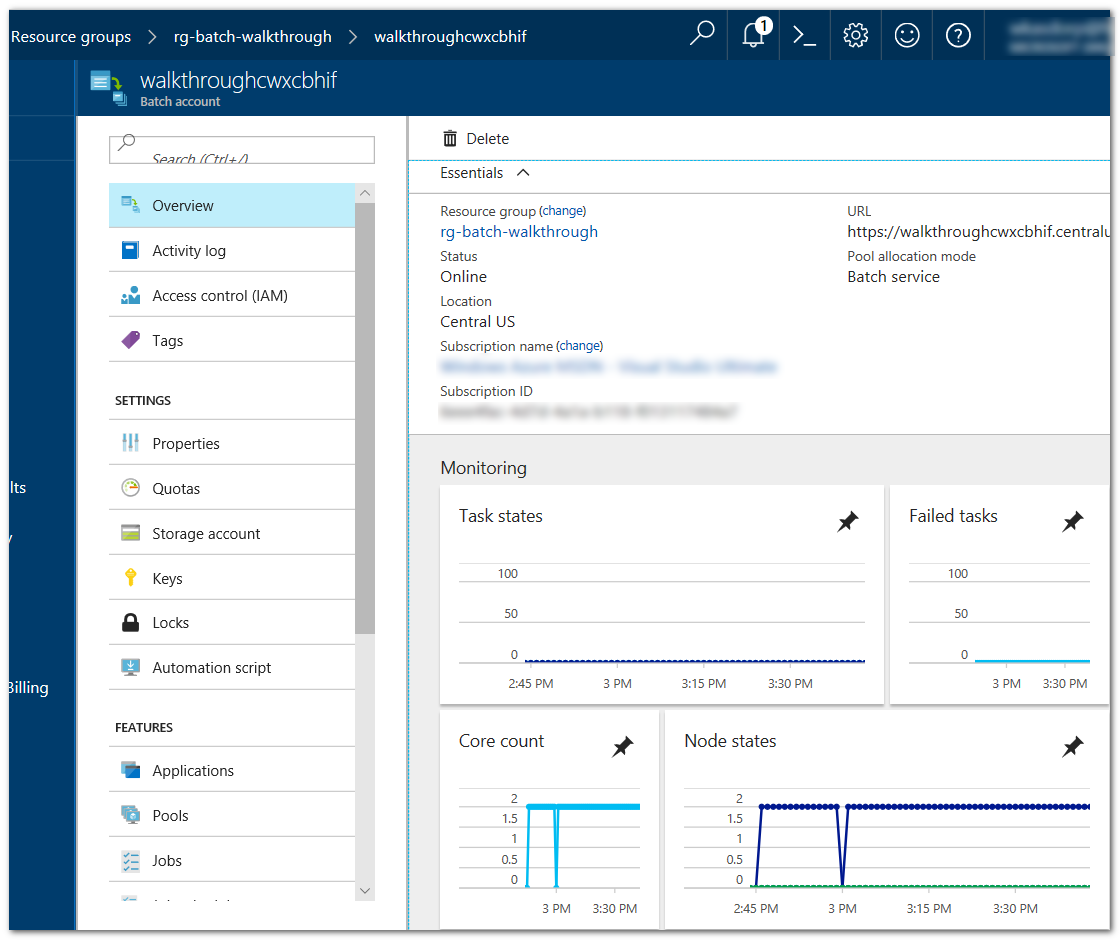

After executing this code, the Azure Fabric provisions two VMs in the pool. This should take about 10-20 minutes. After the nodes have fully initialized you should have the Azure Portal looking something like the following screenshot. There are zero task states, obviously, and two running nodes.
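Instead of refreshing the portal, you could poll until the nodes are ready. The following sketch shows a generic polling helper with a timeout; the name Wait-Until is hypothetical, and the Batch-specific condition at the end is an untested assumption, which is why it is left as a comment.

```powershell
# Hypothetical helper: poll a condition until it returns $true or the timeout expires.
function Wait-Until ([scriptblock] $Condition, [int] $TimeoutSeconds = 1800, [int] $IntervalSeconds = 30)
{
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while ((Get-Date) -lt $deadline)
    {
        if (& $Condition) { return $true }
        Start-Sleep -Seconds $IntervalSeconds
    }
    return $false
}

# Local demonstration: the condition becomes true after about two seconds.
$start  = Get-Date
$result = Wait-Until -Condition { ((Get-Date) - $start).TotalSeconds -ge 2 } -TimeoutSeconds 10 -IntervalSeconds 1
"Condition met: $result"

# Against a real pool the condition could look like this (untested assumption):
# Wait-Until -Condition {
#     $nodes = @(Get-AzureBatchComputeNode -PoolId $PoolName -BatchContext $BatchContext)
#     $nodes.Count -eq $Nodecount -and -not ($nodes | Where-Object { $_.State -ne "Idle" })
# }
```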

At this point, we are ready to run the application package, Mersenne. Doing this is slightly involved because we need to create a Batch Job, with a task for each Mersenne number to be calculated. The command line used to start the Powershell script in the VMs needs to be constructed as well. This is the subject of the next post in this short series.

Application code: decimal version of Mersenne Prime Numbers

This section really is nothing more than background information on the sample application. All you need to know is that it generates prime numbers.

The sample application for this walk-through is a very simple but CPU-intensive calculation. Throughout known history people have searched for ever larger prime numbers, which are numbers that have no divisors except 1 and themselves. You know: 2, 3, 5, 7, 11, 13, 17, etc. There are infinitely many of them. Currently, the largest known prime numbers are Mersenne primes. I explained this a little bit in the readme.md on Github. The very short summary: a Mersenne number is a power of 2, minus one. For a Mersenne prime, the exponent is always a prime number as well. Examples: 2^7-1 = 127, 2^31-1 = 2147483647, and the currently (1-3-2018) largest one: 2^77232917-1 = <huge number>.
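To make "huge" a bit more concrete: the number of decimal digits of 2^p-1 can be computed without calculating the number itself, because it equals floor(p * log10(2)) + 1. The sketch below checks the two small examples exactly and then counts the digits of the largest one. The function name Get-MersenneDigitCount is made up for this illustration.

```powershell
# Small Mersenne primes can be computed exactly.
foreach ($p in 7, 31)
{
    $m = [System.Numerics.BigInteger]::Pow(2, $p) - 1
    "2^$p-1 = $m"
}

# For a large exponent, count the decimal digits without computing the number:
# digits(2^p - 1) = floor(p * log10(2)) + 1
function Get-MersenneDigitCount ([int] $p)
{
    [long][math]::Floor($p * [math]::Log10(2)) + 1
}

Get-MersenneDigitCount -p 77232917    # 23249425 digits
```

Written out at 80 digits per line, as the sample application does, that is roughly 290,000 lines of text for a single number.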

The Mersenne application packed in the ZIP file MersenneV1.zip simply calculates some of these numbers. The following is slightly simplified from the version in the package. You can paste this in a Powershell ISE window, and it will just work:

# left out argument handling, using Read-Host instead.

$MersenneExponents = @(

2, 3, 5, 7, 13,

17, 19, 31, 61, 89,

107, 127, 521, 607, 1279,

2203, 2281, 3217, 4253, 4423,

9689, 9941, 11213, 19937, 21701,

23209, 44497, 86243, 110503, 132049,

216091, 756839, 859433, 1257787, 1398269,

2976221, 3021377, 6972593, 13466917, 20996011,

24036583, 25964951, 30402457, 32582657, 37156667,

42643801, 43112609, 57885161, 74207281, 77232917

)

function PrintMersenneDecimal ([int] $n, $width = 80)

{

$prime = [numerics.biginteger]::pow(2,$n)-1

$s = $prime.ToString()

"Mersenne prime 2^$n-1 has $($s.length) digits."

for ($n=0; $n -lt $s.length; $n += $width)

{

$s.Substring($n, [math]::min($width, $s.Length - $n))

}

}

$index = $(Read-Host -Prompt "Which Mersenne number to calculate? (0-$($MersenneExponents.count-1))")

PrintMersenneDecimal -n $MersenneExponents[$index]

Simple enough, I guess. The part doing the actual work is a one-liner based on a .NET library: [numerics.biginteger]::pow(2,$n)-1.
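If you are curious where the CPU time goes, you can time the two steps separately: raising 2 to the power, and converting the result to decimal text. The sketch below does this for one mid-sized exponent from the list. It makes no claim about the actual timings, which depend on the machine and the .NET version.

```powershell
$exponent = 216091    # one of the exponents from the list above

# Step 1: compute the number itself.
$sw = [System.Diagnostics.Stopwatch]::StartNew()
$prime = [System.Numerics.BigInteger]::Pow(2, $exponent) - 1
$powMs = $sw.Elapsed.TotalMilliseconds

# Step 2: convert it to its decimal representation.
$sw.Restart()
$digits = $prime.ToString()
$toStringMs = $sw.Elapsed.TotalMilliseconds

"2^$exponent-1 has $($digits.Length) digits"
"Pow:      {0:N1} ms" -f $powMs
"ToString: {0:N1} ms" -f $toStringMs
```

Trying a few exponents further down the list gives an impression of how quickly the work per task grows, which is useful when deciding how many pool nodes to use.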

Let's move on to the final part, a glue script called generate_decimal_mersenne_and_upload.ps1. Its purpose is to serve as an interface between the Azure Batch infrastructure and the code doing the actual work. It reads the arguments passed to the Azure Batch Tasks, and takes care of writing the resulting data back to the SMB share defined on the Storage Account. Simplified version:

[CmdletBinding()]

Param

(

[int] $index,

[string] $uncpath,

[string] $account,

[string] $SaKey

)

$batchshared = $env:AZ_BATCH_NODE_SHARED_DIR

$batchwd = $env:AZ_BATCH_TASK_WORKING_DIR

$outfile = (Join-Path $batchwd "Mersenne-$($index).txt")

$generateMersenne = "$env:AZ_BATCH_APP_PACKAGE_MERSENNE\calculate_print_mersenne_primes.ps1"

&$generateMersenne -index $index > $outfile

New-SmbMapping -LocalPath z: -RemotePath $uncpath -UserName $account -Password $SaKey -Persistent $false

Copy-Item $outfile z:

The main points to note are the mandatory use of environment variables for file and directory paths, the fact that some variable names are based on the actual package name (Mersenne), and the literal reference to the script calculating the Mersenne primes: calculate_print_mersenne_primes.ps1.
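Because the glue script only depends on a few environment variables, its path logic can be tried on a workstation by faking those variables. The following is a sketch under a number of assumptions: all folders are local stand-ins, the calculation script is replaced by a stub, the SMB upload is left out, and the execution policy must allow running local scripts.

```powershell
# Fake the Batch environment in a scratch folder (all paths are local stand-ins).
$root = Join-Path ([System.IO.Path]::GetTempPath()) ("batch-sim-" + [guid]::NewGuid().ToString("N"))
$env:AZ_BATCH_TASK_WORKING_DIR     = Join-Path $root "wd"
$env:AZ_BATCH_APP_PACKAGE_MERSENNE = Join-Path $root "package"
New-Item -ItemType Directory -Force -Path $env:AZ_BATCH_TASK_WORKING_DIR, $env:AZ_BATCH_APP_PACKAGE_MERSENNE | Out-Null

# Stub standing in for calculate_print_mersenne_primes.ps1 from the package.
$stub = Join-Path $env:AZ_BATCH_APP_PACKAGE_MERSENNE "calculate_print_mersenne_primes.ps1"
Set-Content -Path $stub -Value 'param([int] $index) "stub output for index $index"'

# Same path logic as the glue script, minus the SMB upload.
$index = 3
$outfile = Join-Path $env:AZ_BATCH_TASK_WORKING_DIR "Mersenne-$($index).txt"
$generateMersenne = Join-Path $env:AZ_BATCH_APP_PACKAGE_MERSENNE "calculate_print_mersenne_primes.ps1"
& $generateMersenne -index $index > $outfile

Get-Content -Path $outfile
```

Replacing the stub with the real script from the package turns this into a quick local test of a single task, before any pool nodes are involved.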

Next step: submit a job to the Azure Batch account.

In this post I have shown you step-by-step how to create an Azure Batch account using Powershell. The next step is to create a batch job to run the Mersenne test application on the pool nodes. For this, continue to part 2.

Comments

- Anonymous

September 04, 2018

Hi Daniel, The error suggests an incompatibility between ARM and the Powershell module. Did you upgrade to the latest and greatest modules? Otherwise you should log a case, I guess. The alternative would be to fall back to the REST API, which can be called from Powershell as well -- or so people tell me ;)
