Fabric runtime support in the VS Code extension

For Fabric runtime 1.1 and 1.2, the extension creates two local conda environments by default, one for each runtime. Before you run the notebook, activate the conda environment that matches the target runtime. To learn more, see Choose Fabric Runtime 1.1 or 1.2.

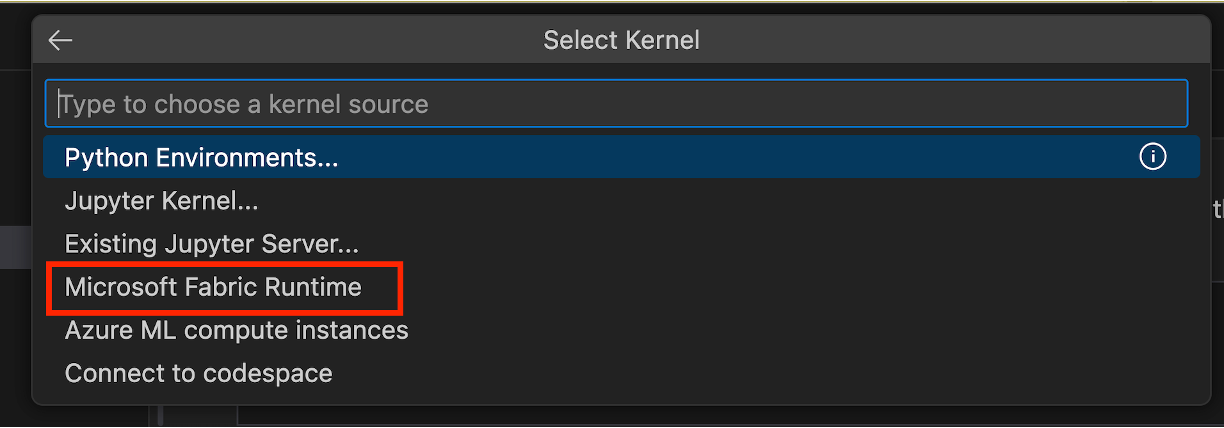
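To confirm from a notebook cell that the intended local environment is active, a check like the one below can help. This is a minimal sketch, not part of the extension: the environment names in `FABRIC_ENVS` are an assumption (run `conda env list` to see the names actually created on your machine), and `current_env_name` and `in_fabric_env` are hypothetical helpers. It reads `CONDA_DEFAULT_ENV`, which `conda activate` sets, and falls back to the interpreter's prefix folder when the kernel was started without activation.

```python
import os
import sys
from typing import Mapping, Optional

# ASSUMPTION: hypothetical environment names. Run `conda env list` to see the
# names the extension actually created on your machine.
FABRIC_ENVS = frozenset({"fabric-synapse-runtime-1-1", "fabric-synapse-runtime-1-2"})


def current_env_name(environ: Optional[Mapping[str, str]] = None,
                     prefix: Optional[str] = None) -> str:
    """Best-effort name of the environment this Python process runs in."""
    environ = os.environ if environ is None else environ
    prefix = sys.prefix if prefix is None else prefix
    # `conda activate` sets CONDA_DEFAULT_ENV. A kernel launched directly by an
    # editor may not have it, so fall back to the interpreter's prefix folder,
    # which for a named conda env is typically .../envs/<name>.
    name = environ.get("CONDA_DEFAULT_ENV")
    return name if name else os.path.basename(os.path.normpath(prefix))


def in_fabric_env(environ: Optional[Mapping[str, str]] = None,
                  prefix: Optional[str] = None) -> bool:
    """True if the current environment is one of the assumed Fabric envs."""
    return current_env_name(environ, prefix) in FABRIC_ENVS


if __name__ == "__main__":
    print(f"Environment: {current_env_name()} (Fabric local env: {in_fabric_env()})")
```

If the check reports an unexpected environment, switch the notebook kernel to the matching conda environment before running the remaining cells.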

For Fabric runtime 1.3 and higher, no local conda environment is created. Instead, select the new remote runtime entry in the Jupyter kernel list to run the notebook or Spark Job Definition directly on the remote Spark compute.

Considerations for choosing the local conda environment or remote Spark compute

When you run a notebook or Spark Job Definition, you can use either the local conda environment or the remote Spark compute. The following considerations can help you choose.

Choose the local conda environment in the following scenarios:

- You need to work in a disconnected setup without access to the remote compute.

- You want to evaluate a Python library before uploading it to the remote workspace. You can install the library in the local conda environment and test it there first.
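For the library-evaluation scenario, a quick check from a notebook cell can confirm that a library installed into the local conda environment imports cleanly and report which version is installed. This is a minimal sketch using only the standard library; `check_library` is a hypothetical helper, and the optional `dist_name` argument covers packages whose import name differs from their distribution name.

```python
import importlib
from importlib import metadata
from typing import Optional, Tuple


def check_library(module_name: str,
                  dist_name: Optional[str] = None) -> Tuple[bool, Optional[str]]:
    """Return (importable, installed_version) for a library in the current env."""
    try:
        importlib.import_module(module_name)
    except ImportError:  # also catches ModuleNotFoundError
        return False, None
    try:
        version = metadata.version(dist_name or module_name)
    except metadata.PackageNotFoundError:
        # Standard-library modules and some vendored packages have no
        # distribution metadata, so the version is unknown.
        version = None
    return True, version


if __name__ == "__main__":
    print(check_library("json"))
```

For example, `check_library("sklearn", "scikit-learn")` checks scikit-learn, whose import name and package name differ. Once the library behaves as expected locally, you can upload it to the remote workspace.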

Choose the Fabric runtime kernel in the following scenarios:

- You need to run the notebook on Runtime 1.3 or higher, for which no local conda environment is available.

- Your code has a hard dependency on the remote runtime. For example:

  - MSSparkUtils is available only in the remote runtime, so code that uses it must run there.

  - NotebookUtils is likewise available only in the remote runtime, so code that uses it must run there.
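A hard dependency on the remote runtime can be made explicit with a guard in the first notebook cell, so that a run in a local conda environment fails immediately with a clear message rather than later with a `NameError` or `ImportError`. This is a minimal sketch under an assumption: the remote Fabric runtime provides a top-level `notebookutils` module (MSSparkUtils is commonly reached as `from notebookutils import mssparkutils`) and the local environments do not. The helper names `first_available` and `require_remote_runtime` are hypothetical.

```python
import importlib.util
from typing import Iterable, Optional

# ASSUMPTION: the remote Fabric runtime provides a top-level `notebookutils`
# module; the local conda environments are expected not to.
REMOTE_ONLY_MODULES = ("notebookutils",)


def first_available(names: Iterable[str]) -> Optional[str]:
    """Return the first top-level module name importable here, or None."""
    for name in names:
        # find_spec locates a top-level module without importing it.
        if importlib.util.find_spec(name) is not None:
            return name
    return None


def require_remote_runtime(candidates: Iterable[str] = REMOTE_ONLY_MODULES) -> str:
    """Raise a clear error when remote-only utilities are missing."""
    found = first_available(candidates)
    if found is None:
        raise RuntimeError(
            "Remote-only utilities (NotebookUtils/MSSparkUtils) are not available. "
            "Select the remote Fabric runtime kernel instead of a local conda environment."
        )
    return found
```

Calling `require_remote_runtime()` at the top of the notebook documents the dependency in code; using `find_spec` rather than a bare import keeps the check free of side effects.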