Microsoft's Custom Vision Cognitive Service

I'm in Sydney, Australia this week to speak at the Build Tour. Besides the keynote, I'm presenting a session on Artificial Intelligence and Microsoft's new Custom Vision Service. Custom Vision has received significant developer interest since Cornelia Carapcea introduced it in the Build keynote this year. As I prepared for my Australia trip, I thought I'd build something neat with the Custom Vision Service and document my findings/experience in this blog post. Let me introduce the Snakebite Bot…a bot that can help visually identify venomous snakes in North America.

Figure 1: Snakebite Bot (https://snakebite.azurewebsites.net)

Background

Cornelia demonstrated the use of the Custom Vision Service to identify plants by their leaves. I immediately saw the value in identifying poisonous plants like Poison Ivy. When I heard I would be presenting Custom Vision in Australia, I immediately thought…everything in Australia can kill you…I'll start with snakes. Why snakes? When I was a little kid, I was bitten by a venomous Copperhead snake at Possum Kingdom Lake (any Toadies fans out there?). You would think this would make me scared of snakes, but it did the opposite…dreams of becoming a herpetologist quickly emerged. Fast-forward and my snake career never took off, but becoming a developer did. That said, I never lost my fascination with reptiles. Enter the Snakebite Bot…Custom Vision, bots, and snakes…what could be better?

Building the model

Microsoft's AI stack includes a number of Cognitive Services that are more commodity than "custom"…you just call the services with your data (ex: facial recognition). Custom Vision is a true "custom" service…you build and train a model specific to your needs. "Custom" doesn't mean complex, though. Building and consuming a Custom Vision model is as easy as 1-2-3**.

- Upload/tag photos

- Train and test the model

- Call into the model to get the most probable tag matches for a photo

**An important fourth step would be to continually enhance the model by evaluating photos with low probability matches. The Custom Vision Service will improve in this area as the service matures.
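That fourth step could be sketched as a simple filter over prediction results. This is only an illustration: the `Prediction` shape mirrors the `Tag`/`Probability` fields used in the code sample later in this post, while `needsReview` and the 0.5 threshold are hypothetical choices of mine, not part of the service.

```typescript
// Shape of one prediction, mirroring the Tag/Probability fields used later in this post.
interface Prediction {
  Tag: string;
  Probability: number;
}

// Hypothetical cut-off: photos whose best match falls below this go to manual review.
const REVIEW_THRESHOLD = 0.5;

// Returns true when no tag reaches the threshold, meaning the photo is a
// candidate for manual tagging and inclusion in the next training iteration.
function needsReview(predictions: Prediction[], threshold: number = REVIEW_THRESHOLD): boolean {
  let best = 0;
  for (const p of predictions) {
    if (p.Probability > best) {
      best = p.Probability;
    }
  }
  return best < threshold;
}
```

Photos flagged this way can be tagged by hand and uploaded before the next training run. The right threshold depends on the model and would need tuning.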

Upload/tag photos

While in preview, a model in the Custom Vision Service supports 1000 photos and 50 tags. You can work with Microsoft to expand these quotas, but this is at Microsoft's discretion during preview. I would caution that multiple small models might be better than one monolithic model. For example, if I wanted to identify any venomous species, I might create specialized models for spiders, snakes, frogs, etc. and use the Computer Vision Cognitive Service to determine which vision model to use.
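The routing idea might look something like the sketch below. It assumes a general-purpose classifier has already returned a coarse category for the photo. The category names, the `modelEndpoints` table, and the URLs are hypothetical placeholders, not real Custom Vision endpoints.

```typescript
// Hypothetical routing table: coarse category -> specialized Custom Vision model.
// The categories and endpoint URLs are placeholders for illustration only.
const modelEndpoints: Record<string, string> = {
  snake: "https://example.invalid/models/snakes",
  spider: "https://example.invalid/models/spiders",
  frog: "https://example.invalid/models/frogs",
};

// Returns the endpoint of the specialized model for a coarse category,
// or undefined when no specialized model exists for it.
function pickModelEndpoint(category: string): string | undefined {
  const key = category.trim().toLowerCase();
  return Object.prototype.hasOwnProperty.call(modelEndpoints, key)
    ? modelEndpoints[key]
    : undefined;
}
```

Keeping the table separate from the lookup means a new specialized model can be added without touching the calling code.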

I worked with Microsoft to expand my model to 10000 photos and 100 tags…and it still wasn't enough as North America has 129 species of snakes. What did I do? I consolidated very similar species (ex: there are 15 different Garter Snake species that all look very similar so I consolidated them into one "Garter Snake" tag). Although my model will identify the most probable snake species, the more important identification is whether a snake is dangerous or not, and that can be accomplished with two tags (venomous vs non-venomous). After consolidating similar species I ended up with 84 tags (81 species and 3 venomous classifications).
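The consolidation can be sketched as a lookup applied before photos are tagged. The mapping entries and the `tagsForPhoto` helper below are hypothetical examples, and only a few garter snake species are listed. The three venom class names match the tags used in the code sample later in this post.

```typescript
// The three venom classification tags used by the model.
type VenomClass = "Venomous" | "Non-Venomous" | "Semi-Venomous";

// Illustrative (incomplete) lookup from a specific species to its consolidated tag.
const consolidatedTag: Record<string, string> = {
  "Common Garter Snake": "Garter Snake",
  "Plains Garter Snake": "Garter Snake",
  "Checkered Garter Snake": "Garter Snake",
};

// Returns the two tags applied to a photo: the (possibly consolidated)
// species tag and the venom classification tag.
function tagsForPhoto(species: string, venomClass: VenomClass): string[] {
  const speciesTag = Object.prototype.hasOwnProperty.call(consolidatedTag, species)
    ? consolidatedTag[species]
    : species;
  return [speciesTag, venomClass];
}
```

For example, `tagsForPhoto("Copperhead", "Venomous")` returns `["Copperhead", "Venomous"]`, since Copperhead has no entry in the lookup and keeps its own tag.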

Photos should also be at least 256px on the shortest edge and no larger than 6MB in size. Additionally, Microsoft recommends at least 30 photos for every tag. The ideal photos for a Custom Vision model capture the subject(s) from multiple perspectives against a solid background (ex: white background). Backgrounds proved particularly challenging with snake photos. Not only are solid backgrounds impossible, many snakes are camouflaged against their background. Any unique background attributes could cause a false positive between species. Consider training a model with photos of snakes being held…Custom Vision algorithms might falsely match on hands instead of snakes. Rather than trying to crop out backgrounds, I concentrated on image volume with minimal background patterns/accents. My initial model was loaded with about 3000 snake photos from Bing/Google image searches.

Figure 2: Example of human/holding causing false positive match
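The photo requirements above (256px shortest edge, 6MB maximum, 30 photos per tag) are easy to check before uploading. Here is a rough sketch. The `PhotoInfo` shape and both helper functions are hypothetical, and I'm assuming 6MB means 6 * 1024 * 1024 bytes.

```typescript
// Hypothetical description of a candidate training photo.
interface PhotoInfo {
  widthPx: number;
  heightPx: number;
  sizeBytes: number;
}

const MIN_SHORTEST_EDGE_PX = 256;
const MAX_PHOTO_BYTES = 6 * 1024 * 1024; // assumption: 6MB = 6 * 1024 * 1024 bytes
const MIN_PHOTOS_PER_TAG = 30;

// Returns a list of reasons the photo fails the requirements (empty when it passes).
function photoProblems(photo: PhotoInfo): string[] {
  const problems: string[] = [];
  const shortestEdge = Math.min(photo.widthPx, photo.heightPx);
  if (shortestEdge < MIN_SHORTEST_EDGE_PX) {
    problems.push("shortest edge is " + shortestEdge + "px, below " + MIN_SHORTEST_EDGE_PX + "px");
  }
  if (photo.sizeBytes > MAX_PHOTO_BYTES) {
    problems.push("file is larger than 6MB");
  }
  return problems;
}

// Returns the tags that have fewer photos than the recommended minimum.
function underTrainedTags(photoCountByTag: Record<string, number>): string[] {
  const result: string[] = [];
  for (const tag of Object.keys(photoCountByTag)) {
    if (photoCountByTag[tag] < MIN_PHOTOS_PER_TAG) {
      result.push(tag);
    }
  }
  return result;
}
```

Running `underTrainedTags` over the photo counts before training gives a quick list of species that still need more images.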

Train and test the model

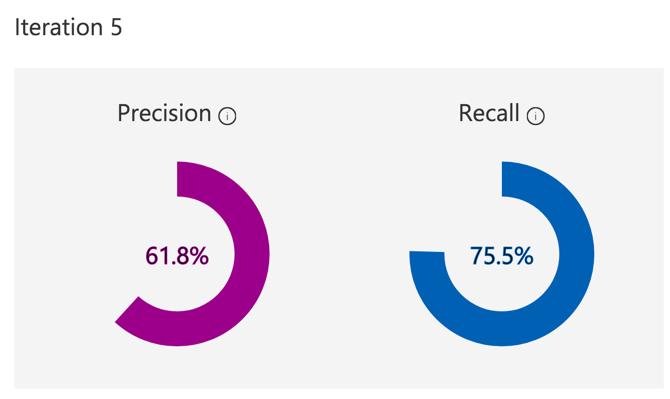

Model training is a bit of a black box in the Custom Vision Service. You don't have an opportunity to manipulate the algorithm; you can only train on the existing algorithm. The final trained model has 61.8% precision across all species. However, I've found it to be incredibly accurate considering only the venomous vs non-venomous tags (which was the ultimate goal).

Figure 3: Trained model precision and recall
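For reference, precision and recall for a single tag can be computed with the standard definitions: precision = TP / (TP + FP) and recall = TP / (TP + FN). The sketch below applies them to your own test photos. The function name and input shape are hypothetical, and I'm not claiming this is exactly how the Custom Vision portal calculates its figures.

```typescript
interface PrecisionRecall {
  precision: number;
  recall: number;
}

// Computes precision and recall for one tag from parallel arrays of
// actual and predicted tags (one entry per test photo).
// Returns 0 for a metric whose denominator would be zero.
function precisionRecall(actual: string[], predicted: string[], tag: string): PrecisionRecall {
  let truePositives = 0;
  let falsePositives = 0;
  let falseNegatives = 0;
  const n = Math.min(actual.length, predicted.length);
  for (let i = 0; i < n; i++) {
    const isActual = actual[i] === tag;
    const isPredicted = predicted[i] === tag;
    if (isActual && isPredicted) {
      truePositives++;
    } else if (!isActual && isPredicted) {
      falsePositives++;
    } else if (isActual && !isPredicted) {
      falseNegatives++;
    }
  }
  const predictedCount = truePositives + falsePositives;
  const actualCount = truePositives + falseNegatives;
  return {
    precision: predictedCount === 0 ? 0 : truePositives / predictedCount,
    recall: actualCount === 0 ? 0 : truePositives / actualCount,
  };
}
```

This also hints at why the venom classification can be more reliable than the species match: confusing two similar non-venomous species counts against species precision but leaves the "Non-Venomous" result unchanged.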

Call into the model

Like the other Microsoft Cognitive Services, the Custom Vision Service provides a REST endpoint secured by a subscription key. Specifically, you POST an image in the body of the REST call to get tag probabilities back. The Custom Vision Service will use the default training iteration unless an iteration ID is specified in the API call. The Custom Vision documentation shows how to do this in .NET, but here is the same call in Node/TypeScript. I'm offering this code because I spent the better part of a day working out the correct format for sending the image to the service as a multipart stream.

Figure 4: Code sample for calling Custom Vision Service from Node/TypeScript

    // Bot dialog step; assumes `request` and `rest` (restler) are required earlier in the file
    function (session, results) {
        // Use the first attachment the user sent to the bot
        var attachment = session.message.attachments[0];
        // Download the image as a raw buffer (encoding: null)
        request({url: attachment.contentUrl, encoding: null}, function (error, response, body) {
            if (error) {
                session.send('Error downloading the attached image');
                return;
            }
            // Take the image and post to the custom vision service
            rest.post(process.env.IMG_PREDICTION_ENDPOINT, {
                multipart: true,
                headers: {
                    'Prediction-Key': process.env.IMG_PREDICTION_KEY,
                    'Content-Type': 'multipart/form-data'
                },
                data: {
                    'filename': rest.data('TEST.png', 'image/png', body)
                }
            }).on('complete', function(data) {
                // 'complete' also fires for failed calls; the handlers below report those
                if (data instanceof Error || !data || !data.Predictions) return;
                let nven = 0.0; //non-venomous
                let sven = 0.0; //semi-venomous
                let fven = 0.0; //full-venomous
                let topHit = { Probability: 0.0, Tag: '' };
                // Separate the three venom classification tags from the species tags
                for (var i = 0; i < data.Predictions.length; i++) {
                    if (data.Predictions[i].Tag === 'Venomous')
                        fven = data.Predictions[i].Probability;
                    else if (data.Predictions[i].Tag === 'Non-Venomous')
                        nven = data.Predictions[i].Probability;
                    else if (data.Predictions[i].Tag === 'Semi-Venomous')
                        sven = data.Predictions[i].Probability;
                    else if (data.Predictions[i].Probability > topHit.Probability)
                        topHit = data.Predictions[i]; // most probable species so far
                }
                // Default to 'Venomous' unless another classification is more probable
                let venText = 'Venomous';
                if (nven > fven)
                    venText = 'Non-Venomous';
                if (sven > fven && sven > nven)
                    venText = 'Semi-Venomous';
                session.endDialog(`The snake you sent appears to be **${venText}** with the closest match being **${topHit.Tag}** at **${(topHit.Probability * 100).toFixed(1)}%** probability`);
            }).on('error', function(err, response) {
                session.send('Error calling custom vision endpoint');
            }).on('fail', function(data, response) {
                session.send('Failure calling custom vision endpoint');
            });
        });
    }
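If you want to unit test the classification logic without a bot session or a live endpoint, it can be pulled out into a typed pure function. This is a sketch of one way to do it. The `Prediction` fields match what the handler above reads, but `SnakeSummary` and `summarizePredictions` are names I made up for illustration.

```typescript
// Fields the handler above reads from each entry in data.Predictions.
interface Prediction {
  Tag: string;
  Probability: number;
}

// Hypothetical result shape for the summarized prediction.
interface SnakeSummary {
  venomClass: string;
  topSpecies: string;
  topProbability: number;
}

// Same decision logic as the handler: read the three classification tags,
// track the most probable species tag, and default to "Venomous" on ties.
function summarizePredictions(predictions: Prediction[]): SnakeSummary {
  let nven = 0;
  let sven = 0;
  let fven = 0;
  let top: Prediction = { Tag: "", Probability: 0 };
  for (const p of predictions) {
    if (p.Tag === "Venomous") {
      fven = p.Probability;
    } else if (p.Tag === "Non-Venomous") {
      nven = p.Probability;
    } else if (p.Tag === "Semi-Venomous") {
      sven = p.Probability;
    } else if (p.Probability > top.Probability) {
      top = p;
    }
  }
  let venomClass = "Venomous";
  if (nven > fven) {
    venomClass = "Non-Venomous";
  }
  if (sven > fven && sven > nven) {
    venomClass = "Semi-Venomous";
  }
  return { venomClass: venomClass, topSpecies: top.Tag, topProbability: top.Probability };
}
```

Defaulting to "Venomous" when the scores tie is a deliberately cautious choice carried over from the handler: when in doubt, treat the snake as dangerous.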

Conclusions

In all, I was incredibly impressed by the ease and accuracy of Microsoft's Custom Vision Service (even with poor backgrounds). I envision hundreds of valuable scenarios the service can help deliver and I hope you will give it a test drive.