Containers in Enterprise, Part 3: Orchestration

In the previous post, we looked at container DevOps. In this post, let's talk about container orchestration.

In the previous post, we also briefly touched upon micro-services. Besides enabling a micro-service architecture, orchestrators help in a lot of other ways. Let's start by asking ourselves why we need orchestrators in the first place.

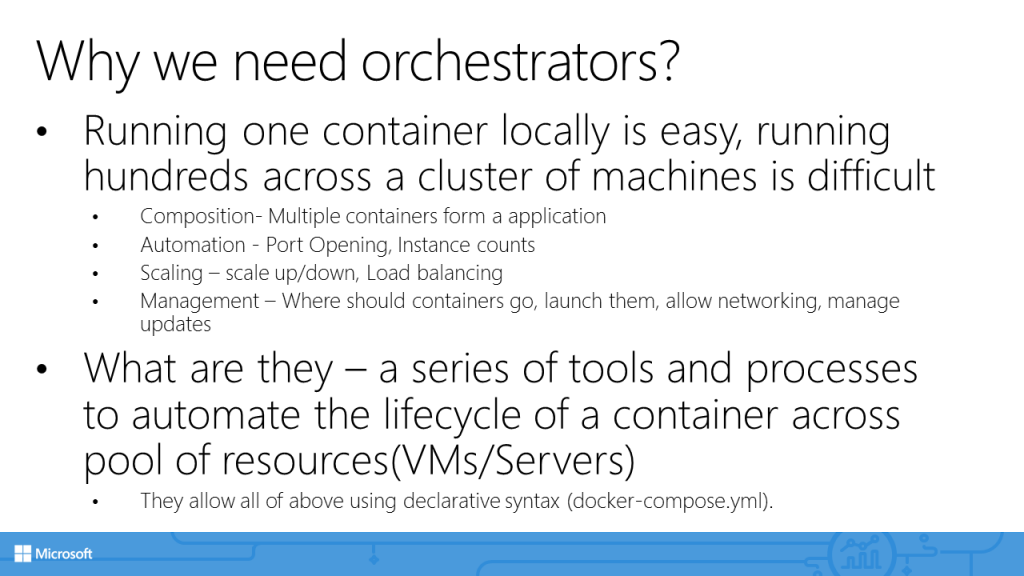

Well! Running a container locally is easy. DevOps helps to the extent that we have a container image ready to be deployed anywhere. In spite of that, running hundreds of containers across a cluster of machines is difficult. Let's spend some time discussing why.

First, an application spans multiple containers. A typical 3-tier application has a web tier, a service tier and a database tier, and all of them combined form the application. So the composition of these containers has to be taken care of. Also, depending on the type of container, you need to open different ports. For a web tier, you'll have to open port 80. For a database tier, you may need to open port 1433. So there is a great deal of automation involved. Once you get these containers up and running, you also need to worry about scaling them up or down and about load-balancing. Lastly, there is a general management aspect as well: where should your containers run, how should they be launched, and so on.

So what are orchestrators? They are a set of tools and processes that automate the container life-cycle across a pool of resources. These resources could be virtual machines or physical servers. Orchestrators let you solve the issues we discussed above using a declarative syntax. In this post, that syntax is expressed in a docker-compose.yml file.

There are 2 aspects to orchestrators. The 1st is the orchestrator itself and the 2nd is the infrastructure it operates upon. This infrastructure consists of storage, networking and compute.

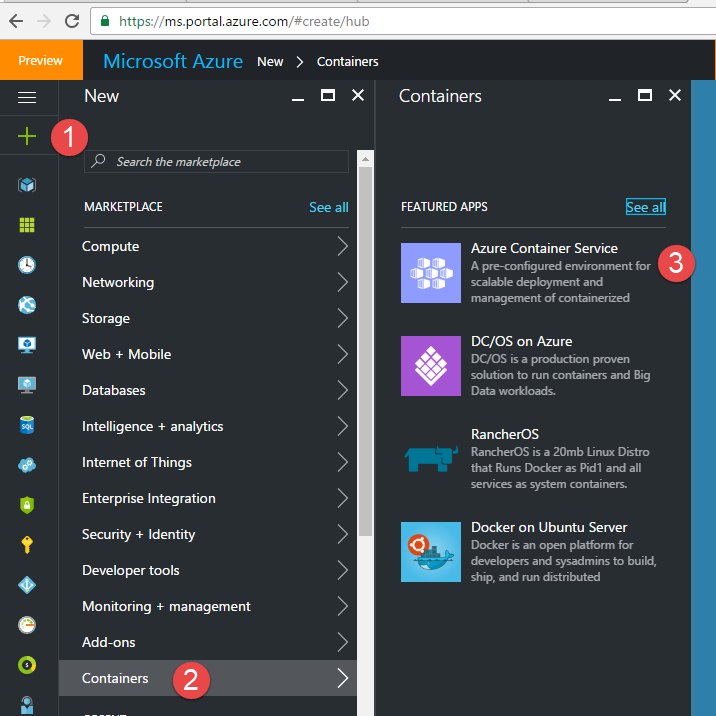

In Azure, these infrastructure services are provided by Azure Container Service (ACS). You can spin up a new ACS instance by clicking New-->Containers-->Azure Container Service in the Azure portal, as shown below.

Once you go through the wizard, you'll have an ACS cluster ready in about 10 minutes. Note that in the 2nd wizard window, you get to choose one of the 3 main orchestrators -

- Mesos DC/OS

- Docker Swarm

- Google Kubernetes

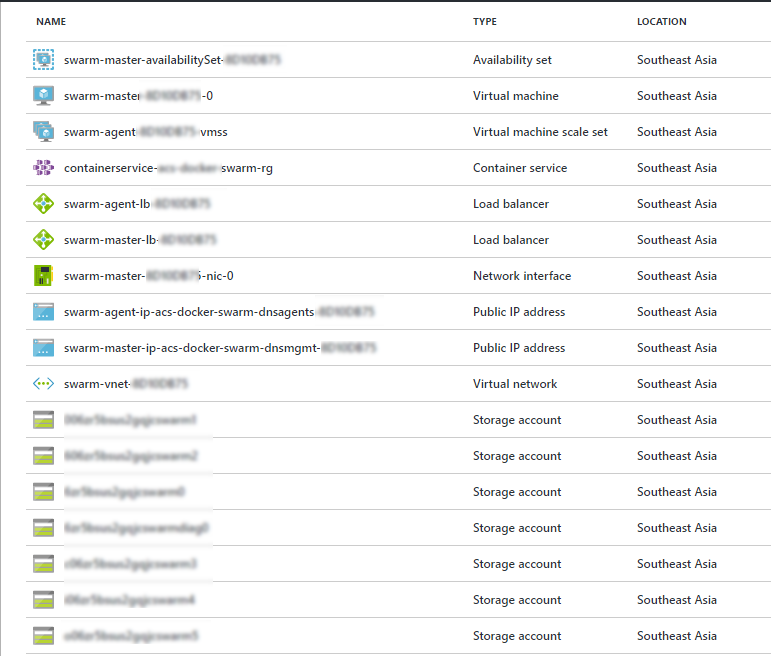

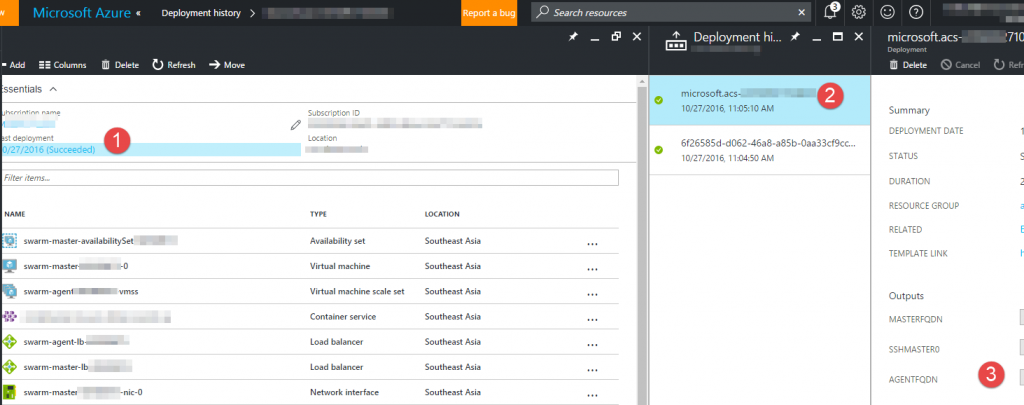

I selected Docker Swarm for this post. My completed ACS cluster looks like the one below.

As mentioned above, it's a bunch of storage, network and compute resources.

I've also set up the SSH connection to this cluster via PuTTY as mentioned here and here.

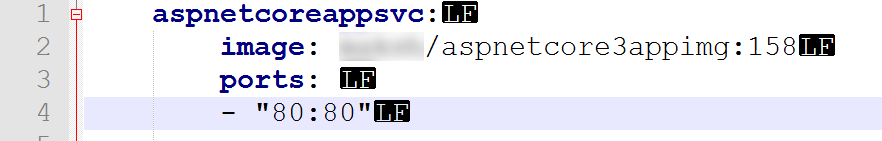

Let's switch back to the orchestrator. As discussed previously, you can use the declarative syntax of a docker-compose.yml file to orchestrate containers. Like a Dockerfile, a docker-compose.yml file is a plain text file that contains instructions.

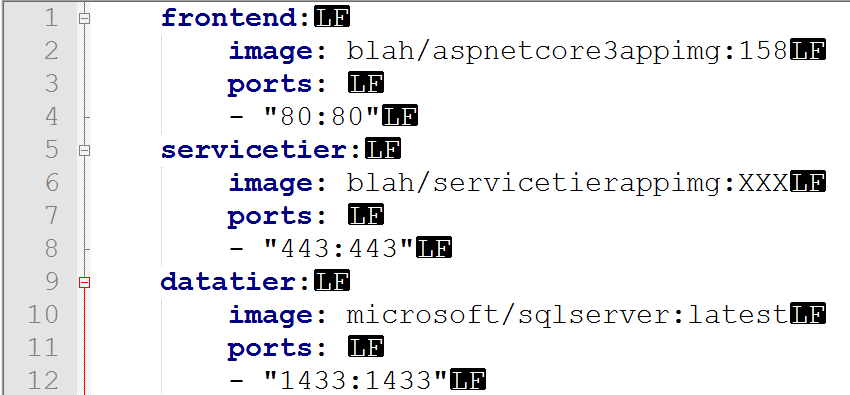

Let's take a look at the docker-compose file line by line.

The 1st line names the service to be created on the cluster. Instead of deploying a container on each node, a service needs to be created.

The 2nd line indicates the container image to be used for this service. This is the same image we uploaded to Docker Hub using the DevOps pipeline in the previous post.

The 3rd line starts the list of ports to be opened on the host and the container.

The 4th line holds the actual port numbers on the host and the container.
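The four lines described above can be sketched as follows. This is only an illustration of the layout, written from a bash-style shell: the service name `web`, the image name `myuser/mywebapp` and the `80:80` mapping are placeholders I picked, not the values from my own file.

```shell
# Write a minimal docker-compose.yml with a single service.
# Line 1: service name (hypothetical: web)
# Line 2: image to run (hypothetical: myuser/mywebapp on Docker Hub)
# Line 3: start of the port list
# Line 4: "host port:container port" (assumed: 80 on both sides)
cat > docker-compose.yml <<'EOF'
web:
  image: myuser/mywebapp
  ports:
    - "80:80"
EOF

# Print the file to confirm what was written.
cat docker-compose.yml
```

The quotes around the port mapping keep YAML from reading `80:80` as anything other than a string.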

This file can contain additional services as well. For example, a typical 3-tier application may define one service per tier in a single docker-compose file.
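A 3-tier file could look roughly like the sketch below. All three image names are hypothetical, and so is port 8080 for the service tier; ports 80 and 1433 come from the web and database examples earlier in this post. It is written to a separate `three-tier` folder (my choice) so that it does not overwrite any other docker-compose.yml.

```shell
# Keep the 3-tier example in its own folder (hypothetical name).
mkdir -p three-tier

# One service per tier: web, service (api) and database (db).
cat > three-tier/docker-compose.yml <<'EOF'
# Web tier: hypothetical image, port 80 as discussed above.
web:
  image: myuser/web
  ports:
    - "80:80"
# Service tier: hypothetical image and port.
api:
  image: myuser/api
  ports:
    - "8080:8080"
# Database tier: hypothetical image, port 1433 as discussed above.
db:
  image: myuser/db
  ports:
    - "1433:1433"
EOF

# Count the services by counting their image lines.
grep -c 'image:' three-tier/docker-compose.yml
```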

Once you finalize your docker-compose file, you run docker-compose commands from a console connected to the ACS cluster.

I have found that pointing DOCKER_HOST at port 22375 works, as opposed to the port 2375 mentioned here.
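As a minimal sketch of that setting in a bash-style shell: it assumes the SSH tunnel set up earlier forwards local port 22375 to the Swarm endpoint on the cluster, which is my assumption about the tunnel and may differ in your setup.

```shell
# Assumption: an SSH tunnel (e.g. the PuTTY session above) already forwards
# local port 22375 to the Docker Swarm endpoint of the ACS cluster.
export DOCKER_HOST=tcp://localhost:22375

# Show where the local docker client will now send its commands.
echo "docker client target: ${DOCKER_HOST}"
```

On a Windows command prompt, `set DOCKER_HOST=tcp://localhost:22375` plays the same role as `export`.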

Once DOCKER_HOST is set, all the docker commands you execute locally actually run on the remote ACS cluster.

The first thing you do is navigate to the folder containing the docker-compose file. In my case, it's C:\Users\<my-user-id>. Run the following command -

This command executes the instructions in the docker-compose file. It creates one or more services, as defined in that file.
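For reference, a typical way to bring up everything in a docker-compose file is `docker-compose up -d`. The sketch below wraps it in a check, so it only prints a message on a machine without the client; the `COMPOSE_FILE` line and the error messages are my additions.

```shell
# docker-compose reads docker-compose.yml from the current folder by default.
# Setting COMPOSE_FILE just makes that choice explicit.
export COMPOSE_FILE=docker-compose.yml

if command -v docker-compose >/dev/null 2>&1; then
  # -d (detached) creates and starts the services in the background.
  docker-compose up -d || echo "docker-compose up failed; check DOCKER_HOST" >&2
else
  echo "docker-compose is not installed on this machine" >&2
fi
```

Because DOCKER_HOST points at the cluster, the services start on ACS and not on the local machine.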

Once the service is created, you can browse to the ACS endpoint to see the application. The ACS endpoint is the agent FQDN, which you can copy from the deployment history of the ACS cluster, as shown below.

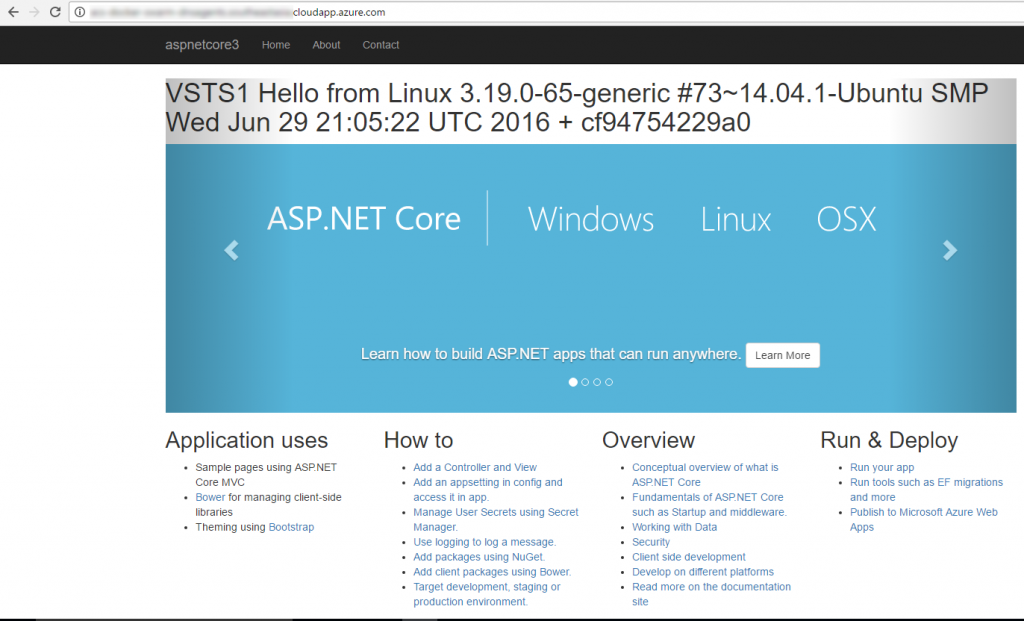

The actual application running on ACS cluster is shown below.

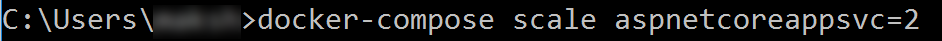

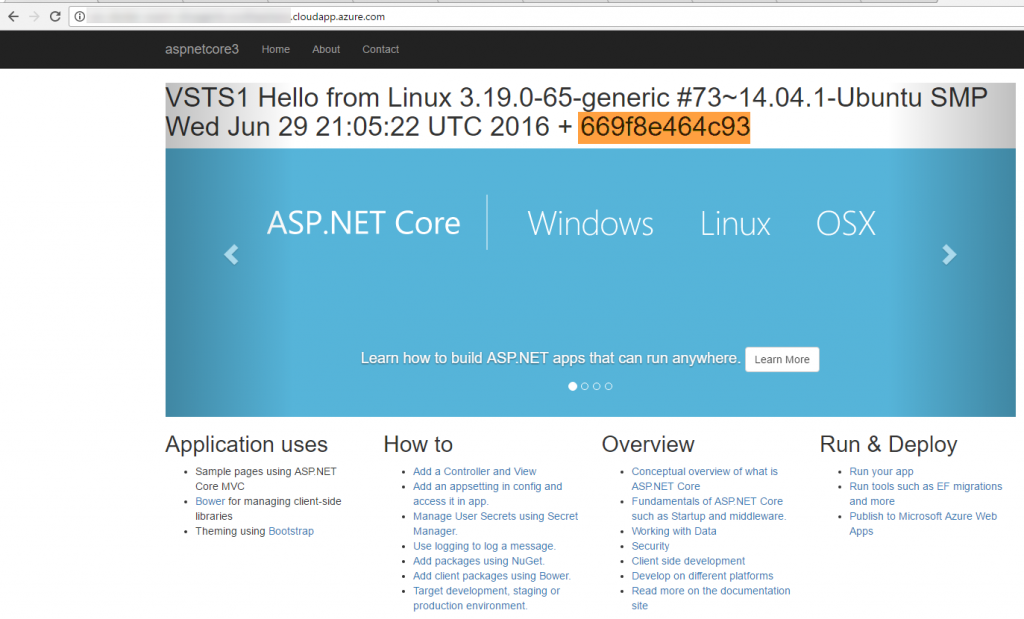

This is good! We've got an application running on the ACS cluster. Note that only one instance is running. We want to have 1 more instance running behind a load-balancer. How do we do that? Well! It's as simple as running the following command.
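A sketch of such a scale command with docker-compose is shown below. The service name `web` is hypothetical, so use the name from your own docker-compose file; the check around the call and the error messages are my additions.

```shell
SERVICE=web    # hypothetical service name from docker-compose.yml
INSTANCES=2    # the instance already running plus 1 more

# docker-compose scale takes arguments of the form SERVICE=NUMBER.
SCALE_ARG="${SERVICE}=${INSTANCES}"

if command -v docker-compose >/dev/null 2>&1; then
  docker-compose scale "${SCALE_ARG}" || echo "scale failed; check DOCKER_HOST" >&2
else
  echo "docker-compose is not installed on this machine" >&2
fi
```

Newer docker-compose releases deprecate `scale` in favour of `docker-compose up -d --scale web=2`, which has the same effect.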

I give the instruction to scale, and pass the name of the service and the number of instances I need. The application then gets deployed to another instance. In the output below, the highlighted machine name is new and differs from cf94754229a0 shown in the picture above.

So, we have got a load-balanced, multi-node application, created from a container image that was deployed using a DevOps pipeline!

Let's talk more about the orchestrator choices we've got.

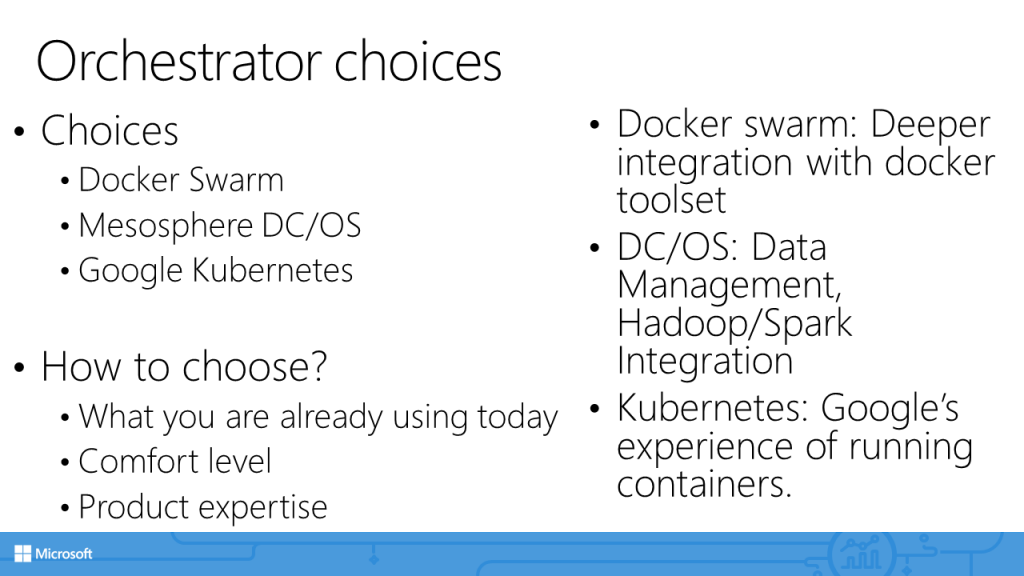

As discussed above, in Azure you can choose between Mesos DC/OS, Docker Swarm and Google Kubernetes as your orchestrator. Given that orchestrators are a relatively new technology, there is no prescriptive guidance available for choosing one over the other.

It all comes down to what you are already using, your comfort level and the product expertise within your enterprise.

In general, Docker Swarm has deeper integration with the Docker ecosystem. Mesos DC/OS has a great data management story and is used by many big data project teams. Kubernetes builds on Google's experience of running containers in production for many years.

With this, we come to the end of the blog series on containers in enterprise.