Everything you wanted to know about SR-IOV in Hyper-V Part 7

Only a few topics in this series remain uncovered. This part looks at resiliency with SR-IOV. By resiliency, I mean, of course, NIC teaming, otherwise known as Load Balancing/Failover (LBFO): the ability to aggregate network links, or to fail over should a link fail. I won’t get into the details of configuring NIC teaming, which is inbox in Windows Server “8”, just how it relates to the use of SR-IOV.

When a NIC team is created on top of two or more SR-IOV capable physical network adapters, the SR-IOV capability is not propagated upwards. Hence, the two features are not compatible in the parent partition.

The solution for virtual machine networking redundancy with SR-IOV and Windows Server “8” guest operating systems is to do the teaming inside the guest operating system itself. To configure this:

- Each physical NIC should have its own virtual switch bound to it in the parent partition, and each of those switches should have SR-IOV enabled.

- The VM is configured with two network adapters, each connected to one of the virtual switches.

- In the parent partition, the virtual machine’s network adapters MUST be configured to allow teaming (meaning each network adapter can spoof the MAC address of the other) by running the following PowerShell command:

      Get-VMNetworkAdapter -VMName "VMName" | Set-VMNetworkAdapter -AllowTeaming On

- Configure the IOVWeight on the virtual network adapters as covered in a previous part of this series.

- Configure teaming in the guest operating system in switch independent, address hash distribution mode.
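The steps above could be scripted roughly as follows. Treat this as a sketch under assumptions rather than a definitive procedure: the switch name "TeamDemoA", the physical adapter names, the guest adapter names, the team name "GuestTeam" and the IOVWeight value of 50 are all placeholders I have made up for illustration, and the VM is assumed to start with no network adapters. I am also assuming that the "address hash" option in the teaming UI corresponds to the TransportPorts value in PowerShell.

```powershell
# --- Run in the parent partition ---
# Placeholder names; substitute your own VM, switch and physical adapter names.
$vmName = "VMName"

# One SR-IOV enabled virtual switch per physical NIC.
New-VMSwitch -Name "TeamDemoA" -NetAdapterName "Ethernet 1" -EnableIov $true
New-VMSwitch -Name "TeamDemoB" -NetAdapterName "Ethernet 2" -EnableIov $true

# Two virtual network adapters, one connected to each switch.
Add-VMNetworkAdapter -VMName $vmName -SwitchName "TeamDemoA"
Add-VMNetworkAdapter -VMName $vmName -SwitchName "TeamDemoB"

# Allow teaming (MAC spoofing between the two adapters) and request a VF.
# 50 is an arbitrary non-zero weight chosen for this example.
Get-VMNetworkAdapter -VMName $vmName |
    Set-VMNetworkAdapter -AllowTeaming On -IovWeight 50
```

```powershell
# --- Run inside the guest operating system ---
# "Ethernet" and "Ethernet 2" are assumed guest adapter names; check Get-NetAdapter.
New-NetLbfoTeam -Name "GuestTeam" `
    -TeamMembers "Ethernet", "Ethernet 2" `
    -TeamingMode SwitchIndependent `
    -LoadBalancingAlgorithm TransportPorts
```

Keeping the host-side and guest-side commands in separate blocks reflects where each one has to run: the first needs the Hyper-V module in the parent partition, the second needs the NIC teaming cmdlets inside the VM.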

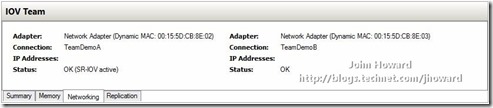

The following is a screenshot from inside a virtual machine configured in this way, with one of the physical links failed (by disabling one of the NICs using ncpa.cpl in the parent partition).

It is possible to create a team in the guest operating system where, in the parent partition, one virtual switch is in SR-IOV mode and the other is not (or is bound to a network adapter which does not support SR-IOV). This is fully supported, but you should bear in mind that it may introduce side effects. LBFO is not aware of what is backing the NICs in the team, and hence is not aware that one path is SR-IOV enabled (potentially with a VF) and the other path is not. In this situation, while the VM still has redundancy against link failure, you may want to configure the virtual NICs for Active/Standby operation inside the VM.
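As a rough illustration of the Active/Standby suggestion, the non-SR-IOV member can be marked as standby from inside the guest. The team name "GuestTeam" and the member name "Ethernet 2" are hypothetical, and which member sits on the non-SR-IOV path is something you would need to work out for your own VM.

```powershell
# Run inside the guest operating system.
# Assumption: "Ethernet 2" is the team member backed by the non-SR-IOV switch.
Set-NetLbfoTeamMember -Name "Ethernet 2" -Team "GuestTeam" -AdministrativeMode Standby

# Review the resulting member configuration.
Get-NetLbfoTeamMember -Team "GuestTeam" | Format-List
```

With this in place, traffic should normally flow over the SR-IOV backed member, with the standby member taking over only if that link fails.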

While a team interface can be created on up to 32 NICs, the (unenforced) support limit inside a virtual machine is 2 NICs.

Note that the networking tab in Hyper-V will not show the IP address(es) of the virtual network adapters when a team has been created inside a VM. This is because the virtual network adapters do not have an IP address. It’s the team interface inside the VM which has an IP address.
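To see the address that the networking tab does not show, you can query the team interface from inside the VM. This is only a sketch, and it assumes the team interface kept the default behaviour of sharing the team's name, here the hypothetical "GuestTeam".

```powershell
# Run inside the guest operating system.
# Assumption: the team interface is named "GuestTeam", the same as the team.
Get-NetIPAddress -InterfaceAlias "GuestTeam" | Format-List
```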

The following screenshot was taken with the physical NIC bound to virtual switch “TeamDemoB” disabled.

Hyper-V Manager is correctly showing the status of the second virtual network adapter as “OK” from the Hyper-V perspective. This is because it still has connectivity to other virtual machines connected to “TeamDemoB”. In other words, Hyper-V Manager does not propagate the underlying physical link status up to the virtual network adapter.
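The difference between the two views can also be checked from PowerShell. The sketch below assumes the same placeholder names as before ("VMName" for the VM and "GuestTeam" for the team); it simply lists what each side reports and makes no claim about the exact output.

```powershell
# --- Run in the parent partition ---
# Status of the VM's virtual network adapters as Hyper-V sees them.
Get-VMNetworkAdapter -VMName "VMName" | Format-List

# Link state of the physical adapters, including the disabled one.
Get-NetAdapter | Format-Table -AutoSize
```

```powershell
# --- Run inside the guest operating system ---
# Overall team state and the state of each individual member.
Get-NetLbfoTeam -Name "GuestTeam" | Format-List
Get-NetLbfoTeamMember -Team "GuestTeam" | Format-List
```

The guest-side view is the one to trust for redundancy, since that is where the failed path shows up.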

In the next part of this series, I’ll give you the tools needed to make you an SR-IOV debugging superhero.

Cheers,

John.
