My personal Azure FAQ on Azure Networking (v3)

Last updated: April 6th, 2017.

IMPORTANT: The information in this post is time sensitive and may change in the future. I will try to keep the content updated, but you should double-check it against official Azure documentation sources. This is v3 of my previously published blog posts listed below; I will keep the previous versions available for historical reasons:

My personal Azure FAQ on Azure Networking SLAs, bandwidth, latency, performance, SLB, DNS, DMZ, VNET, IPv6 and much more (ARM)

My personal Azure FAQ on Azure Networking SLAs, bandwidth, latency, performance, SLB, DNS, DMZ, VNET, IPv6 and much more (ASM)

In the last five years, I have worked extensively on Azure core IaaS. During engagements with my partners, I have been asked many questions on Azure networking in general, and more specifically on Virtual Network (VNET) and VMs in the new Azure Resource Manager (ARM) world. Providing adequate answers is not always easy: sometimes the documentation is unclear or lacks detail, while other times you can deduce the answer yourself, but that requires pretty good knowledge. Since I detected some recurring patterns in these questions, I decided to write my personal FAQ list in this blog post. Just to be clear: even if I work at Microsoft and have inside knowledge of Azure, I’m not going to reveal any reserved or secret information. Everything you will read here can be found by working directly with Azure or by using official and public documentation. If you have additional “nice” questions requiring a non-trivial or hard-to-find answer, feel free to leave a comment below this post. Additionally, if you have proposals or feedback regarding new features that Azure networking should have, please use the link below to submit your ideas or vote on existing ones:

FEEDBACK FORUM: Networking (DNS, Traffic Manager, VPN, VNET)

As usual, you can also follow me on Twitter at @igorpag. Regards.

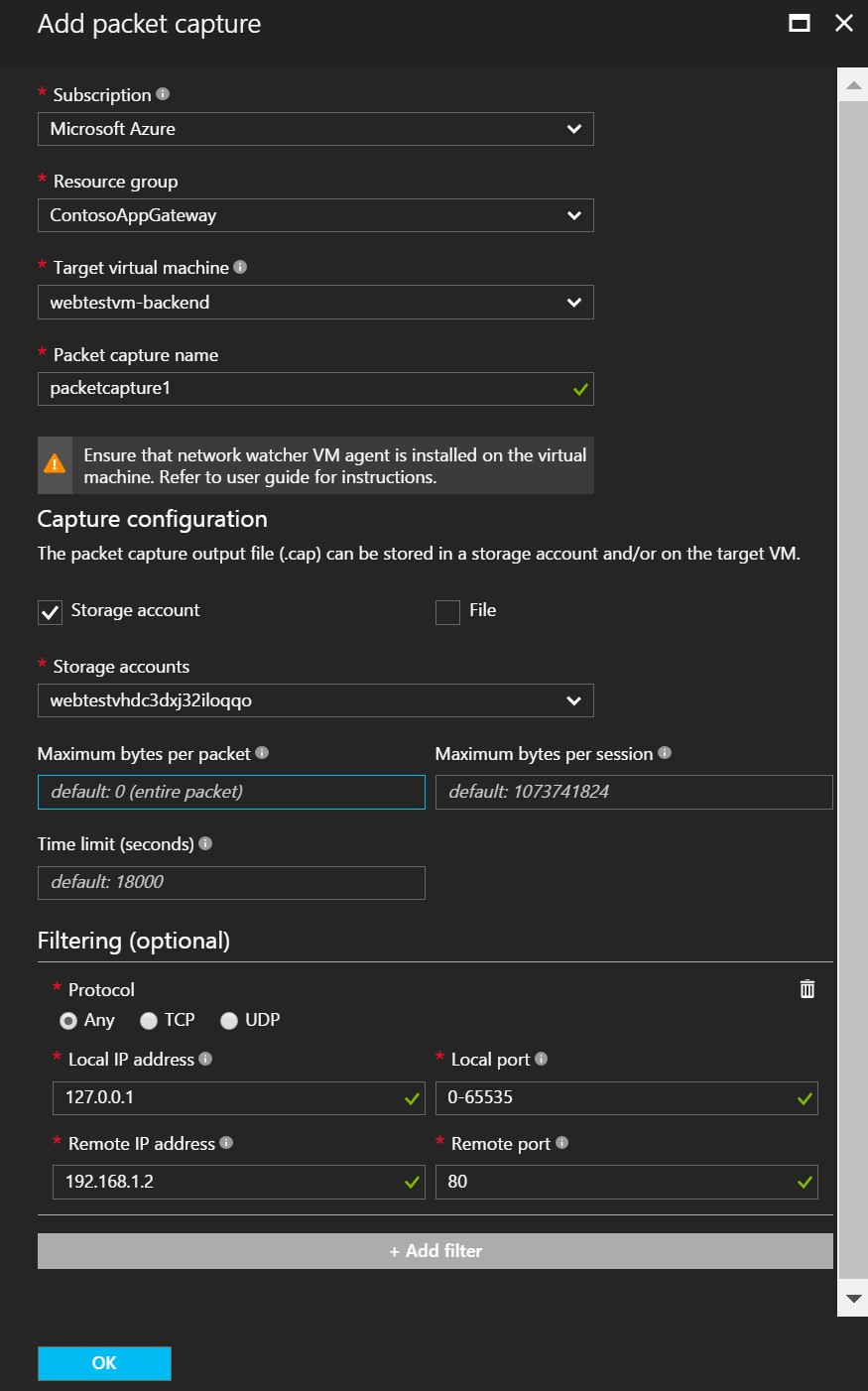

Can I take a network trace inside my Azure Virtual Network?

YES. This cool and useful feature has recently been released as part of the Azure “Network Watcher” service. You can now capture packet data flowing in and out of a virtual machine. Advanced filtering options and fine-tuned controls, such as time and size limits, can be applied as well. The packet data can be stored in a blob store or on the local disk in .cap format, and then analyzed and displayed with tools like Wireshark.

Introduction to variable packet capture in Azure Network Watcher

/en-us/azure/network-watcher/network-watcher-packet-capture-overview

Additionally, packet capture provides the capability of running proactive captures based on defined network anomalies, thus triggering actions to react to network conditions. More details in the article below:

Use packet capture to do proactive network monitoring with Azure Functions

/en-us/azure/network-watcher/network-watcher-alert-triggered-packet-capture

Be aware that packet capture requires a virtual machine extension to be installed inside the VM (AzureNetworkWatcherExtension). It is available for both Windows and Linux Operating Systems. This feature can be managed from the Azure Portal and PowerShell.

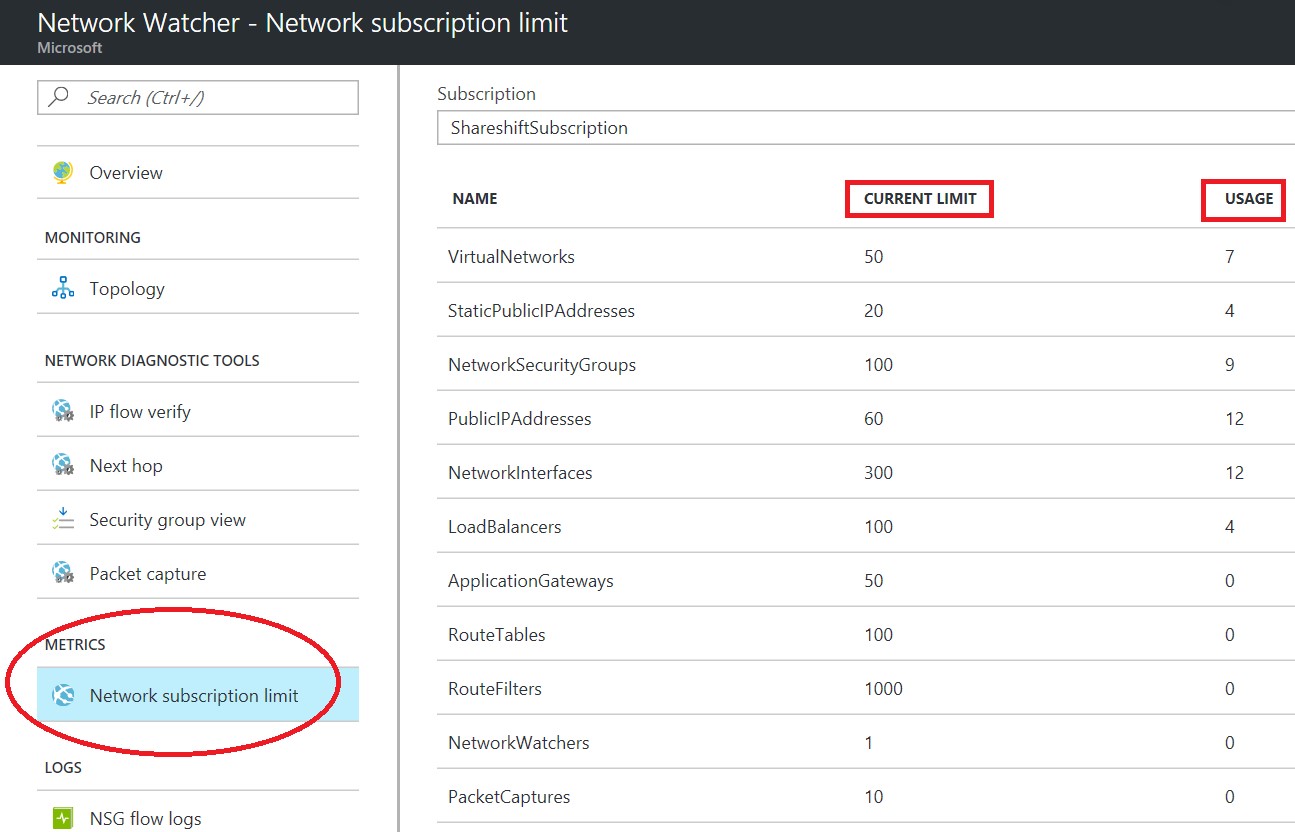

How can I check my network resources usage against my assigned limits?

One of the nice features of the new Azure “Network Watcher” is the ability to check all networking-related resources currently used in a specific region for a given subscription. All Azure resource limits, including networking ones, are listed in the article below. Hard limits cannot be increased; for soft limits, instead, you can open an incident with Azure Support and ask for an increase.

Azure subscription and service limits, quotas, and constraints (Networking)

/en-us/azure/azure-subscription-service-limits?toc=/azure/virtual-network/toc.json#networking-limits

Can I persist my VM MAC address?

YES. Azure now provides persistent MAC addresses for NICs. The MAC address will not change over the entire NIC lifecycle, even if the VM is stopped, crashes and is recovered on a different Azure Host, or is resized, deallocated or unbound. Be aware that Azure assigns a MAC address to the NIC only after the NIC is attached to a VM and the VM is started for the first time. You cannot specify the MAC address that Azure assigns to the NIC. The MAC address remains assigned to the NIC until the NIC is deleted or the private IP address assigned to the primary IP configuration of the primary NIC is changed.

Network interfaces

/en-us/azure/virtual-network/virtual-network-network-interface

Is there any limit on VNET and subnet sizing?

YES. Inside a VNET you can create a maximum of 1000 subnets. This is a “soft” limit that can be increased on request. The smallest subnet we support is a /29 and the largest is a /8 (using CIDR subnet definitions). Azure reserves some IP addresses within each subnet: the first and last IP addresses are reserved for protocol conformance, along with 3 more addresses used for Azure services, for a total of 5. Regarding IP addresses, an Azure VNET currently supports 4096 IP addresses, independently of the number of NICs and VMs and of their state: a stopped VM still counts toward the limit.

Azure Subscription limits for Networking

/en-us/azure/azure-subscription-service-limits#networking-limits
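As a rough illustration of the subnet arithmetic above, the sketch below counts the addresses left for your own NICs once the five reserved addresses are subtracted. The function name `usable_azure_addresses` and the /8 to /29 validation are illustrative assumptions based only on the figures quoted in this post; this is not an Azure API.

```python
import ipaddress

# Figure quoted in this post: first + last address, plus 3 used by Azure services.
AZURE_RESERVED_PER_SUBNET = 5


def usable_azure_addresses(cidr: str) -> int:
    """Return how many addresses remain for NICs in an IPv4 subnet."""
    net = ipaddress.ip_network(cidr, strict=True)
    if net.version != 4:
        raise ValueError("only IPv4 subnets are considered here")
    # The post states /29 as the smallest and /8 as the largest subnet.
    if not 8 <= net.prefixlen <= 29:
        raise ValueError("subnet must be between /8 and /29")
    return net.num_addresses - AZURE_RESERVED_PER_SUBNET


if __name__ == "__main__":
    for cidr in ("10.0.0.0/29", "10.0.1.0/24", "10.0.0.0/16"):
        print(cidr, usable_azure_addresses(cidr))
```

With these assumptions, a /29 leaves only 3 addresses and a /24 leaves 251, which is worth keeping in mind when sizing small subnets.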

Can I create a Storage Account or Azure SQLDB with restricted access from a VNET only?

While it is possible to create a Virtual Machine that is not exposed to the Internet, Azure Storage Accounts and Azure SQLDB logical servers have a mandatory DNS name that needs to be exposed. During creation of these resources, an FQDN record is created, and exposure cannot be avoided since this resource locator is required to access the resource. Both of these resources are protected by Microsoft DDoS protection, and Azure SQLDB can also be configured with its own firewall mechanism.

Configure an Azure SQL Database server-level firewall rule using the Azure Portal

https://azure.microsoft.com/en-us/documentation/articles/sql-database-configure-firewall-settings

Currently there is a feature request to provide an internal-only endpoint for storage and Azure SQLDB to VMs inside a VNET: they will be able to access these services even without Internet connectivity. This functionality should be available in the second half of calendar year 2017.

Create predefined NSG for Azure Datacenters IP Range

https://feedback.azure.com/forums/217313-networking/suggestions/11093742-create-predefined-nsg-for-azure-datacenters-ip-ran

What is IP address “169.254.169.254”?

If you have monitored network traffic and connections for your Azure VMs in some way, you may have noticed the IP address “169.254.169.254”: what is this? Don’t worry, it is not a malicious attempt to hack or attack your VM. This is a non-routable IP address that is accessible only from inside an Azure VM and is used to communicate with the Azure Host for some very specific scenarios and information retrieval.

Preview: “What is about to happen to my VM?” In-VM Notification Service

https://azure.microsoft.com/en-us/blog/what-just-happened-to-my-vm-in-vm-metadata-service

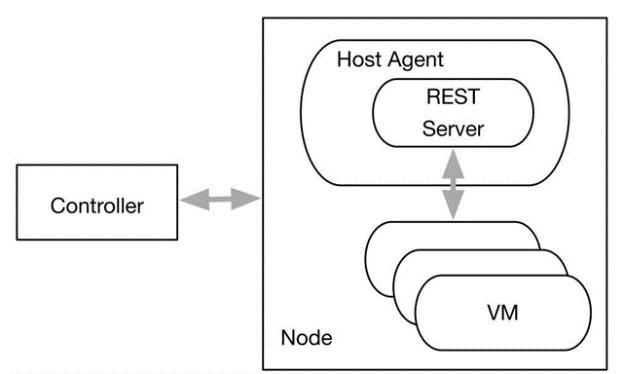

Today, this special IP is used to retrieve the planned maintenance events that will touch the VM, as described in the article above, but soon it will also be used to retrieve metadata information related to the VM itself. The figure below gives a high-level overview of today’s communication framework. The REST Server is the only endpoint the VM can communicate with. For the metadata instance server, we use the standard Link-Local addresses, i.e. 169.254/16, in line with RFC 3927.
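If you want to filter this address out of your own connection logs, a minimal sketch like the one below can help. It only checks whether an address falls inside the RFC 3927 link-local range mentioned above; the function name `is_link_local` is a hypothetical helper, not part of any Azure tooling.

```python
import ipaddress

# RFC 3927 IPv4 link-local range, which contains 169.254.169.254.
LINK_LOCAL_V4 = ipaddress.ip_network("169.254.0.0/16")


def is_link_local(address: str) -> bool:
    """Return True if the IPv4 address belongs to 169.254.0.0/16."""
    return ipaddress.ip_address(address) in LINK_LOCAL_V4


if __name__ == "__main__":
    for addr in ("169.254.169.254", "10.0.0.4"):
        print(addr, is_link_local(addr))
```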

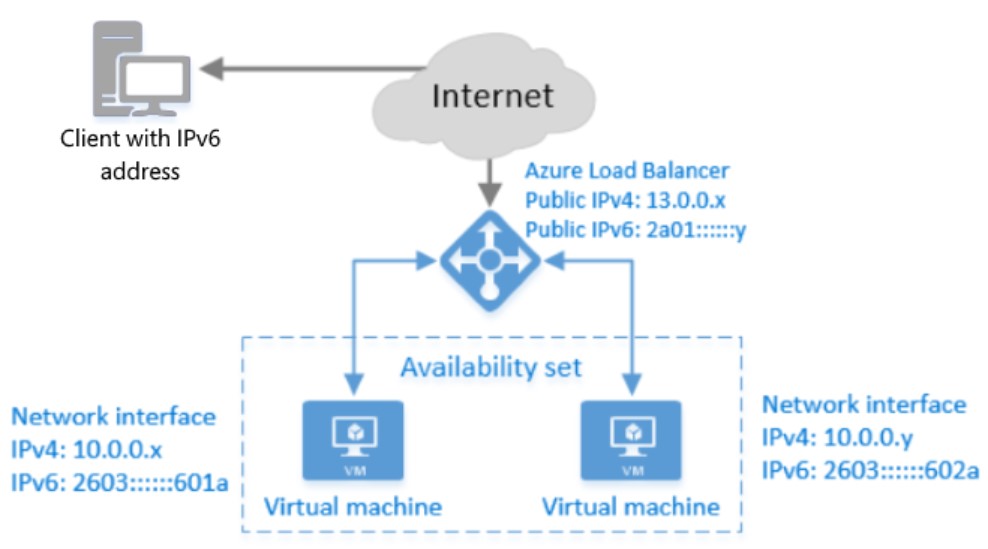

Does Azure support IPv6?

YES. Azure now provides support for public-facing IPv6 addresses, both static and dynamic. Please note that you cannot use IPv6 inside a VNET for VM-to-VM communications: using an IPv6 address as an internal VM IP (DIP) or for ILB is *not* supported. Public IPv6 addresses cannot be assigned to a VM as an instance level IP (ILPIP or PIP). They can only be assigned to the external Azure Load Balancer. It is worth mentioning that it is currently not possible to use NSG with IPv6, but access can be restricted using load balancing rules.

Overview of IPv6 for Azure Load Balancer

/en-us/azure/load-balancer/load-balancer-ipv6-overview

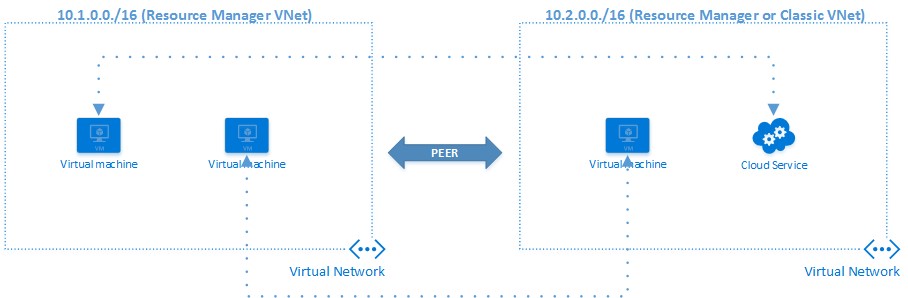

Can I connect Azure Virtual Networks without any Gateway?

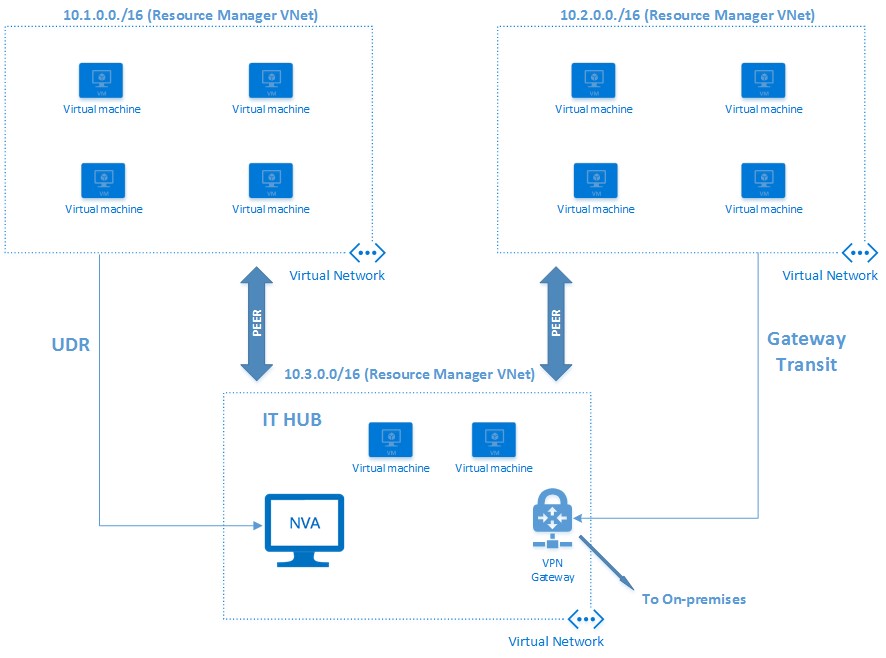

YES, this feature is called “VNET Peering” and can connect two VNETs without any gateway. Only VNETs in the same region can be peered, mixing ASM and ARM is supported, and gateways in the peered VNET can be used by the peering VNET.

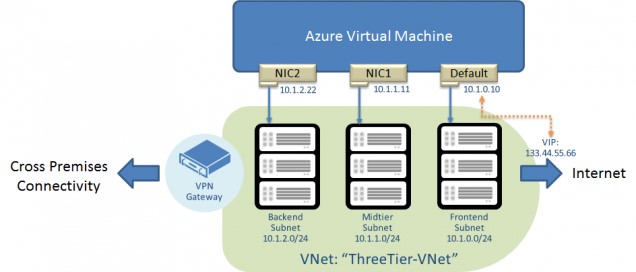

Is it possible to have multiple NICs for an Azure VM?

YES, this feature has been available since 2014, but with some limitations. What has recently changed is that you are now able to add (or remove) additional NICs even after VM creation; the only restriction is that the VM must be stopped and deallocated. Additionally, you can mix single and multiple NIC VMs in the same Availability Set (AS), and you can assign public IPs and NSGs to the secondary NICs as well. Each NIC can support up to 250 IP addresses. Be aware that adding more NICs will not give you more bandwidth: all NICs share the same limit granted at the VM level. The maximum number of NICs depends on the VM SKU and size, as documented in the article below:

Sizes for Windows virtual machines in Azure

/en-us/azure/virtual-machines/virtual-machines-windows-sizes

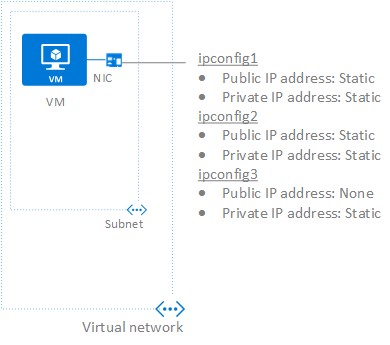

Can I assign multiple IPs to my single Network Interface Card (NIC)?

YES. An Azure Virtual Machine (VM) has one or more network interfaces (NIC) attached to it. Any NIC can have one or more static or dynamic public and private IP addresses assigned to it. Every NIC attached to a VM has one or more IP configurations associated with it. Each configuration is assigned one static or dynamic private IP address. Each configuration may also have one public IP address resource associated with it. A public IP address resource has either a dynamic or static public IP address assigned to it. The current limit on IP addresses assigned per NIC is 250, and there is also a limit at the subscription level; you can check the article below:

Sizes for Windows virtual machines in Azure

/en-us/azure/virtual-machines/virtual-machines-windows-sizes

Be aware that the maximum number of NICs depends on the VM SKU and size. Finally, network throughput will not increase by adding more NICs, since they are logical constructs.

Is it possible to create custom TAGs for NSG?

Today, you can create an NSG containing “rules” and then assign it to subnets (or to a single NIC). You are limited to one NSG per subnet, and you cannot create nested or linked NSGs. Additionally, it is not possible to create custom TAGs containing predefined sets of VMs or IP addresses, and you cannot leverage “system” TAGs to refer to Azure services, like storage or Azure SQLDB, to restrict access. The Azure Networking team has heard the feedback around these functionalities and is considering exposing system tags for STORAGE and SQL in the near term. System TAGs are also on the roadmap for future improvements.

Azure Networking Feedbacks Forum

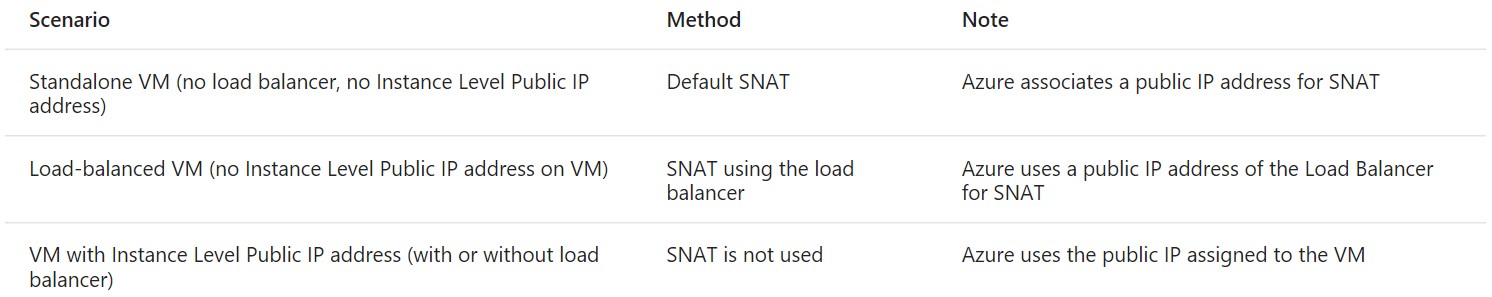

Which public IP will a VM use to send network packets out to the Internet?

In the older ASM API days, a VM was always contained in a Cloud Service logical container, and a VIP was always associated with it. In this scenario, network packets originating from the VM exited the Azure network using the Cloud Service VIP as source IP. Making this VIP “reserved” let users know that IP in advance and configure, for example, VIP white-listing on their on-premises perimeter firewalls. In ARM, there is no longer a mandatory Cloud Service (and related VIP). When you create a VM inside a VNET, no default VIP is associated unless you specifically ask for one. The net result is that if network packets originating from that VM exit the Azure network, the VIP used is one from a pool of Azure-managed VIPs, with no possibility to predict which one and no guarantee that it will remain the same. If you want your VM to send packets out to the Internet using a fixed/reserved IP, you need to assign a VIP to the VM through the Azure Load Balancer, or assign a VIP directly to the VM (PIP or ILPIP). All scenarios are well explained in the article below:

Understanding outbound connections in Azure

/en-us/azure/load-balancer/load-balancer-outbound-connections

Should I care about Azure Load Balancer capacity for VM outgoing traffic?

While Azure Load Balancer “filters” incoming connections and flows to VMs contained in a VNET, for outgoing traffic the load balancer is bypassed and network packets reach the Internet directly. This is true regardless of the type of VIP, if any, assigned to the VM. If you want strict control over outgoing traffic, you need to use a Network Virtual Appliance (NVA) and User Defined Routing (UDR) to force the traffic through it.

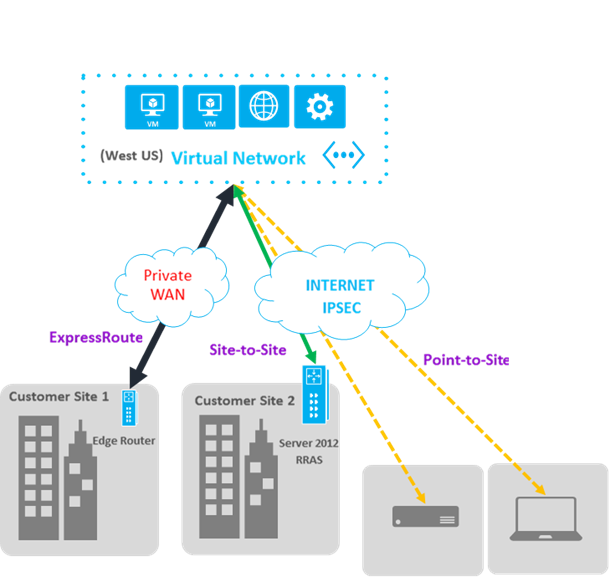

Do Azure VPN or ExpressRoute provide traffic encryption?

Azure VPN tunnels, for Site-to-Site connectivity (S2S), are by design/default encrypted using IPSEC. Encryption is also provided for Point-to-Site (P2S) using SSTP (Secure Socket Tunneling Protocol). ExpressRoute does not provide network traffic encryption for its circuits.

VPN Gateway FAQ

https://azure.microsoft.com/en-us/documentation/articles/vpn-gateway-vpn-faq

For Azure VPN Gateways, it is not possible to customize IPSEC parameters; this is something that should become possible in the future.

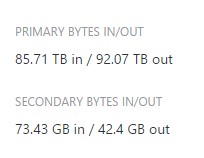

Is it possible to obtain ExpressRoute usage statistics?

YES, it is possible. The Azure Portal provides only two simple counters, as in the example below; as you can see, they are cumulative, which makes it difficult to monitor usage over time.

To obtain more granular Azure ExpressRoute statistics, you can use the PowerShell script below from GitHub:

Visualize Azure Express Route Statistics

https://github.com/tvuylsteke/azure-ea-powerbi/tree/master/Express%20Route%20Stats%20-%20V1

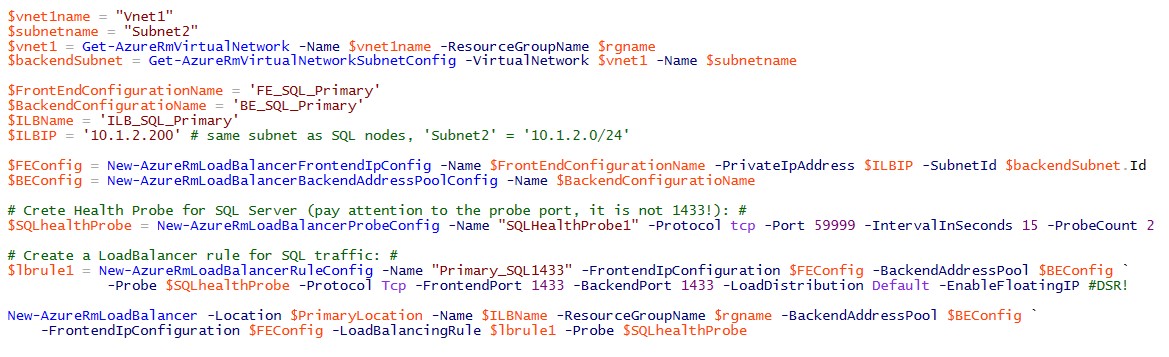

Does Azure Load Balancer support multiple IPs?

YES, Azure Load Balancer supports multiple IPs both in the public facing VIP scenario (SLB) and internal only scenario (ILB). In the first case, it is possible to have multiple public IP (VIPs) assigned to the same external load balancer, and then traffic forwarded to the same set of Virtual Machines included in one single Availability Set. It is also possible to combine this feature with multiple NICs per VM, as described in the article below:

Load balancing on multiple IP configurations using PowerShell

The configuration described above has been possible since last year; what has been introduced only recently is multiple IP support for Azure Internal Load Balancer (ILB) using internal IPs (DIPs). After initially creating an ILB with a single internal DIP, you can add more using the steps outlined in the section “Example script: Add an IP address to an existing load balancer with PowerShell” in the article below. In short, each IP address requires its own load balancing rule, probe port, and front port. It is worth mentioning that, thanks to this feature, it is now possible to deploy multiple SQL Server AlwaysOn Availability Groups (AG) using the same set of Azure VMs.

Configure one or more Always On Availability Group Listeners - Resource Manager

NOTE: Today, you can have a load balancer with multiple VIPs backed by a single VM “Backend Pool”, but you cannot use multiple Availability Sets for a single load balancer.

Does ICMP work in Azure?

It depends on where you want to use ICMP tools from and which targets you want to reach. ICMP works inside an Azure Virtual Network, between different Azure Virtual Networks connected via VPN or ExpressRoute, and over hybrid connections between on-premises networks and an Azure VNET. It will *not* work if you have to cross the Azure Load Balancer (SLB). If you need ICMP for diagnostics and troubleshooting, there are valid alternatives to PING and TRACERT that are based on TCP rather than ICMP. My favorite ones, among the many available on the Internet, are PSPING from Mark Russinovich’s SysInternals tools and NMAP:

PsPing v2.01

https://technet.microsoft.com/en-us/sysinternals/jj729731.aspx

NMAP

Inside an Azure VNET, you can use TCP, UDP, and ICMP TCP/IP protocols. Multicast, broadcast, IP-in-IP encapsulated packets, and Generic Routing Encapsulation (GRE) packets are blocked within VNets. Remember: Azure VNET is a Layer-3 virtual network.
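To illustrate the idea behind TCP-based alternatives to PING, here is a minimal sketch that times a TCP connection handshake instead of sending ICMP echo requests. It is not how PSPING or NMAP are implemented; the function name `tcp_ping` and its parameters are hypothetical, and it assumes the target is listening on the chosen port.

```python
import socket
import time


def tcp_ping(host: str, port: int, timeout: float = 2.0) -> float:
    """Return the time needed to open a TCP connection, in milliseconds.

    Raises OSError (for example a timeout) if the connection cannot be opened.
    """
    start = time.perf_counter()
    # create_connection performs the TCP three-way handshake.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0
```

For example, `tcp_ping("10.0.0.4", 3389)` would time a connection to a VM with a hypothetical private address on the RDP port, provided that port is open and allowed by your NSG rules.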

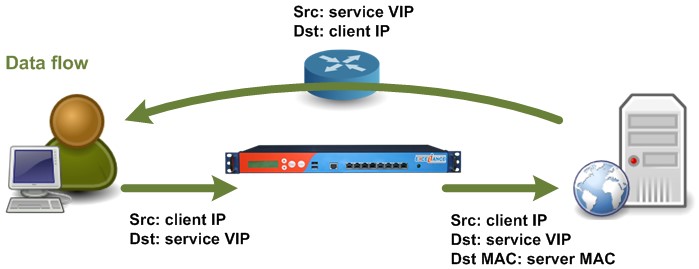

What is Direct Server Return (DSR) for Azure Load Balancer? Should I use it?

“Direct Server Return” (DSR) is probably one of the least known and most misunderstood parameters in all Azure networking object configurations, probably because it is seldom used and the documentation is not good enough. In short, it is a parameter used to enable a special behavior when configuring Azure Load Balancer load-balancing rules, both external and internal ones: when enabled, the Load Balancer does not perform any Network Address Translation (NAT) of the request and passes the incoming packet to the destination VM using the Load Balancer VIP as the destination IP, instead of the VM internal IP (DIP).

In the above picture, please note the “Dst: service VIP” label on the right. Conversely, when DSR is disabled (the default behavior), “Dst: service VIP” is replaced by the VM internal IP. It is also worth noting that the target VM will respond to the Client directly, bypassing the Load Balancer. Now the second question: should I use it? The answer is NO, unless you need it for a specific application or service. You will certainly need it if you want to use the SQL Server AlwaysOn Availability Group feature, as described in the article below:

Configure an ILB listener for SQL Server AlwaysOn Availability Groups in Azure ARM

In the last PowerShell command of the article above, DSR is enabled using the “-EnableFloatingIP” parameter. If you want to use DSR for other applications, be careful, because the VM will need to receive network packets where the destination IP is not the VM IP but the load balancer VIP. If you don’t have application or service specific support to enable this, as in the SQL Server case, you will need to add a loopback adapter to the VM and manually add the load balancer VIP to it.
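The difference in the destination address can be summarized with a toy model. The sketch below is purely illustrative: the `Packet` class, the `forward_to_backend` function and all addresses are hypothetical, and it models only the destination IP seen by the VM, not the real load balancer data path.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str  # client address
    dst: str  # destination address as seen on the wire


def forward_to_backend(packet: Packet, backend_dip: str, floating_ip: bool) -> Packet:
    """Model the destination IP the backend VM sees for a load-balanced packet."""
    if floating_ip:
        # DSR enabled: no NAT, the destination remains the load balancer VIP.
        return packet
    # Default behavior: the destination is rewritten to the VM internal IP (DIP).
    return replace(packet, dst=backend_dip)


if __name__ == "__main__":
    incoming = Packet(src="198.51.100.7", dst="203.0.113.10")  # dst is the VIP
    print(forward_to_backend(incoming, "10.0.0.4", floating_ip=False))
    print(forward_to_backend(incoming, "10.0.0.4", floating_ip=True))
```

This is why, with DSR enabled, something inside the VM (the application itself or a loopback adapter) has to accept traffic addressed to the VIP.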

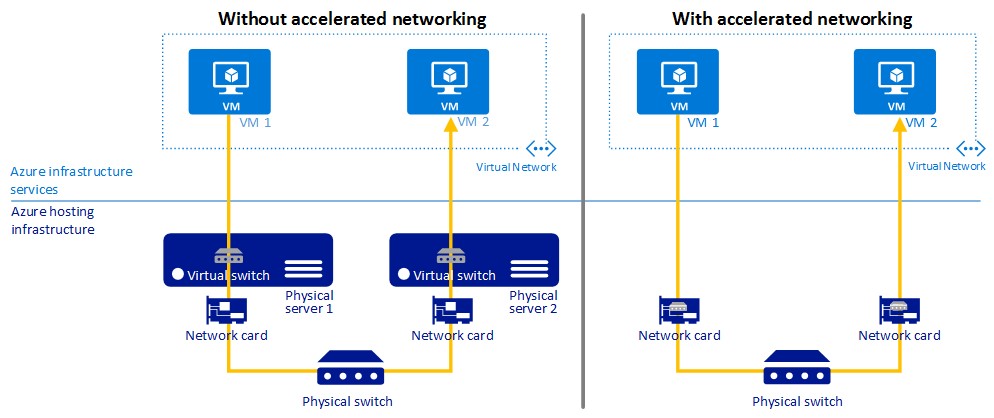

Does Azure support SR-IOV for accelerated networking?

A feature currently in preview and soon to be broadly available, called “Accelerated Networking”, enables “Single Root I/O Virtualization” (SR-IOV) for a virtual machine (VM), greatly improving its networking performance. This high-performance path bypasses the host in the datapath, reducing latency, jitter, and CPU utilization for the most demanding network workloads on supported VM types.

Accelerated networking for a virtual machine using the Azure portal

/en-us/azure/virtual-network/virtual-network-accelerated-networking-portal

Can I use Receive Side Scaling (RSS) optimization in Azure VM?

YES. Azure Host hypervisor can support “Receive Side Scaling” (RSS) at the VM level. Azure Virtual Machines have default network settings that can be further optimized for network throughput. Following the instructions in the article below, you can optimize network throughput for both Windows and Linux Guest OS, including major distributions such as Ubuntu, CentOS and Red Hat.

Optimize network throughput for Azure virtual machines

/en-us/azure/virtual-network/virtual-network-optimize-network-bandwidth

A VM using Receive Side Scaling (RSS) can reach a higher maximum throughput than a VM without RSS. RSS may be disabled by default in a Windows VM; you can easily check this by following the previous article. For Linux, starting in January 2017 this optimization is enabled by default, but you need to use a Linux Integration Services (LIS) version equal to or higher than 4.1.3.

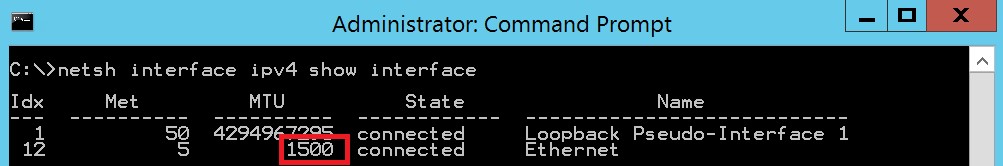

Can I manually change protocol and NIC settings at the Guest OS level?

Unless explicitly stated in official Microsoft documentation, manually overriding network interface or TCP settings at the Guest OS level is not recommended. This may cause loss of connectivity or severe VM malfunctions. Some settings at the NIC level, like MTU, should be left at their default values, in this case 1500. For DNS settings, changes are supported, but only when made from outside the VM through the Azure API/Portal/PowerShell/CLI tools. Changing the OS Firewall configuration is safe and supported, as long as you do not lock yourself out of your remote connection.

Does Azure provide SLA for VM network bandwidth?

I tested VM bandwidth limits on almost all VM sizes and found the results pretty consistent over time, so in theory nothing prevents you from taking your own measurements. What the Azure documentation provides is a relative measure of network capacity for each VM SKU/size, as you can see in the table below:

Sizes for virtual machines in Azure

https://azure.microsoft.com/en-us/documentation/articles/virtual-machines-windows-sizes

If you look at the “Max Network Bandwidth” column, you will find tags such as LOW, MODERATE, HIGH, VERY HIGH and EXTREMELY HIGH. If you find on the Internet some old tables and information about maximum network bandwidth for various VM SKUs, you should not trust them, since they are outdated and the Azure infrastructure has evolved in the meantime. The only exceptions are the Azure HPC instances, where the type of network adapter provided is exactly specified: 1 NIC at 32 Gbit/s and 1 NIC at 10 Gbit/s for A8/A9, and 1 NIC at 10 Gbit/s for A10/A11. See the link below for more information:

About H-series and compute-intensive A-series VMs for Windows

If you want to run performance tests on network throughput, be sure to use a tool that supports multi-threaded connections. I recommend the NTttcp tool mentioned in the next section, which is available for both Windows and Linux, and iPerf3 for Linux only.

Does Azure provide SLA on VM-to-VM network latency?

During my tests and projects, the Azure network always provided excellent performance in terms of latency. To my knowledge, there is no Public Cloud vendor able to guarantee a maximum network latency, backed by a formal SLA, between two different VMs. Since you don’t have much control over the Azure network infrastructure, there are only a few things you can try in order to optimize not only latency but networking performance in general:

- Choose the appropriate VM SKU, since different SKUs have different networking equipment and therefore different underlying performance. As explained in the previous section, each VM SKU has a classification for networking capabilities; bandwidth is what is highlighted, but latency differs as well.

- Place VMs that need to communicate frequently in the same Azure VNET. Be aware that this will not guarantee a single hop between all your VMs: with the introduction of regional VNETs, your virtual network can span multiple Azure Host Clusters in the same datacenter.

- If you want maximum proximity, and want to ensure VMs are in the same Azure Host Cluster, put the relevant VMs in the same Availability Set (AS). Be aware that this is not what AS is intended for, and it may have an impact on planned maintenance and availability of your VMs.

- Even if it is still in preview, the best thing you can test is Azure “Accelerated Networking”; see the previous section "Does Azure support SR-IOV for accelerated networking?" to discover how to do that.

- Even if the main improvement is in network throughput, enabling RSS at the Guest OS level, on both Linux and Windows, can also improve latency; see the previous section “Can I use Receive Side Scaling (RSS) optimization in Azure VM?” for more details.

- For Linux, be sure to use the latest LIS driver: starting with version 4.1.3, Microsoft introduced many performance improvements and some bug fixes, so upgrading this component is highly recommended.

- If your VMs are in different VNETs connected using VPN or ExpressRoute, and are in the same region, consider using “Virtual network peering” instead.

It’s very easy to run your own tests and get a good understanding of average latencies, but you need to be very careful with your test criteria. First of all, test at various hours of the day, including peak and off-peak hours, and on various days of the week, including weekends and working days. Then calculate the average and also consider a percentile near the 95th to eliminate peaks and occasional strange values. Additionally, don’t assume latencies will be the same in all Azure datacenters. If you want to do your own tests on Windows, I recommend the PSPING tool from the SysInternals suite, which you can find here: http://technet.microsoft.com/en-us/sysinternals/bb896649.aspx. What is nice about this tool is that it does not use ICMP, so it has no problem traversing firewalls and load balancers; you can decide which TCP port to use and, very importantly, you can test throughput, not only latency. I also recommend the NTttcp tool, available for both Windows and Linux at the links below, especially if you want to test network throughput and performance:

NTttcp Utility: Profile and Measure Windows Networking Performance

https://gallery.technet.microsoft.com/NTttcp-Version-528-Now-f8b12769

NTTTCP-for-Linux

https://github.com/Microsoft/ntttcp-for-linux
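Once you have collected latency samples with your tool of choice, the average and the 95th percentile suggested above can be computed with a few lines. This is a minimal sketch using the nearest-rank percentile method, which is one of several common definitions; the function name `summarize_latency` is hypothetical and the sample values are made up.

```python
import statistics


def summarize_latency(samples_ms):
    """Return (mean, p95) of latency samples, in the same unit as the input."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    # Nearest-rank method: rank = ceil(0.95 * n), computed with integers.
    rank = (95 * len(ordered) + 99) // 100
    p95 = ordered[rank - 1]
    return statistics.fmean(ordered), p95


if __name__ == "__main__":
    # 19 ordinary samples plus one outlier (made-up values).
    samples = [1.0] * 19 + [50.0]
    mean, p95 = summarize_latency(samples)
    print(f"mean={mean:.2f} ms, p95={p95:.2f} ms")
```

In the made-up sample, a single 50 ms spike pulls the mean well above 1 ms while the 95th percentile stays at 1 ms, which is why looking at both values is useful.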

Does Azure backend traffic go through the public Internet?

Azure traffic between our datacenters stays on our network and does not flow over the Internet. This includes all traffic between Microsoft services anywhere in the world. For example, within Azure, traffic between virtual machines, storage, and SQL communication traverses only the Microsoft network, regardless of the source and destination region. Intra-region VNet-to-VNet traffic, as well as cross-region VNet-to-VNet traffic, stays on the Microsoft network. See the article below, authored by Yousef Khalidi (CVP, Azure Networking), for great and comprehensive details:

How Microsoft builds its fast and reliable global network

https://azure.microsoft.com/en-us/blog/how-microsoft-builds-its-fast-and-reliable-global-network

Microsoft owns and operates its own dark fiber to support the WAN connecting 38 Azure regions (at the time of writing this article) and hundreds of datacenters. Customer traffic enters our global network through strategically placed Microsoft edge nodes, our points of presence (PoP). These edge nodes are directly interconnected to more than 2,500 unique Internet partners through thousands of connections in more than 130 locations.

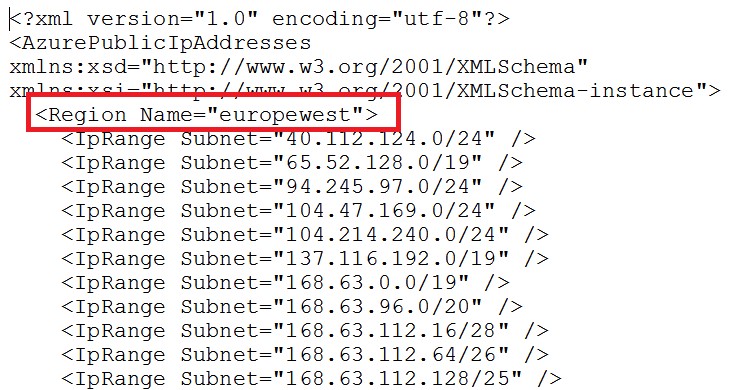

Can I white-list Azure public IP addresses?

YES, all Azure public IP ranges are published at the link below. If you want to white-list them on your on-premises network for security reasons, you can do that selectively by region but not by service.

Microsoft Azure Datacenter IP Ranges

http://www.microsoft.com/en-us/download/details.aspx?id=41653

From the link above you can download an XML file, divided into sections per region, but there is currently no way to retrieve the same information using an API. The Azure Networking team is working to address this issue:

Provide a rest api to access the list of Azure IP Addresses
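Until an API is available, the downloaded XML file can be processed locally. The sketch below assumes a layout with `Region` elements carrying a `Name` attribute and nested `IpRange` elements carrying a `Subnet` attribute; please verify this against the file you actually download. The region names and address ranges in the sample are made up (documentation ranges), and both function names are hypothetical.

```python
import ipaddress
import xml.etree.ElementTree as ET

# Made-up sample mimicking the ASSUMED layout of the downloadable file.
SAMPLE_XML = """<AzurePublicIpAddresses>
  <Region Name="region-a">
    <IpRange Subnet="198.51.100.0/24" />
    <IpRange Subnet="203.0.113.0/25" />
  </Region>
  <Region Name="region-b">
    <IpRange Subnet="192.0.2.0/24" />
  </Region>
</AzurePublicIpAddresses>"""


def ranges_by_region(xml_text):
    """Map each region name to the list of its IP networks."""
    root = ET.fromstring(xml_text)
    return {
        region.get("Name"): [
            ipaddress.ip_network(ip_range.get("Subnet"))
            for ip_range in region.iter("IpRange")
        ]
        for region in root.iter("Region")
    }


def region_of(address, regions):
    """Return the first region whose ranges contain the address, else None."""
    ip = ipaddress.ip_address(address)
    for name, networks in regions.items():
        if any(ip in net for net in networks):
            return name
    return None


if __name__ == "__main__":
    regions = ranges_by_region(SAMPLE_XML)
    print(region_of("203.0.113.10", regions))
```

The per-region dictionary can then be used to generate white-list entries for the regions you care about.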

If you rely on specific Azure resources, for example an Azure SQLDB instance or blob storage account, be aware that the assigned IP you see when resolving their specific DNS names may change without notice. IMPORTANT: don’t rely on any geo-location service for Internet IPs used by Azure, since it may report incorrect information; for more details see the blog post below:

Microsoft Azure’s use of non-US IPv4 address space in US regions

Be aware that the Internet-facing public IP (VIP) associated with your resources may change under some conditions. If you need to be sure this will never happen, you need to enable the "Reserved IP" feature, as described in the article below:

IP address types and allocation methods in Azure

/en-us/azure/virtual-network/virtual-network-ip-addresses-overview-arm

If you still need to use legacy Cloud Services, see the article below on how to use Reserved IP using ASM API:

Reserved IP addresses for Cloud Services & Virtual Machines

http://azure.microsoft.com/blog/2014/05/14/reserved-ip-addresses

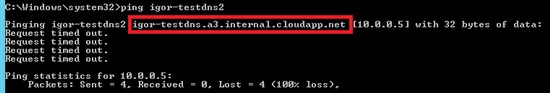

What is “*.internal.cloudapp.net” DNS suffix in Azure VM?

If you build an Azure VM, join it to an Azure Virtual Network and try to ping it using the VM host name (the legacy NetBIOS name, to be clear), this is what you will see:

As you can see in the picture, there are two strange parts in the FQDN: there is nothing strange in “cloudapp.net”, but what are “internal” and “a3”? You can easily guess that “internal” is the default DNS sub-zone that Azure internal DNS (iDNS) uses to host records for VMs and resolve them to the internal VM IP (DIP). “a3” instead is a little more complex: in short, it describes the network zone, inside the specific Azure datacenter, where the VM is allocated. There is no official documentation or list of these zones, so don’t ask for more, but please note that it will change not only between Azure datacenters, but also inside a single datacenter. You can programmatically retrieve the internal DNS settings using the simple PowerShell command below:

(Get-AzureRmNetworkInterface -Name "mynicname" -ResourceGroupName $rgname).DnsSettings.InternalFqdn

Output will be something like the string below:

InternalDomainNameSuffix : 0fcdtenvgl4urm0sv5aj1xkcrc.fx.internal.cloudapp.net
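If you need to pick these names apart in a script, a minimal sketch could look like the one below. It assumes, based only on the examples in this post, that the zone is the label immediately to the left of “internal.cloudapp.net”; since the zones are undocumented, treat that as an assumption. The function name `split_internal_name` is hypothetical.

```python
AZURE_INTERNAL_SUFFIX = "internal.cloudapp.net"


def split_internal_name(name: str) -> dict:
    """Split '<prefix>.<zone>.internal.cloudapp.net' into its parts."""
    labels = name.rstrip(".").lower().split(".")
    suffix_labels = AZURE_INTERNAL_SUFFIX.split(".")
    n = len(suffix_labels)
    # Require the fixed suffix plus at least a zone label and one prefix label.
    if len(labels) < n + 2 or labels[-n:] != suffix_labels:
        raise ValueError(f"not an Azure internal DNS name: {name!r}")
    return {
        "prefix": ".".join(labels[: -n - 1]),
        "zone": labels[-n - 1],  # ASSUMED position of the zone label
        "suffix": AZURE_INTERNAL_SUFFIX,
    }


if __name__ == "__main__":
    print(split_internal_name("0fcdtenvgl4urm0sv5aj1xkcrc.fx.internal.cloudapp.net"))
```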

Which protocols does Azure Load Balancer support?

Azure Load Balancer, for both external and internal connections, supports only TCP (Protocol ID “6”) or UDP (Protocol ID “17”). It cannot support protocols like ICMP (Protocol ID “1”). As an example, IPSec (and any VPN using it) is not supported either: you would need to open UDP port 500 (which is fine) and also permit IP protocol numbers 50 and 51. UDP port 500 is needed to allow Internet Security Association and Key Management Protocol (ISAKMP) traffic to be forwarded through Azure Load Balancer. IP protocol ID 50 is needed to allow IPSec Encapsulating Security Payload (ESP) traffic to be forwarded. Finally, IP protocol ID 51 is needed to allow Authentication Header (AH) traffic to be forwarded. As an additional note, since this would be interesting for some scenarios, Azure internal load balancer (ILB) does *not* support “any-protocol/any-traffic” today.

Protocol Numbers

http://www.iana.org/assignments/protocol-numbers/protocol-numbers.xhtml
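The reasoning above boils down to a simple set check: an application can sit behind the load balancer only if every IP protocol it needs is TCP or UDP. The sketch below encodes just the protocol numbers mentioned in this section; the function names are hypothetical.

```python
# IANA protocol numbers mentioned in this section.
IP_PROTOCOLS = {1: "ICMP", 6: "TCP", 17: "UDP", 50: "ESP", 51: "AH"}

# Per this post, Azure Load Balancer forwards only TCP and UDP.
LOAD_BALANCER_SUPPORTED = {6, 17}


def can_cross_load_balancer(protocol_ids) -> bool:
    """True only if every required IP protocol is TCP or UDP."""
    return set(protocol_ids) <= LOAD_BALANCER_SUPPORTED


def blocked_protocols(protocol_ids) -> list:
    """Names of the required protocols the load balancer will not forward."""
    blocked = sorted(set(protocol_ids) - LOAD_BALANCER_SUPPORTED)
    return [IP_PROTOCOLS.get(pid, str(pid)) for pid in blocked]


if __name__ == "__main__":
    ipsec = [17, 50, 51]  # ISAKMP over UDP 500, plus ESP and AH
    print(can_cross_load_balancer(ipsec), blocked_protocols(ipsec))
```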

How many connections can a VM support?

For Windows Server, a VM can support about 500k TCP connections, but you need to be careful about other potential limits that may come into play before this threshold: if you expose your VM to Internet traffic through an Azure Load Balancer endpoint, you may be limited by SLB capacity or DDoS security mechanisms. Currently, there is no public documentation on SLB or Endpoint limits, either on the number of connections or on network bandwidth, so you should conduct your own performance tests to ensure everything will work correctly.

Can I have multiple VNET Gateways?

Currently you can have only one virtual network Gateway per VNET per type, that is, one for VPN and one for ExpressRoute. With VPN, if you configure multiple tunnels, they will share the same maximum network bandwidth. If you need more throughput for a VPN connection, you may want to consider using Network Virtual Appliances (NVA) or 3rd-party VPN software like OpenSwan and StrongSwan with big enough Azure VM SKUs/sizes.

Can ExpressRoute and VPN coexist in the same VNET?

YES, it is possible to have a VNET with gateways supporting ExpressRoute and VPN at the same time. The only requirement is to enable ExpressRoute before VPN. The restriction of one Gateway per type per VNET still applies.

New Networking Capabilities for a Consistent, Connected and Hybrid Cloud

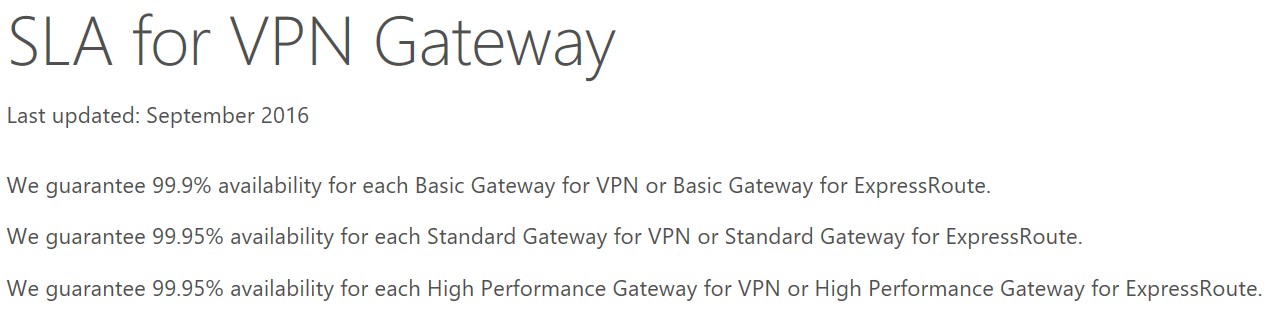

Do I need a Gateway to connect my VNET to ExpressRoute?

YES, if you want to use ExpressRoute (ER) to connect your VNET, you need to configure the “Private Peering” routing domain and then deploy a Virtual Network Gateway inside your Azure Virtual Network. This implies two important facts:

- Several Virtual Network Gateway sizes can be used with ER circuits: be sure to choose a size (1000, 2000 or 10000 Mbps) that can support your VNET traffic requirements, otherwise you will not be able to use all the bandwidth allocated to your circuit (50 Mbps, 100 Mbps, 200 Mbps, 500 Mbps, 1 Gbps or 10 Gbps).

- The Virtual Network Gateway, depending on its type, and the ER circuit have different SLAs, so be sure to review them against your requirements. We guarantee a minimum of 99.95% ExpressRoute Dedicated Circuit availability, and between 99.90% and 99.95% for the Gateway:

SLA for ExpressRoute

https://azure.microsoft.com/en-us/support/legal/sla/expressroute/v1_2

SLA for VPN Gateway

https://azure.microsoft.com/en-us/support/legal/sla/vpn-gateway/v1_2
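
The two points above can be turned into quick back-of-the-envelope arithmetic. The Python sketch below is only an estimate under my own assumptions: the usable bandwidth is taken as the smaller of the circuit and gateway sizes, and the combined availability treats the circuit and the gateway as independent components in series. Neither function is an official Azure formula, and the combined figure is not a contractual SLA.

```python
def usable_bandwidth_mbps(circuit_mbps, gateway_mbps):
    """VNET traffic is capped by the slower of the circuit and the gateway."""
    return min(circuit_mbps, gateway_mbps)


def combined_availability(*availabilities):
    """Multiply availabilities of components in series (assumes independent failures)."""
    result = 1.0
    for availability in availabilities:
        result *= availability
    return result


# A 10 Gbps circuit behind a 2000 Mbps gateway cannot use more than the gateway allows.
print(usable_bandwidth_mbps(10_000, 2_000), "Mbps usable")

# A 99.95% circuit combined with a 99.90% gateway, as a rough estimate only.
estimate = combined_availability(0.9995, 0.9990)
print(f"Estimated end-to-end availability: {estimate:.2%}")
```

The estimate comes out lower than either individual SLA, which is the reason to review both documents rather than only the one for the circuit.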

Can I connect VNETs inside and outside a region?

YES, Azure provides three ways to connect VNETs: ExpressRoute, VPN and Virtual Network Peering. The last one has been recently released and lets you connect two VNETs in the same region without a gateway, which the other options require. For this feature, there is a nominal fee based on the number of GB transferred between the peered VNETs.

Virtual Network Peering

/en-us/azure/virtual-network/virtual-network-peering-overview

With VPN, there is no boundary on region, geography or subscription: essentially, the tunnel only needs the Gateway VIPs to reach each other. If you need more throughput and lower latency, you can use ExpressRoute to connect up to 100 VNETs and share circuits between them, even across different subscriptions. Before the Premium add-on was announced in May 2015, ExpressRoute imposed limitations on geographical boundaries: now it is possible to connect VNETs not only in the same geography (US, Europe, etc.) but also across different geographies. For example, you can connect a VNET in West US to a VNET in North Europe.

New Networking Capabilities for a Consistent, Connected and Hybrid Cloud

Does Azure provide DDoS network protection?

YES, Azure infrastructure is designed to protect the network from DDoS attacks originating from the Internet and also internally from other tenants' VMs. You can read the details in the whitepaper below:

Microsoft Azure Network Security Whitepaper version 3 is now available

Please be aware of the following important points:

- Windows Azure’s DDoS defense system is designed not only to withstand attacks from the outside, but also from within.

- Windows Azure monitors and detects internally initiated DDoS attacks and removes offending VMs from the network.

- Windows Azure’s DDoS protection also benefits applications. However, it is still possible for applications to be targeted individually. As a result, customers should actively monitor their Windows Azure applications.

To augment your tenant security, you can deploy 3rd-party Network Virtual Appliances (NVA) in your Virtual Network, but be aware that a custom DDoS solution to protect Azure public IPs (VIPs) is not supported.

Are Broadcast and Multicast supported within Azure Virtual Network?

This type of communication is not allowed inside a VNET or even across Azure Load Balancer.

Support Multicast within Virtual Networks

You can use TCP, UDP, and ICMP TCP/IP protocols within VNets. Multicast, broadcast, IP-in-IP encapsulated packets, and Generic Routing Encapsulation (GRE) packets are blocked within VNets.
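
To make the quoted rule more concrete, here is a small Python sketch that classifies a packet by IP protocol number and destination address. It is purely illustrative and based only on the sentence above: the function name `classify`, the example subnet and the "unspecified" outcome for protocols the sentence does not mention are my own assumptions, not Azure behavior I am documenting.

```python
import ipaddress

# IP protocol numbers (IANA): ICMP=1, IP-in-IP=4, TCP=6, UDP=17, GRE=47.
ALLOWED_PROTOCOLS = {1, 6, 17}
BLOCKED_ENCAPSULATIONS = {4, 47}
LIMITED_BROADCAST = ipaddress.ip_address("255.255.255.255")


def classify(protocol, destination, subnet):
    """Return 'allowed', 'blocked' or 'unspecified' according to the quoted rule."""
    dst = ipaddress.ip_address(destination)
    net = ipaddress.ip_network(subnet)
    if protocol in BLOCKED_ENCAPSULATIONS:
        return "blocked"  # IP-in-IP and GRE
    if dst.is_multicast or dst == net.broadcast_address or dst == LIMITED_BROADCAST:
        return "blocked"  # multicast and broadcast destinations
    if protocol in ALLOWED_PROTOCOLS:
        return "allowed"  # unicast TCP, UDP or ICMP
    return "unspecified"  # not covered by the quoted sentence


# Hypothetical subnet 10.0.0.0/24, used only for illustration.
print(classify(6, "10.0.0.5", "10.0.0.0/24"))
print(classify(17, "224.0.0.251", "10.0.0.0/24"))
print(classify(47, "10.0.0.5", "10.0.0.0/24"))
```

A unicast TCP packet is classified as allowed, while a UDP packet to a multicast group and any GRE packet are classified as blocked.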

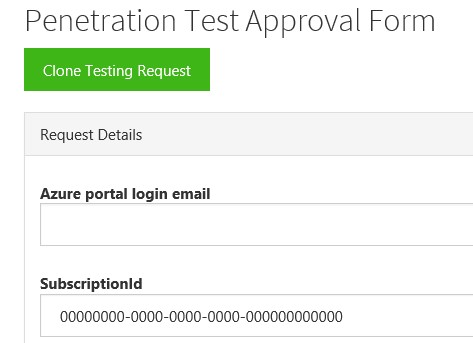

Is it possible to run network penetration tests against my Azure VMs?

YES, you can, but it is highly recommended to follow the procedure mentioned below before running the test, otherwise Azure monitoring and defense systems will kick in and blacklist your connections, IPs and/or VMs. Be sure your request has been approved before starting the test. You can find the form to fill in at the bottom of the page at the URL below:

Penetration Test Notification Process

https://security-forms.azure.com/penetration-testing/terms

Microsoft conducts regular penetration testing to improve Azure security controls and processes. We understand that security assessment is also an important part of our customers' application development and deployment. Therefore, we have established a policy for customers to carry out authorized penetration testing on their applications hosted in Azure. Because such testing can be indistinguishable from a real attack, it is critical that customers conduct penetration testing only after notifying Microsoft. Penetration testing must be conducted in accordance with our terms and conditions.
