Having a BLAST on Windows HPC with Windows Azure burst (1/3)

My previous post showed you how to make a movie using ray-tracing software and the powerful HPC scheduler on Azure with little programming. Today, we’ll look at a more complex example, in a series of three posts over the coming months: 1. How BLAST works with the parametric sweep engine. 2. Details of genome database management. 3. Adding a REST-based UI to control the process.

A BLAST search lets a researcher compare a query sequence with a library or database of sequences and identify library sequences that resemble the query sequence above a certain threshold. Different types of BLAST are available, depending on the query sequences. In our case, we used the BLAST software from:

https://www.ncbi.nlm.nih.gov/staff/tao/URLAPI/pc_setup.html

To take advantage of Azure’s scale-out capability, we need a way to take the queries we want to run and parallelize them. In the case of BLAST this was easy. Your queries are thousands of entries in the format of:

>gi|27543|emb|Z20800.1| HSAAACWWS X, Human Liver tissue Homo sapiens cDNA similar to (Genbank M12158) orangutan adult alpha-2-globin gene., mRNA sequence

GCCACCGCCGAGTTCACCCCTGCAGTGCACGCCTCCCTGGACAAGTTCCTGGCTTCTGTGAGCACCGTGCTGACCTCCAA

ACACCGTTAAGCTGGAGCCTCGGTAGCCGTTCCTCCTGCCCACTGGACTCCCAACAGGCCCTCCTCCCCTCCTTGCACCG

GCCCTTCCTGGTCTTTGAATAAAGTCTAAGCGGGCAGC
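Since every entry like the one above begins with a `>` header line, a query file can be cut into smaller files on those header lines, so that no entry is split in half. Here is a minimal Python sketch of that idea. The function names and the `input.000`-style file naming are my own illustrative assumptions, not part of the tools used in this post.

```python
from pathlib import Path


def split_fasta(text, n_chunks):
    """Split query text into at most n_chunks strings without cutting an entry."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    records = []
    for line in text.splitlines():
        if line.startswith(">"):
            records.append([line])        # a header line opens a new entry
        elif line.strip() and records:
            records[-1].append(line)      # sequence line of the current entry
    if not records:
        return []
    # Deal entries out round-robin so the chunks stay roughly the same size.
    chunks = [[] for _ in range(min(n_chunks, len(records)))]
    for i, record in enumerate(records):
        chunks[i % len(chunks)].append("\n".join(record))
    return ["\n".join(chunk) + "\n" for chunk in chunks]


def write_chunks(chunks, out_dir, prefix="input"):
    """Write chunks as input.000, input.001, ... (hypothetical naming)."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, chunk in enumerate(chunks):
        path = out / f"{prefix}.{i:03d}"
        path.write_text(chunk)
        paths.append(path)
    return paths
```

For example, `write_chunks(split_fasta(Path("test_query.txt").read_text(), 1000), "chunks")` would produce up to 1000 files, each holding whole entries only.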

The BLAST input is broken into a series of smaller input files. The “Run.cmd” command calls blastn with its arguments and the split input files on blob storage. You can use sed or split to split the file. I did the splitting under Cygwin; “split test_query.txt -n 1000 -d” did the trick for me, since I don’t mind losing a few queries at the chunk boundaries just to test things out. If you want to make it look nicer, you can rename all the files to input.xxx using your favorite scripting language’s foreach command. Then, just like the movie rendering example you have seen (Youtube video), we make a command file like the one below: 1. Copy the input file from blob storage to the local node. 2. Run BLAST. 3. Copy the output back to blob storage. (Note: You can find a copy of AzureBlobCopy.exe here before 5/7/11.)

%root%\AzureBlobCopy\AzureBlobCopy.exe -Action Download -BlobContainer inputncbi -LocalDir %inputdir% -FileName %inputFile%

%root%\bin\blastn.exe -db est_human -query %inputdir%\%inputFile% -out %outputdir%\%outputFile%

%root%\AzureBlobCopy\AzureBlobCopy.exe -Action Upload -BlobContainer outputncbi -LocalDir %outputdir% -FileName %outputFile%

This is the command line you run using the job scheduler.
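To make it concrete how 1000 tasks can share one command file, here is a small Python sketch that expands the three lines above for a given task index. The command templates are copied from this post. The index-based `input.NNN` / `output.NNN` names and the example directories are assumptions for illustration, and the sketch does not show how the scheduler itself passes these values to Run.cmd.

```python
# Command templates copied from the command file shown in this post.
TEMPLATE = [
    r"%root%\AzureBlobCopy\AzureBlobCopy.exe -Action Download "
    r"-BlobContainer inputncbi -LocalDir %inputdir% -FileName %inputFile%",
    r"%root%\bin\blastn.exe -db est_human "
    r"-query %inputdir%\%inputFile% -out %outputdir%\%outputFile%",
    r"%root%\AzureBlobCopy\AzureBlobCopy.exe -Action Upload "
    r"-BlobContainer outputncbi -LocalDir %outputdir% -FileName %outputFile%",
]


def expand(line, variables):
    """Replace each %name% placeholder with its value."""
    for name, value in variables.items():
        line = line.replace(f"%{name}%", value)
    return line


def commands_for_task(index, root, inputdir, outputdir):
    """Return the three command lines for one task (file naming is assumed)."""
    variables = {
        "root": root,
        "inputdir": inputdir,
        "outputdir": outputdir,
        "inputFile": f"input.{index:03d}",    # hypothetical naming scheme
        "outputFile": f"output.{index:03d}",  # hypothetical naming scheme
    }
    return [expand(line, variables) for line in TEMPLATE]


if __name__ == "__main__":
    for command in commands_for_task(7, r"C:\blast", r"C:\work\in", r"C:\work\out"):
        print(command)
```

Only the file names change from task to task, which is what makes the workload a good fit for a parametric sweep.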

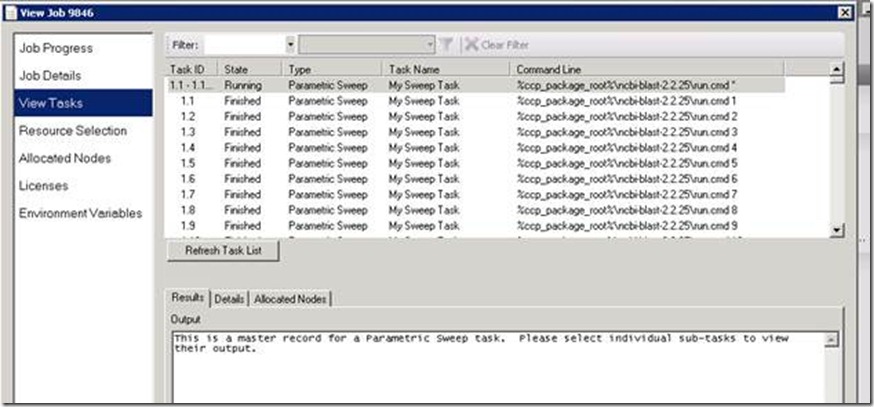

Here’s the Job View of 1000 tasks, each containing queries, running distributed across a 10-node Azure burst cluster.

The job that I submitted this morning is not quite done yet: BLAST can consume the computing power of thousands of nodes.

The outputs are large files; I should’ve compressed them to save space!

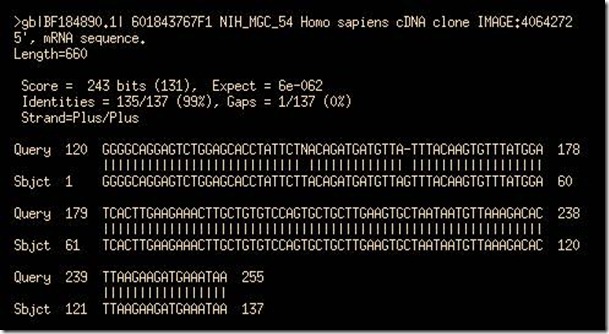

Sample output from one of the files above.
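On the compression point: one way to shrink an output file before uploading it would be to gzip it on the node. This is a minimal Python sketch using only the standard library. The function name is my own, and it is not something the job in this post actually ran.

```python
import gzip
import shutil
from pathlib import Path


def compress_output(path):
    """Write a gzip-compressed copy of path next to it and return the new path."""
    src = Path(path)
    dst = src.with_name(src.name + ".gz")
    # Stream the data so a large output file never has to fit in memory.
    with src.open("rb") as fin, gzip.open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout)
    return dst
```

The upload step would then need to point at the `.gz` file instead of the original output file.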

Once again, this shows that little or no programming is required to scale your computing workload to Azure using HPC Azure burst. In the next post we’ll cover how to deal with the large databases as a pre-task, so that each node has a local copy of these huge databases to search against.

Thank you for reading this blog.