How to document your infrastructure in an XML file by using the parallelexecution PowerShell module

Hello again

Today, I will showcase how we can leverage the parallelexecution PowerShell module to document a whole environment.

We will use advanced features such as commands files and parallel script execution. For this example, we will work against an Active Directory environment, but it could easily be modified to document any other kind of infrastructure that supports PowerShell, such as Exchange, SharePoint, Lync and many others.

IMPORTANT NOTE: This code is a sample that will probably crash due to an out-of-memory condition if executed as is against a big enterprise Active Directory, so please use it carefully and understand what you are doing before running it against a production AD.

All code I will use for this example is published on github here:

https://github.com/fernandorubioroman/DocumentAD/

The main code we will use is this

#requires -modules parallelexecution

#requires -version 3

#First we get an array of Domains, of all DCs in the Forest and of the best ones (in the same site as we are) to collect extensive domain data from them

$domains=(Get-ADForest).domains

$dcs=@()

$bestdcs=@()

$date=get-date -Format yyyyMMddHHmm

foreach ($domain in $domains){

$dcs+=get-addomaincontroller -filter * -server $domain

$bestdcs+=get-addomaincontroller -server $domain

}

#Then we get the information per domain

$bestdcs| select -ExpandProperty hostname| Start-ParallelExecution -InputCommandFile .\commandDomain.csv -OutputFile ".\output_domains_$($date).xml" -TimeoutInSeconds 900 -Verbose

#Then we get the information we want per DC

$dcs| select -ExpandProperty hostname | Start-ParallelExecution -InputCommandFile .\commandDC.csv -OutputFile ".\output_DCs_$($date).xml" -TimeoutInSeconds 900 -ScriptFolder .\scripts -Verbose

#we compress and delete the output files

Get-ChildItem -Path .\ |where{$_.Extension -eq ".xml"} | Compress-Archive -DestinationPath .\DocumentedAD$date.zip

Get-ChildItem -Path .\ |where{$_.Extension -eq ".xml"} | remove-item

Let's understand that code line by line.

#requires -modules parallelexecution

#requires -version 3

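As we said, we will use the parallelexecution module, so if you have not yet installed it on your collection machine, go review this article.

We also need at least version 3 of PowerShell (but really, on the machine you use to launch scripts you should be using PowerShell 5 anyway). Note that Compress-Archive, used at the end of the script, only ships with PowerShell 5.0 and later, so the compression step needs that version.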

#First we get an array of Domains, of all DCs in the Forest and of the best ones (in the same site as we are) to collect extensive domain data from them

$domains=(Get-ADForest).domains

$dcs=@()

$bestdcs=@()

$date=get-date -Format yyyyMMddHHmm

foreach ($domain in $domains){

$dcs+=get-addomaincontroller -filter * -server $domain

$bestdcs+=get-addomaincontroller -server $domain

}

We first get the list of domains from the AD forest, then we initialize a couple of arrays. $dcs will store all Domain Controllers in the forest. $bestdcs will contain one Domain Controller per domain, one that is near (in the same site as) the machine we are running the collection from. We use it to collect the extensive AD data that is replicated at the domain level and that we therefore only need to collect once per domain.

We also get the current date and time, so that output files are timestamped. Finally, we iterate over the domains, populating the $dcs and $bestdcs arrays.
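
A side note on the timestamp: in .NET date format strings, hh is the 12-hour clock and HH is the 24-hour clock. The following is only a small sketch with a made-up fixed date (the variable names are mine) that shows why the 24-hour form is the safer choice for file names, since with hh a morning run and an evening run can produce the same stamp.

```powershell
# A fixed evening time (26 Jan 2018, 22:41) so the difference is visible.
$sample = Get-Date -Year 2018 -Month 1 -Day 26 -Hour 22 -Minute 41 -Second 0

# Invariant culture keeps the result independent of regional settings.
$invariant = [System.Globalization.CultureInfo]::InvariantCulture

$twelveHour     = $sample.ToString('yyyyMMddhhmm', $invariant)   # 201801261041
$twentyFourHour = $sample.ToString('yyyyMMddHHmm', $invariant)   # 201801262241

"12-hour stamp: $twelveHour"
"24-hour stamp: $twentyFourHour"
```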

#Then we get the information per domain

$bestdcs| select -ExpandProperty hostname| Start-ParallelExecution -InputCommandFile .\commandDomain.csv -OutputFile ".\output_domains_$($date).xml" -TimeoutInSeconds 900 -Verbose

Now is when the magic begins. We take the list of preferred DCs per domain (objects with lots of properties), extract just the hostnames, as that is all we are interested in (select -ExpandProperty hostname), and pipe that list of machines into Start-ParallelExecution. Let's look at the parameters we are using.

-InputCommandFile .\commandDomain.csv

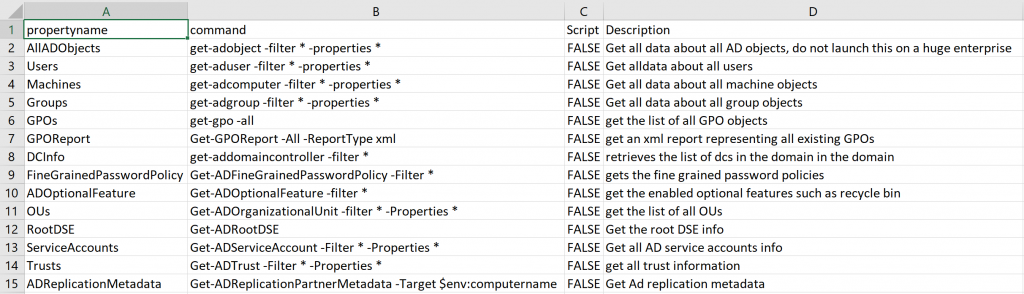

So instead of asking our engine to execute a single command, we use a commands file. This is a #-separated file (we didn't use “,” or “;” because these characters show up from time to time inside PowerShell commands). In our example, commandDomain.csv looks like this:

propertyname#command#Script#Description

AllADObjects#get-adobject -filter * -properties *#false#Get all data about all AD objects, do not launch this on a huge enterprise

Users#get-aduser -filter * -properties *#false#Get alldata about all users

Machines#get-adcomputer -filter * -properties *#false#Get all data about all machine objects

Groups#get-adgroup -filter * -properties *#false#Get all data about all group objects

GPOs#get-gpo -all#false#get the list of all GPO objects

GPOReport#Get-GPOReport -All -ReportType xml#false#get an xml report representing all existing GPOs

DCInfo#get-addomaincontroller -filter *#false#retrieves the list of dcs in the domain in the domain

FineGrainedPasswordPolicy#Get-ADFineGrainedPasswordPolicy -Filter *#false#gets the fine grained password policies

#ADOptionalFeature#Get-ADOptionalFeature -filter *#false#get the enabled optional features such as recycle bin

OUs#Get-ADOrganizationalUnit -filter * -Properties *#false#get the list of all OUs

RootDSE#Get-ADRootDSE#False#Get the root DSE info

ServiceAccounts#Get-ADServiceAccount -Filter * -Properties *#False#Get all AD service accounts info

Trusts#Get-ADTrust -Filter * -Properties *#False#get all trust information

ADReplicationMetadata#Get-ADReplicationPartnerMetadata -Target $env:computername#False#Get Ad replication metadata

It's easier to read or modify if you open it in Excel and use # as the separator.

Our commands file has 4 columns. propertyname is just a name that will be used to store the results (more on this later). command is the actual command or script we want to execute: for a command, as in this example, we put the actual code we want executed; for a script, we put the name of the script file. The Script column specifies whether we are running a command (false) or a script (true). And finally, Description is a text that helps describe what the command does.
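
If you prefer the console to Excel, the same four columns can be inspected with the built-in CSV cmdlets. This is only a sketch of how you could read such a file yourself, not how the module parses it internally; the temporary file name and the commented-out row are made up for the example.

```powershell
# Hypothetical small commands file written to a temporary location.
$commandFile = Join-Path ([System.IO.Path]::GetTempPath()) 'commandSample.csv'
@'
propertyname#command#Script#Description
Services#get-service#False#Get the services information
#Disabled#get-process#False#This row is commented out
Audit#auditpol.ps1#true#get the audit policy of the machine using auditpol
'@ | Set-Content -Path $commandFile

# Drop comment and blank lines ourselves, then parse with '#' as the delimiter.
$commands = Get-Content -Path $commandFile |
    Where-Object { $_ -and -not $_.StartsWith('#') } |
    ConvertFrom-Csv -Delimiter '#'

# Split the rows by the Script column (the values are strings, not booleans).
$scriptRows  = @($commands | Where-Object { $_.Script -eq 'true' })
$commandRows = @($commands | Where-Object { $_.Script -ne 'true' })

Remove-Item -Path $commandFile
$commands | Format-Table propertyname, Script, command -AutoSize
```

One caveat with this approach: a command that itself contains a # or starts with a double quote would confuse a plain CSV parse, so treat it as a reading aid rather than a validator.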

-OutputFile ".\output_domains_$($date).xml"

The next parameter is the file we want to save the collected info to. We could just send the results to the console or to a variable, but in this case we want to keep them. We include the date in the file name so that, if you end up creating a scheduled task for this, it is easy to take your time machine and see how the environment looked on a specific date.

-TimeoutInSeconds 900

This parameter specifies for how long we are going to wait for each command to finish before failing.
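
The module takes care of the timeout for you. Just to illustrate what a per-command timeout means, here is a minimal sketch using the built-in job cmdlets. It is not the module's code, and the 30 and 2 second values are arbitrary numbers chosen for the demo.

```powershell
# Start a job that deliberately takes longer than we are willing to wait.
$job = Start-Job -ScriptBlock { Start-Sleep -Seconds 30; 'done' }

# Wait-Job returns the job if it finished in time, or nothing on timeout.
$finished = Wait-Job -Job $job -Timeout 2
$timedOut = $null -eq $finished

if ($timedOut) {
    Write-Warning 'Command did not finish in time, giving up on it.'
    Stop-Job -Job $job
} else {
    Receive-Job -Job $job
}

# Clean up the job object either way.
Remove-Job -Job $job -Force
```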

-Verbose

This parameter turns on verbose output, so instead of running silently the module reports what it is doing as it goes.

Going back to our code…

#Then we get the information we want per DC

$dcs| select -ExpandProperty hostname | Start-ParallelExecution -InputCommandFile .\commandDC.csv -OutputFile ".\output_DCs_$($date).xml" -TimeoutInSeconds 900 -ScriptFolder .\scripts -Verbose

Very similar to the previous one. The only differences are that we run this against all DCs in the forest, that we use a different commands file (commandDC.csv), and that we specify a scripts folder.

The commandDC.csv file looks like this:

propertyname#command#Script#Description

Fixes#get-hotfix | Select-Object PSComputerName,InstalledOn,HotFixID,InstallDate,Description#False#Get the list of installed hotfixes #

Services#get-service#False#Get the services information

ScheduledTasks#get-scheduledtask#False#Get the scheduled tasks

RegAssociationsMachine#get-childitem -path "HKLM:\Software\Classes"#False#gets the machine file associations

Drivers#get-windowsdriver -online#False#Get the list of third party drivers running on the machine

Audit#auditpol.ps1#true#get the audit policy of the machine using auditpol

OSInfo#get-wmiobject Win32_OperatingSystem#False#Get the OS information and version#

ComputerInfo#get-wmiobject Win32_ComputerSystem#False#Get the machine name, manufacturer, model, etc#

DiskInfo#Get-wmiobject Win32_logicaldisk |where-object{$_.drivetype -eq 3}#False#Get the disk information

##############Network Config##############

IPInfo#get-wmiobject Win32_NetworkAdapterConfiguration -Filter IPEnabled=TRUE #False#Get the ip network card information#

Shares#get-wmiobject Win32_share#False#Get the list of shares

netstat#get-NetTCPConnection#False#Get the opened network connections

routeprint#get-netroute#false#gets the machine routes

HostParsed#hostparsed.ps1#true#Gets the parsed contents of the host file

##############Certificates##############

RegCertificatesmachine#get-childitem -path "HKLM:\Software\Policies\Microsoft\SystemCertificates" -recurse#False#get the machine info about certificates from registry

CertificatesAuthroot#get-childitem -path cert:\LocalMachine\Authroot#False#get the certificates in the authroot container

certificatesroot#get-childitem -path cert:\LocalMachine\root#False#get the certificates in the root container

##############Miscelaneous##############

EnvironmentVariables#Get-ChildItem Env:#False#Get the environment Variables

ADReplicationConnection#Get-ADReplicationConnection -Server $env:COMPUTERNAME#false#get the replication connections

A couple of important things about the commands file.

First, notice that lines starting with # are ignored, so you can use that to split the file into sections or to disable specific checks.

Then look at this line

Audit#auditpol.ps1#true#get the audit policy of the machine using auditpol

Here is an example of a script being called. Instead of a command we specify the name of the script (the folder it lives in is given by the -ScriptFolder parameter), and the third column is set to true because this is a script. (The actual code of the auditpol script that I use to parse the audit policy is in the GitHub repository I referenced at the beginning of this article.)
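
Since script rows only carry a file name, a typo there is easy to miss until the run is under way. A possible pre-flight check is sketched below. The folder layout is a throwaway one created in the temp directory, and hostparsed.ps1 is left out on purpose so the check has something to report; none of this is part of the module.

```powershell
# Hypothetical layout: a throwaway folder that contains a scripts subfolder.
$root = Join-Path ([System.IO.Path]::GetTempPath()) ([System.IO.Path]::GetRandomFileName())
$scriptFolder = Join-Path $root 'scripts'
New-Item -ItemType Directory -Path $scriptFolder -Force | Out-Null
Set-Content -Path (Join-Path $scriptFolder 'auditpol.ps1') -Value '# placeholder script'

# A few rows in the same format as the commands file.
$rows = @(
    'propertyname#command#Script#Description'
    'Audit#auditpol.ps1#true#get the audit policy of the machine using auditpol'
    'HostParsed#hostparsed.ps1#true#Gets the parsed contents of the host file'
    'Services#get-service#False#Get the services information'
) | ConvertFrom-Csv -Delimiter '#'

# For rows flagged as scripts, the command column holds a file name to look up.
$missing = @($rows |
    Where-Object { $_.Script -eq 'true' } |
    Where-Object { -not (Test-Path -Path (Join-Path $scriptFolder $_.command)) } |
    Select-Object -ExpandProperty command)

if ($missing) { Write-Warning "Scripts not found: $($missing -join ', ')" }
Remove-Item -Path $root -Recurse -Force
```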

#we compress and delete the output files

Get-ChildItem -Path .\ |where{$_.Extension -eq ".xml"} | Compress-Archive -DestinationPath .\DocumentedAD$date.zip

Get-ChildItem -Path .\ |where{$_.Extension -eq ".xml"} | remove-item

These last lines retrieve the generated XML files, compress them for future reference, and then delete the original files.
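
If you schedule this, you may want to make sure the originals are only deleted once the archive really exists. A minimal sketch of that idea follows, using a throwaway temp folder and dummy files (the names are made up, and Compress-Archive needs PowerShell 5 or later).

```powershell
# Hypothetical working folder with two dummy output files.
$workDir = Join-Path ([System.IO.Path]::GetTempPath()) ([System.IO.Path]::GetRandomFileName())
New-Item -ItemType Directory -Path $workDir -Force | Out-Null
'<a/>' | Set-Content -Path (Join-Path $workDir 'output_domains_sample.xml')
'<b/>' | Set-Content -Path (Join-Path $workDir 'output_DCs_sample.xml')

$archive  = Join-Path $workDir 'DocumentADsample.zip'
$xmlFiles = Get-ChildItem -Path $workDir | Where-Object { $_.Extension -eq '.xml' }

# Stop on any compression error so the delete below never runs after a failure.
$xmlFiles | Compress-Archive -DestinationPath $archive -ErrorAction Stop

# Delete the originals only once the archive is really there.
if (Test-Path -Path $archive) {
    $xmlFiles | Remove-Item
}

# Record the outcome, then remove the throwaway folder.
$archiveExists = Test-Path -Path $archive
$xmlRemaining  = @(Get-ChildItem -Path $workDir | Where-Object { $_.Extension -eq '.xml' }).Count
Remove-Item -Path $workDir -Recurse -Force
```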

Well, that is it. Piece of cake, right? The question is what we can do with the collected info. To load the output into variables we can run:

$domainsdata=Import-Clixml -Path .\output_domains_201801261041.xml

$DCdata=Import-Clixml -Path .\output_DCs_201801261041.xml

Then we can explore the collected information interactively.

The collected data is a hashtable. For a more detailed explanation of hashtables, check:

/en-us/powershell/module/microsoft.powershell.core/about/about_hash_tables?view=powershell-5.1

In simple terms, a hashtable is a collection of key/value pairs indexed by key. In our case, the keys are the names of the machines we have been working with, and the values are PowerShell objects that have one property for each of the commands we executed against them (named using propertyname, the first column of the csv file). The content of each property is the result of the command we executed against that machine.
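
To get a feel for that shape without touching a real environment, here is a small sketch that builds a stand-in hashtable, saves it with Export-Clixml and loads it back. The machine names, KB number and services are invented, and the -Depth value is my own choice to keep the nested objects intact; the real files are produced by the module.

```powershell
# Hypothetical stand-in for the collected data: machine name -> object
# with one property per propertyname from the commands file.
$mock = @{
    'rootdc1.contoso.com' = [pscustomobject]@{
        Fixes    = @([pscustomobject]@{ HotFixID = 'KB0000001'; InstalledOn = '2018-01-10' })
        Services = @([pscustomobject]@{ Name = 'NTDS'; Status = 'Running' })
    }
    'rootdc2.contoso.com' = [pscustomobject]@{
        Fixes    = @()
        Services = @([pscustomobject]@{ Name = 'NTDS'; Status = 'Stopped' })
    }
}

# Round trip through a CLIXML file, as we do with the real output files.
$file = Join-Path ([System.IO.Path]::GetTempPath()) ([System.IO.Path]::GetRandomFileName())
$mock | Export-Clixml -Path $file -Depth 5
$data = Import-Clixml -Path $file
Remove-Item -Path $file

# Keys are the machine names.
$machines = @($data.Keys | Sort-Object)

# Each value exposes one property per collected command.
$properties = @($data['rootdc1.contoso.com'].PSObject.Properties.Name | Sort-Object)
$firstFix   = $data['rootdc1.contoso.com'].Fixes[0].HotFixID

"Machines:   $($machines -join ', ')"
"Properties: $($properties -join ', ')"
```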

For example, running the following lists the hotfix IDs and installation dates collected from rootdc1.contoso.com:

$DCdata.'rootdc1.contoso.com'.fixes | select HotfixID,Installedon | ft *
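
Because every machine is a key in the same hashtable, questions that span the whole environment are short too. As a sketch, assuming data shaped as described above (the sample hashtable and KB numbers below are invented), this finds the DCs that are missing a given hotfix:

```powershell
# Hypothetical data shaped like the imported hashtable.
$DCdataSample = @{
    'rootdc1.contoso.com' = [pscustomobject]@{
        Fixes = @(
            [pscustomobject]@{ HotFixID = 'KB0000001' }
            [pscustomobject]@{ HotFixID = 'KB0000002' }
        )
    }
    'rootdc2.contoso.com' = [pscustomobject]@{
        Fixes = @([pscustomobject]@{ HotFixID = 'KB0000001' })
    }
}

$wanted = 'KB0000002'

# Walk every machine in the hashtable and keep those that lack the hotfix.
$missingOn = @($DCdataSample.GetEnumerator() |
    Where-Object { $wanted -notin $_.Value.Fixes.HotFixID } |
    ForEach-Object { $_.Key } |
    Sort-Object)

"DCs missing ${wanted}: $($missingOn -join ', ')"
```

With the real data you would replace $DCdataSample with the $DCdata variable loaded by Import-Clixml.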

That’s all for today. I hope you have found it interesting. I will keep updating the csv files that document the AD infrastructure, so if you have any suggestions, please let me know!

Cheers

Fernando