Partitioning & Archiving tables in SQL Server (Part 2: Split, Merge and Switch partitions)

In the 1st part of this post, I explained how to create a partitioned table using a partition function as well as a partition schema. Now I’ll continue talking about how to merge or split partitions changing the partition function and the partition schema and how to move data between partitions using alter table command.

Here´s where the importance of the function definition makes sense, the way partitions split or merge depends of the RIGHT or LEFT specification. The easiest way you can understand how they work is looking at the following examples. I will use a partition function with LEFT specification, look at the process and the result after splitting and merging the table:

I already created a database PartitionDBLeft, this is how the database looks like:

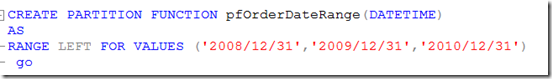

And these are the definitions for partition schema, partition function and orders table:

In this case, the orders table is stored in a partition schema (psOrderDateRange) as well as the index. Also note that my primary key contains the columns OrderID plus OrderDate which is the partition key (the column by which the table is partitioned). When you create a primary key, SQL creates an index, usually a clustered index which sorts the table by that column. If the column OrderDate is not part of the primary key, you won´t be able to create a clustered key because in partitioned tables the sort order is determined by the partition key so you would need to create a non-clustered key instead. Later in this post you’ll see how important is this.

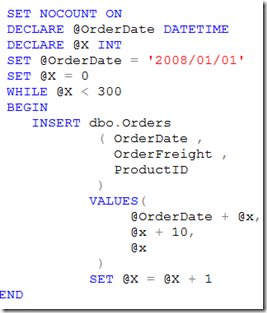

The partition function is defined using LEFT, now let´s insert some orders for 2008:

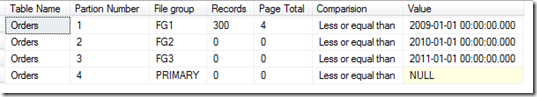

Now, let’s query the partitions, using the queries explained the Part 1, as you see the records are stored inside partition 1 (FG1)

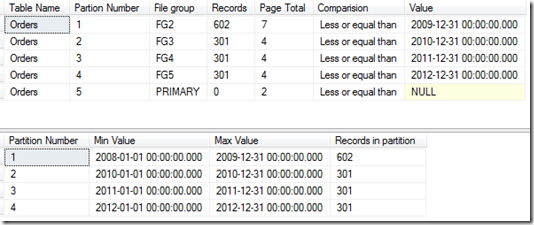

Repeat the procedure inserting 300 for 2009 and 300 records for 2010, (just change the value for @OrderDate), this is how it looks:

Maybe you would expect to have 300 records for each year (2008, 209 and 2010) but the results are different, this is due to the function definition. When using LEFT, each value defined in the function corresponds to the UPPER limit of each partition which you can see in the the column Max Value in the last query. On the other hand if you had use RIGHT instead of LEFT, the result would be the expected. By now, I will drop the table as well as the schema and the function and will define the function again this time using a different value for each range:

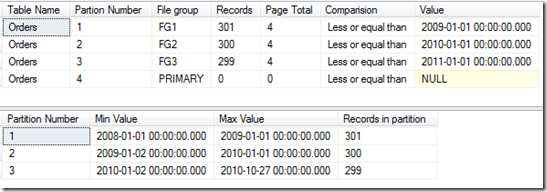

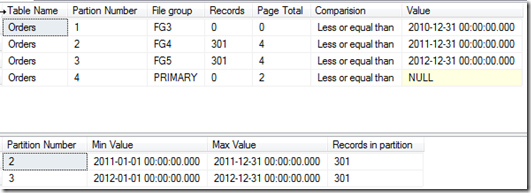

The schema will remain the same as well as the table, this time using the new partition function. After inserting the same records this is how it looks:

In fact, you could insert the dates ‘2008/12/31, 2009/12/31 and 2010/12/31 and those would be the limit for each partition:

Split a partition

According to the partition schema definition, If you insert a record for 2011, that record will be inserted in partition 4 which is the PRIMARY partition. Suppose you need to insert the orders from 2011 in a new partition (inside a new filegroup, let’s say FG4). First, you have to add a new filegroup (FG4) and a new partition (an .NDF file) inside FG4:

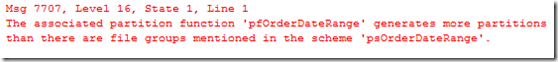

Now you can modify your partition function to SPLIT the last range (which currently holds all records greater than 2010/12/31) to create a new range for orders from 01/01/2011 to 31/12/2010. Before doing so, you need to alter the partition schema to include the new filegroup (FG4) to let the partition function map the new range data to this filegroup, otherwise you will get this error:

The following script will alter the partition schema making FG4 the next available filegroup:

Now let´s alter the partition function to include the new range:

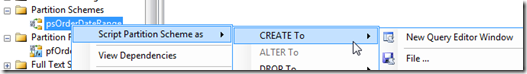

Now, check how the partition schema and partition function look after the change, you can use Management Studio to create the scripts:

This is the result:

This is the expected behavior in most of cases when you need to add a new partition in a rotating base of time to hold new data, in this scenario I would do it in december to prepare my orders table for the next year data. I can always use PRIMARY as the last partition for future data since I´m sure there will not be data there, however the partition function requires an additional range for all data that exceeds the last range value.

If I insert data between 2011/01/01 and 2011/12/31 that would be inserted in FG4:

For the next year, all you have to do is repeat the process, I mean: add FG5, alter partition function marking FG5 the next used filegroup and alter the partition function splitting the last range including 2012/12/31 and voilà, you have a new partition ready to hold 2012 records!

Merge Partitions

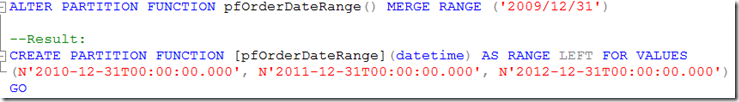

Now let´s talk about merging partitions. After some years, you decide all those partitions are difficult to maintain, it would be better to have all data for the first n years in a single partition. Let’s suppose you want to join all orders from 2008/01/01 to 20010/12/31 in a single file. Well, you can merge those 3 partitions but just 2 at time.

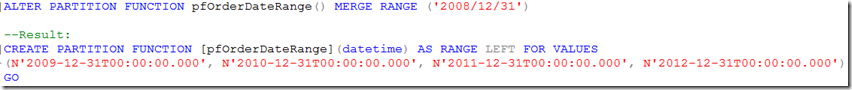

Merging is as simple as alter the partition function specifying the range that will be the new upper limit for the joined ranges:

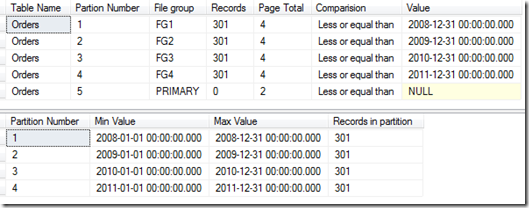

Lets firs join FG1 and FG2 and see the result:

If you query the partitions, this is what you will see:

Note that all records from FG1 moved to FG2, now you only have 5 partitions instead of the 6 originals. and FG1 does not contains any data.

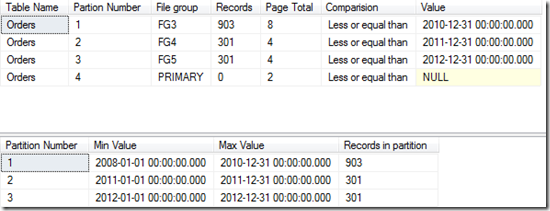

Now let´s join FG2 and FG3:

Let´s look what’s inside each filegroup:

Again, data from FG2 moved to FG3.

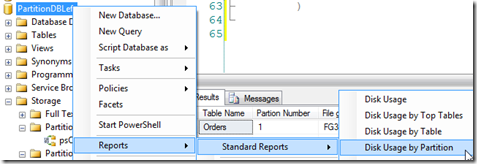

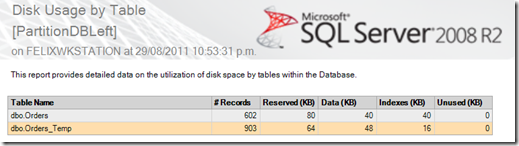

You can also check the reports included in management studio:

What about FG1 and FG2?. In fact, this filegroups are empty, you could use for future data, for other kind of data or you could remove them.

Switch Partitions

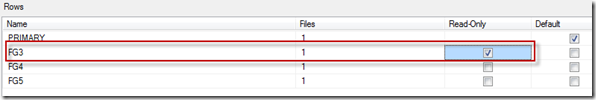

Now suppose you need to archive all data from 2008/01/01 to 2010/12/31 since this is historical data. At the current time, all this data is stored in FG3 which is now partition No. 1. By the way, If this data will never change anymore, you could mark this filegroup as READONLY:

This is not necessary to archive data but is recommended to protect historical information against writing and to simplify backup strategy which I’ll discuss in another post.

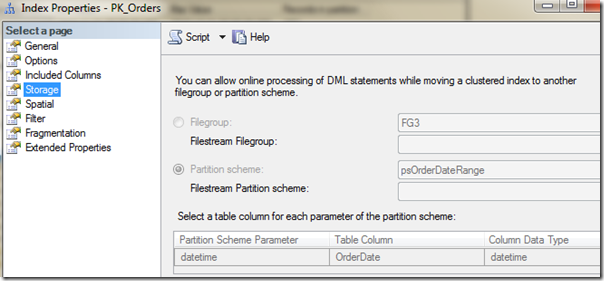

The first thing you must be aware of is index aligning. Remember: if the partition key (OrderDate column) is part of the primary Key (OrderID + OrderDate) the index is aligned with the data. This means that each corresponding portion of the index is allocated in its corresponding partition because it uses the same partition schema and you can have a clustered index. If this is not the case (suppose your primary key is just OrderID), you can´t have a clustered index in the primary key because the data needs to be sorted using the partition key (which is Order Date) and you would have to create a non-clustered index in the primary key and all the index should be stored in a different partition (usually the PRIMARY filegroup).The main problem with this design is that you would need to drop the index before switching the partitions and then recreate the index after the switch operation but also, the process to move data from 1 partition to another (switch) would be slower. Fortunately our Orders table has a aligned index which you can see in the index properties window:

You can see the index is stored in the partition schema (psOrderDateRange) so it is aligned with the data.

In this scenario, I will move data from FG3 to a different table (Orders_History) which can be in the same or in a different database, I just want to archive those records and free space from my current database. Instead of write a delete query which can be slow and use a lot of transaction log space, I will simply move FG3 to a temporary table inside the same filegroup which will be very fast since only metadata will be moved and then I will transfer those records to the historical table which can be stored in the same or in a different database using an INSERT SELECT clause. Of course this will take some time but I can move it in a simpler way and without locking my production table or affecting my users.

The process is:

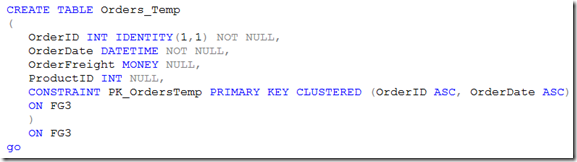

- Create a temporary table with the same structure from the original table (orders) in the SAME FILEGROUP. This is necessary since you can only switch data from partitions located in the same filegroup.

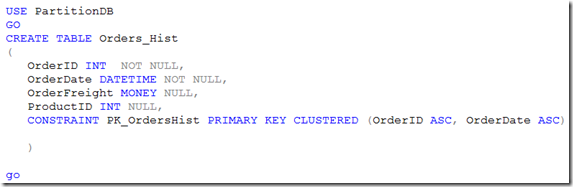

- Create the destination table (orders_history) with the same structure as the original and the temporary.

- Switch the desired partition using ALTER TABLE clause

- insert the data from the temporary table to the destination table using INSERT SELECT clause.

- Drop the temporary table.

Let´s see:

This is my temporary table created on FG3:

This is my Historical table created in a different database, note I excluded INDENTITY since this value will exists in the source table.

The data I want to move lives in FG3 which is now partition 1. I just simply alter orders table this way:

What is surprising is that it doesn't matter how big partition 1 is, the data will be switched very fast!

Before moving the data to the history table, let´s query the partitions again:

FG3 has not any records.

All the data is now in the Orders_Temp table:

Now let´s move the data to Orders_History:

Finally, drop Orders_Temp table:

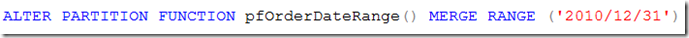

However you cannot drop FG3 because one range of the orders table is allocated in this filegroup, although you know there is not data there. However you can merge FG3 and FG4 this will release FG3 if you want to drop it. in the last query you can se FG3 boundary is 2010-12-31, so you can merge using this value:

If you query the partitions, FG3 is not used anymore:

Now you can remove FG3 if you want.

Conclusion

You can create a partitioning strategy in very large tables in order to simplify administration, enhance performance, create an archiving strategy to purge out data from the database and manage partitions merging or splitting them to provision space for future data. You must take care of table design and choose the correct partition key as well as index. If you make a wrong decision it could be very difficult to manage future data. Try to reproduce the same examples in this post but now using RIGHT instead of LEFT and you´ll see what I mean. Also, try to create a table without the OrderDate column in the primary key to see how the index is built.

I hope you find this information helpful, If that´s the case leave me a message in the blog.

Thanks.

Comments

Anonymous

March 08, 2012

Thank you!Anonymous

March 08, 2012

I think this day is very useful for me as I have read this great article. Thank youAnonymous

March 08, 2012

I have one question. As soon as partitioning without clustered key is not good case, which is the best way to partition relational data using Date field? e.g. I have 2 tables: Orders (Primary Key: OrderId) and Order_Details (primary key and foreign key: OrderId) Orders.OrderId = Order_Details.OrderIdAnonymous

March 08, 2012

Is including OrderDate field from Orders table also to Order_Details table good ideaAnonymous

March 08, 2012

One more question please: Whats the difference in efficiency between creating one data file with several partitions and creating as many data files as partitions (as its described in you article)Anonymous

April 03, 2012

Hello Giorgi, sorry for this late answer. Responding your first question: It is good idea to include the field which is used for join tables, in fact since Orders and order_details are two related tables, it would be a good idea to partition both tables using the same partition schema because this way the query will only use the files (partition) which contains the required data. About your second question: When you créate multiple data files, thread management is more efficient, since rows can be distributed simultaneously between all data files at the same time which is very important for highly transactional systems. Also you could put each file in a separate LUN which would improve performance and you could have a better backup strategy. I hope this Works for you. Thank you.Anonymous

March 05, 2013

Thanks! Immensely helpful coz of the detailed examples.Anonymous

July 02, 2013

Many Thanks Félix for this ArticleAnonymous

August 21, 2013

Please check below link which is better then this. www.ghanchiasif.blogspot.in/.../auto-maintain-archival-process.htmlAnonymous

December 09, 2013

Article was very helpful. And I have followed the same way when archiving old data. I have a question. I need to archive the old data into another table every month. Will I be able to do it like a job instead of doing it manually for every datekey? If yes, any ideas on how to do it? I think I need to pass the datekey dynamically to the partition function then. Please suggest.Anonymous

December 10, 2013

Found it very useful. Thanks for the amazing articleAnonymous

April 07, 2014

Great article. Thank you!Anonymous

April 24, 2014

Nice Article, Very useful to me.Thank youAnonymous

May 07, 2014

Hi Felix, I would like to partition a table on a date column and have only two partitions (and filegroups): [PRIMARY] for current year data; and [HistoryArchive] for all other data. I understand how to create these two partitions, but how would I go about putting the data in the primary partition into the archive partition and then adding the data for the new current year into the primary partition when the date changes to the current year (let's say on Jan 1 of the new year)? Your help will be appreciated. Thanks, Todd tmcdaniel@follett.comAnonymous

June 18, 2014

Hi, We have change tracking enabled on our tables. These tables are growing very rapidly already in 100s of GB sizes. We are planning to use Partitioning and during our tests we experienced errors using Alter Table Switch statement as it seems you can not use it on tables which has Change Tracking enabled. Since the tables are growing very fast we will have to go with the Partitioning approach for the tables. This means we need to replace the change tracking we are currently using to send the changes from the live to the other environments such as Reporting and Ware houses with something that works with Partition Switch. Could I ask you if you have any suggestions for our situation where in We can have Partitioning and some Change Tracking mechanism, we are trying Change Data Capture but it does seem like an expensive mechanism that reads transactions out of the transaction logs and could potentially put load on the server. Regards, SQL Server Lover.Anonymous

June 23, 2014

Would you recommend split range operation in case of existing table with the data? Or do you suggest to have drop cluster index, partition schema/function and re-create with the required addition/modification? As per my understand in case if you have table with the data split range operation will take long time and usage of t-log will be un-predicted. Can you please share your thoughts on this? ThanksAnonymous

May 05, 2015

The comment has been removedAnonymous

May 31, 2015

The comment has been removedAnonymous

August 10, 2015

Another really good article. ThanksAnonymous

September 09, 2015

Can I use the part of the table Ex: I want to use the rows which are created after specific date. So is it possible to use the portion of table without table partitioning??Anonymous

March 17, 2016

this is a very very great article, thank you