SharePoint Infrastructure as Code

On a recent project, a developer was having many issues in migrating a cloud CAM application to on-premises SharePoint. One would ask why go from cloud to on-prem, the answer is Government; but that is a different story!

When writing enterprise applications with multiple people on a project team, it is very important to be able to recreate data structures and reference data to different environments accurately, quickly and efficiently. In the SQL application world, people have been doing this forever. They script DB builds and auto load reference data. They sometimes take it up a notch and use tools like Entity Framework and Typed Datasets to help generate compile time errors to prevent run time errors when the data structures change. This is currently referred to as “Infrastructure as Code”.

In the CAM/Add-in world, SharePoint lists can be a challenge to manage. There are ways, like “export to template” that can do this but it is hard to keep the data in-sync and up-to-date with new reference data etc. There are a few admin steps to activate these features and enable them. Some people make a list of the lists they need to move and manually add them to the different site collections. Typos anyone? This can be really hard to trouble-shoot in a new system and in the end, the developer will have to use the “works on my box” line; not good.

Enter the DataApp! A simple solution to get your team to an enterprise development standard. This DataApp should be able to create and load all data structures and any reference data your application needs. It does not have to be glamorous or efficient. The DataApp just has to be able to create the data structures and data. When a change is needed, you make it in the DataApp and have the DataApp push it to your SharePoint site. This allows your projects to always have a way to reproduce data and structures. To take it one step further, this DataApp can also generate constants for your application to use. If your application uses the constants, when a column is renamed or deleted, the complier will give you an error; runtime error avoided! How cool is that!

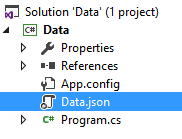

Let’s walk through the DataApp, it consists of a JSON file with all the configurations and a CSOM app.

JSON Configuration File

In this file, you define your tables and relationships. You can also add reference data rows. In the head of the file you specify your log-in credentials and if this is talking to o365 OR on-prem (handles both really cool!).

JSON File:

"ApplicationName": null,

"Server": "https://win-ebqb1hd3ns1/DansBikes",

"User": "Administrator",

"Password": "xxx",

"Domain": "win-ebqb1hd3ns1",

"Iso365": false

These are all pretty self explanatory. In my example I did not use an application name, feel free to. The next part is defining a table.

{

"Type": "List",

"Name": "Customer",

"Ignore": false,

"TitleName": "Email",

"SharePointColumns":

[

{

"Name": "First",

"Type": "Text",

"Parent": null,

"Data": null

},

{

"Name": "Last",

"Type": "Text",

"Parent": null,

"Data": null

},

{

"Name": "Phone",

"Type": "Text",

"Parent": null,

"Data": null

}

]

This creates a “List” named “Customer” with the columns below: First, Last and Phone. In the head of this area, there is a switch to override the “Title” field. Here we rename it to “Email”. You can’t really get rid of the Title field, so use it well, it’s a primary key usually. For a customer table, Email is a great key. After the structure is created, you can add reference rows like this:

"SharePointReferenceRows": [

{

"SharePointReferenceColumns": [

{

"Name": "First",

"Value": "Dan"

},

{

"Name": "Title",

"Value": "Dan.Biscup@Microsoft.com"

},

{

"Name": "Last",

"Value": "Biscup"

},

{

"Name": "Phone",

"Value": "(813) 123-1234"

}

]

}

JSON is really fast, add as many rows as you need here.

In the application, there really is nothing special going on, it uses this JSON file and builds the structures. It is not the best code in the world, and if you notice it creates the SP context a ton of times. Using o365 gave a few context issues, so this is the reason. Once again the importance here is the ability to create the infrastructure quickly and efficiently.

One thing to note is that sometimes you just want to do a partial build. In the head of the list creation you can set “Ignore” to true. This will skip the rebuild of the table. When this builds the structures, it deletes them first. Make note of that.

"Ignore": false

After the data app runs, it creates a .cs file with constants in them. This can be referenced on your consuming application. This will prevent column name change errors. If your infrastructure changes, the compiler will know. Hardcoding columns in your SharePoint item is so dangerous. As soon as the lists change you will get strange, hard to troubleshoot errors. Use constants!

In this example JSON file, an Entities.cs file was created. For the customer table it created this:

public class Entities_Customer

{

public const string ListName = "Customer";

public const string First = "First";

public const string Last = "Last";

public const string Phone = "Phone";

public const string Email = "Title";

}

In my SharePoint add-in I add a link/reference to the Entities.cs file and add a new customer like this:

List lCustomerList = ctx.Web.Lists.GetByTitle(Entities.Entities_Customer.ListName);

ListItemCreationInformation liciCustomer = new ListItemCreationInformation();

ListItem liCustomer = lCustomerList.AddItem(liciCustomer);

liCustomer[Entities.Entities_Customer.Email] = Model.Email;

liCustomer[Entities.Entities_Customer.First] = Model.FirstName;

liCustomer[Entities.Entities_Customer.Last] = Model.LastName;

liCustomer[Entities.Entities_Customer.Phone] = Model.Phone;

liCustomer.Update();

ctx.ExecuteQuery();

Everything is a constant. If the “Last” field in the “Customer” table changes to “LastName”, this code will break at compile time! This codebase is changing frequently. I plan on releasing it soon to GitHub to see what people add like views etc!

NOTICE: I will be presenting at COSPUG 9/10/2015 on this topic: https://www.cospug.com/