Concurrency Series: Why do I get blocking when I use Read Uncommitted isolation level or use NOLOCK hint?

Author: Sunil Agarwal

Reviewers: Kun Cheng, Chuck Heinzelman, Shaun Tinline-Jones, Sanjay Mishra, Kevin Liu

When using NOLOCK hint on the table or using read uncommitted isolation level, customers sometimes get surprised when they experience blocking. Let me explain this using the following example

Example: Concurrent DDL operation

-- create a simple table for our examples

create table basic_locking (c1 int, c2 int, c3 int)

go

-- insert 500 rows into the table

declare @i int = 0

while (@i < 500)

begin

insert into basic_locking values (@i, @i+ 100, @i+10000)

set @i += 1

end

-- In session-1, do an update in a transaction

-- It will acquire X lock on all the rows in the table

-- or possibly an X lock on the table.

begin tran

update basic_locking set c2 = 1000 where c1 = 1

-- script to show blocking and locks

select

t1.request_session_id as spid,

t1.resource_type as type,

t1.resource_database_id as dbid,

t1.resource_description as description,

t1.request_mode as mode,

t1.request_status as status

from sys.dm_tran_locks as t1

where t1.request_session_id = @@SPID

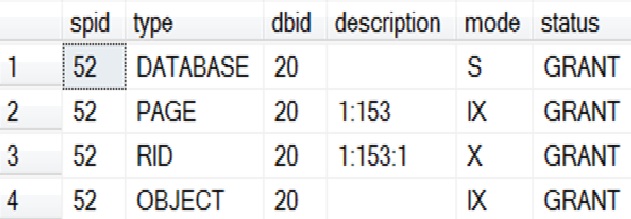

Here is the output of the locks taken using the following query. Since only one row is being updated, you see a X lock on the data row and the IX lock on the data page (i.e. 153)containing the row and IX lock on the table.

-- Session-2 use read uncommitted to access the data. Since the query –- is executed with NOLOCK hint or read uncommitted isolation level,

-- it will run without getting blocked

begin tran

-- As expected, this select will not block

-- and will return 100 which ‘dirty’ data

select top 1 c1 from basic_locking with (NOLOCK)

Now,let us execute the DDL operation in session-1 as follows:

-- In session-1, add a column to the table under the same

-- transaction

alter table basic_locking add c4 int

-- Session-2 use read uncommitted to access the data

-- This statement will block while waiting to to acquire

-- SCH-S lock on the table.

select c2 from basic_locking with (NOLOCK) where c1 = 1

-- use the following script to show blocking and locks

select

t1.request_session_id as spid,

t1.resource_type as type,

t1.resource_database_id as dbid,

t1.request_mode as mode,

t1.request_status as status,

t2.blocking_session_id

from sys.dm_tran_locks as t1 left outer join sys.dm_os_waiting_tasks as t2

ON t1.lock_owner_address = t2.resource_address

WHERE t1.request_status = 'WAIT'

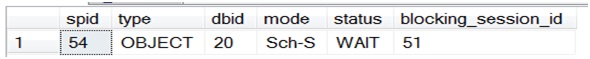

Here is the output that shows that session -1 (SPID-51) is blocking session-2(SPID-54) on SCH-S lock request.

The reason for this blocking is that during the execution of a SQL statement, the SQL Server requires a schema stability lock (i.e. SCH-S) on objects referenced in the statement to protect against concurrent DDL. For the simple example above, if this lock was not taken, the question will be should the returned rows have 3 column or 4 columns? Or the rows that were returned before DDL operation committed show 3 columns while the remaining rows show 4 columns?

In fact, if you use RCSI mode or snapshot isolation level, the query will get blocked similarly. Since DDL is a not a common operation in production environment, this kind of blocking should happen rarely.

Thanks

Sunil Agarwal

Comments

Anonymous

March 07, 2011

Nice article. However, one particular situation where the Sch-M lock is a source of blocking problems in production environments is when partition switching is used for loading data into partitioned tables in e.g. a data warehouse environment.Anonymous

November 27, 2011

nice post ,thanks a lot.Anonymous

February 23, 2015

The answer is since select starts first, it should use row-versioning to return the data as the time when query starts without blocking. That is 3 columns.Anonymous

February 26, 2015

Michael: are you referring to this issue on primary replica? Note, SQL sever 2014 has changed the locking strategy for partitioning operations thanks