From the MVPs: Updated Column Store Indexes in SQL Server 2014

This is the 42nd in our series of guest posts by Microsoft Most Valued Professionals (MVPs). You can click the “MVPs” tag in the right column of our blog to see all the articles.

Since the early 1990s, Microsoft has recognized technology champions around the world with the MVP Award . MVPs freely share their knowledge, real-world experience, and impartial and objective feedback to help people enhance the way they use technology. Of the millions of individuals who participate in technology communities, around 4,000 are recognized as Microsoft MVPs. You can read more original MVP-authored content on the Microsoft MVP Award Program Blog .

This post is by SQL Server MVP Sergio Govoni . Thanks, Sergio!

Updateable Column Store Indexes in SQL Server 2014

Introduction

Column store indexes had been released with SQL Server 2012 to optimize data warehouse workloads that have specific patterns, data are loaded through T-SQL scripts or through SSIS packages, several times a day, in any case the frequency is not important, only that the available data is loaded in the same execution. At the end of ETL process, data is read with reporting tools. Usually data is written one time, then read multiple times.

In SQL Server 2012 there was the non-clustered column store index only; like a traditional B-Tree non-clustered index, it was a secondary index. However, it differs from a traditional B-Tree index because it is based on a columnar structure, though, the base table remains organized by row (in a structure called row-store and saved in 8K data pages).

The column store indexes are part of Microsoft In-Memory Technologies because they use xVelocity engine for data compression optimization and its implementation is based on a columnar structure such as PowerPivot and SSAS Tabular. Data in column store indexes are organized by column, each memory page stores data from a single column, so each column can be accessed independently. This means that SQL Server Storage Engine will be able to fetch the only columns it needs. In addition, data is highly compressed, so more data will fit in memory and the I/O operations can greatly decrease.

Column store indexes structure

Before talking about new feature of column store indexes in SQL Server 2014, it is important to introduce three keywords: Segment, Row Group and Compression. In a column store index, a segment contains values for one column of a particular set of rows called row group. As it is possible to see in the following picture, each red and gray portions are segments. When you create a column store index, the rows in the table will be divided in groups and each row group contains about 1 million rows (the exact number of rows in each row group is 1,048,576; in other word there are 220 rows in each row group). Column store transforms the internal index organization from row organization to columnar organization and there will be one segment for each column and for each row group. Column store indexes are part of Microsoft In-Memory technologies in which data is compressed and the compression plays a very important role, so each segment is compressed and stored in a separate LOB.

This article does not detail the algorithms to compress data in column store index. At any rate, keep in mind that each segment stores the same type of data, so in a segment, there will be homogeneous data and in this scenario, the compression algorithms will be more efficient than the one used to compress table rows because row usually contains different type of data. In general, data compression can be implemented using different techniques such as:

- Dictionary Encoding

- Run-length Encoding

- Bit Packing

- Archival Compression (only in SQL Server 2014)

- It can reduce disk space up to 27%

The techniques used by SQL Server to compress data are undocumented. The following picture shows an example of row groups and segments in a column store index.

Picture 1 – Row groups and segments

Thinking about table partitioning in SQL Server, as you may know, since SQL Server 2005 you can partitioning table, using a partition function and a partition scheme. When a table is partitioned, indexes defined on it can be aligned to the partitions (this is the default for the new indexes created on a partitioned table). Partitioning SQL Server can have several benefits; one of these makes the Query Processor more efficiently because it is able to apply a task known as Partition Elimination. By Partition Elimination task, Query Processor can eliminate inapplicable partitions from the query plan, and just access the partitions implied by the filter on the queries. Like Partition Elimination task used by Query Processor on partitioned table, the Segment Elimination task is used by Query Processor to eliminate segments that don’t contain data for the submitted query, in according to the WHERE clause and columns specified in the SELECT columns list.

Column store Indexes in SQL Server 2014

The most important feature that SQL Server 2014 has implemented on column store indexes is the possibility to have clustered column store indexes, so, as you can imagine, this feature means:

- Column store indexes can be updatable

- Table schema can be modified (without drop column store indexes)

- Structure of the base table can be columnar

- Space saved by compression effects (with a column store index, you can save between 40 to 50 percent of initial space used for the table)

In addition, they support:

- Row mode and Batch mode processing

- BULK INSERT statement

- More data types

The clustered column store index of a table is not used during the non-clustered index construction because the clustered column store index is the only one allowed for the underlined table. To create other indexes would not make sense; anyway, it is not possible by design. In more detail, the clustered column store index is created on all table columns, so it is like to have a B-Tree non-clustered index for each column of the same table. With a clustered column store index, all filter possibilities are already covered; Query Processor, using Segment Elimination, will be able to consider only the segments required by the query clauses. On the columns where it cannot apply the Segment Elimination, all scans will be faster than B-Tree index scans because data are compressed so less I/O operations will be required. The follow piece of T-SQL code shows how to create a clustered column store index with the CREATE INDEX statement.

CREATE CLUSTERED COLUMNSTORE INDEX <index_name> ON <table_name>

WITH (<options>)

As you can see, it is not specified the index key columns in the CREATE INDEX statement as you have to do when you create a B-Tree Index, because all table columns are included by design.

The architecture of updatable column store indexes

As already explained the column store indexes in SQL Server 2012 were not updatable, instead it is possible with SQL Server 2014 and it gives the possibility to have an updatable and clustered column store index. Now we are ready to discover how SQL Server 2014 is able to manage updates on this type of indexes, keep in mind that data is organized and compressed in a columnar structure, horizontal divided in row groups and vertical divided in segments. The updates (INSERT/UPDATE/DELETE) are managed by two additional structures that are Delta Store and Delete Bitmap.

Delta store is a heap B-Tree structure that contains data not already compressed and not stored in a segment. A delta store is created when you perform an INSERT, UPDATE or MERGE statement, on a table that has a clustered column store index. For the INSERT statement, if you use BULK INSERT or INSERT…SELECT no delta store will be created except for BULK INSERT that treats less than 100,000 rows. Over this limit, the inserted rows will be added into a delta store and they will be compress and store in a segment immediately, even if they are less than the perfect rows number for BULK INSERT batch, in a column store, that is exactly 1,048,576 rows.

Delete bitmap is a heap B-Tree structure organized like delta store, which contains references to delete rows in row groups. Delete bitmap is created when you perform a DELETE statement on a table that has a column store index.

There is an automatic process called Tuple Mover that every five minutes checks the number of rows stored in the delta store and if it finds a row group in a delta store that has at least 1,048,576 rows, it sets on that row group the state CLOSED, then it performs data compression and stores optimized data in segments. This process can be also executed manually forcing a REBUILD or REORGANIZE index.

The following picture shows this concept: segments in row groups are immutable for a while, updates are managed by additional structures called delta store and delete bitmap; data are stored in the official index structures when the minimum rows dimension will be achieved.

Picture 2 – Delta Store and Delete Bitmap used to manage updates in column store index

Locking

The delta store is physically divided in row groups, when SQL Server performs an insert of one or more rows in a row group placed in a delta store, that row group will be locked entirely in exclusively mode, in high concurrency scenario, inserts will be serialized. BULK INSERT statements that treat at least 100,000 will not apply locks on row groups in the delta store because the inserted rows will be compressed and stored in a segment immediately without passing through the delta store. With Tuple Mover locks in exclusive mode, all row groups process so that with those row groups locked no new inserts will be allowed until Tuple Mover will end its work.

Now, let’s show how SQL Server 2014 manages updates on column store indexes. The following examples are based on the AdventureWorksDW2012 database which you can download from CodePlex web site. AdventureWorksDW2012 is a data warehouse based on AdventureWorks. Now using the table dbo.FactProductInventory we will create a new table named dbo.FactProductInventoryXL that is the extra-large version of the dbo.FactProductInventory table. The following piece of T-SQL code contains the CREATE TABLE statement used to create the new table on AdventureWorksDW2012 database.

USE [AdventureWorksDW2012];

GO

CREATE TABLE dbo.FactProductInventoryXL

(

ProductKey int NOT NULL

,DateKey int NOT NULL

,MovementDate date NOT NULL

,UnitCost money NOT NULL

,UnitsIn int NOT NULL

,UnitsOut int NOT NULL

,UnitsBalance int NOT NULL

);

GO

Below is an example of data entry into this new table that has the same schema of the dbo.FactProductInventory table. The best way to archive this task is using an INSERT…SELECT statement with GO <count> argument, where in the argument we have specified to do the INSERT statement twice, as you can see in the following piece of T-SQL code. At this time, no index has been created yet.

INSERT INTO

dbo.FactProductInventoryXL

SELECT

fpi.*

FROM

dbo.FactProductInventory AS fpi

GO 2

The next step is the creation of a clustered column store index on the dbo.FactProductInventoryXL table that has 1,552,572 rows. The following piece of T-SQL code shows the CREATE INDEX statement where it is important to observe that any key columns have been specified for the index because in a clustered column store index it is forbidden by design, and all columns will be included in the clustered index. In addition, we have specified to use archival compression by the value COLUMNSTORE_ARCHIVE assigned to the option DATA_COMPRESSION.

CREATE CLUSTERED COLUMNSTORE INDEX CCI_FactProductInventoryXL

ON dbo.FactProductInventoryXL

WITH (DATA_COMPRESSION=COLUMNSTORE_ARCHIVE);

GO

Rows in the dbo.FactProductInventoryXL have been divided in row groups and for all there is a segment for each column. To know how many row groups are related to a table it is possible to use the sys.column_store_row_groups catalog view that returns one row for each row group, for tables having a clustered or non-clustered column store index. Executing the following SELECT statement it discovers how many row groups there are for the clustered column store index CCI_FactProductInventoryXL.

SELECT

csrg.object_id

,csrg.index_id

,csrg.row_group_id

,csrg.delta_store_hobt_id

,csrg.state_description

,csrg.total_rows

,csrg.deleted_rows

,size_in_MB = (csrg.size_in_bytes/1000000.00)

FROM

sys.column_store_row_groups AS csrg

WHERE

OBJECT_NAME(csrg.object_id) = 'FactProductInventoryXXL';

GO

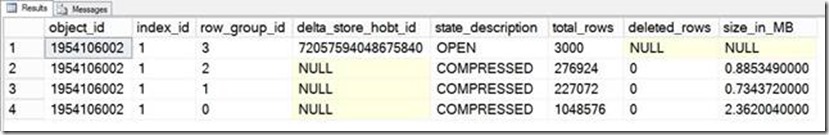

The output is shown on the following picture.

Picture 3 – Column store row groups for

There are three different row groups; the first one, which has row_group_id equal zero, already contains the maximum number of rows allowed for a row group (1,048,576 rows) and it cannot accept other rows. The others two row group are compressed as well, but they can accept other rows. No delta store and delete bitmap structures are present at this moment for the dbo.FactProductInventoryXL table. According to the books on-line, the descriptions that you can see in state_description column can be:

- INVISIBLE (state value 0): A hidden compressed segment in the process of being built from data in a delta store. Read actions will use the delta store until the invisible compressed segment is completed. Then the new segment is made visible, and the source delta store is removed

- OPEN (state value 1): A read/write row group that is accepting new records. An open row group is still in row store format and has not been compressed to column store format

- CLOSED (state value 2): A row group that has been filled, but not compressed yet by the Tuple Mover process

- COMPRESSED (state value 3): A row group that has filled and compressed

I think that you will see the efficiency of the column store archival compression. The value in size_in_MB column related to the row group that has row_group_id equal zero provides great information, only 2.36 MB required to store more than 1 million rows!

Now we will try to insert 3,000 rows with a loop that performs 3,000 INSERT…SELECT statement where each statement inserts one row. We will use the following T-SQL code.

SET NOCOUNT ON;

DECLARE @i INTEGER = 0;

WHILE @i <= 3000

BEGIN

INSERT INTO

dbo.FactProductInventoryXL

SELECT

fpi.*

FROM

dbo.FactProductInventory AS fpi

ORDER BY

ProductKey

,DateKey

OFFSET @i ROWS FETCH NEXT 1 ROW ONLY;

SET @i = @i + 1;

END;

SET NOCOUNT OFF;

Executing the following SELECT statement it discovers how many row groups there are for the clustered column store index CCI_FactProductInventoryXL.

SELECT

csrg.object_id

,csrg.index_id

,csrg.row_group_id

,csrg.delta_store_hobt_id

,csrg.state_description

,csrg.total_rows

,csrg.deleted_rows

,size_in_MB = (csrg.size_in_bytes/1000000.00)

FROM

sys.column_store_row_groups AS csrg

WHERE

OBJECT_NAME(csrg.object_id) = 'FactProductInventoryXXL';

GO

The output is shown on the following picture, where it is possible to see a new row group stored in the delta store structure, associated to the dbo.FactProductInventoryXL table (notice the hobt_id for delta row groups). The new row group is in OPEN state, the number of rows stored within it is 3,000; it can accept other rows and for this reason, it will not be closed by Tuple Mover process.

Picture 4 – Row groups stored in delta store

Now if we execute a REBUILD or REORGANIZE index statement, SQL Server 2014 will perform row compression and shift those 3,000 rows to another row group (or row groups) out of the delta store structure. The follow statement executes the REBUILD of the clustered column store index.

DBCC DBREINDEX('dbo.FactProductInventoryXL', CCI_FactProductInventoryXL);

GO

Executing the following SELECT statement it discovers how many row groups there are for the clustered column store index CCI_FactProductInventoryXL.

SELECT

csrg.object_id

,csrg.index_id

,csrg.row_group_id

,csrg.delta_store_hobt_id

,csrg.state_description

,csrg.total_rows

,csrg.deleted_rows

,size_in_MB = (csrg.size_in_bytes/1000000.00)

FROM

sys.column_store_row_groups AS csrg

WHERE

OBJECT_NAME(csrg.object_id) = 'FactProductInventoryXXL';

GO

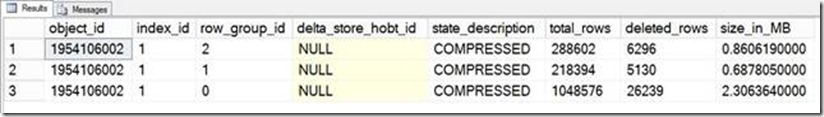

The output is shown in the following picture, where you can see the new rows distribution.

Picture 5 – Rows distribution within row groups after a column store index rebuild

The DELETE statement below shows how to eliminate the rows in dbo.FactProductInventoryXL that have MovementDate values between 2005-09-17 and 2005-10-17.

DELETE FROM

dbo.FactProductInventoryXL

WHERE

(MovementDate BETWEEN '2005-09-17' AND '2005-10-17');

Executing the following SELECT statement it discovers how many row groups there are for the clustered column store index CCI_FactProductInventoryXL.

SELECT

csrg.object_id

,csrg.index_id

,csrg.row_group_id

,csrg.delta_store_hobt_id

,csrg.state_description

,csrg.total_rows

,csrg.deleted_rows

,size_in_MB = (csrg.size_in_bytes/1000000.00)

FROM

sys.column_store_row_groups AS csrg

WHERE

OBJECT_NAME(csrg.object_id) = 'FactProductInventoryXXL';

GO

The output is shown in the following picture where you can see the distribution of deleted rows within column store row groups. Deleted rows (37,665 in total) are also referenced by an entry in the delete bitmap structure; they will be physically deleted at the next execution of Tuple Mover or at the next rebuild/reorganize index. Anyway, if you try to execute a query with the same WHERE clause specified in the DELETE statement, no rows will be returned as you can see in the second part of the picture 6.

Picture 6 – Deleted rows are referenced by an entry in the delete bitmap structure

Now we have to perform an UPDATE on UnitCost column of the dbo.FactProductInventoryXL table. The UPDATE statement is shown in the following piece of code.

UPDATE

p

SET

p.UnitCost = p.UnitCost + 0.30

FROM

dbo.FactProductInventoryXL AS p

WHERE

(p.MovementDate BETWEEN '2008-03-01' AND '2008-05-01');

GO

Executing the following SELECT statement it discovers how many row groups there are for the clustered column store index CCI_FactProductInventoryXL.

SELECT

csrg.object_id

,csrg.index_id

,csrg.row_group_id

,csrg.delta_store_hobt_id

,csrg.state_description

,csrg.total_rows

,csrg.deleted_rows

,size_in_MB = (csrg.size_in_bytes/1000000.00)

FROM

sys.column_store_row_groups AS csrg

WHERE

OBJECT_NAME(csrg.object_id) = 'FactProductInventoryXXL';

GO

The output is shown in the following picture where you can see the effects of an UPDATE statement on a clustered column store index; behind the since, updates are managed by INSERT and DELETE statements. Deleted rows (old values) are referenced into delete bitmap structure and inserted rows (new values) are placed into a new row group saved in the delta store.

Picture 7 – Rows stored in delta store and delete bitmap structures

Now if we execute a REBUILD or REORGANIZE index statement, SQL Server 2014 will perform row compression and shift the 75,268 from delta store row group to another compressed row group and it physically performs the delete, also for the rows that have a reference in delete bitmap structure.

Conclusion

This article explains the most important feature that SQL Server 2014 has implemented on column store indexes, at any rate, there would be so much to say about Column Store Indexes in SQL Server 2014. To learn more about this topic check out this whitepaper from Microsoft Research.

Comments

Anonymous

June 17, 2014

Sir, Can anyone tell me How many questions generally i would get in MTA .NET Exam.I would like a detailed information about the types of questions.Are they really only MCQ questions! Thanks AniruddhaAnonymous

June 17, 2014

This link is the best one for understanding MTA - www.microsoft.com/.../mta-certification.aspxAnonymous

August 12, 2014

Excellent post Sergio. Thanks!Anonymous

November 10, 2014

Thank you for the feedback Marianok :)Anonymous

November 16, 2015

Very informative article. I am hoping that Columnstore indexes are made available in SQL LocalDB as well so that we can use it for development and testing.- Anonymous

November 24, 2016

Hi Noel,Thanks for your feedback. In-Memory ColumnStore are now available, with SQL Server 2016 SP1, also in the Standard and Web Editions. Here, you can find the features matrix for SQL Server 2016 SP1: https://msdn.microsoft.com/en-us/library/cc645993.aspxEnjoy!

- Anonymous