BentMaze 1.2: Lights Out!

I've been playing with 2D lighting lately so I can add it to my XNA maze game. Lighting in computer graphics, I think it's fair to say, is always a bit of a hack -- a matter of finding some middle ground between the (incredibly rich and complex) way light behaves in the real world on one hand, and what you can reasonably compute in real time on the other. Even fancy global illumination models cannot hope to recreate all the subtleties of real-world lighting, at least in real time. Especially for games, lighting is mainly a matter of doing something simple, deciding if it's "good enough," refining the model a bit, and repeating.

At least in 3D, we have the real world as a gold standard to compare our rendered images to (if realism is our goal). But is there a "proper" way to light an imaginary 2D world? How should a light source in my maze light the walls? If objects in the world are opaque, shouldn't they block the light and thus be completely black when viewed from above? How can the virtual camera be "above" the 2D plane anyway? The viewer is seeing the world from a rather omniscient perspective that the flat inhabitants could never experience. There's not really a "right" way to light a 2D world, so one should feel free to come up with something that's plausible, pleasing to the eye, and compatible with the desired gameplay.

In the traditional 3D graphics pipeline, lighting is determined per-vertex or per-pixel as each polygon gets rendered, and the interaction between the incoming light and the object's material is dealt with before the pixel gets written to the frame buffer. However, you can also render your objects in unlit form, then determine lighting separately, then combine the two later for a final result, somewhat like the old precomputed light maps used to texture the walls in Quake. This approach is attractive in 2D because we can render the lighting as objects independent of the world. Consider a point light source with linear falloff. In 3D this produces a sphere of light that affects a volume of space, but it's tough to directly render the sphere in a way that we can combine with a rendered 3D scene. But in 2D, we can render a disc and easily blend this with our unlit 2D scene to produce a lit result.
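As a rough illustration of the disc idea, here is a minimal sketch in plain Python rather than XNA. It is not the game's code: the function names, the grid-of-floats light map, and the exact falloff formula (intensity dropping linearly from 1 at the center to 0 at the radius) are all assumptions made for the example.

```python
import math

def linear_falloff(px, py, light_x, light_y, radius):
    """Intensity in [0, 1]: 1 at the light's center, 0 at and beyond `radius`.

    Assumed falloff model: intensity = 1 - distance / radius, clamped at 0.
    """
    distance = math.hypot(px - light_x, py - light_y)
    return max(0.0, 1.0 - distance / radius)

def render_light_disc(width, height, light_x, light_y, radius):
    """Return a height x width grid of monochrome light intensities."""
    return [
        [linear_falloff(x, y, light_x, light_y, radius) for x in range(width)]
        for y in range(height)
    ]

# A small 11x11 light map with one light in the middle.
disc = render_light_disc(11, 11, light_x=5, light_y=5, radius=4)
```

Pixels outside the radius stay at zero, so the rest of the light map is untouched and several discs can be drawn into the same target.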

There are other advantages to separating out the lighting into its own render target. Lighting can be done at lower spatial resolution than the rest of the scene, to conserve pixel bandwidth. If monochrome lighting is all you need, you could use a monochrome pixel format and save memory. Or if you want to do high-dynamic-range (HDR) lighting, you could use a floating-point frame buffer for your lighting.

Anyway, that's the approach I'm going with. I'm certainly not the first to go this direction to light a 2D game. Shawn Hargreaves talked about it near the end of this blog post. I followed the link to his demo app and was impressed to see that it still works on Windows XP, even though it's an old-school DOS game. Try it!

Here's my full-brightness scene, drawn to a render target that is the same size as the back buffer:

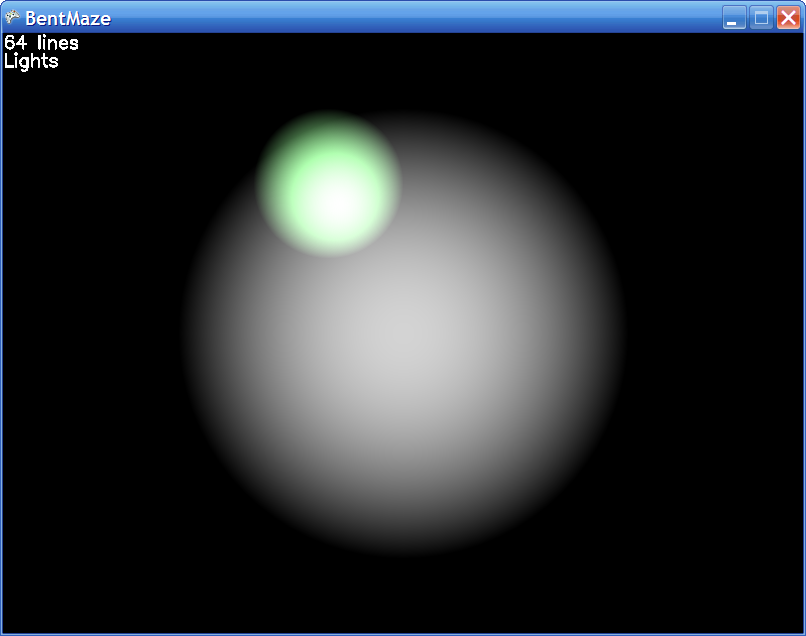

Here's my lighting, drawn to a different render target. The big white disc follows the orange dude around, and I threw in a few fixed colored lights too. I'm just using boring discs at the moment, but they could be other shapes to represent spotlights, flickering torches, etc:

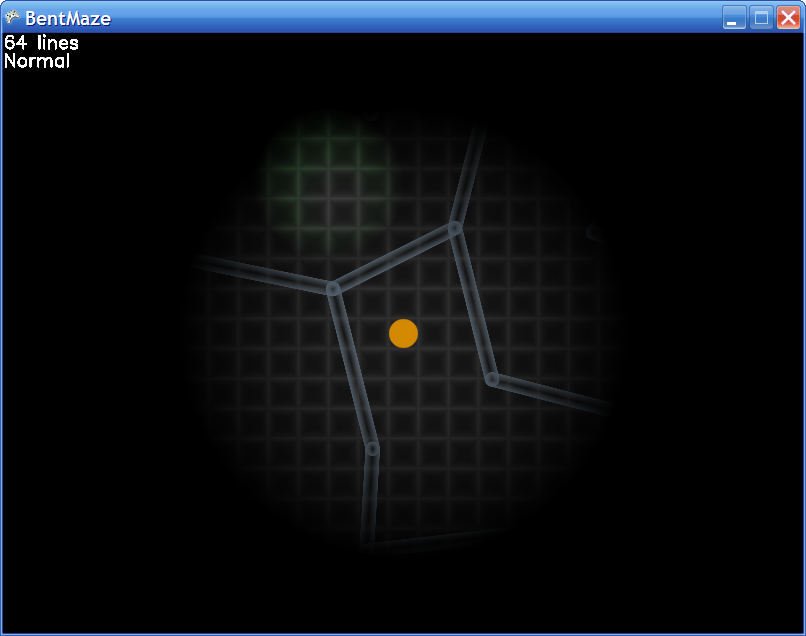

And here's the combination of the two:

I didn't use SpriteBatch to combine the buffers -- I wrote a little class to draw a fullscreen quad with a custom pixel shader to do the blending. This gives me the most flexibility to do more complex blending later.
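The post doesn't spell out which blend the shader performs, so the following is only a guess at the simplest option: a per-pixel multiply of the unlit scene color by the light-map color. It is written in plain Python instead of a pixel shader, and `blend_pixel` and `combine_buffers` are hypothetical names for this sketch.

```python
def blend_pixel(scene_rgb, light_rgb):
    """Modulate one unlit scene color by one light-map color.

    Both arguments are (r, g, b) tuples with channels in [0, 1].
    Assumption: a plain multiplicative blend, channel by channel.
    """
    return tuple(s * l for s, l in zip(scene_rgb, light_rgb))

def combine_buffers(scene, light_map):
    """Apply blend_pixel to every pixel of two equally sized 2D buffers."""
    return [
        [blend_pixel(s, l) for s, l in zip(scene_row, light_row)]
        for scene_row, light_row in zip(scene, light_map)
    ]

# One row of two pixels: the first fully lit by white light,
# the second lit only by a red light.
scene = [[(1.0, 0.5, 0.25), (1.0, 1.0, 1.0)]]
lights = [[(1.0, 1.0, 1.0), (1.0, 0.0, 0.0)]]
lit = combine_buffers(scene, lights)
```

With a multiply, black areas of the light map stay black in the result and a colored light tints whatever it touches. A custom shader leaves room to swap this function for something more elaborate.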

I also wrote a custom shader to do the grid-like "floor" pattern of the maze. By passing the coordinates of the fullscreen quad backwards through the projection and view matrices, I can get the original worldspace coordinates, so the grid moves appropriately with the camera.
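To show what "backwards through the projection and view matrices" amounts to, here is a small Python sketch under simplifying assumptions: an orthographic 2D camera whose projection is just a scale and whose view transform is just a translation. The real shader would use full inverse matrices. The function names, parameters, and the grid test are all made up for illustration.

```python
def ndc_to_world(ndc_x, ndc_y, cam_x, cam_y, view_width, view_height):
    """Map quad coordinates in [-1, 1] back to world space.

    Undoes the (assumed) orthographic projection by scaling to the
    visible extent, then undoes the view transform by adding the
    camera position.
    """
    view_x = ndc_x * view_width / 2.0
    view_y = ndc_y * view_height / 2.0
    return view_x + cam_x, view_y + cam_y

def on_grid_line(world_x, world_y, cell_size, line_width):
    """True if the world-space point falls on a horizontal or vertical grid line."""
    return (world_x % cell_size) < line_width or (world_y % cell_size) < line_width

# The center of the quad maps to wherever the camera is looking.
center = ndc_to_world(0.0, 0.0, cam_x=10.0, cam_y=20.0, view_width=8.0, view_height=6.0)
corner = ndc_to_world(1.0, -1.0, cam_x=10.0, cam_y=20.0, view_width=8.0, view_height=6.0)
```

Because the grid test runs on world coordinates, the same screen pixel lands on different grid cells as the camera moves, which is what keeps the pattern anchored to the maze.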

When two lights overlap, things look a little wonky -- you can see this in the middle screenshot above. I think there are two problems here. One is that the light map is getting saturated -- you're getting a "0.8 + 0.8 = 1.0" effect. With an HDR texture, we could properly combine the light values and then normalize the result. The other part of the issue is related to gamma. A linear ramp of pixel values from 0 to 1 doesn't produce a visually linear progression of brightness onscreen, due to the way monitors work. So really this gamma effect needs to be removed before combining lights, then re-added afterwards -- otherwise you get weird nonlinear boosts in brightness. See here for a nice discussion of the issue.
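Both effects are easy to see with a few numbers. The sketch below is plain Python and makes one loud assumption: the display response is modeled as a simple power curve with exponent 2.2, which only approximates what real monitors and the sRGB standard do. The function names are hypothetical.

```python
GAMMA = 2.2  # assumption: a plain power curve standing in for the monitor's response

def to_linear(encoded):
    """Remove gamma: convert a stored pixel value to linear light."""
    return encoded ** GAMMA

def to_encoded(linear):
    """Re-apply gamma: convert linear light back to a stored pixel value."""
    return linear ** (1.0 / GAMMA)

def add_clamped(a, b):
    """What a fixed-range light map does: the sum saturates at 1.0."""
    return min(1.0, a + b)

def add_lights_linear(a, b):
    """Remove gamma, add in linear space, clamp, then re-apply gamma."""
    total = to_linear(a) + to_linear(b)
    return to_encoded(min(1.0, total))

saturated = add_clamped(0.8, 0.8)        # the "0.8 + 0.8 = 1.0" effect
naive = add_clamped(0.5, 0.5)            # adding stored values directly
corrected = add_lights_linear(0.5, 0.5)  # adding the light they represent
```

Adding two stored values of 0.5 directly gives full white, while adding them in linear space gives a stored value of roughly 0.69 under this model. That gap is the nonlinear boost in brightness where discs overlap.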

So this lighting effect works well -- the maze is nice and dark except where I've placed some lights. But if you're like me, you might wonder if things could be taken a bit further along the "realism" scale. I'm already on top of it and will have some double-extra-cool stuff to talk about next time.

-Mike

Comments

- Anonymous

March 13, 2007

The discs of light used by my XNA 2D lighting code look decent, but shadows would really add to the realism.