iSCSI or SMB Direct: Which One Is Better?

Since Windows Server 2012, we have supported placing Hyper-V VMs and SQL Server databases on a file share (SMB share), which provides a cost-effective storage solution. Meanwhile, Windows Server also has a built-in iSCSI Target and initiator. So the question comes up: which one is better, iSCSI or SMB?

Like a consultant's standard answer, "It depends..." In the following circumstances, you may want to use iSCSI.

I need shared SAN block storage because…

- It will be the shared storage for my new failover cluster

- My application delivers best performance with block storage

- I have an application not supported on File Servers

- I am most familiar with SAN block storage

- I want to boot from SAN storage

I prefer iSCSI SANs because…

- I am not constrained by the limits (number of ports, distance…) of a shared SAS JBOD

- Fibre Channel is too expensive – expensive HBAs, expensive switches, expensive FC expertise…

- I don’t need special NICs or switches to build an iSCSI SAN

Then what are the benefits of using SMB?

- Easy to use. No need to worry about targets, initiators, LUN provisioning, etc.

- Supports RDMA, which provides low latency and more consistent performance.

- Supports Multichannel, which aggregates network bandwidth and provides fault tolerance when multiple paths are available between client and server. No need to configure MPIO or NIC Teaming; it's all automatic in most cases.

- Provides server-side caching, which Microsoft iSCSI Target doesn't support.

- Durable handles. Clients transparently reconnect to the server after a temporary disconnection. A Scale-Out File Server cluster can provide continuous availability, beyond traditional high availability.

- Able to scale out. Microsoft iSCSI Target doesn't support scaling out across multiple nodes.

You may already be able to make a decision. If not, I guess the next question is: what about performance? It sounds like iSCSI should perform better because it is block storage. Is that true?

To compare the performance of iSCSI and SMB, I don't want a hardware resource (say, network or storage) to become the bottleneck, so I prepared the lab environment below.

WOSS-H2-14 (Role: iSCSI Target and SMB File Share)

- DELL 730xd

- CPU: E5-2630v3 x2

- Memory: DDR4 128GB

- Storage: Micron P420m 1.4TB

- Network: Mellanox ConnectX-3 56Gb/s Dual port

- OS: Windows Server 2012 R2

WOSS-H2-16 (Role: iSCSI Initiator and Hyper-V host)

- DELL 730xd

- CPU: E5-2630v3 x2

- Memory: DDR4 144GB

- Network: Mellanox ConnectX-3 56Gb/s Dual port

- OS: Windows Server 2012 R2

Benchmark:

------------------------------

Before comparing iSCSI and SMB, let's first look at benchmarks of the network and storage to make sure neither will be our bottleneck.

The test environment above supports network throughput of up to 3,459 MB/s.

Now the storage: the drive delivers 3,477 MB/s of throughput and 786,241 IOPS for 100% random raw-disk reads.
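To make the baseline explicit, here is a minimal Python sketch that records the two measurements above, takes the lower one as the ceiling any remote-storage test could reach, and converts an IOPS figure into throughput. The function names are mine, and the 4 KiB block size used in the conversion is an assumption: the block size of the raw-disk IOPS test is not stated above.

```python
# Baseline figures quoted above (decimal MB/s and IOPS).
NETWORK_MBPS = 3459
STORAGE_MBPS = 3477
STORAGE_IOPS = 786_241


def ceiling_mbps(network_mbps, storage_mbps):
    """The slower of the two resources bounds any test that uses both."""
    return min(network_mbps, storage_mbps)


def iops_to_mbps(iops, block_bytes):
    """Convert an IOPS figure to throughput in decimal MB/s."""
    return iops * block_bytes / 1_000_000


print(ceiling_mbps(NETWORK_MBPS, STORAGE_MBPS))
# Assumption: 4 KiB blocks; the post does not state the block size here.
print(round(iops_to_mbps(STORAGE_IOPS, 4096)))
```

Under that block-size assumption, the IOPS figure works out to roughly the same order of throughput as the sequential number, so network and storage are closely matched in this lab.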

Test case 1: Performance of iSCSI Disk

----------------------

I created a 100 GB iSCSI volume on WOSS-H2-14 and configured the iSCSI initiator on WOSS-H2-16 to connect to that target and volume.

Then I ran CrystalDiskMark and FIO against that volume. As the screenshot below shows, the read throughput is only 1,656 MB/s, which is far below the throughput of our network and storage. The write throughput, however, matches our storage benchmark. The Micron P420m is not designed for write-intensive applications, so its write performance becomes the bottleneck in this case. Let's therefore focus on comparing read performance between iSCSI and SMB.

The FIO output shows that 4 KB random I/O reaches only 24,000 IOPS, which is also far below the drive's capability.
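One way to quantify "far below" is to express each iSCSI result as a percentage of the corresponding baseline. The sketch below uses only numbers quoted in this post; the dictionary keys and the helper name are mine, and comparing the 4 KB IOPS result with the raw-disk IOPS figure assumes the two tests used comparable block sizes.

```python
# Baselines and iSCSI results quoted in this post.
baseline = {"network_mbps": 3459, "storage_mbps": 3477, "storage_iops": 786_241}
iscsi = {"read_mbps": 1656, "rand_4k_iops": 24_000}


def percent_of(value, reference):
    """Return value as a percentage of reference, rounded to one decimal."""
    return round(100 * value / reference, 1)


# Share of the network throughput baseline.
print(percent_of(iscsi["read_mbps"], baseline["network_mbps"]))
# Share of the raw-disk throughput baseline.
print(percent_of(iscsi["read_mbps"], baseline["storage_mbps"]))
# Share of the raw-disk IOPS baseline.
print(percent_of(iscsi["rand_4k_iops"], baseline["storage_iops"]))
```

With these inputs, iSCSI read throughput comes out a little under half of either baseline, and random IOPS at roughly 3% of the raw-disk figure.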

Test case 2: Performance of VM running in an iSCSI volume

----------------------

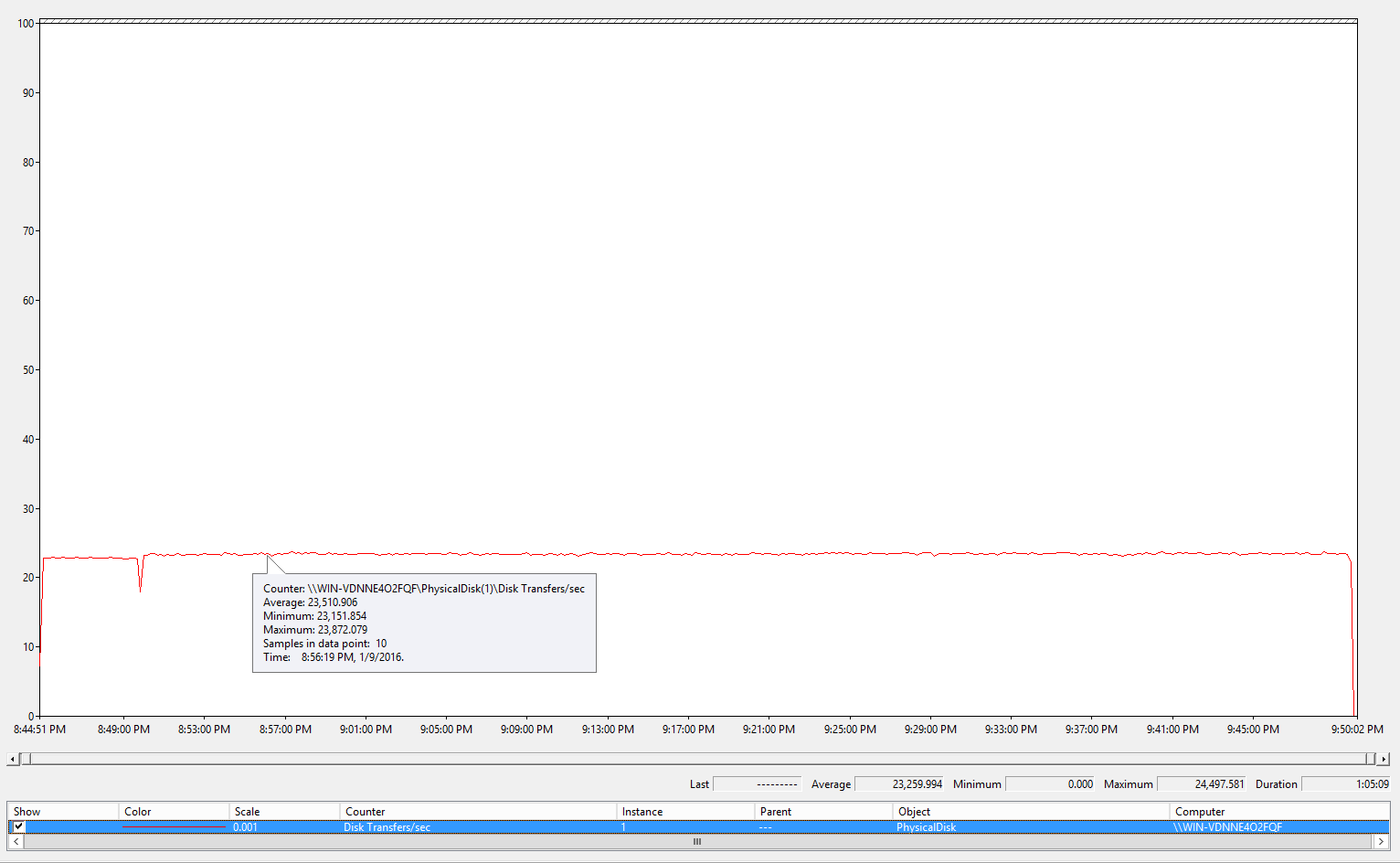

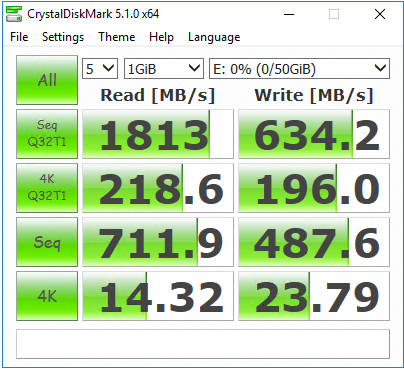

On WOSS-H2-16 I created a VM and attached a 50 GB fixed data VHD to it. That data VHD is stored on the iSCSI volume above. Here is the result.

I also captured a performance log while running FIO. The FIO test has two parts: the first is 5 minutes of 100% 4 KB random reads, and the second is 60 minutes of 100% 4 KB random writes. From the picture below, we can see that both 4 KB random reads and writes are capped at 230,000 IOPS.
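For readers who want to set up a similar two-phase run, here is a hedged Python sketch that generates an fio job file with a 5-minute 4 KB random-read phase followed by a 60-minute 4 KB random-write phase. Only the block size, access pattern, and durations come from this post. The I/O engine, direct I/O, queue depth, job count, file name, and file size are assumptions for illustration, not the settings actually used in the test.

```python
def fio_job(filename="fio-test.dat", size="10g", iodepth=32, numjobs=4):
    """Build fio job-file text: 5 min of 4 KB random reads, then 60 min of random writes."""
    sections = [
        ("global", [
            "ioengine=windowsaio",   # assumption: fio runs inside a Windows guest
            "direct=1",              # assumption: bypass the guest file cache
            "bs=4k",                 # from the post: 4 KB I/O
            "time_based",            # keep running until runtime expires
            f"filename={filename}",  # hypothetical test file on the data VHD
            f"size={size}",          # hypothetical working-set size
            f"iodepth={iodepth}",    # assumption
            f"numjobs={numjobs}",    # assumption
            "group_reporting",
        ]),
        ("randread-5min", ["rw=randread", "runtime=300"]),
        # stonewall makes fio wait for the read phase to finish first.
        ("randwrite-60min", ["stonewall", "rw=randwrite", "runtime=3600"]),
    ]
    lines = []
    for name, options in sections:
        lines.append(f"[{name}]")
        lines.extend(options)
        lines.append("")
    return "\n".join(lines)


print(fio_job())
```

The output can be saved to a file (say, a hypothetical `vm-test.fio`) and passed to `fio` as its job file. Queue depth and job count have a large effect on the IOPS a test reports, so they should be kept identical when comparing the iSCSI and SMB cases.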

Test case 3: Performance of VM running in a SMB Share

----------------------

I moved the data VHD above from the iSCSI volume to an SMB share on WOSS-H2-14. Both the iSCSI volume and the SMB share are on the same drive. Here is the test result for this case.

Summary:

---------------------

Comparing the results of the test cases above, we find that SMB provides better throughput, much higher IOPS, and much lower latency.

Comments

- Anonymous

January 15, 2016

iSCSI 'imitator' => LOL ! here that's an initiator Larry :-)

thx anyway for your write-up and visual analysis, rly useful.

- Anonymous

January 24, 2016

Thanks, Lauren! I will correct that typo.

- Anonymous

June 01, 2016

Good information and very useful. Thank you for taking the time to put this together.

- Anonymous

November 15, 2016

I agree. This is exactly the type of information I was looking for. I'm glad I found this article. Thanks.

- Anonymous

December 09, 2016

Thank you very much, this is a very nice article!

- Anonymous

April 25, 2017

Good job!!! Thank you very much.