Force network traffic through a specific NIC with SMB multichannel

Hello,

Servers ship with more and more Network Interface Cards (NICs) (blades are a different story). The usual recommendation is to group (team) NICs and dedicate them (with VLANs) to shape the traffic and gain performance.

My setup did not allow me to fully deploy this recommendation because:

- Some servers don’t have enough NICs

- The network uses mixed technologies (Ethernet and InfiniBand)

I then realized I was not getting the full throughput from my storage components, and I used SMB Multichannel to solve it.

The setup

The setup is super classic:

- 1 Ethernet network for administration

- 3 InfiniBand HBAs and subnets (created by OpenSM) for the clustered storage

- The storage is exposed to the cluster through an SMB share (a new feature from SMB 3, Hyper-V 2012 and Failover Clustering for Hyper-V 2012 R2)

The issue:

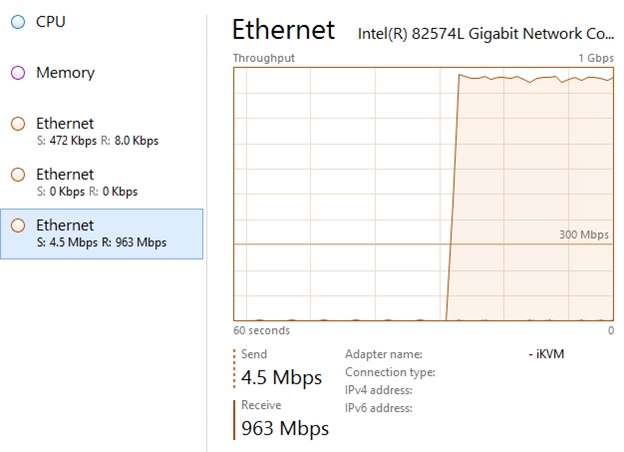

When the Administration NIC is enabled, a data copy between the storage server and a cluster node is capped at the throughput of the Ethernet card (1 Gbps):

It should instead go through the InfiniBand HBAs: I set NIC metrics, edited hosts file records, etc. so that communication between the 3 servers uses the InfiniBand cards, which have a 10 Gbps throughput.

To check that this is technically possible, I disabled the administration NIC and retested with the following results:

So it is clear: the data flows through the Admin card, even though the hosts file and the NIC metrics are set to force the use of the dedicated subnet to connect to the storage server. As the copy goes through Windows file shares (SMB protocol, using the \\Server\FileShare convention), I needed a way to force the flow through a specific IP / subnet.
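The routing preferences mentioned above can be inspected from PowerShell before changing anything. This is only a minimal sketch using the built-in networking cmdlets; the address 10.10.1.10 is a hypothetical placeholder for the storage server's InfiniBand IP and does not come from this setup, and Find-NetRoute is assumed to be available (Windows Server 2012 R2 or later).

```powershell
# List IPv4 interfaces ordered by metric (a lower metric is preferred for routing).
Get-NetIPInterface -AddressFamily IPv4 |
    Sort-Object InterfaceMetric |
    Format-Table InterfaceAlias, InterfaceMetric, ConnectionState

# Show which local interface and source address Windows would pick to reach
# the storage server. 10.10.1.10 is a hypothetical placeholder address.
Find-NetRoute -RemoteIPAddress "10.10.1.10" |
    Select-Object InterfaceAlias, IPAddress, NextHop
```

If the metrics and the route already point at the InfiniBand adapter while the copy still runs at 1 Gbps, the interface choice is being made at the SMB level, which is what the rest of this post deals with.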

The solution: SMB Multichannel

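SMB Multichannel solves the issue. With a Multichannel constraint, the network flow to the storage server can be forced through the high-speed NIC instead of the administration network. In this configuration, the storage server is the “SMB Server” and the 2 cluster nodes are the “SMB Clients”. All the commands below are PowerShell cmdlets from the SmbShare module, which is built into Windows Server 2012 and later; run them from a PowerShell window opened as administrator. The configuration steps are: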

- Check SMB multichannel status:

On the Storage server (the SMB server), type:

Get-SmbServerConfiguration | Select EnableMultiChannel

If True is returned, you’re good. If not, type:

Set-SmbServerConfiguration -EnableMultiChannel $true

On the cluster nodes (the SMB clients), type:

Get-SmbClientConfiguration | Select EnableMultiChannel

If True is returned, you’re good. If not, type:

Set-SmbClientConfiguration -EnableMultiChannel $true
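The check and the fix above can also be combined so that the setting is only changed when needed. This is a minimal sketch, assuming an elevated PowerShell session on each machine; the -Force switch is added here only to skip the confirmation prompt and is not part of the original steps.

```powershell
# The cmdlets below come from the built-in SmbShare module.
# To list the Multichannel-related ones: Get-Command -Module SmbShare -Name *Multichannel*

# On the storage server (SMB server): enable Multichannel only if it is off.
if (-not (Get-SmbServerConfiguration).EnableMultiChannel) {
    Set-SmbServerConfiguration -EnableMultiChannel $true -Force
}

# On each cluster node (SMB client): same check on the client side.
if (-not (Get-SmbClientConfiguration).EnableMultiChannel) {
    Set-SmbClientConfiguration -EnableMultiChannel $true -Force
}
```

The two halves are meant to run on different machines: the first on the storage server, the second on every node.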

- Check the SMB connection status:

On the cluster nodes (the SMB clients), type:

Get-SmbConnection

It returns the shares that the node is connected to. Here, check the SMB version in use (the Dialect column). For Windows Server 2012 R2, you should see 3.02.

Then check which adapters the connection goes through by typing:

Get-SmbMultichannelConnection

You can see the “Client IP” and the “Server IP”. It should allow you to check that SMB uses the appropriate network.
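To make the two checks above easier to read, the output can be narrowed to the columns that matter and the interface index mapped back to an adapter name. This is a sketch only; the property names are the ones I expect these cmdlets to expose on Windows Server 2012 R2, so adjust them if your output differs.

```powershell
# On a cluster node (SMB client), after accessing the share at least once.

# Which shares are connected, and with which SMB dialect.
Get-SmbConnection | Select-Object ServerName, ShareName, Dialect

# Which client/server IP pairs Multichannel is actually using.
Get-SmbMultichannelConnection |
    Select-Object ServerName, ClientIpAddress, ServerIpAddress, ClientInterfaceIndex

# Map the interface index back to an adapter name and link speed.
Get-NetAdapter | Select-Object Name, ifIndex, LinkSpeed
```

If the client IP belongs to the administration subnet, SMB is not using the storage network.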

- Constrain SMB Multichannel

To fix the issue, I force the connection to go through the adapter I want. First, I list the adapters with this command:

Get-NetAdapter | select -Property Name, LinkSpeed

I then pick the name of the adapter to force and the name of the “SMB Server” to connect to:

New-SmbMultichannelConstraint -InterfaceAlias "AdapterToStorage" -ServerName "Storage"

Answer “Yes to All” at the confirmation prompt, then check the result by typing:

Get-SmbMultichannelConnection

- Repeat on all the cluster nodes
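Since the constraint has to be repeated on every node, it can help to wrap the steps above in a small script that is safe to run more than once. This is a minimal sketch, assuming an elevated PowerShell session on each cluster node; "AdapterToStorage" and "Storage" are the example names used above, and -Force is added only to skip the confirmation prompt.

```powershell
# Example names from the steps above; replace with your own.
$adapter = "AdapterToStorage"
$server  = "Storage"

# Create the constraint only if this server/adapter pair is not constrained yet.
$existing = Get-SmbMultichannelConstraint |
    Where-Object { $_.ServerName -eq $server -and $_.InterfaceAlias -eq $adapter }
if (-not $existing) {
    New-SmbMultichannelConstraint -InterfaceAlias $adapter -ServerName $server -Force
}

# Review the constraints in place, then the connections that result.
Get-SmbMultichannelConstraint
Get-SmbMultichannelConnection

# To undo the constraint later:
# Get-SmbMultichannelConstraint |
#     Where-Object { $_.ServerName -eq $server } |
#     Remove-SmbMultichannelConstraint
```

The constraint matches on the server name exactly as it is used in the UNC path, so the name given to -ServerName should be the one used to reach the share.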

The results are now as expected (with the Admin card enabled):

The storage flow goes through the constrained adapter (the 10 Gbps one), even though the administration network is connected on all the servers (storage + cluster nodes).

2 side notes:

- The increase from 2 Gbps to 5.5 Gbps in the screenshots comes from transferring different files and from RAM caching during the tests

- The same principle applies to the Live Migration subnet, to force SMB transfers between the nodes onto the fastest network (SMB is an option in the Hyper-V settings)
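For the Live Migration note, the equivalent PowerShell could look like the sketch below. It assumes Windows Server 2012 R2 with the Hyper-V module and an elevated session on each node; "Node2" and "AdapterToLiveMigration" are hypothetical names, not values from this setup.

```powershell
# Tell Hyper-V to carry live migration traffic over SMB.
Set-VMHost -VirtualMachineMigrationPerformanceOption SMB

# Constrain SMB traffic toward the other node to the fast adapter.
# "Node2" and "AdapterToLiveMigration" are hypothetical names.
New-SmbMultichannelConstraint -InterfaceAlias "AdapterToLiveMigration" -ServerName "Node2"

# Confirm the live migration settings.
Get-VMHost |
    Select-Object VirtualMachineMigrationEnabled, VirtualMachineMigrationPerformanceOption
```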

Hope this helps

<Emmanuel />

References:

- Deploy SMB multichannel: https://technet.microsoft.com/en-us/library/dn610980.aspx

Comments

Anonymous

February 02, 2016

This is nice, but you're leaving something out. You say, "From storage server, type Get-SmbServerConfiguration | Select EnableMultiChannel" - Type it from WHERE? Do we use PowerShell? You never said HOW to type it, and this is not a standard Windows command. Is it only via some InfiniBand software? Do we type this in PowerShell? Very confusing. Thanks for the info so far, though - very good overall!

Anonymous

February 02, 2016

This is good, but you say "From the storage server, type 'get-smb...'" From WHERE? This is not a standard Windows command. Is this PowerShell? You never specified. Overall, very good, but please don't assume the reader knows to go into PowerShell. Or are these commands proprietary to some 'InfiniBand' component? And, if they are PowerShell, what module(s) do we need to load in order to use these commands? Thanks for the wonderful details so far.