Generate scenarios for simulations

For autonomous driving and assistance systems, you must perform open-loop and closed-loop validations by simulating specific scenarios. OpenDRIVE and OpenSCENARIO are examples of widely adopted industry standards for defining driving scenarios. Today, data scientists often identify corner cases and then use a common scripting language such as Python to generate scenarios in a high-level script format compliant with OpenSCENARIO. Test managers manage the suite of scenarios and test engineers manage test execution, but neither group can typically edit the scripts directly.

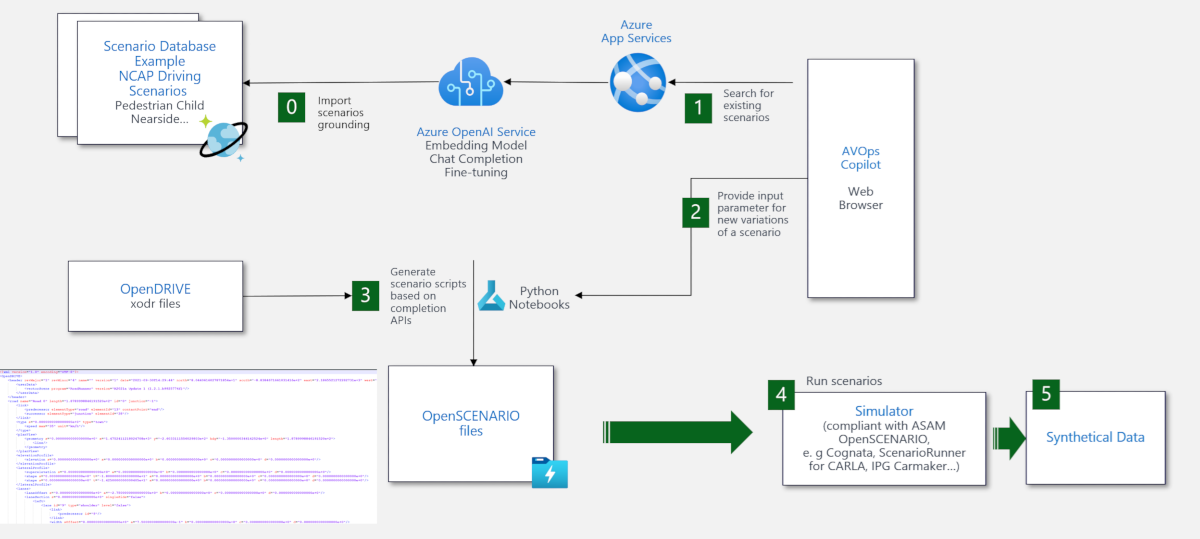

A Copilot interface using Azure OpenAI large language models (LLMs) can support the generation of OpenSCENARIO files passed to simulation engines and make the entire validation process more efficient.

Example

Test managers often start with a vague scenario and need to iterate on it. A Copilot can accept the test manager's first draft of a scenario, such as "Two cars on a highway overtaking each other", suggest how to refine it, and support the script generation process.
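As a minimal sketch of how such a first draft might be handed to a chat-based LLM, the following Python builds a system and user message pair in the common chat-message format. The function name `build_refinement_messages` and the wording of the system prompt are assumptions made for illustration; they are not part of any Copilot or Azure OpenAI API, and the call that would send the messages to a deployed model is deliberately left out.

```python
def build_refinement_messages(draft: str) -> list[dict[str, str]]:
    """Build chat messages asking an LLM to refine a vague scenario draft.

    Hypothetical helper: the prompt text below is an illustrative assumption.
    """
    system = (
        "You help test managers refine driving scenarios. "
        "Ask for missing details such as speeds, lane count, and weather, "
        "then propose a precise scenario description that can be turned "
        "into an OpenSCENARIO file."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": draft.strip()},
    ]


# The test manager's vague first draft from the text above.
messages = build_refinement_messages("Two cars on a highway overtaking each other")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the message construction separate from the model call makes it easy to review and version the prompt independently of the service that answers it.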

The following example shows an end-to-end process of generating and evaluating a scenario:

1. Create scenarios using generative AI. You can refine the model based on an existing scenario database.

2. From the created scenarios, generate test scripts or scenario files that comply with OpenSCENARIO, and pass them as inputs to the simulator.

3. Run the simulation.

4. If the test scenario fails, add similar test scenarios and variations, and refine them further. For more information, see Pegasus Method.
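The four steps above can be sketched as a small loop in Python. Everything here is an assumption made for illustration: the `Scenario` fields, the pass criterion in `run_simulation` (a stand-in for a real simulator), and the way `variations` perturbs a failed scenario. The XML uses real OpenSCENARIO element names (`OpenSCENARIO`, `FileHeader`, `Entities`, `ScenarioObject`), but the output is a heavily simplified skeleton with placeholder header values and is not schema-valid.

```python
import random
import xml.etree.ElementTree as ET
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    """Hypothetical, simplified scenario description (not an OpenSCENARIO type)."""
    name: str
    ego_speed_kmh: float
    lead_speed_kmh: float


def to_openscenario_skeleton(s: Scenario) -> str:
    """Step 2: serialize to a simplified XML skeleton (not schema-valid)."""
    root = ET.Element("OpenSCENARIO")
    # Placeholder header values, chosen only for this sketch.
    ET.SubElement(root, "FileHeader", revMajor="1", revMinor="0",
                  date="2024-01-01T00:00:00", description=s.name,
                  author="sketch")
    entities = ET.SubElement(root, "Entities")
    for obj_name in ("ego", "lead"):
        ET.SubElement(entities, "ScenarioObject", name=obj_name)
    return ET.tostring(root, encoding="unicode")


def run_simulation(xosc: str, s: Scenario) -> bool:
    """Step 3: stand-in for a simulator. Assumed criterion: the scenario
    passes when the closing speed is at most 30 km/h."""
    return s.ego_speed_kmh - s.lead_speed_kmh <= 30


def variations(s: Scenario, n: int, rng: random.Random) -> list[Scenario]:
    """Step 4: derive similar scenarios by perturbing the ego speed."""
    return [
        replace(s, name=f"{s.name}-var{i}",
                ego_speed_kmh=s.ego_speed_kmh + rng.uniform(-10, 10))
        for i in range(n)
    ]


def evaluate(seed_scenarios, max_rounds=2, n_variations=3, seed=0):
    """Run each scenario; queue variations of failures for the next round."""
    rng = random.Random(seed)
    results = {}
    queue = list(seed_scenarios)
    for _ in range(max_rounds):
        next_queue = []
        for s in queue:
            passed = run_simulation(to_openscenario_skeleton(s), s)
            results[s.name] = passed
            if not passed:
                next_queue.extend(variations(s, n_variations, rng))
        queue = next_queue
    return results


# Step 1 would come from generative AI; two hand-written seeds stand in here.
seeds = [Scenario("overtake", 130, 90), Scenario("follow", 100, 90)]
results = evaluate(seeds)
for name, passed in results.items():
    print(name, "passed" if passed else "failed")
```

Bounding the loop with `max_rounds` keeps a persistently failing scenario from generating variations indefinitely; in practice the stopping rule would depend on the test strategy.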

Using this type of process, you can evaluate algorithms in long-tail, specialized cases. The following diagram shows the architecture using Microsoft AI capabilities to support the refinement process: