Observing SQL Server Transaction Log Flush Sizes using Extended Events and Process Monitor

In this blog post we’ll take a look at how SQL Server interacts with the transaction log file using Extended Events and Process Monitor. We will see how small transactions can have a negative impact on transaction log IO throughput and how larger transactions can actually be much faster than a lot of small transactions.

My goal with this post is to demonstrate the behavior of a Log Flush operation to the transaction log file for a given database and how the size of different transactions have an influence on the size of the IOs written to the log file. While I will be showing cases where larger transactions do perform better than smaller transactions, it is not entirely appropriate for every environment. Transaction scope should ultimately be dictated by business requirements, and not solely throughput. Choices made in this area not only have an effect on performance, but also on concurrency and business needs, so choose wisely.

First, let’s open up SSMS and create a table to use for our example.

USE AdventureWorks2012

GO

IF OBJECT_ID('dbo.Numbers') IS NOT NULL

DROP TABLE dbo.Numbers

GO

CREATE TABLE dbo.Numbers

(

Num INT NOT NULL,

CONSTRAINT PK_Numbers PRIMARY KEY CLUSTERED(Num)

)

GO

Next, open up Process Monitor (which can be downloaded here: https://technet.microsoft.com/en-us/sysinternals/bb896645.aspx). We will only be looking for the sqlservr.exe Process and the Path to the transaction log file for AdventureWorks2012, in my case C:\SQL\AdventureWorks2012_log.ldf. The idea here is to watch the size of the IO flush to the transaction log file. The size will vary from the sector size on disk (usually 512 bytes) all the way up to 60k in size. The smaller the log flush, the more IO we have to do to write log records out to the transaction log file (known as a log flush). If the transaction is larger (closer to 60K) then the less work we do in terms of write frequency to the log because we can fit more log records into a single write.

Next I am going to dump 12,000 records into the Numbers table I previously created. Since each iteration is a separate implicit transaction, we will have to flush the log records in the log buffer to the transaction log upon each transaction completion. There are 3 things that cause SQL Server to need to flush a log buffer to disk (to an adjoining log block in the transaction log).

1. A transaction commits and that transaction has an active log record in a log buffer. This will cause all log records in those buffer (again, up to 60K worth of transaction log record for each log buffer) to be flushed to the log file.

2. We hit the 60K size limit for the log buffer and must flush it to disk.

3. We have data pages that we must write to disk and there are log records related to those pages. We must write those log records to the log file BEFORE we write the data pages to the data files (this supports our Write Ahead Logging (WAL) protocol).

SET STATISTICS IO OFF

SET NOCOUNT ON

DECLARE @Number INT

SET @Number = 1

WHILE @Number <= 12000

BEGIN

INSERT INTO dbo.Numbers(Num)

SELECT @Number

SET @Number = @Number + 1

END

GO

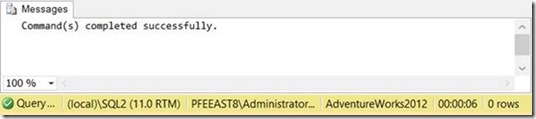

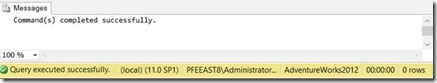

We can see here that the above statement took around 6 seconds on my system. Your mileage will vary here in aspect to duration.

And we can see that we did, in fact, insert 12,000 records into the table.

SELECT COUNT(*) AS RecordCount

FROM dbo.Numbers

GO

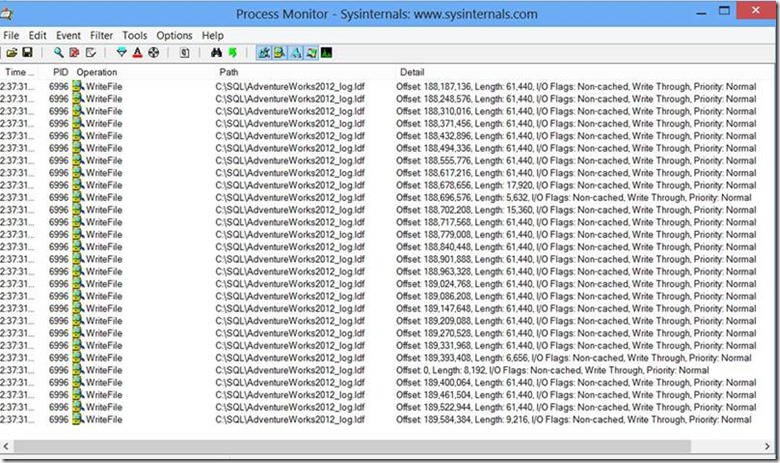

More interestingly, looking at Process Monitor we can see the size of the writes to the transaction log file. Because these were very small transactions, we see that we wrote many, many times to the transaction log file in 512 byte increments. That is a lot of work to do, and we can see the result in the 6 second duration in the above INSERT statement loop.

Using extended events, I can use a histogram target to capture the size of the writes and how many times that write size occurred to the transaction log. In this case, almost every one of the 12,000 inserts was in 512 byte increments. I use the database_id of 5 in my example script below because my AdventureWorks2012 database_id is 5 on my system.

CREATE EVENT SESSION [LogRecords] ON SERVER

ADD EVENT sqlserver.log_flush_start(

ACTION(sqlserver.database_name)

WHERE ([database_id]=(5)))

ADD TARGET package0.histogram(SET filtering_event_name=N'sqlserver.log_flush_start',

source=N'write_size',source_type=(0))

WITH (MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,

MAX_DISPATCH_LATENCY=30 SECONDS,MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=NONE,

TRACK_CAUSALITY=OFF,STARTUP_STATE=OFF)

GO

Now, what happens if we batch our INSERT statements up into one large transaction? First, clear out the table using a TRUNCATE statement:

TRUNCATE TABLE dbo.Numbers

GO

Next, run the same INSERT statement as before, this time just wrap the statement into an explicit transaction.

SET STATISTICS IO OFF

SET NOCOUNT ON

DECLARE @x INT

SET @x = 1

BEGIN TRANSACTION

WHILE @x <= 12000

BEGIN

INSERT INTO dbo.Numbers(Num)

SELECT @x

SET @x = @x + 1

END

COMMIT TRANSACTION

GO

We can see that the transaction is MUCH faster this time – less than a second in duration.

Looking at Process Monitor we see that the writes to the transaction log file are now mostly 60k in size rather than the 512 byte writes that we previous observed. This means that there were many fewer writes to the log file.

The xEvent histogram backs up what we see with Process Monitor – all but a few of the transaction log writes are in 60k increments. This happens to be much faster than writing in very small increments. It is also worth noting here that the write nature to the transaction log is always sequential. A log flush always writes its transaction log records logically to the transaction log file in a place directly “after” the previous log flush. The reason I stress using “after” here is because the transaction log is also circular in nature. If when writing to the transaction log, the end of the file is reached, it is certainly possible to wrap back to the beginning of the file if the VLF boundaries at the beginning of the file are available for reuse. For more information regarding the physical architecture of the transaction log, see this article: https://technet.microsoft.com/en-us/magazine/2009.02.logging.aspx

Here are a couple of other articles that also dig into transaction log internals:

https://msdn.microsoft.com/en-us/library/cc917726.aspx

Tim Chapman

Comments

Anonymous

November 05, 2013

Hi, thanks for explaining this so clearly. I'm now off to read up on Extended Events, as this looks like a very powerful feature. Do you happen to know what the effects of a very large transaction log (>20GB) would be, if the DB was running in Simple mode? Any additional seek time involved (time to get the current log position etc)... I'm trying to investigate if there is any performance benefit in shrinking the logs prior to using them, if I've just moved my DB into Simple mode from Full Recovery mode in preparation for a massive bulk load operation (whole database being unloaded and then re-loaded back in again - it's an SAP system). Thanks very much. DarrylAnonymous

July 29, 2016

Hi Tim,Thanks for the article. I observed the same as you have mentioned above but when i tried dropping the table and running the same against from top all transactions are running in 60K. Can you help me understand why?