Page Compression and the 4038 Length Limitation

While it is well documented that when applying page compression to a table only in-row data will be compressed, it is not so well known that only strings up a maximum length of 4038 can be compressed with page compression. If you have ever turned on page compression for large blobs of data and wondered why you are not getting a good compression ratio this post will explain why.

There are several prerequisites to page compression. One well documented stipulation is that compression will only work on in-row data. If a varchar(MAX) is used the string data will only be held in-row if it is 8000 characters or less than in length. Varchar(MAX) data can be compressed with page compression but it has to be of a small enough string length. However, although 8000 characters and under will allow a record to fit in-row, it would need to be at least 4038 characters or less for page compression.

The following example shows page compression not working for in-row data. Here we create a table, add 1000 records to it with a length of 8000 characters, apply page compression and then return the number of pages associated with that table.

CREATE TABLE MSCompressionDemoTestTable (stringdata varchar(8000))

GO

INSERT INTO MSCompressionDemoTestTable (stringData) VALUES (REPLICATE('a', 8000))

GO 1000

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

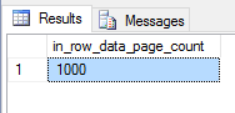

SELECT in_row_data_page_count FROM sys.dm_db_partition_stats WHERE OBJECT_ID = OBJECT_ID('MSCompressionDemoTestTable')

GO

DROP TABLE MSCompressionDemoTestTable

1000 pages are still being used by this table. No compression has been performed.

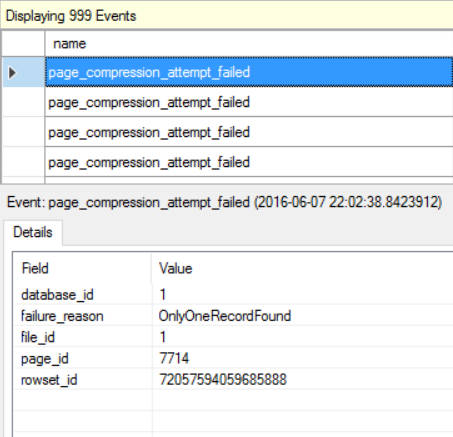

To investigate why this is not working we can look at the extended event sqlserver.page_compression_attempt_failed.

CREATE EVENT SESSION [PageCompressionErrors] ON SERVER

ADD EVENT sqlserver.page_compression_attempt_failed

WITH (MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,MAX_DISPATCH_LATENCY=30 SECONDS,MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=NONE,TRACK_CAUSALITY=OFF,STARTUP_STATE=OFF)

GO

When attempting to compress the data the extended event is returning the failure_reason of OnlyOneRecordFound.

The algorithm that performs the page compression will look at each page it is compressing to see if there is more than one record on that page. If there is then it will attempt compression, if not then it will skip over that page. The algorithm sees no value in creating a dictionary and compressing just one record so ignores pages with one record in them.

If we half the size of the string data we will get two records per page and so the page compression will be successful.

CREATE TABLE MSCompressionDemoTestTable (stringdata varchar(8000))

GO

INSERT INTO MSCompressionDemoTestTable (stringData) VALUES (REPLICATE('a', 4000))

GO 1000

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

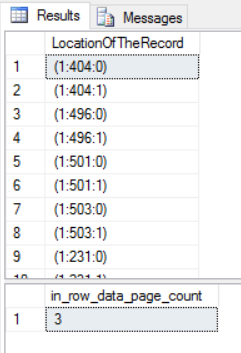

SELECT in_row_data_page_count FROM sys.dm_db_partition_stats WHERE OBJECT_ID = OBJECT_ID('MSCompressionDemoTestTable')

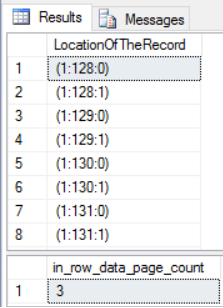

SELECT sys.fn_PhysLocFormatter (%%PHYSLOC%%) LocationOfTheRecord FROM MSCompressionDemoTestTable

GO

DROP TABLE MSCompressionDemoTestTable

We can see from the results that each page has two slots (or records). After we turned on page compression we have compressed from 1000 records down to 3 pages.

Up until now we have been looking at non unicode data. The following example uses an NVarchar (unicode) type.

CREATE TABLE MSCompressionDemoTestTable (stringdata Nvarchar(4000))

GO

INSERT INTO MSCompressionDemoTestTable (stringData) VALUES (REPLICATE(N'a', 4000))

GO 1000

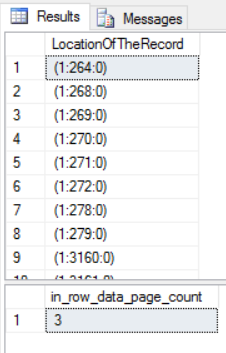

SELECT sys.fn_PhysLocFormatter (%%PHYSLOC%%) LocationOfTheRecord FROM MSCompressionDemoTestTable

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

SELECT in_row_data_page_count FROM sys.dm_db_partition_stats WHERE OBJECT_ID = OBJECT_ID('MSCompressionDemoTestTable')

GO

DROP TABLE MSCompressionDemoTestTable

From the results we can see we have filled up the page as we have one page per record, but the page compression still compresses to 3 pages.

This is because before page compression is run row compression is first run. Row compression take any data types down to the smallest data type it can be. In this case NVarchar(4000) can be converted down to Varchar(4000) and Varchar(4000) as we have seen before will allows 2 records to held onto a page, so page compression succeeds.

In the following example we are explicitly turning on row compression so the impact can be demonstrated.

CREATE TABLE MSCompressionDemoTestTable (stringdata Nvarchar(4000))

GO

INSERT INTO MSCompressionDemoTestTable (stringData) VALUES (REPLICATE(N'a', 4000))

GO 1000

SELECT sys.fn_PhysLocFormatter (%%PHYSLOC%%) LocationOfTheRecordBeforeRowcompression FROM MSCompressionDemoTestTable

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = ROW);

SELECT sys.fn_PhysLocFormatter (%%PHYSLOC%%) LocationOfTheRecordAfterRowCompression FROM MSCompressionDemoTestTable

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

SELECT in_row_data_page_count FROM sys.dm_db_partition_stats WHERE OBJECT_ID = OBJECT_ID('MSCompressionDemoTestTable')

GO

DROP TABLE MSCompressionDemoTestTable

The results show after row compression we have two rows per page so page compression will be able to compress and take us down to 3 pages

If we run the same example but this time with unicode data, the page compression will fail as the first step of row compression cannot compress enough to fit more than one record on a page.

CREATE TABLE MSCompressionDemoTestTable (stringdata Nvarchar(4000))

GO

INSERT INTO MSCompressionDemoTestTable (stringData) VALUES (REPLICATE(NCHAR(1234) + NCHAR(1299), 2000))

GO 1000

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

SELECT in_row_data_page_count FROM sys.dm_db_partition_stats WHERE OBJECT_ID = OBJECT_ID('MSCompressionDemoTestTable')

GO

DROP TABLE MSCompressionDemoTestTable

The largest string we can compress is 4038 characters of non unicode data when we are using a heap with no other columns. This example show this in action where the row compression stage is first managing to shrink the data into two records so they can fit on one page.

CREATE TABLE MSCompressionDemoTestTable (stringdata varchar(8000))

GO

INSERT INTO MSCompressionDemoTestTable (stringData) VALUES (REPLICATE('a', 4038))

GO 1000

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = ROW);

SELECT sys.fn_PhysLocFormatter (%%PHYSLOC%%) LocationOfTheRecord FROM MSCompressionDemoTestTable

ALTER TABLE MSCompressionDemoTestTable REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

SELECT in_row_data_page_count FROM sys.dm_db_partition_stats WHERE OBJECT_ID = OBJECT_ID('MSCompressionDemoTestTable')

SELECT sys.fn_PhysLocFormatter (%%PHYSLOC%%) LocationOfTheRecord FROM MSCompressionDemoTestTable

GO

DROP TABLE MSCompressionDemoTestTable

All these examples have been using a single heap with one column to give the maximum row size we can compress. Every column we add to the table will reduce the amount of string data we can compress as it will limit how many rows can fit on a page.

So when using page compression, you may be getting a much worst compression then expected if you have wide rows and long strings. It is always best to check before implementing page compression how many rows you can fit on each page after row compression has been performed. If that number is one then page compression will have no impact.