Scale up with SQL Server 2005 / SQL Server 2008 - NUMA

This article is related to the NUMA (Non Uniform Memory Access) feature available with SQL Server. NUMA is a very useful feature to scale up the Server.

Even before we discuss how SQL Server 2005 supports NUMA we have a few questions that needs to be addressed. A question that is frequently asked is:

What is the difference between Scale Up and Scale Out ?

Scale Up is a process to improve the capabilities of a server in a way that there is only one OS Image on this server like if you have a 2 processor box then you add 2 more processors or make it a 8 processor box and add more storage space by adding higher capacity disks or adding more RAM to the server. Scale out is a process in which the processing load is distributed among a bunch of relatively inexpensive boxes. SQL Server 2005/2008 supports both Scale Outs and Scale Ups.

One more frequently asked question is whether Scaling Up the server is best or Scaling out is best ... I would say it is completely situational and depends upon the requirements.

Let's understand what is NUMA and how it helps in Scale Up of the server.

NUMA stands for NON UNIFORM MEMORY ACCESS. Present day computers contain very powerful CPUs which can work faster than the RAM installed on the computers. This means that every time we upgrade the CPU we need to upgrade the CPUs to extract the best performance from these servers because the Memory Access is limited/slow and the CPUs are fast so the processes starve for the inputs and if this a Multi Processor System then the situation is even more worse. When Modern Operating Systems are capable to crunch out the performance from these Modern CPUs, the Memory Access Model is not very efficient to get the best performance. NUMA architecture tries to address the issue by providing separate memory for each processor, avoiding the performance hit when several processors attempt to address the same chunk of memory.

In NUMA each group of processors has its own memory and possibly its own I/O channels. However, each CPU can access memory associated with the other groups in a coherent way. Each group is called a NUMA node. The number of CPUs within a NUMA node depends on the hardware vendor. It is faster to access local memory than the memory associated with other NUMA nodes. This is the reason for the name, non-uniform memory access architecture.

On NUMA hardware, some regions of memory are on physically different buses from other regions. Because NUMA uses local and foreign memory, it will take longer to access some regions of memory than others. Local memory and foreign memory are typically used in reference to a currently running thread. Local memory is the memory that is on the same node as the CPU currently running the thread. Any memory that does not belong to the node on which the thread is currently running is foreign. Foreign memory is also known as remote memory. The ratio of the cost to access foreign memory over that for local memory is called the NUMA ratio. The main benefit of NUMA is scalability. The NUMA architecture was designed to surpass the scalability limits of the SMP architecture.

Scenarios to Use NUMA

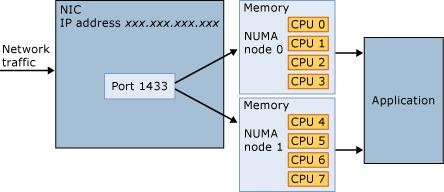

1) No port to NUMA affinity : This is the default condition on a computer with hardware NUMA and a single instance of SQL Server 2005. All traffic enters through a single port and is distributed on a round-robin basis to any available NUMA node. NUMA improves the locality of memory and CPU access and increases the number of I/O and lazy writer threads. Connections, once established, are scoped to that node. This provides automatic load balancing among the NUMA nodes. Client applications can connect to a single port, and are easy to deploy.

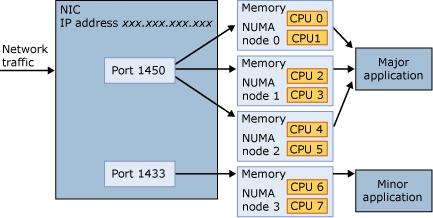

2) Associating a single port to multiple nodes to provide improved performance for a priority application : Affinitize one port to several hardware NUMA nodes to serve a major priority application. Affinitize a second port to another hardware NUMA node to serve a second minor application. The memory and CPU resources for the two applications are fixed in the unbalanced manner, providing the major application with three times the local memory and three times the CPU resources of the minor application. The minor application can be a second instance of the Database Engine, a less important function in the same Database Engine instance, or even the same database. This can provide a form of priority thread execution by providing extra resources to the favored connection.

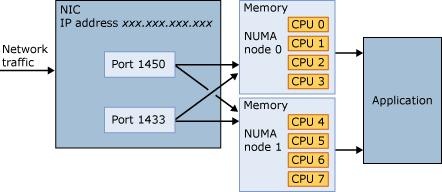

3) Associating multiple ports to multiple nodes : More than one port can be mapped to the same NUMA nodes. This permits you to configure differing permissions to various ports. For example, you can tightly restrict the access provided through a port by controlling permissions on the corresponding TCP endpoint. In this example, port 1450 is widely available to the intranet. Port 1433 is open to the Internet through a firewall, but access is tightly restricted. Both ports take full and equal advantage of NUMA.

Hardware vs Software NUMA

This section helps you understand the Hardware NUMA and Software NUMA. Servers supporting Hardware NUMA will have more than one system bus each listening and serving small set of CPUs, each group of processors will have its own set of memory and would have its own I/O channel. Each CPU would have the ability to access the memory dedicated to other groups in a coherent way. Each of this group is called NUMA NODE. The hardware vendor should be able to help you understand how many CPUs are supported within one NUMA NODE. If you have hardware NUMA, it may be configured to use interleaved memory instead of Software NUMA. In that case, Windows and therefore SQL Server will not recognize it as NUMA, you can run the following SQL Query to to find the number of memory nodes available to SQL Server.

SELECT DISTINCT memory_node_id FROM sys.dm_os_memory_clerks |

SYS.DM_OS_MEMORY_CLERKS is a Dynamic Management View and a detailed documentation could be found at https://msdn2.microsoft.com/en-us/library/ms175019.aspx

If SQL Server returns only a single memory node (node 0), either the hardware NUMA is not present or the hardware is configured as interleaved (non-NUMA). If you think your hardware NUMA is configured incorrectly, contact your hardware vendor to enable NUMA. SQL Server ignores NUMA configuration when hardware NUMA has four or less CPUs and at least one node has only one CPU. SQL Server 2005 allows you to group CPUs into nodes referred to as soft-NUMA. You usually configure soft-NUMA when you have many CPUs and do not have hardware NUMA, but you can also use soft-NUMA to subdivide hardware NUMA nodes into smaller groups. Only the SQL Server scheduler and SQL Server Network Interface (SNI) are soft-NUMA aware. Memory nodes are created based on hardware NUMA and therefore not impacted by soft-NUMA. So, for example, if you have an SMP computer with eight CPUs and you create four soft-NUMA nodes with two CPUs each, you will only have one memory node serving all four NUMA nodes. Soft-NUMA does not provide memory to CPU affinity. The benefits of soft-NUMA include reducing I/O and lazy writer bottlenecks on computers with many CPUs and no hardware NUMA. There is a single I/O thread and a single lazy writer thread for each NUMA node. Depending on the usage of the database, these single threads may be a significant performance bottleneck. Configuring four soft-NUMA nodes provides four I/O threads and four lazy writer threads, which could increase performance.

You cannot create a soft-NUMA that includes CPUs from different hardware NUMA nodes. For example, if your hardware has eight CPUs (0..7) and you have two hardware NUMA nodes (0-3 and 4-7), you can create soft-NUMA by combining CPU(0,1) and CPU(2,3). You cannot create soft-NUMA using CPU (1, 5), but you can use CPU affinity to affinitize an instance of SQL Server to CPUs from different NUMA nodes. So in the previous example, if SQL Server uses CPUs 0-3, you will have one I/O thread and one lazy writer thread. If, in the previous example SQL Server uses CPUs 1, 2, 5, and 6, you will access two NUMA nodes and have two I/O threads and two lazy writer threads.

How SQL Maps Software NUMA Nodes to Hardware NUMA Nodes

Soft-NUMA is defined once for all SQL Server 2005 instances on the computer, so multiple instances of the Database Engine all see the same soft-NUMA nodes. Each instance of the Database Engine then uses the affinity mask option to select the appropriate CPUs. Each instance will then use whatever soft-NUMA nodes are associated with those CPUs.

When starting, Windows allocates memory for the operating system from hardware NODE 0. Accordingly, hardware NODE 0 has less local memory available for other applications than the other nodes. This problem is accentuated when there is a large system file cache. When SQL Server starts on a computer with more than one NUMA node, it tries to start on a NUMA node other than NODE 0 so that its global structures can be allocated on the local memory.

How Connections Are Assigned to NUMA Nodes

Both TCP and VIA can affinitize connections to one or more specific NUMA nodes. When not affinitized, or when connecting with named pipes or shared memory, connections are distributed to NUMA nodes on a round-robin basis. Within a NUMA node, the connection is run on the least loaded scheduler on that node. Due to the round-robin nature of assigning new connections, it is possible that all CPUs within a node are busy while another node is idle. If you have very few CPUs (for example, 2) and if you see large scheduling imbalances because of long-running batches such as bulk load, you may have better performance if you turn off NUMA.

How to Configure NUMA on SQL Server 2005 / 2008

It is highly recommended that you backup your system before configuring the system.

Please refer the following links:

https://msdn2.microsoft.com/en-us/library/ms345357.aspx

https://msdn2.microsoft.com/en-us/library/ms345346.aspx

New in SQL Server 2008

There are two new Dynamic Management Views included in SQL Server 2008 which are very interesting.

1) dm_os_memory_nodes : You would need a VIEW SERVER STATE permission on the server for this DMV to be executed. The allocations that are internal to SQL Server use the SQL Server Memory Manager. Tracking the difference between process memory counters and internal counters can indicate memory use from external components in the SQL Server memory space. Nodes are created per physical NUMA Memory Nodes. These might be different from the CPU nodes in sys.dm_os_nodes. The allocation done directly by Windows are not tracked, it would only track the memory allocations done by SQL Server. The following table shows the details.

Column name |

Data type |

Description |

memory_node_id |

smallint |

ID of the memory node. Related to memory_node_id of sys.dm_os_memory_clerks. |

virtual_address_space_reserved_kb |

bigint |

Number of virtual address reservations, in kilobytes (KB), that are neither committed nor mapped to physical pages. |

virtual_address_space_committed_kb |

bigint |

Amount of virtual address, in KB, that has been committed or mapped to physical pages. |

locked_page_allocations_kb |

bigint |

Amount of physical memory, in KB, that has been locked by SQL Server. |

single_pages_kb |

bigint |

Amount of committed memory, in KB, that is allocated by using the single page allocator by threads running on this node. This memory is allocated from the buffer pool. This value indicates the node where allocations request occurred, not the physical location where the allocation request was satisfied. |

multi_pages_kb |

bigint |

Amount of committed memory, in KB, that is allocated by using the multi page allocator by threads running on this node. This memory is from outside the buffer pool. This value indicates the node where the allocation requests occurred, not the physical location where the allocation request was satisfied. |

shared_memory_reserved_kb |

bigint |

Amount of shared memory, in KB, that has been reserved from this node. |

shared_memory_committed_kb |

bigint |

Amount of shared memory, in KB, that has been committed on this node. |

2) dm_os_nodes : You would need a VIEW SERVER STATE permission on the server for this DMV to be executed. The SQL OS an internal component which is not visible outside creates the node structures that resembles hardware processor locality. These structures could be changed by using soft-NUMA to create custom node layouts. Please refer the following table:

Column name |

Data type |

Description |

node_id |

smallint |

ID of the node. |

node_state_desc |

Nvarchar(256) |

Description of the node state. Values are displayed with the mutually exclusive values first, followed by the combinable values. For example: Online, Thread Resources Low, Lazy Preemptive. There are four mutually exclusive node_state_desc values: Online: Node is online Offline:Node is offline Idle: Node has no pending work requests, and has entered an idle state. Ready for Idle:Node has no pending work requests, and is ready to enter an idle state. There are three combinable node_state_desc values: DAC: This node is reserved for the Dedicated Administrative Connection. Thread Resources Low: No new threads can be created on this node because of a low-memory condition. |

memory_object_address |

varbinary(8) |

Address of memory object associated with this node. One-to-one relation to sys.dm_os_memory_objects. memory_object_address. |

memory_clerk_address |

varbinary(8) |

Address of memory clerk associated with this node. One-to-one relation to sys.dm_os_memory_clerks. memory_clerk_address. |

io_completion_worker_address |

varbinary(8) |

Address of worker assigned to IO completion for this node. One-to-one relation to sys.dm_os_workers. worker_address. |

memory_node_id |

smallint |

ID of the memory node this node belongs to. Many-to-one relation to sys.dm_os_memory_nodes. memory_node_id. |

cpu_affinity_mask |

bigint |

Bitmap identifying the CPUs this node is associated with. |

online_scheduler_count |

smallint |

Number of online schedulers that aremanaged by this node. |

idle_scheduler_count |

smallint |

Number of online schedulers that have no active workers. |

active_worker_count |

int |

Number of workers that are active on all schedulers managed by this node. |

avg_load_balance |

int |

Average number of tasks per scheduler on this node. |

timer_task_affinity_mask |

bigint |

Bitmap identifying the schedulers that can have timer tasks assigned to them. |

permanent_task_affinity_mask |

bigint |

Bitmap identifying the schedulers that can have permanent tasks assigned to them. |

resource_monitor_state |

bit |

Each node has one resource monitor assigned to it. The resource monitor can be running or idle. A value of 1 indicates running, a value of 0 indicates idle. |