Walkthrough - Troubleshooting a native memory leak

Problem:

A customer called in. They had a Web Service running on a single IIS6. Memory usage would slowly increase and not be released. As a workaround they'd currently set the application pool to recycle at 500 MB, causing a few failed requests upon each restart.

I thought I'd describe how I went about troubleshooting this scenario.

The first dump

The customer had already taken a memory dump using DebugDiag, so obviously I asked them to upload it. It was a huge dump. 1.64 GB! I opened it up in windbg and ran the following command:

!eeheap -gc

I haven't mentioned !eeheap before. It gives me a nice summary of what's on the managed heap(s).

0:000> !eeheap -gc

Number of GC Heaps: 4

------------------------------

Heap 0 (0x000dfaf0)

generation 0 starts at 0x1124b1c0

generation 1 starts at 0x111c3684

generation 2 starts at 0x102d0030

ephemeral segment allocation context: none

segment begin allocated size

0x102d0000 0x102d0030 0x1165033c 0x138030c(20,448,012)

Large object heap starts at 0x202d0030

segment begin allocated size

0x202d0000 0x202d0030 0x20636810 0x003667e0(3,565,536)

Heap Size 0x16e6aec(24,013,548)

------------------------------

Heap 1 (0x000dfee8)

generation 0 starts at 0x15365b5c

generation 1 starts at 0x152f2138

generation 2 starts at 0x142d0030

ephemeral segment allocation context: none

segment begin allocated size

0x142d0000 0x142d0030 0x1577d230 0x14ad200(21,680,640)

Large object heap starts at 0x252d0030

segment begin allocated size

0x252d0000 0x252d0030 0x25391190 0x000c1160(790,880)

Heap Size 0x156e360(22,471,520)

------------------------------

Heap 2 (0x000e09f8)

generation 0 starts at 0x19480e94

generation 1 starts at 0x193ec5b0

generation 2 starts at 0x182d0030

ephemeral segment allocation context: none

segment begin allocated size

0x182d0000 0x182d0030 0x1985ed34 0x158ed04(22,605,060)

Large object heap starts at 0x222d0030

segment begin allocated size

0x222d0000 0x222d0030 0x222e8748 0x00018718(100,120)

Heap Size 0x15a741c(22,705,180)

------------------------------

Heap 3 (0x000e14e0)

generation 0 starts at 0x1d0f887c

generation 1 starts at 0x1d056738

generation 2 starts at 0x1c2d0030

ephemeral segment allocation context: none

segment begin allocated size

0x1c2d0000 0x1c2d0030 0x1d56af68 0x129af38(19,509,048)

Large object heap starts at 0x232d0030

segment begin allocated size

0x232d0000 0x232d0030 0x23319578 0x00049548(300,360)

Heap Size 0x12e4480(19,809,408)

------------------------------

GC Heap Size 0x54e06e8(88,999,656)

So, as we can see the managed memory is pretty evenly distributed in the 4 managed heaps. If you look down at the bottom you'll see that total memory usage is at 88 MB. (This is a figure I would have gotten by running !dumpheap -stat as well. So in case you're wondering; that would have worked just as fine.) Anyway, if only ~90 MB is in the managed heap, then the remaining 1.55 GB must be on the native heap.

New dumps

Troubleshooting the native heap is a bit harder. The sos extension and windbg is a really nice team so working without them can be tough. Fortunately there is a nice feature included in DebugDiag that lets it perform an automatic analysis. This is usually the best way to get information from a native leak. Sometimes you may want to get a little additional information from windbg as well, but most of the time DebugDiag's auto analysis will get you a long way.

LeakTrack

DebugDiag comes with a handy little dll called LeakTrack. LeakTrack attaches to the process in question and monitors memory allocations and their related call stacks. Since the dll is injected into the process it can have a slight impact on performance, but if you want to troubleshoot the process you really have no other options.

There are two ways to start monitoring a process with LeakTrack:

- Create a "Memory and Handle Leak"-rule using the wizard normally displayed upon start up.

- Cancel the wizard. Go to the "Processes"-tab. Right-click the w3wp.exe you wish to monitor and select "Monitor for leaks".

LeakTrack will begin monitoring from the moment you inject it. Obviously, it won't be able to get any data for events that have already occurred, so injecting LeakTrack when memory usage is already at it's peak won't really do much good. Instead I usually recycle the process and attach LeakTrack as soon as possible.

This time I chose to let the customer create a "Memory and Handle Leak"-rule using the wizard. This rule then created dumps at certain intervals which the customer uploaded to me.

Analyzing the dumps

So the next step is to let DebugDiag analyze the dumps. Here's how you do it:

- Run DebugDiag

- Cancel the wizard

- Go to the "Advanced Analysis"-tab

- "Add Data Files" - point to the dump

- Choose "Memory Pressure Analysis" in the "Available Analysis Scripts" list.

- Make sure your dump is selected in the "Data Files" list.

- "Start Analysis"

The usual suspects

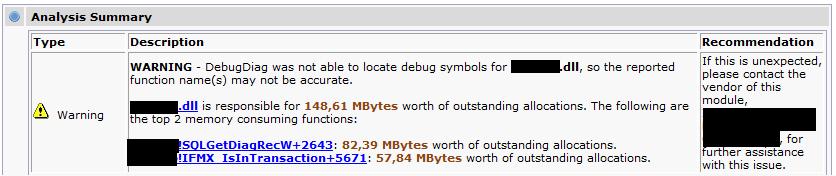

Once DebugDiag has finished analyzing the dump you will get a new browser window with a report. The report I got showed the following right at the top:

So what does this mean? It means that LeakTrack has monitored the allocations made by a certain third party dll. It has also monitored if that memory has been properly released. All in all the .dll now has almost 150 MB of allocations that are unaccounted for. It would be safe to assume that this is our culprit. Further down in the report we have a link called "Leak analysis report". Clicking it will bring us to the following graphical representation:

As you can see the .dll in question is right at the top. But wait a minute! According to the graph mscorsvr has ~9 MB of outstanding allocations as well. Does this mean that mscorsvr is leaking too, though not as much?

The answer is no. As I mentioned before; LeakTrack will monitor all allocations and check that the memory is properly released as well. All we know is that mscorsvr has allocated 8,75 MB of memory that hasn't been released yet. There's nothing to suggest it won't release it eventually. The odds of the stray 148 MB eventually being released, on the other hand, are a lot slimmer in my opinion.

What causes the leak?

Since the dll responsible for the allocations is third party I can't find out exactly what causes the leak within the third party dll. What I can find out is what calls in the customer's code lead us down the leaking code path in the dll. LeakTrack monitors the callstacks related to memory allocations. Looking at the report I was able to find some nice callstacks that the customer should keep an eye on. It might be possible for them to tweak their code slightly in order to avoid the scenario.

3rdparty!IFMX_IsInTransaction+5671

3rdparty!SQLEndTran+e456

odbc32!SQLFetch+160

[DEFAULT] [hasThis] Boolean System.Data.Odbc.OdbcDataReader.Read()

[DEFAULT] [hasThis] I4 System.Data.Common.DbDataAdapter.FillLoadDataRow(Class System.Data.Common.SchemaMapping)

[DEFAULT] [hasThis] I4 System.Data.Common.DbDataAdapter.FillFromReader(Object,String,Class System.Data.IDataReader,I4,I4,Class System.Data.DataColumn,Object)

[DEFAULT] [hasThis] Void System.Data.DataSet.set_EnforceConstraints(Boolean)

[DEFAULT] [hasThis] I4 System.Data.Common.DbDataAdapter.Fill(Class System.Data.DataSet,String,Class System.Data.IDataReader,I4,I4)

[DEFAULT] [hasThis] Void System.Data.DataSet.set_EnforceConstraints(Boolean)

[DEFAULT] [hasThis] I4 System.Data.Common.DbDataAdapter.FillFromCommand(Object,I4,I4,String,Class System.Data.IDbCommand,ValueClass System.Data.CommandBehavior)

[DEFAULT] [hasThis] I4 System.Data.Common.DbDataAdapter.Fill(Class System.Data.DataSet,I4,I4,String,Class System.Data.IDbCommand,ValueClass System.Data.CommandBehavior)

[DEFAULT] [hasThis] I4 System.Data.Common.DbDataAdapter.Fill(Class System.Data.DataSet)

[DEFAULT] [hasThis] Class System.Data.DataSet Company.App.Server.DAL.DAO.Informix.AbstractDAO.Fill(String,String,I4)

[DEFAULT] [hasThis] String Company.App.Server.DAL.DAO.Informix.ResourceDAO.SelectResourcePriceType(String,String)

[DEFAULT] [hasThis] Class System.Data.DataSet Company.App.Server.DAL.DAO.Informix.AbstractDAO.Fill(String,String,I4)

[DEFAULT] [hasThis] Void Company.App.Server.DAL.DAO.Informix.ResourceDAO..ctor(ByRef Class System.Data.IDbConnection,ByRef Class System.Data.IDbTransaction)

[DEFAULT] [hasThis] String Company.App.Server.Services.Pricing.PriceFinder.GetPriceType(String,String,ByRef Class System.Data.IDbConnection,Class System.Data.IDbTransaction)

[DEFAULT] [hasThis] Class Company.App.Core.Query.Result.PriceResult Company.App.Server.Services.Pricing.PriceFinder.GetResourceDiscountPrices(String,String,ValueClass System.DateTime,String,SZArray R4,String,String,ByRef Class System.Data.IDbConnection,Class System.Data.IDbTransaction)

[DEFAULT] [hasThis] String Company.App.Server.Services.Pricing.PriceFinder.GetPriceType(String,String,ByRef Class System.Data.IDbConnection,Class System.Data.IDbTransaction)

[DEFAULT] [hasThis] Class Company.App.Core.Query.Result.PriceResult Company.App.Server.Facade.BookingDataSource.GetResourceDiscountPrices(String,String,ValueClass System.DateTime,String,SZArray R4,String,String)

[DEFAULT] [hasThis] Class Company.App.Core.Query.Result.PriceResult App.BookingData.GetResourceDiscountPrices(String,String,ValueClass System.DateTime,String,SZArray R4,String,String)

[DEFAULT] [hasThis] Class Company.App.Core.Query.Result.PriceResult Company.App.Server.Facade.BookingDataSource.GetResourceDiscountPrices(String,String,ValueClass System.DateTime,String,SZArray R4,String,String)

mscorsvr!CallDescrWorker+30

mscorsvr!COMMember::InvokeMethod+95a

mscorsvr!COMMember::CreateInstance+358

[DEFAULT] [hasThis] SZArray Object System.Web.Services.Protocols.LogicalMethodInfo.Invoke(Object,SZArray Object)

[DEFAULT] [hasThis] Void System.Web.Services.Protocols.WebServiceHandler.Invoke()

[DEFAULT] [hasThis] SZArray Object System.Web.Services.Protocols.LogicalMethodInfo.Invoke(Object,SZArray Object)

[DEFAULT] [hasThis] Void System.Web.Services.Protocols.WebServiceHandler.CoreProcessRequest()

[DEFAULT] [hasThis] Void System.Web.Services.Protocols.WebServiceHandler.Invoke()

[DEFAULT] [hasThis] Void System.Web.Services.Protocols.SyncSessionlessHandler.ProcessRequest(Class System.Web.HttpContext)

[DEFAULT] [hasThis] Void System.Web.Services.Protocols.WebServiceHandler.CoreProcessRequest()

[DEFAULT] [hasThis] Void System.Web.HttpApplication/CallHandlerExecutionStep.System.Web.HttpApplication+IExecutionStep.Execute()

[DEFAULT] [hasThis] Class System.Exception System.Web.HttpApplication.ExecuteStep(Class IExecutionStep,ByRef Boolean)

[DEFAULT] [hasThis] Void System.Web.HttpApplication.ResumeSteps(Class System.Exception)

[DEFAULT] [hasThis] Class System.Exception System.Web.HttpApplication.ExecuteStep(Class IExecutionStep,ByRef Boolean)

[DEFAULT] [hasThis] Class System.IAsyncResult System.Web.HttpApplication.System.Web.IHttpAsyncHandler.BeginProcessRequest(Class System.Web.HttpContext,Class System.AsyncCallback,Object)

[DEFAULT] [hasThis] Void System.Web.HttpApplication.ResumeSteps(Class System.Exception)

[DEFAULT] [hasThis] Void System.Web.HttpRuntime.ProcessRequestInternal(Class System.Web.HttpWorkerRequest)

[DEFAULT] Void System.Web.HttpRuntime.ProcessRequest(Class System.Web.HttpWorkerRequest)

[DEFAULT] [hasThis] Void System.Web.HttpRuntime.ProcessRequestInternal(Class System.Web.HttpWorkerRequest)

[DEFAULT] [hasThis] I4 System.Web.Hosting.ISAPIRuntime.ProcessRequest(I,I4)

[DEFAULT] Void System.Web.HttpRuntime.ProcessRequest(Class System.Web.HttpWorkerRequest)

mscorsvr!ComToComPlusWorker+1e0

mscorsvr!ComToComPlusWorker_Wrapper+38

mscorsvr!Thread::DoADCallBack+5c

mscorsvr!ComToComPlusWorker+65

mscorsvr!ComCallWrapper::NeedToSwitchDomains+24

aspnet_isapi!HttpCompletion::ProcessRequestInManagedCode+17e

aspnet_isapi!HttpCompletion::ProcessCompletion+24

aspnet_isapi!CorThreadPoolWorkitemCallback+13

mscorsvr!ThreadpoolMgr::ExecuteWorkRequest+19

mscorsvr!ThreadpoolMgr::WorkerThreadStart+129

mscorsvr!ThreadpoolMgr::intermediateThreadProc+44

kernel32!GetModuleHandleForUnicodeString+20

kernel32!GetModuleHandleForUnicodeString+20

kernel32!BasepGetModuleHandleExW+17f

ntdll!LdrpGetProcedureAddress+b3

ntdll!LdrpGetProcedureAddress+b3

ntdll!LdrpCallInitRoutine+14

ntdll!LdrpInitializeThread+18f

ntdll!LdrpInitializeThread+18f

ntdll!ZwContinue+c

kernel32!BaseThreadStart+34

Okay, now what?

Well, this is actually more or less where the road ends for me. Had the problem lied within a Microsoft component it would have been up to us to fix it. Had it been within the customer's code I would have been able to use the customers symbols to get a more detailed callstack and provide him with pointers on how to resolve it as well, but since this is a third party component the next step is to forward it to the third-party vendor.

/ Johan

Comments

Anonymous

February 21, 2008

I just wanted to say that this is the first time I have seen someone take time out to actually explain the process of debugging a memory leak. It was very useful, thanks. The only thing else I wanted to comment on was the section of code in "What causes the leak?", and the fact that it is not clear (to me at least, sorry if I am being dense!) what the trace is actually inferring there. What bit should I be looking at for? Possible to highlight the offending bits please? That would be a great help on my journey. Thanks again.Anonymous

February 21, 2008

The comment has been removedAnonymous

February 28, 2008

The comment has been removedAnonymous

February 28, 2008

when I got the dump file as your step, click "Start Analysis", when "loading leak track info. please wait", it looks like dead. does this really need so much time and I just wait?Anonymous

February 28, 2008

can you give more ,write some more about native leak?Anonymous

March 02, 2008

Hi Baal, Native leaks are always a lot more difficult to track than the managed ones. To identify where in your code things go wrong I'd do the following:

- Use leak track to identify the assemblies that are growing abnormally.

- Investigate the memory usage report and look at the sample call stacks.

- Cross-reference the callstacks with your code to identify what sections are allocating memory without releasing it properly. / Johan

Anonymous

March 02, 2008

Hi Johan, I truely love your blog and find it very usefull. How it is possible that dump size is 300MB less then size indicated by perfmon? Could it be caused by "memory holes" in the native memory? What would you say about the following scenario: I have a WinForms app running for 14 hours without user interaction. Dump #1, at the beginning, size is 1020MB. Dump #2, at the end, size is 1080MB. GC Heap Size stays at ~200MB at both dumps. Perfmon shows a linear increase in private bytes, from 600MB to 800MB, and increase in virtual bytes from 1300MB to 1400MB.Anonymous

March 03, 2008

Hi David, I can't really say what the root cause is without investigating the dumps. If I had to guess I'd say that streaming is a likely cause of the inconsistency. / JohanAnonymous

March 03, 2008

Hi Johan, Can you help me investigate my dump file? the file is here: http://www.sula.cn/soft/w3wpdump.zip, then write some more for the article?Anonymous

March 03, 2008

Hi Baal, I'd recommend contacting your local Microsoft support representative and open up a regular support incident for this. Thanks / JohanAnonymous

March 04, 2008

I want to analyze the dump myself. How would you start doing so in the described scenario ?Anonymous

March 04, 2008

Hi David, Well, if you're not running the .NET framework I'd begin by running it through Debug Diag as described in the article above. If it's managed I'd use windbg. For usefulo commands please refer to the "Debugging School"-section to the left as well as http://blogs.msdn.com/johan/archive/2007/01/11/i-am-getting-outofmemoryexceptions-how-can-i-troubleshoot-this.aspx / JohanAnonymous

March 05, 2008

OK, but I am interested to know how streaming can cause such an inconsistency.. My application does use some kind of streaming.Anonymous

March 05, 2008

Like I said, It's not easy to guess without seeing it for myself. I know Memory Mapped Files can cause this type of situaiton. (Sorry for the "streaming" confusion.) There might be other possible reasons that I just can't think of right now. Does the dump look okay in all the other aspects or does it appear corrupt? / JohanAnonymous

May 14, 2008

The comment has been removedAnonymous

May 16, 2008

I find that very likely as well. I was hoping to find out the root cause eventually, but unfortunately I still do not know what it was... :( / JohanAnonymous

November 02, 2008

I have a native memory leak while running the ASP app on windows 2008 server under IIS6 management compatibility mode... But when I do the analysis using the steps mentioned by you, The memory size mentioned is in TBytes!! I have done this a Lot of times with a lot of options - any suggestions ?Anonymous

August 18, 2009

so how can we get the callstack of the leak function? I wrote a .net program, and allocate several large arrays without releasing them for test. I used the DebugDiag to monitor and analyze, finally i got the report. And it tells me that the leak function is mscorwks!EEVirtualAlloc+119, however, it doesnot show me any call stack sample. what's going on? could you please give me some suggestions?Anonymous

October 07, 2009

Johan thank you for this post, it's been very helpful! I have a similar issue but my callstack only shows memory addresses like below: Function Source Destination 0x79E8C582 0x79E717B4 0x79F8C96F kernel32!BaseThreadStart+34 I was expecting some .NET callstack info but as you can see there is none :) Do you know how I can further investigate this? ThanksAnonymous

October 07, 2009

Hi Denny! It's not too much to work with, but if debug diag is reporting that this is the callstack hogging memory from your application I'd look into just how many threads you have running in the application. (Use ~* in windbg) / JohanAnonymous

October 07, 2009

@Ling: (Sorry about the late reply) Debug diag doesn't show you managed callstacks. You'll have to use windbg for that. Open up the same dump in windbg, go to the thread identified by debug diag and use !clrstack to see the managed callstack. / JohanAnonymous

October 28, 2011

Nice post. I have one question; if you would have run !address -Summary command, what would RegionUsageHeap section will show in this case? Will it show that most of the memory is consumed in native allocations?Anonymous

May 16, 2012

This is a GREAT start at investigating unmanaged memory! Usually the details can be easy to jump into, but sometimes a primer like this is excellent. Narrowing down the possibilities is a GREAT start.