Windows Azure Guidance – Scenario – Part II

In this post, we’ll examine the application Adatum is considering migrating to the cloud as a proof point for their assumptions.

Adatum’s a-Expense

a-Expense is one application in Adatum’s finance support systems that helps employees submit, track and process business expenses. Everyone in Adatum is required to use this application for requesting reimbursements. a-Expense is not a mission-critical application, and users could tolerate a few hours of downtime every once in a while, but it is clearly an important application nevertheless.

Adatum has a policy that all expenses must be submitted for approval and processing before the end of each month. However, the vast majority of employees submit their expenses in the last 2 business days, leading to relatively high demand during a short period of time. a-Expense is sized for average use, not for peak demand. As a result, during these 2 days the system is slow and users complain.

a-Expense is currently deployed in Adatum’s data center and is available to users on the intranet. People traveling have to access it through VPN. There have been requests in the past for publishing a-Expense directly on the internet, but it has never happened.

a-Expense stores quite a bit of information as most expense receipts need to be scanned and stored for 7 years. For this reason, the data stores used by a-Expense are backed up frequently.

Adatum wants to use this application as a test case for their evaluation of Windows Azure. They consider it to be a good representation of many other applications in their portfolio, surfacing many of the same challenges they are likely to encounter further on.

a-Expense Architecture

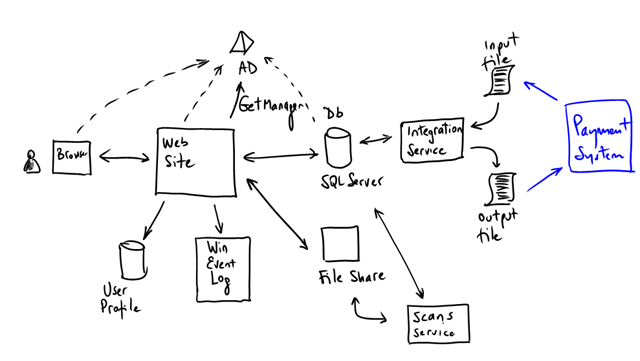

a-Expense’s current architecture is fairly straightforward and common:

- There’s a web site built with ASP.NET, which is the front-end users interact with.

- It uses Windows Authentication.

- It relies on ASP.NET Membership and Profile (for storing user preferences).

- Exceptions and logs are implemented with Enterprise Library Exception Handling and Logging Application blocks.

- It uses DirectoryServices APIs to call AD and query for the employee’s manager (who is the default approver of his/her expenses) and the employee’s cost center.

- The web site uses a service account to log in to SQL Server (trusted subsystem). This service account is in fact a Windows Domain account.

- The database is configured to use Windows Authentication.

- The application stores all information on SQL Server and images (receipt scans) on a file share.

- There’s a background service (implemented with a Windows Service) that runs periodically and generates thumbnails of the scanned receipts.

- There’s another background process (a Windows Service too) that periodically queries the database for expenses to be reimbursed and generates a flat file for the payment system to process. The same process imports payment results back into the application when an expense is paid and updates the expense’s status (to “paid”).

Our “Secret Agenda” in case you haven’t noticed

You probably realize that the choice of this scenario is not an accident. We believe that this is a realistic enough scenario, but more importantly we think it highlights quite a few interesting challenges that can be easily extrapolated to other applications. It is also a functionally simple application, because we don’t really want to build a 100% complete expense report system.

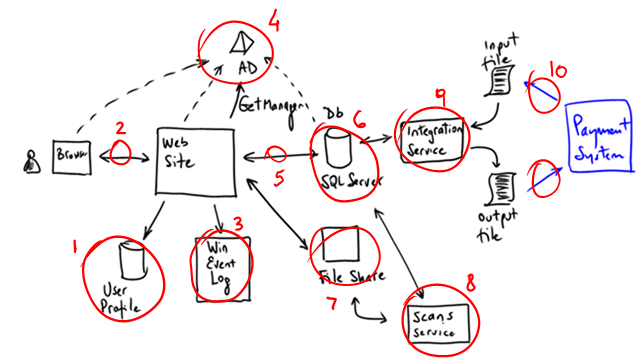

Application hot-spots

Almost every part of this application raises considerations when moving it to Windows Azure. Most are rather simple; a few require more thought. Of course, “making it work” on Windows Azure is not the same as “making the most” of the platform, so there will be room for improvement and optimization, and plenty of trade-offs.

Let’s examine some of these areas:

1- User profile is currently implemented using the ASP.NET SQL provider. This is a good candidate for an Azure Table based provider: the data model is rather simple, there will not be a lot of information, and it is probably cheaper than using SQL Azure, because even the smallest SQL Azure instance is larger than needed. Of course, the SQL Azure implementation would just work with minimal changes.
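To make the table idea a bit more concrete, here is a minimal sketch of how profile data could be shaped for a key-based table store. It does not use any real Azure API: `ProfileEntity`, `InMemoryProfileTable`, `save_preference`, the choice of the application name as partition key and the user name as row key, and the sample preference are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProfileEntity:
    partition_key: str  # assumption: the application name
    row_key: str        # assumption: the user name
    properties: dict = field(default_factory=dict)


class InMemoryProfileTable:
    """Hypothetical stand-in for a table store addressed by (partition key, row key)."""

    def __init__(self):
        self._rows = {}

    def upsert(self, entity):
        self._rows[(entity.partition_key, entity.row_key)] = entity

    def get(self, partition_key, row_key):
        return self._rows.get((partition_key, row_key))


def save_preference(table, user, name, value):
    # Read-modify-write of a single entity; no joins or schema needed.
    entity = table.get("a-Expense", user) or ProfileEntity("a-Expense", user)
    entity.properties[name] = value
    table.upsert(entity)


table = InMemoryProfileTable()
save_preference(table, "ADATUM\\johndoe", "PreferredReimbursementMethod", "Check")
```

The point of the sketch is that a profile is a single small entity per user, which is the kind of access pattern a table store handles well.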

2- In an intranet, you can usually afford not to be very careful about how you use your network. Latency is low, bandwidth is rather high and it’s been paid for already. For example, the web pages might be very chatty or use a lot of bandwidth. Guess what? Once Adatum moves a-Expense outside their datacenter, latency and bandwidth limits will be more noticeable. There might be a need to optimize this (viewstate, pages, big images, etc). Also, bandwidth is something Adatum will start paying for. Money is a great motivator :-). Session management on the website will also need to be reviewed. Keeping session in memory and using server affinity are not viable options on Windows Azure, because you can’t control the load balancer and you want at least 2 instances running at any given point in time.
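The session point is easier to see with a small sketch. Assuming session state is moved out of the web server’s memory into some shared store, any instance can serve any request. Everything here (`SharedSessionStore`, `WebInstance`, the `draft_items` key) is hypothetical and stands in for whatever storage Adatum ends up choosing.

```python
import json


class SharedSessionStore:
    """Hypothetical stand-in for storage that lives outside the web instances."""

    def __init__(self):
        self._data = {}

    def save(self, session_id, state):
        # Serialize, as state would have to be when it leaves the process.
        self._data[session_id] = json.dumps(state)

    def load(self, session_id):
        raw = self._data.get(session_id)
        return json.loads(raw) if raw is not None else {}


class WebInstance:
    """One of several identical web instances behind the load balancer."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def add_expense_item(self, session_id, item):
        state = self.store.load(session_id)
        state.setdefault("draft_items", []).append(item)
        self.store.save(session_id, state)
        return state


store = SharedSessionStore()
instance_a = WebInstance("web-0", store)
instance_b = WebInstance("web-1", store)

# Two consecutive requests from the same user land on different instances.
instance_a.add_expense_item("session-1", "Taxi")
state = instance_b.add_expense_item("session-1", "Hotel")
```

Because no instance keeps anything in its own memory between requests, it does not matter which one the load balancer picks.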

3- The application is logging messages and exceptions to the Windows Event Log, where events are picked up by standard management tools. This is likely to change on Windows Azure. Luckily, Azure provides a compatible trace listener and tools to ship the information back to Adatum. This should mean minimal changes to a-Expense itself.

4- Like many intranet applications, a-Expense relies on AD for authenticating its users. This is good, because employees enjoy SSO, user provisioning is automated, etc. One issue is that Azure machines will not be joined to Adatum’s domain. Luckily again, we have a great guide on this front :-). This is a great example of dealing with internal dependencies.

5 and 6 – The easiest way of moving a-Expense’s database to Azure is to simply use SQL Azure. Some possible issues: SQL Azure won’t accept Windows Authentication, it will only work over TCP (no named pipes), and some features are not available (e.g. SQL Server Service Broker). a-Expense uses SQL Server in a fairly standard way, so this should not be a big deal, unless the storage needed doesn’t fit on SQL Azure and we need to split it across multiple instances. There’ll be some minor changes to deal with shorter timeouts, connection drops, etc., but all that can easily be handled in the lower levels of the data layer. Another, and perhaps more interesting, discussion is the trade-offs of using Azure storage instead. That will require some changes to the application, as a new data layer will have to be developed. Also, developers will have to learn new APIs. One motivation for this change is the lower cost of Azure tables compared to SQL Azure.
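As an illustration of handling connection drops in the lower levels of the data layer, here is a minimal retry-with-backoff sketch. It is not tied to any database driver: `TransientDatabaseError`, `with_retries`, `flaky_query` and the default attempt count and delay are assumptions chosen for the example.

```python
import time


class TransientDatabaseError(Exception):
    """Hypothetical marker for errors worth retrying (dropped connection, timeout)."""


def with_retries(operation, attempts=3, base_delay=0.01, sleep=time.sleep):
    """Run operation, retrying transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientDatabaseError:
            if attempt == attempts:
                raise  # give up and let the caller see the failure
            sleep(base_delay * 2 ** (attempt - 1))


# Simulated query that fails twice before succeeding.
calls = {"count": 0}


def flaky_query():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientDatabaseError("connection dropped")
    return ["expense-1", "expense-2"]


result = with_retries(flaky_query, sleep=lambda seconds: None)
```

Wrapping data access this way keeps the retry policy in one place, so the rest of the application does not need to know that the database is now remote.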

7- Uploaded files go to a file share in the current implementation. On Azure, this functionality will probably have to be changed to use Blobs.
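One way to contain this change is to hide receipt storage behind a small interface, so the upload code does not care whether files land on a share or in blobs. The sketch below is only an illustration: `ReceiptStore`, `FileShareReceiptStore`, `InMemoryBlobReceiptStore` and the `expense id/file name` naming scheme are hypothetical, and the blob class is an in-memory stand-in rather than a real blob client.

```python
import os
import tempfile
from abc import ABC, abstractmethod


class ReceiptStore(ABC):
    @abstractmethod
    def save(self, expense_id, file_name, content): ...

    @abstractmethod
    def load(self, expense_id, file_name): ...


class FileShareReceiptStore(ReceiptStore):
    """Roughly what the current implementation does: one folder per expense."""

    def __init__(self, root):
        self.root = root

    def save(self, expense_id, file_name, content):
        folder = os.path.join(self.root, expense_id)
        os.makedirs(folder, exist_ok=True)
        with open(os.path.join(folder, file_name), "wb") as handle:
            handle.write(content)

    def load(self, expense_id, file_name):
        with open(os.path.join(self.root, expense_id, file_name), "rb") as handle:
            return handle.read()


class InMemoryBlobReceiptStore(ReceiptStore):
    """Hypothetical stand-in for a blob container: flat names, no real folders."""

    def __init__(self, container):
        self.container = container
        self._blobs = {}

    def save(self, expense_id, file_name, content):
        self._blobs[f"{expense_id}/{file_name}"] = content

    def load(self, expense_id, file_name):
        return self._blobs[f"{expense_id}/{file_name}"]


def upload_receipt(store, expense_id, file_name, content):
    # Calling code is identical for both storage options.
    store.save(expense_id, file_name, content)
    return store.load(expense_id, file_name)


with tempfile.TemporaryDirectory() as share:
    from_share = upload_receipt(FileShareReceiptStore(share), "exp-42", "taxi.jpg", b"scan")
from_blob = upload_receipt(InMemoryBlobReceiptStore("receipts"), "exp-42", "taxi.jpg", b"scan")
```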

8 and 9 – These two background processes (implemented as Windows Services) are perfect candidates for Worker roles in Azure. Some pretty obvious questions: how much of the code can be reused? Does it make sense to have 1 or 2 workers?
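On the reuse and “1 or 2 workers” questions, a rough sketch may help. If each background job is written as a plain function, the same code can be hosted in one worker loop or split across two. The names below (`generate_thumbnails`, `export_approved_expenses`, `run_worker`), the sample data and the intermediate `exported` status are all made up for illustration and are not part of a-Expense.

```python
from functools import partial


def generate_thumbnails(pending_receipts, thumbnails):
    # Drain the list of receipts that still need a thumbnail.
    while pending_receipts:
        receipt = pending_receipts.pop(0)
        thumbnails.append(f"thumb-{receipt}")


def export_approved_expenses(expenses, export_lines):
    # Write one flat-file line per approved expense, then mark it as exported
    # so it is not picked up again on the next pass.
    for expense in expenses:
        if expense["status"] == "approved":
            export_lines.append(f'{expense["id"]},{expense["amount"]:.2f}')
            expense["status"] = "exported"


def run_worker(tasks, iterations):
    """A worker is just a loop over whatever tasks it was given."""
    for _ in range(iterations):
        for task in tasks:
            task()


pending = ["receipt-1.jpg", "receipt-2.jpg"]
thumbnails = []
expenses = [
    {"id": "exp-1", "amount": 120.5, "status": "approved"},
    {"id": "exp-2", "amount": 40.0, "status": "draft"},
]
export_lines = []

# One worker hosting both jobs; two workers would each get a single-task list.
run_worker(
    [
        partial(generate_thumbnails, pending, thumbnails),
        partial(export_approved_expenses, expenses, export_lines),
    ],
    iterations=2,
)
```

Whether to run one worker or two then becomes a deployment and cost decision rather than a code change.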

10- The last hot spot: sending files back and forth to the external system. There are many ways of addressing this of course.