LINQ to SQL N-Tier Smart Client - Part 1 Building the Middle-Tier

In my previous posts on LINQ to SQL I showed how to build LINQ to SQL classes and set up the data binding in your Windows applications. If you missed them:

Related Data Binding and ComboBoxes with LINQ to SQL

Creating Lookup Lists with LINQ to SQL

One-To-Many (Master-Detail) Forms with LINQ to SQL

Simple Validation with LINQ to SQL Classes

These articles focus on the binding and validation and use a connected model; meaning that the DataContext is alive and available tracking the changes we make to the collections of LINQ to SQL objects. Out of the box, LINQ to SQL is really easy to get working with a connected client-server architecture. As long as your objects are attached to the DataContext you get all the nice features like change tracking and automatic lazy loading of related collections. However, unlike the DataSet, once you disconnect from the data source you lose all automatic change tracking. So why use LINQ to SQL objects and not distributed DataSets?

The Great Debate - DataSets or objects?

It depends on your particular scenarios which approach you would take, and you are not limited to using just one approach in your application depending on your scenario. I always try to keep these guidelines in mind when I'm trying to decide which approach to take:

- DataSets are easy to define, serialize, and can now be easily separated from the data access logic in the TableAdapters. (Take a look at how to do this in VS 2008 in this video)

-DataSets have built-in change tracking, batched editing, viewing and filtering (also supports LINQ), and handles complex relationships automatically

-DataSets are easy to use with advanced DataBinding scenarios

-DataSets are easy to implement simple field and row validation but become challenging when complex behavior needs to be coded, they cannot inherit from a business class so complex business logic and data become separated

-DataSets should not be used if your clients are not all .NET clients because this type is a very complex type specific to .NET

-LINQ to SQL classes are plain old CLR objects (POCO) that only implement a couple property changed notification interfaces

-LINQ to SQL classes allow you full control over the validation, behavior, business logic, and can inherit from any class you provide so you can create a base business class

-LINQ to SQL object collections can be serialized as arrays to non-.NET clients

-LINQ to SQL objects, once detached from the DataContext, do not track changes or support any advanced DataBinding features out of the box, those must be implemented by you and the code is often non-trivial

Personally I like both approaches, it just depends on the scenario. Typically if I have a batch .NET data-entry form that I need to service with only a few field or column validations necessary, DataSets are my first choice, especially if there are more than a couple related tables. They are also a good choice for reports and simple view-only forms. If I have more complex rules and behavior or I need full control over my object hierarchy then POCO business objects are the better choice.

Going N-Tier with LINQ to SQL

If you're ready to jump off the cliff there's some really good information in the MSDN Library on how to set up LINQ to SQL in distributed applications. There are a variety of ways to handle LINQ to SQL in these scenarios as the library shows. In this post I'm going to walk through the most common scenarios to get this to work with a remote .NET Windows smart client (WPF coming soon!). This post will focus on the data access and service layer and I'll follow up with the client in the next post.

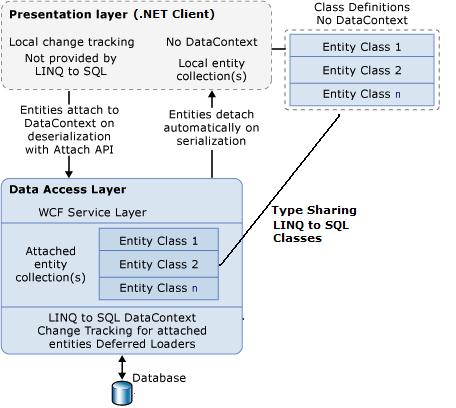

We're going to continue with the application that we built in the previous articles which was just a single Windows forms client built into one EXE. What we need to do is modify the design by adding a data access layer that communicates with our database as well as a service layer that the client will communicate with. Since our client and our server are both written in .NET I am also going to reuse the LINQ to SQL objects that contain our validation so we can keep those rules in one place. Please note that this is not SOA (Service Oriented Architecture). If you need to go that route then I highly recommend this book.

So here's a diagram of what we're going to implement.

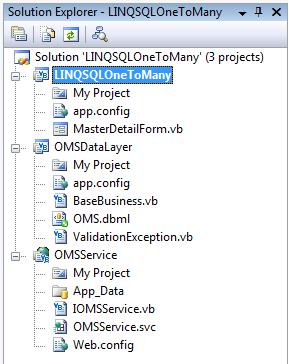

The first thing to do is add a Class Library project called OMSDataLayer to contain the data access layer and the generated LINQ to SQL classes (our business objects) along with the partial class code and base business object class. Unfortunately the O/R designer doesn't let you separate the generated classes from the DataContext object (database access) like the DataSet designer does so if you really want to be a stickler and you have a large project you're developing you should create your own classes and then you can decorate them with the right attributes to get them to work with LINQ to SQL or use an external mapping file.

Next add a reference to System.Data.Linq to the class library and then move all the associated .dbml files as well as the BaseBusiness and ValidationException classes into the project. Then add a project reference to this OMSDataLayer to the client project. At this point if I want the client to compile and work exactly like before I can add a project level imports to the OMSDataLayer on the client, Import the OMSDataLayer namespace, and run it like before (we just have to go through and change the namespace on our objects on our form).

Finally, add a new WCF Service project to the solution and add the OMSDataLayer as a project reference. Now we've got the structure of our solution set up.

Contract First

Now it's time to think about the interaction our client will need to have with our service. It's always a good idea to think about how the endpoints in your distributed applications need to communicate with each other before you start developing them. For this application we only need interfaces for retrieval of the Orders and Products and saving and deleting Orders and OrderDetails. So I defined the following operations:

Imports OMSDataLayer

<ServiceContract()> _

Public Interface IOMSService

<OperationContract()> _

Function GetOrdersByCustomerID(ByVal customerID As Integer) As IEnumerable(Of Order)

<OperationContract()> _

Function GetProductList() As IEnumerable(Of Product)

<OperationContract()> _

Function SaveOrders(ByRef orders As OrderList) As Boolean

<OperationContract()> _

Function DeleteOrders(ByVal orders As IEnumerable(Of Order), _

ByVal details As IEnumerable(Of OrderDetail)) As Boolean

End Interface

I'm just returning a collection of our business objects in the Get* methods and I've defined Save and Delete methods that accept a collection of orders to either Save (update or insert) and Delete against the database. I'm sending Orders and OrderDetail collections in the case of Delete because I implemented a very simple change-tracking strategy and I want to be able to delete OrderDetails independently. OrderList is a simple Serializable class definition that inherits from List(Of Order) and has the KnownType attribute applied. This is needed so I can pass the Orders collection by reference so that primary keys and validation messages are sent back on the objects cleanly. (There are many ways to do this like passing messages and keys back separately. This is the easiest and cleanest in my opinion but you need to be aware that there is more data on the wire in this approach.)

<KnownType(GetType(Order))> _

<Serializable()> _

Public Class OrderList

Inherits List(Of Order)

End Class

The last step to defining our contract is to set up our business objects so that they can be serialized as a WCF DataContract. We can do this via the O/R designer. Just open up the OMS.dbml file and in the properties for the DataContext set the Serialization mode to "Unidirectional".

This adds the DataContract attribute to the classes and the DataMember attribute to the properties. If we're using a WCF service with the DataContractSerializer then all this will work just dandy. This is because this serializer supports bi-directional relationships (like we have with Order and OrderDetail). However if we were trying to serialize this with the XMLSerializer used with non-WCF services we'd have issues; you'd only be allowed to serialize objects with no cyclic relationships.

We also need to add the DataContract and DataMember attributes to our BaseBusiness class as well because our objects inherit from this class. Also add a property called IsDirty to the BaseBusiness class that will help us later when we need to implement change tracking on our client. In the next post I'll talk about my approach to doing that when we talk about the client.

Disconnected Retrieval

Now that we have our contracts and solution set up we're ready to start partying on the data and write our data access layer code. Let's start with retrieving our Products and Orders. To recap what I mentioned in previous articles, when working in connected mode with the DataContext, collections of objects are not retrieved until they are accessed. This means that if I write a LINQ query to select an Order from the database it will not execute any statements to retrieve the OrderDetails until I attempt to access that child collection. This is a good thing because it saves calls to the database if they are not needed.

However when we are disconnected like in a distributed application there is no automatic lazy loading available, you have to roll your own. You need to decide how chatty you want to be in favor of less data on the wire. If you expect the client to be scrolling through smaller child collections it's probably better to just bring down a larger chunk of data in one call instead of making the client call the service multiple times. (Just to be clear, the client can still perform LINQ queries on the collections on the client-side to do additional filtering but that will be performed with standard LINQ to Objects, LINQ to SQL is only used on our server in the middle-tier.)

In this example, we won't need any child collections filled on Products, we're just using it as a lookup list. However, with Orders I want to pull all the Orders that haven't shipped for a specific customer ID and I want to also pull down those OrderDetails all in one call.

So now create a OMSDataManager class in our data access layer which our service implementation can call into. It's really easy to retrieve the Products. Note that the objects are automatically detached from the DataContext when they are serialized to the client through the service so we don't have to explicitly detach them here.

Imports System.Data.Linq

Public Class OMSDataManager

Public Shared Function GetProductsList() As IEnumerable(Of Product)

'Create the Datacontext

Dim db As New OMSDataContext

'Return the list of products.

'Objects are detached from the DataContext

' automatically on serialization through the service

Return db.Products.AsEnumerable()

End Function

Now with Orders because we want load the OrderDetails as well we need to specify this in a DataLoadOptions object and pass them to the DataContext. The way we use this object is we call the LoadWith method to specify which data related to the main target should also be retrieved at the same time, resulting in one trip to the database. You pass it a Lambda expression to specify this. So to retrieve the Orders and OrderDetails in one LINQ to SQL query we can write:

Public Shared Function GetOrdersByCustomerID(ByVal customerID As Integer) As IEnumerable(Of Order)

'Create the Datacontext

Dim db As New OMSDataContext

'We want to also retrieve the OrderDetails collection as well.

Dim dlo As New DataLoadOptions()

dlo.LoadWith(Of Order)(Function(o As Order) o.OrderDetails)

db.LoadOptions = dlo

Dim orders = From order In db.Orders _

Select order _

Where order.CustomerID = customerID AndAlso _

order.ShipDate Is Nothing

Return orders.AsEnumerable()

End Function

Disconnected Updates and Deletes

Disconnected updates are a bit more involved from a decision-making point of view. The way you update your data depends on the schema of your database and how you are performing concurrency checking. The documentation in the library goes into details on all the ways you can update your data but the simplest is using a timestamp field to track row modifications.

In our OMS database I have a "Modified" field specified on every table and I'm using that field to perform the concurrency checks. This makes it much easier to deal with updates on our middle-tier because that field's value is used to compare with the property value on the object and the update will only succeed if the values match. If you don't provide a RowVersion mechanism like a timestamp on your tables then you have to pass all the original values into the middle-tier as well. So I'm all for saving space on the wire and writing less code and timestamps are a common way to do row versioning and concurrency checking so I feel that this is the best approach for most applications.

The other issue you need to consider is what the best way is to work with your data. Is it better to update one entity at a time (for instance just sending one Order at a time to save) or is it better to accept a collection of updates and make just one chunky call to the middle-tier? Or a combination? I've almost always gone with the batch editing approach in the types of Windows applications I've written. Although there is more data on the wire, there's less calls to the middle-tier. So the client form caches all the updates, inserts and deletes to the collections and passes that around. You really need to watch how much data you're passing around, so in this example we're only pulling up open orders for a particular customer. Remember every scenario needs to be evaluated carefully. I took a simple approach, more data on the wire but 2 calls to the middle-tier, one to update/insert data and one call to delete data.

You can streamline this more by only sending the changes to the middle-tier from the client but then you need more code to figure out how to merge the middle-tier changes (validation messages and keys, for instance) back into the client. This is really easy to do with if we were using DataSets but for this example I took to simple route and am passing the entire collection -- but be warned -- this approach may not scale depending on your situation. As always, your mileage may vary.

So let's write our Save code for our Orders. Unlike when using DataSets with the TableAdapter, the DataContext in disconnected mode needs to be told explicitly what to do, insert, update or delete. An easy way to tell whether the objects in our collection are added or modified is by checking the primary key; if it's less then 1 then we know we have a new object to save. We also use the IsDirty flag we added on our BaseBusiness class to determine how to attach our objects to the DataContext.

Public Shared Function SaveOrders(ByVal orders As IEnumerable(Of Order)) As Boolean

If orders Is Nothing OrElse orders.Count = 0 Then

Return False

End If

Dim db As New OMSDataContext

For Each o In orders

If o.OrderID = 0 Then

db.Orders.InsertOnSubmit(o)

Else

db.Orders.Attach(o, o.IsDirty)

End If

For Each d In o.OrderDetails

If d.IsDirty Then

If d.OrderDetailID = 0 Then

db.OrderDetails.InsertOnSubmit(d)

Else

db.OrderDetails.Attach(d, True)

End If

End If

Next

Next

Try

'This will continue to process the

'rest of the orders even if one fails

db.SubmitChanges(ConflictMode.ContinueOnConflict)

'Reset the IsDirty flag

For Each o In orders

o.IsDirty = False

For Each d In o.OrderDetails

d.IsDirty = False

Next

Next

Catch ex As ChangeConflictException

'TODO: Conflict Handling

Throw

Return False

End Try

Return True

End Function

Disconnected deletes of Orders in our case are very easy because of the way we set up how deletes should work by manually setting setting the DeleteOnNull attribute to true (see the end of this post for info on that). We just need to attach the incoming Orders and OrderDetails to the DataContext and then we can delete them all.

Public Shared Function DeleteOrders(ByVal orders As IEnumerable(Of Order), _

ByVal details As IEnumerable(Of OrderDetail)) As Boolean

Dim db As New OMSDataContext

Dim submit = False

If orders IsNot Nothing AndAlso orders.Count > 0 Then

'Delete orders and related details

db.Orders.AttachAll(orders, False)

db.Orders.DeleteAllOnSubmit(orders)

For Each o In orders

For Each detail In o.OrderDetails

db.OrderDetails.DeleteOnSubmit(detail)

Next

Next

submit = True

End If

If details IsNot Nothing AndAlso details.Count > 0 Then

'Now delete the order details that were passed in

' (these order parents were not deleted, just the details)

db.OrderDetails.AttachAll(details, False)

db.OrderDetails.DeleteAllOnSubmit(details)

submit = True

End If

Try

If submit Then

db.SubmitChanges(ConflictMode.ContinueOnConflict)

Return True

End If

Catch ex As ChangeConflictException

'TODO: Conflict Handling

Return False

End Try

End Function

The last thing we need to do to our middle-tier is call the data access layer from our service implementation. Note that I'm catching the ValidationException that's thrown from our business objects if they are invalid when the call to db.SubmitChanges is made and just returning false, which keeps our service from entering the fault state. The client will also perform validation of our business objects before the data is submitted to save a round-trip but the validation is also run here on the middle-tier. Validation messages are collected in a dictionary and serialized back to the client. (Read this post on how we set up validation on our LINQ to SQL classes.)

Imports OMSDataLayer

Public Class OMSService

Implements IOMSService

Public Sub New()

End Sub

Public Function GetOrdersByCustomerID(ByVal customerID As Integer) _

As IEnumerable(Of OMSDataLayer.Order) _

Implements IOMSService.GetOrdersByCustomerID

Return OMSDataManager.GetOrdersByCustomerID(customerID)

End Function

Public Function GetProductList() As IEnumerable(Of OMSDataLayer.Product) _

Implements IOMSService.GetProductList

Return OMSDataManager.GetProductsList()

End Function

Public Function DeleteOrders(ByVal orders As IEnumerable(Of OMSDataLayer.Order), _

ByVal details As IEnumerable(Of OMSDataLayer.OrderDetail)) _

As Boolean _

Implements IOMSService.DeleteOrders

Return OMSDataManager.DeleteOrders(orders, details)

End Function

Public Function SaveOrders(ByRef orders As OrderList) As Boolean _

Implements IOMSService.SaveOrders

Try

Return OMSDataManager.SaveOrders(orders)

Catch ex As ValidationException

Return False

End Try

End Function

End Class

So that's the meat of our middle-tier. In the next post we'll build our client form and implement a simple technique for change tracking.

UPDATE: Read how to create the client in this post.

Enjoy!

Comments

Anonymous

April 12, 2008

PingBack from http://microsoftnews.askpcdoc.com/?p=1663Anonymous

April 12, 2008

Continuing my Microsoft LINQ alerts... This time LINQ to SQL for VB developers. I'm a big fan of theAnonymous

April 12, 2008

http://blogcastrepository.com/blogs/wisemx/archive/2008/04/12/beth-massi-brings-linq-to-the-vb-gladiators.aspxAnonymous

April 13, 2008

Kudos! This is a very educational and informative article. Thanks Beth. bill burrowsAnonymous

April 14, 2008

In my last post we built the service and data access layer for our LINQ to SQL N-Tier application. InAnonymous

April 14, 2008

Great articles Beth, looking forward to the WPF implementation!Anonymous

April 16, 2008

In my previous posts this week I showed how to build a simple distributed application with a WindowsAnonymous

April 16, 2008

Hi. Just want to know how you can implement anonymous types to be returned from wcf service. For example: Dim orders = From order In db.Orders _ Select order.customerID,order.shipdateAnonymous

April 21, 2008

Hi Kent, You cannot pass anonymous types around like this. You need to create a named class to hold the values and then pass the named type instance instead. i.e. Public Class OrderInfo Private _custId As Integer Public Property CustomerID() As Integer Get Return _custId End Get Set(ByVal value As Integer) _custId = value End Set End Property Private _date As Date Public Property ShipDate() As Date Get Return _date End Get Set(ByVal value As Date) _date = value End Set End Property End Class Then your LINQ query would look like: Dim orders = From order In db.Orders _ Select New OrderInfo With _ {.CustomerID = order.customerID, _ .ShipDate = order.shipdate} Then you can pass IEnumerable(Of OrderInfo) out of the method/service as the return value. HTH, -BAnonymous

April 22, 2008

Loved reading this post. It answered a lot of questions I had. Looking forward to reading the next one.Anonymous

April 23, 2008

Hi Beth. Thanks for your answer re: anonymous types. Another quick question. Does WCF service have a maximum returning rows or size? I test a query that returns almost 20,000 rows and an error occured even if my maxReceivedMessageSize was set to 2147483647. Maybe I should filter the records before returning it to the client. -kentAnonymous

April 23, 2008

Hi Kent, You definitely want to limit the amount of data you return from your service. You're may be timing out the request. Your service will not be scalable if you return too much from your query. Make sure you read the above post carefully and pay special attention to the paragraphs where I discuss this in the "Disconnected Updates and Deletes" section (though the guidelines apply to retrieval as well). HTH, -BAnonymous

April 26, 2008

This is a 3 part post where Beth Massi, MSDN Community Program Manager for Visual Basic, constructs anAnonymous

May 11, 2008

This sample shows you one way to build an n-tier application using LINQ to SQL classes and a disconnected DataContext.Anonymous

May 27, 2008

Hi Beth, I saw the video about Visual Basic Power Pack and tried it. Could you please tell me if there is any provision to exclude a list of controls in the form which should not be in the print out? [ like i dont want to print the button with text "click to print" ] I have sent a mail to you too on this matter. Thanks, Regards, AliAnonymous

May 31, 2008

In my previous posts on LINQ to SQL I showed how to build LINQ to SQL classes and set up the data binding in your Windows applications. If you missed them: Related Data Binding and ComboBoxes with LINQ to SQL Creating Lookup Lists with LINQ to SQL One-To-ManAnonymous

June 04, 2008

In my previous posts on LINQ to SQL I showed how to build LINQ to SQL classes and set up the data binding in your Windows applications. If you missed them: Related Data Binding and ComboBoxes with LINQ to SQL Creating Lookup Lists with LINQ to SQL One-To-ManAnonymous

July 07, 2008

At the end of June I run workshops with 2 ISVs both looking to completely re-develop their applications.Anonymous

July 07, 2008

At the end of June I run workshops with 2 ISVs both looking to completely re-develop their applications.Anonymous

September 17, 2008

As promised, the links from my session today on the new data stuff on 3.5 and 3.5 SP1. Thanks for listening.Anonymous

September 26, 2008

Presentation: Data and Data Access - State of the Nation Presenter: Eric Nelson http://blogs.msdn.com/ericnelAnonymous

September 26, 2008

Presentation: Data and Data Access - State of the Nation Presenter: Eric Nelson http://blogs.msdn.com/ericnelAnonymous

September 26, 2008

Presentation: Data and Data Access - State of the Nation Presenter: Eric Nelson http://blogs.msdn.com/ericnelAnonymous

October 21, 2008

Hi Beth, I have a question concerning the SaveOrders() method both the "service-tier" and the "data-tier". In the "service-tier" you pass the orders as a OrderList: Public Function SaveOrders(ByRef orders As OrderList) As Boolean _ Implements IOMSService.SaveOrders But in the "data-tier" you pass them as IEnumerable(Of Order): Public Shared Function SaveOrders(ByRef orders As IEnumerable(Of Order)) As Boolean Why is this and shouldn't it be both of the same type : notably => OrderList type ? thx for any response Emmanuel NuyttensAnonymous

October 21, 2008

Hi Beth, continuing on the OrderSave(), strange ... but in VB.NET you can (as mentioned above) declare the pending orders as OrderList in the "service-tier" and as "IEnumerable (Of Order)" in the "data-tier", but in my C# code i get next error: Error 2 Argument '1': cannot convert from 'ref OMSDataLayer.OrderList' to 'ref System.Collections.Generic.IEnumerable<OMSDataLayer.Order>' Which seems to be reasonable to me because these are 2 different implementations. Of course if I change the reftype to OrderList in my "data-tier" (OMSDataManager.cs) , then it works properly. any clue ? greetz, Emmanuel Nuyttens.Anonymous

October 22, 2008

Hi Emmanuel, Yes, VB is doing the cast for me automatically. I implemented just basic IEnumerable in the data tier because I wanted to have flexibility on my service layer to define business collections and not have the data tier coupled to them. But this is just a simple example so you may need to change this for your situation. HTH, -BAnonymous

November 02, 2008

Dear Beth, I am a great fan of your posts and bloggings and have really learnt a lot from your articles....Keep up the good work. I am currently working on rewriting my DAL for a project, for using Linq to SQL. I have a table with TIMESCHEDULEs which has columns for each day of week. These columns are foreign keys to a TIMEZONE table which contains hour of day. This means 7 relations between the tables. The Timeschedule objects most often contains many equal timezones. How can I get distinct objects from the Timezone table into the Timeschedule object? Also when I create a new Timeschedule object on the client and fill it with existing Timezone objects, Linq To SQL inserts new rows of timezones instead of using the "old". Can you help me along?Anonymous

November 17, 2008

Hello Beth, Some question about the "generated" classes in the *.dbml mapping for LinqToSql: For example, i have the next (details-ommited) generated OrderDetail class: public partial class OrderDetail fyPropertyChanged { private int _OrderDetailID; private int _OrderID; private int _ProductID; private int _Quantity; private System.Nullable<decimal> _Price; private System.Data.Linq.Binary _Modified; private EntityRef<Order> _Order; private EntityRef<Product> _Product; ... } My Question is:

Why store the _ProductId variable as we have the _Product entity Reference ? This looks a bit redundant to me as you can do the next to refer to the ProductID for the accessor: public int ProductId { get { return _Product.ProductId } . . . } Or is there any specific reason for storing AND the _ProductId , and the Product Reference ? Thx for any Reponse, Emmanuel Nuyttens.

Anonymous

November 30, 2008

Hi Beth, Can this Linq-To-SQL N-Tier walkthrough run on VS 2008 Express Edition? -LCAnonymous

December 02, 2008

Hi Emmanuel, I'm not really sure but I assume it's required for the framework to operate. You should ask the question on the LINQ to SQL forum. Cheers, -BAnonymous

December 02, 2008

Hi LC, Yes, this should work with the Express editions as well. Cheers, -BAnonymous

December 02, 2008

Hi Beth, But i don't think i'm able to add WCF Service on VS 2008 Express Edition. -LCAnonymous

December 03, 2008

Hi LC, The template for a WCF service isn't available and the ASP.NET Development server isn't included in VB and C# Express editions however you can still manually create and self host the service. http://msdn.microsoft.com/en-us/library/ms750530.aspx Also, I believe that Web Developer Express contains the WCFService template: http://www.microsoft.com/express/vwd/ HTH, -BAnonymous

December 03, 2008

Hi Beth, Do you mean that i' able to create WCFService by using Web Developer Express & Add | Reference | to this project from VB Express? -LCAnonymous

December 04, 2008

Hi LC, That's my understanding, I've never tried it. But you can just self host the WCF service instead. I'd check out the express and WCF forums for more information. Cheers, -BAnonymous

December 04, 2008

Hi Beth, I'll check out too for more information. Thanks, -LCAnonymous

December 13, 2008

Hi Beth, I've tried. That's not possible to run N-Tier project in Express Edition. & even it's not able to separate TableAdapters from Datatset in Express Edition. -LC :)Anonymous

December 15, 2008

Hi LC, Yes although it's possible to manually write a class and a WCF service host yourself, you cannot split the dataset from the tableadapters in Express edition. I didn't know about the dataset issue in Express. I think that should be allowed so I gave feedback to the team about that. Splitting the DataSet from the TableAdapters is just good software design, regardless if you physically split the tiers behind a service, you should always design with logical layers. LC, I think it's time to upgrade ;-). Here's a link to a 90-day trial of VS Pro: http://msdn.microsoft.com/en-us/evalcenter/bb655861.aspx Cheers, -BAnonymous

December 15, 2008

Hi Beth, I'm thinking to upgrade too, but let me explore some more before i get into VS Pro. Thanks for responding to my question all the time. :) Thanks, -LCAnonymous

October 22, 2009

Hello Beth, How do i implement Master detail in Web application?Anonymous

October 26, 2009

Hi ifeoma, Take a look at www.asp.net/learn for tutorials on web applications. For instance, this one looks like what you're looking for: http://quickstarts.asp.net/QuickStartv20/aspnet/doc/data/databases.aspx#masterdetails You can also ask questions in their forums: http://forums.asp.net/ HTH, -BAnonymous

December 08, 2009

Hi first Thanks . and then plz give this sample in C# codeAnonymous

February 19, 2010

Well...ur article is a relief for all of us Visual Basic programmers....i have spent weeks for a decent article on N-tier applications in VB. I have just found ur blog and i will research it upAnonymous

March 08, 2010

hi beth how can I save an xml file without bom with linq to sql way?