Having a problem with nodes being removed from active Failover Cluster membership?

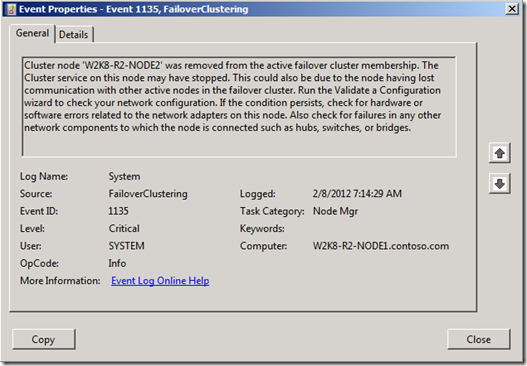

Welcome to the AskCore blog. Today, we are going to talk about nodes being removed from active Failover Cluster membership seemingly at random. If you are having problems with a node being removed from membership, you will see Event ID 1135 logged in your System Event Log.

This event will be logged on all nodes in the Cluster except for the node that was removed. It is logged because one of the nodes in the Cluster marked that node as down and then notified all of the other nodes. When the other nodes are notified, they tear down their heartbeat connections to the downed node.

What caused the node to be marked down?

All nodes in a Windows Server 2008 or 2008 R2 Failover Cluster talk to each other over the networks that have the "Allow cluster network communication on this network" setting enabled. The nodes send heartbeat packets across these networks to all of the other nodes. Each packet is supposed to be received by the other node, which then sends a response back. Each node in the Cluster monitors its own heartbeats to ensure that the network is up and the other nodes are up. The example below should help clarify this:

If any one of these packets is not returned, then that specific heartbeat is considered failed. For example, W2K8-R2-NODE2 sends a heartbeat request and receives a response from W2K8-R2-NODE1, so it determines that the network and the node are up. If W2K8-R2-NODE1 sends a request to W2K8-R2-NODE2 and does not get the response, it is considered a lost heartbeat and W2K8-R2-NODE1 keeps track of it. This missed response can cause W2K8-R2-NODE1 to show the network as down until another heartbeat request is received.

By default, Cluster nodes have a limit of 5 failures in 5 seconds before the connection is marked down. So if W2K8-R2-NODE1 does not receive the response 5 times in the time period, it considers that particular route to W2K8-R2-NODE2 to be down. If other routes are still considered to be up, W2K8-R2-NODE2 will remain as an active member.

If all routes are marked down for W2K8-R2-NODE2, it is removed from active Failover Cluster membership and the Event 1135 that you see in the first section is logged. On W2K8-R2-NODE2, the Cluster Service is terminated and then restarted so it can try to rejoin the Cluster.
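The route and membership logic described above can be sketched in a few lines of Python. This is a simplified model for illustration only, not how the Cluster Service is implemented: the `Route` class, the route names, and the choice to count consecutive misses are all assumptions, and only the threshold of 5 comes from the post.

```python
from dataclasses import dataclass

THRESHOLD = 5  # default number of missed heartbeats, as described in the post


@dataclass
class Route:
    """One network path from the monitoring node to a peer (hypothetical model)."""
    name: str
    missed: int = 0
    up: bool = True

    def record(self, got_reply: bool) -> None:
        """Record the outcome of one heartbeat request on this route."""
        if got_reply:
            # Assumption: a successful reply resets the miss count.
            self.missed = 0
            self.up = True
        else:
            self.missed += 1
            if self.missed >= THRESHOLD:
                self.up = False


def peer_is_member(routes: list[Route]) -> bool:
    """The peer stays an active member while at least one route is still up."""
    return any(route.up for route in routes)


routes = [Route("cluster-net-1"), Route("cluster-net-2")]

# cluster-net-1 misses five heartbeats in a row; cluster-net-2 keeps replying.
for _ in range(5):
    routes[0].record(False)
    routes[1].record(True)
print(routes[0].up, peer_is_member(routes))  # False True

# cluster-net-2 now misses five in a row as well, so no route is left.
for _ in range(5):
    routes[1].record(False)
print(peer_is_member(routes))  # False
```

In this model, the second `print` corresponds to the point at which the peer would be removed from membership and Event 1135 would be logged.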

For more information on how we handle specific routes going down with 3 or more nodes, please see the "Partitioned" Cluster Networks blog post written by Jeff Hughes.

Now that we know how the heartbeat process works, what are some of the known causes for the process to fail?

1. Actual network hardware failures. If the packet is lost on the wire somewhere between the nodes, then the heartbeats will fail. A network trace from both nodes involved will reveal this.

2. The profile for your network connections could possibly be bouncing from Domain to Public and back to Domain again. During the transition of these changes, network I/O can be blocked. You can check to see if this is the case by looking at the Network Profile Operational log. You can find this log by opening the Event Viewer and navigating to: Applications and Services Logs\Microsoft\Windows\NetworkProfile\Operational. Look at the events in this log on the node that was mentioned in the Event ID: 1135 and see if the profile was changing at this time. If so, please check out the KB article “The network location profile changes from "Domain" to "Public" in Windows 7 or in Windows Server 2008 R2”.

3. You have IPv6 enabled on the servers, but have the following two rules disabled for Inbound and Outbound in the Windows Firewall:

- Core Networking - Neighbor Discovery Advertisement

- Core Networking - Neighbor Discovery Solicitation

4. Anti-virus software could be interfering with this process also. If you suspect this, test by disabling or uninstalling the software. Do this at your own risk because you will be unprotected from viruses at this point.

5. Latency on your network could also cause this to happen. The packets may not be lost between the nodes, but they may not get to the nodes fast enough before the timeout period expires.

6. IPv6 is the default protocol that Failover Clustering will use for its heartbeats. The heartbeat itself is a UDP unicast network packet that communicates over Port 3343. If there are switches, firewalls, or routers not configured properly to allow this traffic through, you can experience issues like this.

7. IPsec security policy refreshes can also cause this problem. During an IPsec group policy update, all IPsec Security Associations (SAs) are torn down by Windows Firewall with Advanced Security (WFAS), and all network connectivity is blocked while this happens. If authentication with Active Directory is delayed while the Security Associations are being renegotiated, network communication stays blocked for the length of that delay. Cluster heartbeats cannot get through during that time, and cluster health monitoring will detect nodes as down if they do not respond within the 5 second threshold.

8. Old or out of date network card drivers and/or firmware. At times, a simple misconfiguration of the network card or switch can also cause loss of heartbeats.

9. Modern network cards and virtual network cards may be experiencing packet loss. This can be tracked by opening Performance Monitor and adding the counter "Network Interface\Packets Received Discarded". This counter is cumulative and only increases until the server is rebooted. A large number of dropped packets here could be a sign that the receive buffers on the network card are set too low or that the server is performing slowly and cannot handle the inbound traffic. Each network card manufacturer chooses whether to expose these settings in the properties of the network card, so you will need to refer to the manufacturer's website to find out how to increase these values and what the recommended values are. If you are running on VMware, the following blog post covers this in a little more detail, including how to tell whether this is the issue, and points you to the VMware article on the settings to change.

Nodes being removed from Failover Cluster membership on VMWare ESX?

https://blogs.technet.com/b/askcore/archive/2013/06/03/nodes-being-removed-from-failover-cluster-membership-on-vmware-esx.aspx
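Because the "Packets Received Discarded" counter from item 9 is cumulative, a single reading says little about what is happening right now; the change between two readings is more useful. The helper below is a minimal sketch of that arithmetic. The function name and the sample values are hypothetical, and the readings are assumed to have been copied by hand from Performance Monitor.

```python
def discard_rate(first_sample: int, second_sample: int, interval_seconds: float) -> float:
    """Return discarded packets per second between two cumulative counter readings."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    delta = second_sample - first_sample
    if delta < 0:
        # The counter only increases until a reboot, so a lower second reading
        # means the server restarted between the two samples.
        raise ValueError("counter decreased; the server was likely rebooted between samples")
    return delta / interval_seconds


# Hypothetical readings taken 60 seconds apart.
rate = discard_rate(12_000, 12_600, 60.0)
print(rate)  # 10.0
```

A rate that stays at or near zero suggests the discards are historical, while a rate that climbs around the time of the Event 1135 entries points toward the receive buffers or server load.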

These are the most common reasons that these events are logged, but there could be other reasons as well. The point of this blog was to give you some insight into the process and some ideas of what to look for. Some administrators raise the following values to their maximums to try to make this problem stop.

| Parameter | Default | Range |
| --- | --- | --- |
| SameSubnetDelay | 1000 milliseconds | 250-2000 milliseconds |
| CrossSubnetDelay | 1000 milliseconds | 250-4000 milliseconds |
| SameSubnetThreshold | 5 | 3-10 |
| CrossSubnetThreshold | 5 | 3-10 |

Increasing these values to their maximum may make the event and node removal go away, but it only masks the problem. It does not fix anything. The best thing to do is find out the root cause of the heartbeat failures and get it fixed. The only real need for increasing these values is in a multi-site scenario where nodes reside in different locations and network latency cannot be overcome.
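To see how much these settings change the picture, it helps to multiply each delay by its threshold. This is a rough approximation, assuming the time before a route is marked down is simply delay times threshold, which matches the "5 failures in 5 seconds" default described earlier. The function name and labels are illustrative only.

```python
def tolerance_ms(delay_ms: int, threshold: int) -> int:
    """Approximate time without a heartbeat response before a route is marked down."""
    return delay_ms * threshold


# (delay in milliseconds, threshold) pairs taken from the table of defaults and ranges.
settings = {
    "same subnet, default": (1000, 5),
    "same subnet, maximum": (2000, 10),
    "cross subnet, default": (1000, 5),
    "cross subnet, maximum": (4000, 10),
}

for label, (delay, threshold) in settings.items():
    print(f"{label}: {tolerance_ms(delay, threshold) / 1000:.0f} s")
# same subnet, default: 5 s
# same subnet, maximum: 20 s
# cross subnet, default: 5 s
# cross subnet, maximum: 40 s
```

Under that approximation, the maximum settings let a node go unresponsive for up to 20 or 40 seconds before it is removed, which is why raising them hides heartbeat failures without repairing their cause.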

I hope that this post helps you!

Thanks,

James Burrage

Senior Support Escalation Engineer

Windows High Availability Group

Comments

- Anonymous

January 01, 2003

Is it possible to create a failover cluster utilizing NIC teaming? If so, how are the public and private communications set?

- Anonymous

January 01, 2003

Nice

- Anonymous

January 01, 2003

Hey James, thank you for the document... Regarding this issue, I had already worked with William Effinger and he provided the same ... and also recommended this KB: http://support.microsoft.com/default.aspx?scid=kb;en-US;2524478

- Anonymous

January 01, 2003

Nice post.

- Anonymous

January 01, 2003

@gpo

The local account cannot be a part of the GPO and must remain. For the cluster, we use the local account for joins and communication.

- Anonymous

July 22, 2012

Very informative article. What I like most is "The best thing to do is find out the root cause of the heartbeat failures and get it fixed". I have been in the habit of increasing the SUBNET values to overcome the issue; I will stop that now.

- Anonymous

August 22, 2012

Thanks,

- Anonymous

November 01, 2012

Good article, thanks for sharing :)

- Anonymous

December 03, 2012

Can you tell me what the various problems are that will cause a node to fail over in a Windows Server cluster? Can loss of communication to a single LUN for a SQL Server cause the node to fail over?

- Anonymous

June 25, 2013

Great Article. Thank you so much

- Anonymous

February 18, 2014

Nice post

- Anonymous

September 07, 2014

Very informative!! Thanks

- Anonymous

September 12, 2014

The comment has been removed

- Anonymous

October 21, 2014

@John Marlin

http://blogs.technet.com/b/secguide/archive/2014/09/02/blocking-remote-use-of-local-accounts.aspx

- Anonymous

April 06, 2015

Thanks John for the article. This helped me in understanding the failovers occurring in our environment.

- Anonymous

May 26, 2015

I have an Exchange 2010 SP1 VM running on ESXi 5.1 and use NetBackup to run backups and snapshots. I have this problem when the snapshots of both nodes run at the same time. I logged a case with NetBackup; they say it is not their problem because they would never cause the Cluster service to crash. I opened a case with Microsoft; they asked me to apply all clustering hotfixes first, but still no luck. The event still occurs. I have tried all of the solutions below, but still no clue. Is anyone able to help?

1) Increased the cluster time

2) Disabled IPv6

3) Disabled Windows Firewall

4) Installed hotfixes (Windows 2008 R2 SP1 clustering)

- Anonymous

August 19, 2015

Hi Team

We have 2 servers, with nodes 0A and 0B, running on VMware ESXi hosts. Initially the 0B node was passive and 0A was active. We had a planned activity on the passive 0B node and took it down for a few minutes. Once the 0B node was powered on, it automatically became the active node, and the same thing happened with the 0A node after the activity. May we know why the node became active as soon as the passive node was turned on?

Thanks,

Rishi

- Anonymous

August 20, 2015

@Rishi,

This really looks like Preferred Owners is set and kicking in. Bring up the properties of the name of the role, far left column. You should see Preferred Owners. If both are set, it will do this. Set only the one node you want Preferred, not both.

- Anonymous

October 26, 2017

I lost connectivity between the nodes, which gave me Event ID 1135. The preferred nodes are set to both. Do you think I should change it to one?

- Anonymous

October 31, 2017

I would leave the Preferred Nodes set to both unless they are in separate subnets. If they are on separate subnets, then some customers will set the Preferred Owners to be the nodes in the production subnet.