Collect SharePoint ULS Logs quicker || Say “No” to Merge-SPLogFile

As SharePoint Support Engineers, we often need to collect ULS logs from one or several servers to help identify our customers' issues. Russ Maxwell has a great blog and script that collects ULS logs in a more "real-time" fashion: it collects from all servers in the farm from the time you execute the script to the time you terminate it. ( Jeff shared this out in an email a few weeks back ). We contemplated writing and releasing this, but we wanted to take the log collection process in a slightly different direction that we felt could also be useful and more efficient for Admins when "Microsoft" asks them to go grab ULS logs from specific servers. The intent of this script is not to take anything away from Russ' work; it is just another method to collect ULS logs.

You may be asking, why not just use "Merge-SPLogFile"?

This is a valid question, and I will tell you why I am not a fan of this command. I work specifically on the "SharePoint Search" team, and when a customer turns logging up to VerboseEx ( just for the Search categories ) so that we can capture critical information about the crawling, indexing, and querying processes, it can generate some really large ULS logs. On top of that, we may not need ULS logs from ALL servers. We may only need them from 3 or 4 servers, such as those hosting the Crawl or Content Processing Components. We have seen customers run "Merge-SPLogFile" for a specific time frame and end up with log files anywhere from 2 GB to 70 GB in size. If you have ever tried to parse files of this size, you know that it can take half a day with tools like "logparser" to split them into something that is easier to work with.

My colleague, Jeremy Walker, and I took Russ' concepts and decided to create a PowerShell script that collects ULS logs from a defined set of servers, within a given span of time, copies them to a location of your choice, and then zips them up. We felt this would make an Admin's job a bit easier, since they would no longer have to go grab those files and zip them up themselves. With that, I want to present the usage of this script.

Script Location:

https://gallery.technet.microsoft.com/Collect-ULS-logs-from-2d31fd19

Usage:

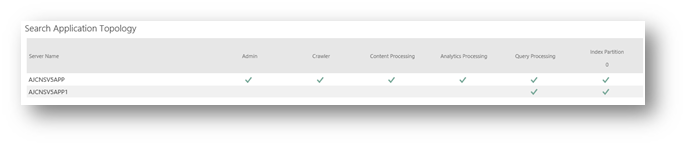

Let's say I have 4 servers in my SharePoint Farm, and 2 of those 4 belong to my search topology, which looks something like this:

For my example here, I am troubleshooting a query-related issue, so I want to collect ULS logs from "ajcnsv5app" and "ajcnsv5app1" from earlier in the day ( 11/14/2017 ), between roughly 12:30 PM and 1:30 PM.

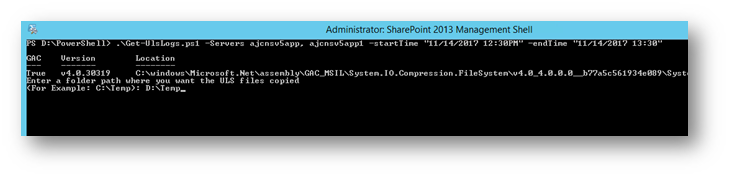

I have put this script on one of my servers, which has ample space, in a path like "D:\PowerShell".

I open the SharePoint Management Shell, or PowerShell ISE, and change directory to "D:\PowerShell".

The command will be run like this: ( a few comments below about usage )

.\Get-UlsLogs.ps1 -Servers ajcnsv5app, ajcnsv5app1 -startTime "11/14/2017 12:30PM" -endTime "11/14/2017 13:30"

- You can pass in any array of servers you have. If you leave the "-Servers" parameter off, it will grab ULS logs from all of the SharePoint servers in the farm.

- If you do not include "-startTime" and "-endTime" in the command, it will prompt you for each one, as we consider these mandatory parameters.

- As in the example above, the time can be in AM/PM or 24-hour format, and you can wrap it in single or double quotes.

- It will prompt you for the location where you want to save these files:

- As you can see, I chose "D:\Temp" since I have plenty of space on my server to store these.

- The next thing it will do is check whether "D:\Temp" exists. If it does not, it will tell you, and you will be prompted again for a path to store the files.

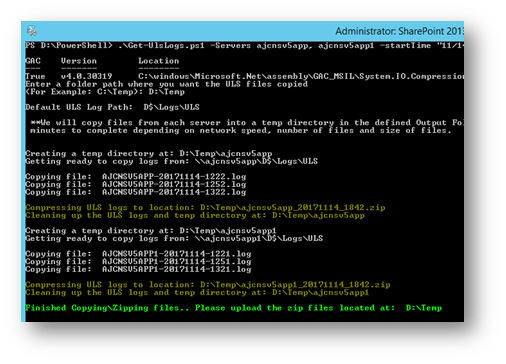
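The parameter behavior described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the source of Get-UlsLogs.ps1: the function names `Get-UlsTimeWindow` and `Read-ExistingFolder`, the prompt text, and the end-after-start check are all hypothetical. It relies on PowerShell converting strings such as "11/14/2017 12:30 PM" or "11/14/2017 13:30" to `[datetime]`, and on `Mandatory` parameters being prompted for when they are left off.

```powershell
# Hypothetical sketch; not the source of Get-UlsLogs.ps1.
function Get-UlsTimeWindow {
    [CmdletBinding()]
    param(
        # Optional. The article says the real script falls back to every
        # SharePoint server in the farm when this is left off.
        [string[]]$Servers,

        # Mandatory: PowerShell prompts for these when they are not supplied.
        # Typing them as [datetime] accepts both AM/PM and 24-hour strings.
        [Parameter(Mandatory = $true)][datetime]$StartTime,
        [Parameter(Mandatory = $true)][datetime]$EndTime
    )

    # Assumed sanity check; the article does not say the script does this.
    if ($EndTime -le $StartTime) {
        throw "endTime ($EndTime) must be later than startTime ($StartTime)."
    }

    [pscustomobject]@{
        Servers   = $Servers
        StartTime = $StartTime
        EndTime   = $EndTime
    }
}

function Read-ExistingFolder {
    param(
        # The prompt is injectable so the loop can be exercised without a console.
        [scriptblock]$Prompt = { Read-Host 'Enter a path to save the ULS logs' }
    )

    # Keep asking until the answer is a folder that already exists.
    while ($true) {
        $path = & $Prompt
        if ($path -and (Test-Path -LiteralPath $path -PathType Container)) {
            return $path
        }
        Write-Warning "The path '$path' does not exist. Please enter a different path."
    }
}
```

Calling `Get-UlsTimeWindow -StartTime '11/14/2017 12:30 PM' -EndTime '11/14/2017 13:30'` yields a one-hour window, and `Read-ExistingFolder` only returns once a path such as "D:\Temp" actually exists.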

- When you hit Enter, it will begin working through the servers you defined and do the following:

- Create a temp directory @ "D:\Temp\ajcnsv5app"

- Look for ULS logs that match the startTime/endTime criteria ( we don't actually crack the ULS logs open and pull out those specific times. We use the "CreationTime" property of each file and adjust the startTime a little by subtracting some minutes from the time entered. We did this to ensure we grab the ULS log that contains the start of the timeframe we are looking for. )

- It will copy the files that we matched on into this temp path @ "D:\Temp\ajcnsv5app"

- It will then zip those Log files up for you

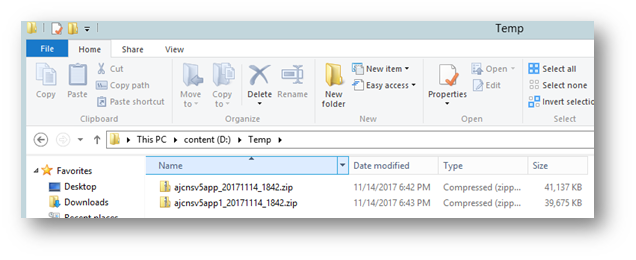

- It will also clean up the temp paths it creates for each server, so that all you have left are the zip files it created.

- You can then upload these Zipped ULS logs to the Workspace that Microsoft provides for you.
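The per-server steps above (match on "CreationTime" with an earlier start, copy to a temp folder, zip, clean up) can be sketched as shown below. This is a minimal illustration, not the published script: the function name `Copy-UlsLogWindow`, its parameters, and the 30-minute buffer are assumptions, since the article only says "some minutes" are subtracted. It also assumes PowerShell 5.0 or later for `Compress-Archive`, and that the caller already knows where each server's ULS folder is (for example, a UNC path).

```powershell
# Hypothetical sketch of the collect-and-zip steps; not the source of Get-UlsLogs.ps1.
function Copy-UlsLogWindow {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)][string]$LogFolder,    # a server's ULS log folder
        [Parameter(Mandatory = $true)][string]$ServerName,
        [Parameter(Mandatory = $true)][string]$Destination,  # e.g. D:\Temp
        [Parameter(Mandatory = $true)][datetime]$StartTime,
        [Parameter(Mandatory = $true)][datetime]$EndTime,
        [int]$BufferMinutes = 30                              # assumed value
    )

    # A log created shortly before StartTime can still hold entries from the
    # requested window, so move the lower bound back instead of opening files.
    $from = $StartTime.AddMinutes(-$BufferMinutes)
    $logs = @(Get-ChildItem -LiteralPath $LogFolder -Filter '*.log' -File |
        Where-Object { $_.CreationTime -ge $from -and $_.CreationTime -le $EndTime })

    if ($logs.Count -eq 0) {
        Write-Warning "No ULS logs for $ServerName match the requested window."
        return
    }

    # Stage the matching files in a per-server temp folder.
    $staging = Join-Path $Destination $ServerName
    New-Item -ItemType Directory -Path $staging -Force | Out-Null
    $logs | Copy-Item -Destination $staging

    # Zip the staged files, then remove the temp folder so only the zip remains.
    $zip = Join-Path $Destination "$ServerName.zip"
    Compress-Archive -Path (Join-Path $staging '*') -DestinationPath $zip -Force
    Remove-Item -LiteralPath $staging -Recurse -Force

    Get-Item -LiteralPath $zip
}
```

Filtering on file metadata keeps the selection fast because no log is opened, at the cost of sometimes including a little more than the exact window. For very large verbose logs, keep in mind that `Compress-Archive` is documented as having a 2 GB file size limit, so a different zip method may be needed in that case.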

I hope this is useful for those who choose to use it, and we appreciate any feedback or questions you may have!
