Using nginx and Docker Containers on Azure to migrate a Java/HTML/MongoDB Solution

Overview

By Shawn Cicoria, Partner Catalyst Team

This case study focuses on migrating existing multi-tiered applications to Azure, using Docker containers and nginx to expedite rehydration and reduce the overall surface area of open ports for the main application. After reviewing this case study you’ll have a basic understanding of a use case for nginx and of a common scenario when migrating on-premises applications to the cloud – specifically, handling non-standard HTTP ports that are artifacts of developer choices and go unnoticed on-premises.

The Problem

The two problems addressed here often arise when certain implementation choices are made and communication between an organization’s Development and Infrastructure teams is lacking. As teams progress towards a DevOps structure and culture, these issues are generally recognized and addressed early.

The example presented here is abstracted from a more complex solution and simplified to highlight the basic processes and core technologies that are applied to address some common issues.

Rapid Environment Setup and Deployment of Tiered Solution

Existing multi-tiered solutions are often hard to rehydrate in new environments and may take days, even weeks to get the right people focused on addressing the connections and linkage of all the moving parts.

In a good, well-established DevOps culture, the team can easily and quickly establish a full environment for development, test, staging, disaster recovery, even production with minimal intervention. Often, however, if teams are disconnected, lack concern or motivation, or simply lose focus until it’s too late, these processes and approaches are not followed, and migration is slower and more expensive than it needs to be.

Use of Non-Standard Ports

One primary issue is that developers often implement solutions that make use of non-standard TCP ports (e.g. HTTP(s) over port 8000 or 44300 – not 80 or 443). Standard or “well known ports” are listed here: https://en.wikipedia.org/wiki/List_of_TCP_and_UDP_port_numbers and here https://www.iana.org/assignments/service-names-port-numbers/service-names-port-numbers.xhtml.

When solutions are deployed internally on-premises, these issues generally go unnoticed, since traffic within the corporate network is usually routed without concern: ports are not generally locked down on routers, switches, and internal bridges. Other than additional configuration that may be needed on the server to open up these ports (which may be open by default if there is no firewall), the use of non-standard ports goes undetected or is pushed to a low-priority fix. However, moving to the cloud requires more explicit control and knowledge of port usage.

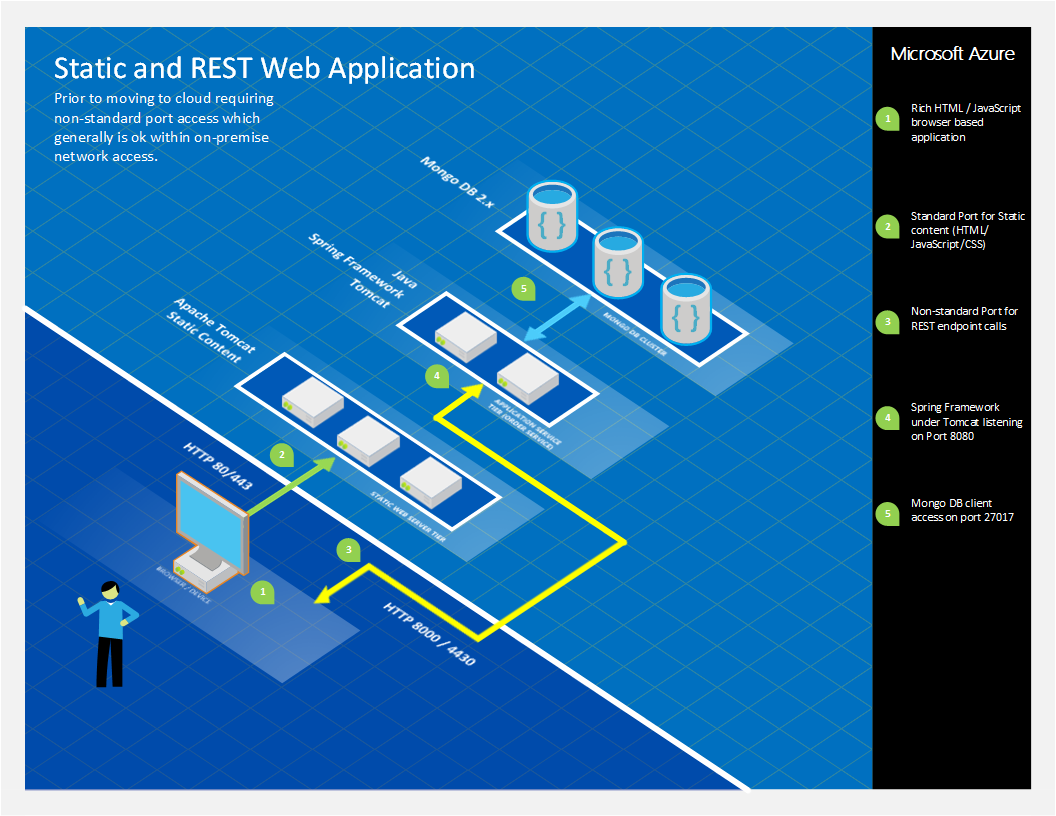

Existing On-Premises Solution Overview

The architecture that is being used is shown in the diagram in this section (Figure 1 - On-Premises and Non-Standard Ports). The core aspects of the solution are:

- Browser based rich client
  - HTML & JavaScript
- Tomcat used for static content – port 80/443
  - HTML / CSS / JavaScript / images
  - No direct calls from this tier to any other tier
  - “serverConfig.js” containing a variable that identifies the Spring Framework DNS name and port – for example:

    var baseAddress = 'https://apiserver:8080';

- Spring Framework / Java – port 8080/44300
  - Used for REST services
- Mongo DB – port 27017
  - Document Storage / Database

NOTE: While static content was deployed to an IaaS instance running Docker, further analysis is needed to determine whether a CDN is applicable and whether other application remediation approaches apply. This requires further review of nginx modules, CDN requirements, and cache-ability of content.

Non-Standard Ports

Non-standard ports are an issue as we move the solution to the cloud. Generally, edge gateways and proxy servers permit traffic only to known ports. While getting an exception implemented in a firewall rule for a specific application is an option, these exceptions create governance, management, and support overhead as the number of solutions in the enterprise that need them increases.
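Before a migration it can help to find where such ports are hard-coded. The following is a minimal sketch, not part of the original solution: the function name `find_nonstandard_ports` is hypothetical, and it only catches URLs written with an explicit port in the files it is given.

```shell
#!/bin/sh
# find_nonstandard_ports FILE...
# Lists http(s) URLs that carry an explicit port other than 80 or 443.
# Output uses grep's "file:line:match" format.
find_nonstandard_ports() {
    # -H always prints the file name, -n the line number, -o only the match.
    grep -HnoE 'https?://[A-Za-z0-9.-]+:[0-9]+' "$@" 2>/dev/null |
        grep -vE ':(80|443)$'
}
```

Run against a file such as “serverConfig.js”, this would report the line that sets baseAddress to a URL on port 8080.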

Rapid Environment Setup

As new developers and testers ramp onto a team and a solution, it’s important to be able to rapidly stand up a working environment so they can be productive right away. In addition, as new releases of the solution are implemented, testing the solution in a clean environment helps to diagnose situations where old code or remnants of prior solutions may remain. While production deployments may be different, starting from a clean, well-known configuration and applying a release to it reduces complexity and helps mitigate issues that occur as machines run over time and various configuration or environment changes are made and potentially not tracked.

Overview of the Solution

One approach is to use both Docker and nginx (https://nginx.org), while retaining the existing Spring-based Java “middle-tier” and keeping Mongo DB as the persistence tier.

Docker is a Linux container technology that provides a lightweight runtime and packaging tool, along with Docker Hub, allowing developers and IT Pros to automate their build pipeline and share artifacts with collaborators through public or private repositories. Please see https://docker.com for extensive information on Docker.

Rapid Environment Deployment and Repeatability

Docker is utilized as a deployment tool: several Dockerfile files define images that are built and deployed rapidly, and run as containers across one or more Docker hosts as required.

Docker offers many attributes that assist in repeatability. While this case study did not cover clustering approaches (as there are several in Docker), Docker offers Swarm as its native (in beta) approach for Docker clustering.

This solution also did not publish to the Docker Hub; it created each build on a local Docker host and ran it directly from there. While Docker Hub is an option, there are approaches to running your own Docker repository:

https://azure.microsoft.com/blog/2014/11/11/deploying-your-own-private-docker-registry-on-azure/

Mongo DB and Docker

Docker offers an official Mongo DB image; the following solution is based upon that official image, and includes some modifications to insert seed data and check for ready-state. This seed data is just for demonstration purposes and not intended for production. Note that the primary purpose of these Dockerfiles and the scripts was to rapidly establish a running Solution environment with all tiers working, so that developers and testers can validate a new build.

Dockerfile for Mongo

# Dockerizing MongoDB: Dockerfile for building MongoDB images

# Based on ubuntu:latest, installs MongoDB following the instructions from:

# https://docs.mongodb.org/manual/tutorial/install-mongodb-on-ubuntu/

FROM ubuntu:latest

MAINTAINER Shawn Cicoria shawn.cicoria@microsoft.com

ADD mongorun.sh .

# Installation:

# Import MongoDB public GPG key AND create a MongoDB list file

RUN apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10

RUN echo 'deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen' | tee /etc/apt/sources.list.d/10gen.list

# Update apt-get sources AND install MongoDB

RUN apt-get update && apt-get install -y mongodb-org

# Create the MongoDB data directory

RUN mkdir -p -m 777 /data/db

RUN mkdir -p -m 777 /tmp

COPY mongodb.catalog.json /tmp/mongodb.catalog.json

COPY mongodb.dealers.json /tmp/mongodb.dealers.json

COPY mongodb.quotes.json /tmp/mongodb.quotes.json

COPY mongorun.sh /tmp/mongorun.sh

WORKDIR /tmp

RUN chmod +x ./mongorun.sh

# Expose port #27017 from the container to the host

EXPOSE 27017

VOLUME /data/db

ENTRYPOINT ["./mongorun.sh"]

Mongo Startup Script

The script utilized as the entry point in the Dockerfile initializes a log file, and then starts MongoDB as a background process. It then waits for MongoDB to be ready by reading the log file for the message “waiting for connections on port”. This represents one way to check for readiness. In the OrderService approach that follows in the next section, you will see another way.

#!/bin/bash

# Initialize a mongo data folder and logfile

sudo rm -r /data/db 1>/dev/null 2>/dev/null

mkdir -p -m 777 /data/db

touch /data/db/mongodb.log

echo step 1

# Start mongodb with logging

# --logpath Without this mongod will output all log information to the standard output.

# --logappend Ensure mongod appends new entries to the end of the logfile. We create it first so that the below tail always finds something

/usr/bin/mongod --smallfiles --quiet --logpath /data/db/mongodb.log --logappend &

MONGO_PID=$!

echo step 2

# Wait until mongo logs that it's ready (or timeout after 90s)

COUNTER=0

grep -q 'waiting for connections on port' /data/db/mongodb.log

while [[ $? -ne 0 && $COUNTER -lt 90 ]] ; do

sleep 2

let COUNTER+=2

echo "Waiting for mongo to initialize... ($COUNTER seconds so far)"

grep -q 'waiting for connections on port' /data/db/mongodb.log

done

# Now we know mongo is ready and can continue with other commands

echo now populate

# At some point, check whether the seed data was already imported; for this demo just import it.

/usr/bin/mongoimport -d ordering -c catalog < /tmp/mongodb.catalog.json

/usr/bin/mongoimport -d ordering -c dealers < /tmp/mongodb.dealers.json

/usr/bin/mongoimport -d ordering -c quotes < /tmp/mongodb.quotes.json

wait $MONGO_PID
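The log-polling step in the script above can be factored into a reusable function. This is a sketch under stated assumptions: the name `wait_for_log_line` is hypothetical, and unlike the original loop it reports a timeout through its exit status so the caller can decide whether to continue.

```shell
#!/bin/sh
# wait_for_log_line FILE PATTERN TIMEOUT_SECONDS
# Polls FILE once per second until a line matching PATTERN appears.
# Returns 0 when found, 1 if TIMEOUT_SECONDS elapse first.
wait_for_log_line() {
    file=$1
    pattern=$2
    timeout=$3
    waited=0
    until grep -q -- "$pattern" "$file" 2>/dev/null; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}
```

In the startup script it might be called as `wait_for_log_line /data/db/mongodb.log 'waiting for connections on port' 90 || exit 1`, so that the seed import is skipped when MongoDB never becomes ready.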

Java Spring and Docker

For the Java Spring framework based application tier, which provides various REST services that the HTML front-end calls directly, the following Dockerfile was used:

OrderService Dockerfile

FROM java:8-jre

MAINTAINER Shawn Cicoria shawn.cicoria@microsoft.com

ENV APP_HOME /usr/local/app

ENV PATH $APP_HOME:$PATH

RUN mkdir -p "$APP_HOME"

WORKDIR $APP_HOME

ADD ordering-service-0.1.0.jar $APP_HOME/

ADD startService.sh $APP_HOME/

RUN chmod +x startService.sh

EXPOSE 8080

#CMD ["java", "-jar", "ordering-service-0.1.0.jar"]

CMD ["./startService.sh"]

StartService Script

The OrderService Dockerfile references the following script, which polls MongoDB using a hostname of ‘mongodb’ (you’ll see that alias used in the ‘docker run --link’ commands later) to check for ready state.

#!/bin/bash

while ! curl http://mongodb:27017/

do

echo "$(date) - still trying"

sleep 1

done

echo "$(date) - connected successfully"

java -jar ordering-service-0.1.0.jar
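The loop above retries forever if MongoDB never comes up. A bounded variant is sketched below; the `retry` helper is hypothetical and not part of the original scripts, and it runs whatever probe command it is given.

```shell
#!/bin/sh
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds; gives up after MAX_ATTEMPTS tries.
retry() {
    max=$1
    delay=$2
    shift 2
    attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            echo "$(date) - giving up after $attempt attempts" >&2
            return 1
        fi
        echo "$(date) - still trying ($attempt/$max)" >&2
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    echo "$(date) - connected successfully" >&2
}
```

Assuming the same curl probe as the original script, the service could then be started with `retry 60 1 curl --silent --output /dev/null http://mongodb:27017/ && exec java -jar ordering-service-0.1.0.jar`. If the probe never succeeds the container exits with an error instead of hanging.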

nginx and Docker

nginx [engine x] is a popular HTTP and reverse proxy server. In this solution it serves the static file content and proxies requests to the OrderService, using simple rules. The primary benefit is consolidating all traffic onto a single TCP port. nginx also offers distributed and rule-based proxy routing and an abstraction of the OrderService endpoints, which provides location transparency.

Nginx Dockerfile

Note that the Dockerfile uses the official nginx base image from Docker and extends it with custom configuration and content. The “daemon off;” directive is passed to nginx so that it stays in the foreground; without it nginx would daemonize, the container’s main process would exit, and Docker would stop the container.

FROM nginx:1.7.10

MAINTAINER Shawn Cicoria shawn.cicoria@microsoft.com

ENV WEB_HOME /usr/local/web

ADD Web.tar $WEB_HOME/

COPY nginx.conf /etc/nginx/nginx.conf

EXPOSE 8000

CMD ["nginx", "-g", "daemon off;"]

Nginx Configuration

worker_processes 1;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

gzip on;

server {

listen 8000;

server_name localhost;

location / {

root /usr/local/web/Web;

index index.html index.htm;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

location /catalog {

proxy_pass http://orderservice:8080;

}

location /quotes {

proxy_pass http://orderservice:8080;

}

location /shipments {

proxy_pass http://orderservice:8080;

}

}

}
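The three proxy rules in the configuration above differ only in their path prefix, so they could be generated from a list of routes when the list grows. This is a sketch only: `emit_proxy_locations` is a hypothetical helper, and the upstream URL assumes the OrderService listens for plain HTTP on port 8080.

```shell
#!/bin/sh
# emit_proxy_locations UPSTREAM PREFIX...
# Prints one nginx "location" block per PREFIX, each proxying to UPSTREAM.
emit_proxy_locations() {
    upstream=$1
    shift
    for prefix in "$@"; do
        printf 'location %s {\n    proxy_pass %s;\n}\n' "$prefix" "$upstream"
    done
}
```

For example, `emit_proxy_locations http://orderservice:8080 /catalog /quotes /shipments` prints three blocks that can be pasted into, or included from, the server section.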

Solution Architecture and Container Approach

As shown in the diagram at the end of this section, the solution takes advantage of Docker and can scale out to numerous containers across one or more Docker hosts. Note that we’ve also achieved use of a single TCP port, along with location transparency between the running containers and the calling clients.

In addition, for full clustering support you must ensure that any calls made between tiers leverage Docker’s linking of containers, since Docker assigns dynamic IP addresses and container names to each running container.

Docker Build Script

The Docker build script is below and is fairly standard.

#!/bin/sh

cd mongoseed

docker build -t scicoria/mongoseed:0.1 .

cd ../orderService

docker build -t scicoria/orderservice:0.1 .

cd ../staticsite

docker build -t scicoria/staticsite:0.1 .

cd ..
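The build script above continues even if one build fails. A variant that stops at the first failure and supports a dry run is sketched here; `build_images` and the `DOCKER` override variable are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# DOCKER can be overridden (for example DOCKER=echo) to preview the
# commands without running them.
DOCKER=${DOCKER:-docker}

# build_images TAG DIR:IMAGE...
# Builds each DIR as IMAGE:TAG and stops at the first failure.
build_images() {
    tag=$1
    shift
    for spec in "$@"; do
        dir=${spec%%:*}     # text before the first colon
        image=${spec#*:}    # text after the first colon
        "$DOCKER" build -t "$image:$tag" "$dir" || return 1
    done
}
```

With the directories used in this case study, the call would be `build_images 0.1 mongoseed:scicoria/mongoseed orderService:scicoria/orderservice staticsite:scicoria/staticsite`. Passing the directory as the build context replaces the `cd` steps of the original script.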

Docker Run Script

For running the containers, it’s important that each “client” container is “linked” to the containers it needs to initiate requests to. In the following script the following links are in place:

1. StaticWeb -> OrderService (DNS name orderservice) on Port 8080

2. OrderService -> MongoDB (DNS name mongodb) on Port 27017

#!/bin/sh

docker run -d -p 27017:27017 --name mongodb -v /data/db:/data/db scicoria/mongoseed:0.1

docker run -d -p 8080:8080 --name orderservice --link mongodb:mongodb scicoria/orderservice:0.1

docker run -d -p 8000:8000 --link orderservice:orderservice scicoria/staticsite:0.1

Docker and Linking

Docker provides virtual networking among containers, and in this solution the communication between containers is declared explicitly when each container is run. The ports identified on the command line with the ‘-p’ parameters publish container ports on the Docker host for external communication into the container; the ‘--link’ parameter is what makes a linked container reachable by its alias from the container being started. In addition you should be aware of the hostnames, dynamic IP addresses, and ports that are used, as Docker effectively provides NAT (network address translation) services for containers.
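With this style of linking, Docker adds an entry for the link alias to the /etc/hosts file of the container being started, which is why names such as mongodb and orderservice resolve. The sketch below shows one way to check that mapping from inside a container; `lookup_alias` is a hypothetical helper, not a Docker command.

```shell
#!/bin/sh
# lookup_alias ALIAS [HOSTS_FILE]
# Prints the first IP address mapped to ALIAS in a hosts-format file.
# Exits non-zero when ALIAS is not present.
lookup_alias() {
    alias_name=$1
    hosts_file=${2:-/etc/hosts}
    awk -v name="$alias_name" '
        /^[[:space:]]*#/ { next }          # skip comment lines
        {
            for (i = 2; i <= NF; i++)      # fields after the IP are names
                if ($i == name) { print $1; found = 1; exit }
        }
        END { exit found ? 0 : 1 }
    ' "$hosts_file"
}
```

Running `lookup_alias mongodb` inside the orderservice container would print the address Docker assigned to the MongoDB container for that run.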

Eliminate use of Non-Standard Ports

Finally, with this solution we can eliminate the use of non-standard ports and reduce configuration, support, and troubleshooting issues with regards to corporate firewalls, proxy servers, etc. that may prevent non-standard port traffic from traversing the network.

The final code used in the static web site for the JavaScript calls simply builds the endpoint name from the browser’s location object. Since all traffic is now routed through the same nginx front end, and nginx determines which traffic to proxy to OrderService, we’ve reduced that complexity significantly.

So, in updating the static web site’s “serverConfig.js”, which executes in the browser, to the following, we have a minimal impact on the static web site and its supporting JavaScript:

var baseAddress = window.location.protocol + '//' + window.location.hostname;

Code Artifacts

The source files are published to GitHub here – with the [nginix] branch represented here:

https://github.com/cicorias/IgniteARMDocker/tree/nginix [nginx] (note the different branch from ‘master’).

This solution and source is part of a Pre-MS Ignite Session on DevOps – with additional walk-through items here: https://github.com/Microsoft/PartsUnlimitedMRP

Opportunities for Reuse

- The use of nginx for a Web front end provides an approach for layer 7 proxying and SSL termination. This example just scratches the surface of nginx’s full capabilities, which are on par with Application Request Routing (ARR) under IIS; nginx also runs on both Linux and Windows.

- The various scripts and Dockerfiles represent simple builds and deployments and can be reused as is if published directly to Docker Hub.

Further Research

The pattern applied here is useful for many solutions. The concept of location transparency for service endpoints, combined with nginx, provides a simple but effective and highly performant proxy-based approach. In addition, nginx is extensible, allowing proxy calls to be routed to different endpoints based upon various attributes such as load, time of day, versioning, etc.

Shawn Cicoria has been working on distributed systems for nearly 2 decades after dealing his last deck of cards on the Blackjack table. Follow Shawn at https://bit.ly/cicorias or @cicorias