Capturing data in C++ AMP

Hello, my name is Pooja Nagpal. I am an engineer on the C++ AMP team. In this post, I would like to talk about how data is passed into a parallel_for_each for use on the accelerator.

The simplest method of passing in data is by using lambdas in the parallel_for_each. Variables captured by the lambda are automatically packaged by the C++ AMP runtime and made available on the accelerator. Let’s look closely at different capture methods and how they affect the way data is passed in.

Empty capture list [ ]

The simplest parallel_for_each you could write uses the empty capture clause. This means it does not use any variables from the host side. Since no variables are passed in, the parallel_for_each cannot communicate anything back.

    parallel_for_each(
        extent<1>(size),
        [] (index<1> idx) restrict(amp)
        {
            // no data passed in.
            // cannot communicate results back
        });

That would be a fairly useless way to try to use C++ AMP, so let’s try and capture something. C++ allows us to capture variables from the enclosing scope either by value or by reference. Let’s start by looking at capture by reference.

Capture by reference [&]

When we capture an object by reference, it means we are using the original object in the body of the lambda. The only types C++ AMP allows to be captured by reference are concurrency::array and concurrency::graphics::texture. Memory for these data containers is directly allocated in the address space of the specified accelerator and this allows the original object to be accessed inside a parallel_for_each.

Here is a small example where we have added ‘&arr’ to the capture clause to specify that the body of the lambda will access the array variable ‘arr’ by reference. Since the array resides in accelerator memory, updates made to the data are not automatically visible on the host. To get the updates back, we need to explicitly transfer the data from the accelerator to host memory after the call to parallel_for_each.

    accelerator_view acc_view = accelerator().default_view;
    std::vector<int> data(size);

    // 'arr' is allocated on acc_view and 'data' is copied in
    array<int, 1> arr(size, data.begin(), acc_view);

    // 'arr' already present on accelerator
    // and must be captured by reference
    parallel_for_each(
        arr.extent,
        [&arr] (index<1> idx) restrict(amp)
        {
            arr[idx] = arr[idx] + 1;
        });

    // explicitly copy updates to 'arr' back to host memory
    copy(arr, data.begin());

If you accidentally capture an array or texture by value, your app will fail to compile with an error like the one shown below.

error C3597: 'arr': concurrency::array is not allowed to be captured by value if the lambda is amp restricted

You can only capture array and texture objects that are declared on the same accelerator as the one on which the parallel_for_each is executing. If mismatching accelerators are used, your app will fail with a runtime_exception.

Capture by value [=]

The other method of capturing variables is by value. As the name indicates, this capture method uses a copy of the original object in the body of the lambda. Since types other than array or texture are allocated in the address space of the host, a copy of the object needs to be created on the accelerator for use in the parallel_for_each. The C++ AMP runtime automatically takes care of this for us by marshaling over copies of all variables that are captured by value.

Here is an example where we have added ‘=’ to the capture clause to specify that the body of the lambda accesses all captured variables by value unless they are explicitly opted out. (In this case, the array ‘arr’ is opted out of the capture by value.)

    array<int, 1> arr(size);

    int a = 10; // 'a' must be copied by value

    parallel_for_each(
        arr.extent,
        [=, &arr](index<1> idx) restrict(amp)
        {
            // 'a' is captured by value,
            // 'arr' is captured by reference
            arr[idx] = arr[idx] + a;
        });
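The `[=, &arr]` clause is ordinary C++ lambda syntax, so its meaning can be tried on the host without any accelerator. The sketch below is plain standard C++, not C++ AMP: a std::vector stands in for the array, and the names `add_offset_in_place` and `demo_mixed_capture` are made up for illustration. It only shows the capture semantics (everything by value except the one variable named with `&`), not how the C++ AMP runtime marshals data.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Host-only illustration (hypothetical helper, not a C++ AMP API).
// Adds 'a' to every element of 'values' using a lambda whose capture
// clause is [=, &values]: everything by value except 'values'.
void add_offset_in_place(std::vector<int>& values, int a) {
    auto body = [=, &values](std::size_t i) {
        values[i] += a;   // writes go to the caller's vector
        // a = 0;         // would not compile: by-value captures are const
    };

    for (std::size_t i = 0; i < values.size(); ++i) {
        body(i);
    }
}

// Small self-check showing the effect on the caller's variables.
void demo_mixed_capture() {
    std::vector<int> values{1, 2, 3};
    int a = 10;
    add_offset_in_place(values, a);
    assert(values[0] == 11 && values[1] == 12 && values[2] == 13);
    assert(a == 10);  // the by-value capture never touches the original
}
```

The vector plays the role of ‘arr’ here only in the sense that it is the one object the lambda reaches by reference; unlike a concurrency::array, it lives in host memory.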

If you accidentally capture any other variable by reference, your app will fail to compile with an error like the one shown below.

error C3590: 'a': by-reference capture or 'this' capture is unsupported if the lambda is amp restricted

error C3581: 'main::<lambda_5653ec9b558fda8cf41db3f029d82d48>': unsupported type in amp restricted code

pointer or reference is not allowed as pointed to type, array element type or data member type (except reference to concurrency::array/texture)

Capture by value [=] array_view and writeonly_texture_view

The rule to capture all other variables by value extends to the C++ AMP types concurrency::array_view and concurrency::graphics::writeonly_texture_view as well. Instances of these types can only be captured by value.

These types represent views of data and can be thought of as pointers to the actual underlying data. If you declare an array_view or writeonly_texture_view object outside the parallel_for_each (in host code), the object essentially wraps a reference to the data which it is a view of. Thus, like any other regular C++ object, capturing the array_view by value in a parallel_for_each results in a copy of the object being marshaled to the accelerator. The copied object continues pointing to its underlying data source.
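The pointer analogy can be sketched on the host in plain standard C++. The `IntView` struct below is a hypothetical stand-in for array_view (it is not part of the C++ AMP API, and it does no synchronization between host and accelerator): it stores only a pointer and a length, so copying it by value into a lambda copies the handle while both copies keep referring to the same vector.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for array_view: a non-owning handle that only
// stores a pointer to the data and its length. Not part of C++ AMP.
struct IntView {
    int* data;
    std::size_t size;

    // const member: the handle itself is not changed, only the data
    // it points to can be modified through the returned reference.
    int& operator[](std::size_t i) const {
        assert(i < size);
        return data[i];
    }
};

// Captures the view by value and increments every element through the copy.
// Returns the first element of the original vector afterwards (0 if empty).
int increment_through_copied_view(std::vector<int>& storage) {
    IntView view{storage.data(), storage.size()};

    // [=] copies 'view' into the closure; the copy still points at 'storage'.
    auto body = [=](std::size_t i) { view[i]++; };

    for (std::size_t i = 0; i < view.size; ++i) {
        body(i);
    }
    return storage.empty() ? 0 : storage[0];
}
```

Because the closure's copy of the handle is const, the lambda can write through it but cannot make it point somewhere else, which mirrors the behaviour described for views captured by value.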

The example below captures the array_view ‘arr_view’ by value by adding a ‘=’ to the capture clause. To get back updates to the data, we can either explicitly copy the array_view back to the host or take advantage of its automatic synchronization on first use.

    std::vector<int> data(size);

    array_view<int, 1> arr_view(size, data);

    // arr_view captured by value. If needed the underlying data
    // is copied to the accelerator at the time of capture

    parallel_for_each(
        arr_view.extent,
        [=] (index<1> idx) restrict(amp)
        {
            arr_view[idx]++;
        });

    // arr_view automatically synchronized on
    // first access after parallel_for_each
    std::cout << arr_view[0] << std::endl;

If you accidentally capture an array_view or writeonly_texture_view by reference, your app will fail to compile with the error below.

error C3590: 'arr_view': by-reference capture or 'this' capture is unsupported if the lambda is amp restricted

error C3581: 'main::<lambda_5653ec9b558fda8cf41db3f029d82d48>': unsupported type in amp restricted code

pointer or reference is not allowed as pointed to type, array element type or data member type (except reference to concurrency::array/texture)

Capture by value [=] mutable

By default, variables that are captured by value appear as if they were const and cannot be modified in the body of the lambda. For array_view or writeonly_texture_view objects, this means you are allowed to modify the data they point to but not change the views themselves to point to a different memory location.

To allow modifications to variables captured by value, add the mutable keyword to the lambda. Remember that these modifications will be local to the lifetime of the lambda and will not be visible outside of the parallel_for_each. Passing a mutable lambda to parallel_for_each is not advised since this can adversely affect the performance of your app, and we may disallow the mutable option in future versions anyway. To read more about the performance implications of mutable, please look at our blog post on constant memory.

    array_view<int, 1> arr_view(…);

    int a = 10;

    // NOTE: Adding mutable can affect the performance of
    // your app
    parallel_for_each(
        arr_view.extent,
        [=](index<1> idx) mutable restrict(amp)
        {
            // Allow updates to 'a'
            a--;

            // Allow updates to 'arr_view' object
            arr_view = arr_view.section(…);
        });

    // Modifications to 'a' and 'arr_view' cannot be copied back out
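The point that mutable changes stay local to the lambda can be checked with a host-only sketch in standard C++. Nothing here uses C++ AMP, and the names `MutableResult` and `run_mutable_capture` are invented for this illustration. It shows only the language rule (the closure modifies its private copy); how that copy is handled per thread on an accelerator is not modelled.

```cpp
#include <cassert>

// Hypothetical result type for this illustration.
struct MutableResult {
    int inside;   // value of the closure's private copy after the last call
    int outside;  // value of the original variable after all calls
};

// Runs a mutable by-value lambda three times and reports what happened.
MutableResult run_mutable_capture() {
    int a = 10;

    // 'mutable' lets the body modify its own copy of 'a'.
    auto body = [=]() mutable { return --a; };

    int inside = 0;
    for (int i = 0; i < 3; ++i) {
        inside = body();  // the closure's copy goes 9, 8, 7
    }
    assert(a == 10);      // the original is never touched
    return MutableResult{inside, a};
}
```

After the three calls the closure's copy holds 7 while the original ‘a’ is still 10, which is the host-side counterpart of the statement that modifications cannot be copied back out.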

Bringing it all together

Everything can be summarized in two simple capture rules:

1. array and texture objects must be captured by reference

2. Everything else must be captured by value

Now let us see how this all works together by using an example. In the code snippet below, the integer ‘a’, array_view ‘arr_view’, and array ‘arr’ are declared outside the parallel_for_each and captured for use on the accelerator. Everything but ‘arr’ is captured by value according to the rules above.

    accelerator_view acc_view = accelerator().default_view;

    int a = 10;
    std::vector<int> view_data(size);
    array_view<int, 1> arr_view(size, view_data);
    std::vector<int> arr_data(size);
    array<int, 1> arr(size, arr_data.begin(), acc_view);

    // 'arr_view' copied by value
    // 'a' copied by value.
    // 'arr' already allocated on accelerator and captured by reference

    parallel_for_each(
        arr.extent,
        [=, &arr](index<1> idx) restrict(amp)
        {
            arr[idx] = arr_view[idx] + a;
            arr_view[idx]++;
        });

    // 'arr' needs to be explicitly copied back
    copy(arr, arr_data.begin());

    // 'arr_view' is synchronized back on first use
    std::cout << arr_view[0] << std::endl;

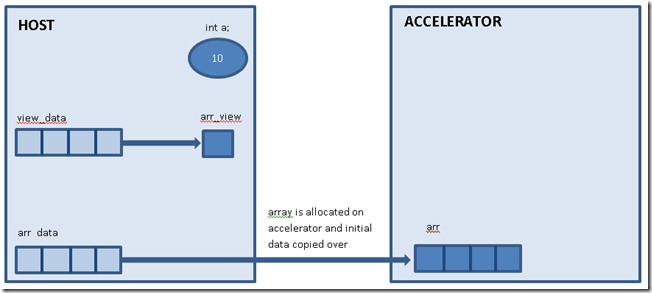

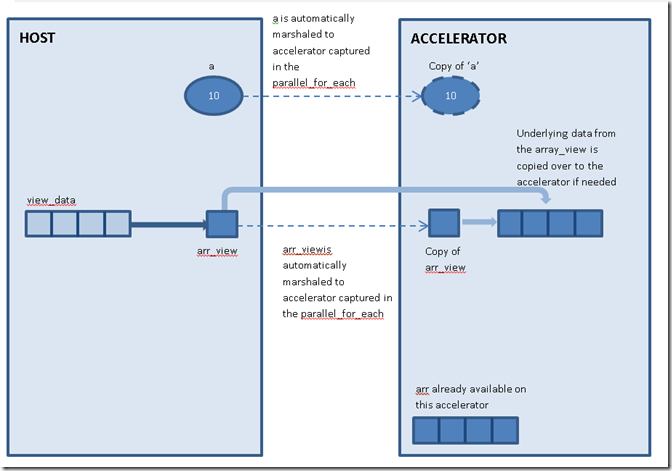

The picture below shows one way of visualizing how data makes its way from the host to the accelerator.

On declaration, memory for the array ‘arr’ is immediately allocated on the accelerator and any initial data must be explicitly copied from the host. In this case we copy in data using a data container such as a vector. Variables ‘a’ and ‘arr_view’ are not bound to an accelerator and are not copied over just yet.

    accelerator_view acc_view = accelerator().default_view;

    int a = 10;
    std::vector<int> view_data(size);
    array_view<int, 1> arr_view(size, view_data);
    std::vector<int> arr_data(size);
    array<int, 1> arr(size, arr_data.begin(), acc_view);

When parallel_for_each is invoked, data that is not present on this accelerator is marshaled over. In our example, this would include integer ‘a’ and the array_view ‘arr_view’.

    // 'arr_view' copied by value
    // 'a' copied by value.
    // 'arr' already allocated on accelerator and captured by reference

    parallel_for_each(
        arr.extent,
        [=, &arr](index<1> idx) restrict(amp)
        {
            arr[idx] = arr_view[idx] + a;
            arr_view[idx]++;
        });

Let’s look at how the data is copied back out once the parallel_for_each completes. Only data from a C++ AMP data container can be copied back. Updates to everything else don’t make it back to the host side.

    // 'arr' needs to be explicitly copied back
    copy(arr, arr_data.begin());

    // 'arr_view' is synchronized back on first use
    std::cout << arr_view[0] << std::endl;

Here is another visualization of how the data is copied from the accelerator back to the host.

I hope this explains what happens under the hood of a parallel_for_each to make the variables you declare in host memory available on the accelerator. As always, feedback is welcome below or on our MSDN Forum.

Comments

Anonymous

March 03, 2012

Great article. I loved it. Microsoft has done a great service in coming up with this C++ Amp technology.

Anonymous

March 04, 2012

Thanks Peter, glad you like it!

Anonymous

October 15, 2012

As a recommendation, how difficult will it be to simplify array_view to derive type and rank from the underlying data source? Are there cases where view would/could change data type? We already have auto so the ability to derive types already exists... Feels unnecessary to repeat the same specification over and over again... Size could be made optional too: only in those cases where a programmer would want to work on a subset of data...

    vector<int> data(size);
    array_view<int, 1> view(size, data);

Anonymous

October 16, 2012

Thanks for your feedback, Alan. The extent can easily be inferred from the size of the container (since the C++ AMP open spec mandates that the container supports .data() and .size() members). The constructor cannot be used to infer the type of the array_view. However, another function which sets the array_view type to the value_type of the container can be used. Here is an example:

    template<typename T>
    array_view<typename T::value_type> make_array_view(T &container)
    {
        return array_view<typename T::value_type>(container.size(), container);
    }

This would change the code snippet above to

    vector<int> data(size);
    auto view = make_array_view(data);

If you think this will improve your code, feel free to add such an auxiliary function to your app. We will definitely consider your suggestion for the future. As an aside, one thing you can omit in the current release is the rank of an array / array_view since this is set to 1 by default.

Anonymous

October 16, 2012

This looks interesting (more like std api's), I will play with it to see which make the code look cleaner. Thank you poojanagpal!

Anonymous

January 07, 2013

Hi, Is it also possible to pass in arrays of class objects like:

    class myClass{
    public:
        int length;
        int width;
        myClass(int x, int y) { length = x; width = y; };
        int GetVolume() { return length + width; };
    };

.... use them on the GPU and then pass them back?

Anonymous

January 07, 2013

The type needs to meet the requirements of restrict(amp), see: blogs.msdn.com/.../restrict-amp-restrictions-part-2-of-n-compound-types.aspx.

Anonymous

August 26, 2015

Hi There! "On declaration memory for the array ‘arr’ is immediately allocated on the accelerator and any initial data must be explicitly copied from the host. In this case we copy in data using a data container such as a vector. Variables ‘a’ and ‘arr_view’ are not bound to an accelerator and are not copied over just yet." Please, is the above paragraph 'Okay' for the following code? Note that I have changed step 9.

- accelerator device(accelerator::default_accelerator);

- accelerator_view acc_view = accelerator().default_view;

- int a = 10;

- std::vector<int> view_data(10);

- std::iota(view_data.begin(), view_data.end(), 1);

- array_view<int, 1> arr_view(10, view_data);

- std::vector<int> arr_data(10);

- std::iota(arr_data.begin(), arr_data.end(), 10);

- array<int, 1> arr(10, arr_data.begin(), arr_data.end());

Anonymous

November 21, 2015

7 array<int, 1> arr(size, arr_data.begin(),acc_view); There is no such constructor in array class !?!