A Deep Dive into Dynamic Group Calculation and How it Affects SCOM Performance


I would first like to give a special thanks to John Mckeown and Nick Masiuk, both of whom provided major contributions to the work described below.

For those that know SCOM, it isn’t necessarily the fastest application out there, particularly when it comes to console performance. Troubleshooting that can be painful, though the fix can typically be traced to adding resources somewhere in the environment. Needless to say, group calculation is not the first thing one thinks of. In this case, however, we were working on some rather perplexing performance issues that simply could not be explained away by adding more RAM or using faster disks, as both were already well above Microsoft’s recommendations.

Over the course of troubleshooting, we used the following script. It queries a few SQL Server dynamic management views for the most expensive statements, so you can see what types of transactions are taking up the majority of your time. Before you take this and start using it, there are a few things worth noting:

- This script is somewhat expensive in terms of resources. We did this testing mainly against development environments. It will work in a production environment, but if you can do your tests in a non-production environment, that would be better.

- There are some configurable parameters. The DB name is straightforward, but note that the script only grabs the last 10 minutes’ worth of transactions (the dateadd(mi,-10,getdate()) window) and only looks for transactions that ran 2 or more times (qs.execution_count >= 2). Both can be changed by editing the values accordingly.

- I’m not quite sure how this grabs data from SQL, but there does seem to be a lag between when a transaction is done and when it starts showing in the dynamic views we are querying.

    Declare @oltp_db_name as varchar(128);
    -- ======================================
    -- PARAMETERS
    --
    set @oltp_db_name = 'OperationsManager';
    -- ======================================
    select * from (
        select db.value AS dbid,
            COALESCE(DB_NAME(st.dbid), DB_NAME(CAST(db.value as int))+'*') AS DBNAME
            , qs.execution_count
            , qs.total_worker_time as cpu_time
            , qs.last_worker_time as last_execution_cpu_time
            , qs.total_worker_time/qs.execution_count as avg_cpu_time
            , substring(st.text, (qs.statement_start_offset/2)+1
                , ((case qs.statement_end_offset
                        when -1 then datalength(st.text)
                        else qs.statement_end_offset
                    end - qs.statement_start_offset)/2) + 1) as statement_text
            , qs.last_execution_time
            , qs.creation_time as plan_created_on
            , qp.query_plan
            , qs.total_elapsed_time/1000.0/1000.0 as total_elapsed_time_sec
            , (qs.total_elapsed_time/qs.execution_count)/1000.0/1000.0 as avg_elapsed_time_sec
            , qs.last_elapsed_time/1000.0/1000.0 as last_elapsed_time_sec
            , qs.min_elapsed_time/1000.0/1000.0 as min_elapsed_time_sec
            , qs.max_elapsed_time/1000.0/1000.0 as max_elapsed_time_sec
            , qs.total_clr_time, qs.last_clr_time
            , qs.min_clr_time, qs.max_clr_time
            , qs.plan_handle
            , qs.sql_handle
        from sys.dm_exec_query_stats as qs
        cross apply sys.dm_exec_query_plan (qs.plan_handle) as qp
        cross apply sys.dm_exec_sql_text(qs.sql_handle) as st
        outer apply sys.dm_exec_plan_attributes (qs.plan_handle) as db
        where qs.last_execution_time between dateadd(mi,-10,getdate()) and getdate()
            and qs.execution_count >= 2
            and db.attribute = 'dbid'
            and db.value = (select db_id(@oltp_db_name))
    ) a
    --where statement_text like '%from Cnsmr_accnt_ar_log this_ left outer join Cnsmr_accnt ca5_ on this_.cnsmr_accnt_id=ca5_.cnsmr_accnt_id inner join Usr%'
    --where statement_text like '%INSERT TEXT SEARCH HERE IF YOU WANT TO CHECK SPECIFIC CALCUlATIONS%'
    order by total_elapsed_time_sec desc

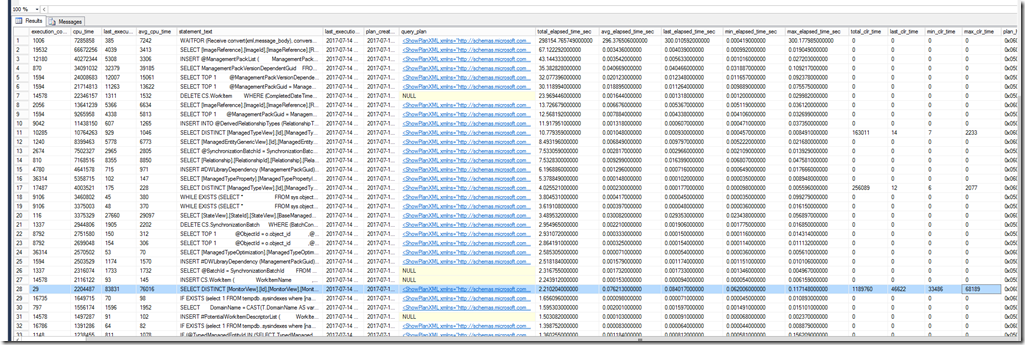

Below is an example of how the output looks in my lab:

This is, for the most part, healthy. You can see from the total_elapsed_time_sec and avg_elapsed_time_sec columns that very few transactions have excessive run times. The top transaction, in my case, is the only thing consistently taking a while to run. You can further note that its average and last run values are very close to 300 seconds, which is consistent with an operation that is timing out. The statement_text column can be used to see what SQL is running. While you won’t see the values of variables that are being passed, you will see the SQL statements being run, which allowed us to isolate what was going on. The highlighted transaction in my screenshot is a CLR call that is being generated by a view.
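The reading of the output described above can be sketched in a few lines of Python. This is only an illustration, not part of the original script: the `StatementStats` class, the `near_timeout` and `suspects` helpers, the 5% tolerance, and the sample rows are all hypothetical. Only the column names and the 300 second figure come from the text above.

```python
from dataclasses import dataclass


@dataclass
class StatementStats:
    """One row of the script's output (only the columns used here)."""
    statement_text: str
    execution_count: int
    avg_elapsed_time_sec: float
    last_elapsed_time_sec: float
    total_clr_time: int  # microseconds, as reported by sys.dm_exec_query_stats


def near_timeout(row, timeout_sec=300.0, tolerance=0.05):
    """True when both the average and the last run sit within `tolerance` of the timeout."""
    floor = timeout_sec * (1.0 - tolerance)
    return row.avg_elapsed_time_sec >= floor and row.last_elapsed_time_sec >= floor


def suspects(rows, timeout_sec=300.0, tolerance=0.05):
    """Rows that look like repeated timeouts, CLR-heavy statements first."""
    hits = [r for r in rows if near_timeout(r, timeout_sec, tolerance)]
    return sorted(hits, key=lambda r: r.total_clr_time, reverse=True)


# Made-up sample rows, purely to exercise the helpers.
rows = [
    StatementStats("SELECT ... group membership ...", 4, 299.8, 300.1, 1_150_000_000),
    StatementStats("SELECT ... alert view ...", 120, 0.4, 0.3, 0),
    StatementStats("SELECT ... state view ...", 3, 298.9, 299.5, 0),
]
for r in suspects(rows):
    print(f"{r.avg_elapsed_time_sec:7.1f}s  clr={r.total_clr_time}  {r.statement_text}")
```

Sorting by total_clr_time is a design choice for this sketch: in our case the statements that kept hitting the timeout were mostly CLR calls, so those float to the top.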

In our case, we saw a number of transactions hitting that magic 300 second value, most of which were CLR calls, and most of which were like the one I have highlighted on line 28. I’m not a SQL expert, to be clear, but CLR times (as explained to me) are essentially transactions coming from an external source to SQL (in the highlighted example, the SCOM console, which uses SQL CLR types as a dependency). Looking at the statement text, we saw that these transactions were either group calculation requests or views filtered by the same groups. While we couldn’t see the specific data being requested, we could see enough of the logic to tell that both the groups and the views scoped by those groups were the offending queries, which eventually allowed us to start doing some isolation work. Here’s what we learned:

- Certain types of dynamic logic behave differently in terms of their performance. This is not surprising when you think about it, but a close look showed us that the difference was in orders of magnitude. Using an ‘equals’ statement in a group calculation generates a straight SQL query, but using more advanced operations such as contains, matches wildcard, or matches regular expression generates a CLR type call using the same DBO function (matches_regular_expression). The performance difference was very noticeable: we saw transaction times increase exponentially (in our case, by powers of 10). Unfortunately, these operators all use the same function on the back end, so none of them performed noticeably better than the others.

- This was further complicated by the way the matches_regular_expression function worked. Calls to the DB essentially invoked this function once for each condition in the group calculation (i.e. if you had 10 OR conditions, it would loop through the entire data set using matches_regular_expression 10 times).

- This is further exacerbated by large groups. To me, this is more or less common sense, but an intellectual understanding of it is one thing and seeing the differences in a real world implementation is another. This was a large environment, and the groups in question were pulling a large number of contained objects from a large number of servers. As the group size increased, these queries began to time out consistently (and, by proxy, it appeared that they were being re-run). Groups with multiple filters or OR statements saw exponentially higher run times, as it appears that the SQL calls loop back through the data for each criterion. Naturally, this makes large groups with multiple inclusion/exclusion criteria very expensive.

- Likewise, because it is a CLR call, the call is essentially initiated against the SQL server from the management server. This means that network bandwidth issues can have a direct effect on group calculation (as well as the corresponding config generation with overrides targeted at a group).

- Not to be outdone, views that were scoped by these groups essentially redrew the group membership as part of the view. We could see that by looking at the statement text for the views, where the same group query was included as part of the view’s query. Effectively, this means that the same query is being run both for the group calculation and for the view associated with said group. If you’ve ever had a large view that does not populate all of the objects in it, I suspect (though I haven’t proven it yet) that the ultimate cause is these CLR queries timing out.
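To make the equals versus pattern-matching difference above more concrete, here is a toy model in Python. It is not SCOM code and it does not reproduce the timings we saw: the helper names and the `SRV` server names are invented for illustration, and Python's `re` module merely stands in for the CLR function. It only assumes, as described above, that an equals condition behaves like a direct keyed lookup while each OR-ed pattern condition scans the whole data set once.

```python
import re


def members_by_equals(index, value):
    """Equality: one keyed lookup, analogous to a plain SQL '=' predicate."""
    return index.get(value, [])


def members_by_regex(names, patterns):
    """OR-ed pattern conditions: one full pass over `names` per pattern.

    Returns the matching names and the number of regex evaluations performed.
    """
    matched = set()
    evaluations = 0
    for pattern in patterns:          # one pass per OR condition
        compiled = re.compile(pattern)
        for name in names:            # every object is tested on every pass
            evaluations += 1
            if compiled.search(name):
                matched.add(name)
    return matched, evaluations


# Hypothetical inventory of 20,000 objects.
names = [f"SRV{n:05d}" for n in range(20_000)]
index = {}
for name in names:
    index.setdefault(name, []).append(name)

print(members_by_equals(index, "SRV00042"))   # ['SRV00042']
matched, evaluations = members_by_regex(names, [r"^SRV0004\d$", r"^SRV1999\d$"])
print(len(matched), evaluations)              # 20 40000
```

Even in this simplified model, two pattern conditions cost 40,000 evaluations to find 20 members, and every additional OR condition adds another full pass.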

Ultimately, I don’t think many smaller environments have much to be concerned about, as generating groups with many objects is fairly difficult to do. However, in a large environment, it is worth noting that the size of your dynamic groups likely matters, as does the logic you use to populate them. We didn’t test every type of call, but we were able to confirm that simple calls seem to avoid using CLR altogether. Naturally, they also run faster. As for larger groups running against larger data sets, we had a few lessons learned.

- Consider writing registry discoveries to populate classes. Like groups, this can go overboard, as SCOM has a budget for the number of classes defined per monitored agent, but a few classes in place of large groups would be beneficial. The same logic used to create a group could be used with a product such as SCCM DSC to populate a registry key in an organization-specific portion of the registry. A SCOM discovery could then be written that defines and populates a SCOM class based on the value of this key. There are a few best practices to consider. First, classes should not change frequently, which means these keys should be static. An occasional change isn’t a deal breaker, but frequent changes are a problem. Second, don’t go overboard with this. Creating a few custom classes to get rid of a few expensive groups is fine, but creating classes for the sake of creating them, without any consideration for how they are used, is a poor way to manage the environment. A group is technically a class, but by using a registry key to define a class instead of a group, you eliminate the need for group calculation and move some of this work to the DB instead of the management server.

- When defining groups, use the narrowest class possible. This is already a best practice, but simply put, don’t use Windows Computer to define a subset of domain controllers. Use the Windows Domain Controller class. This gives SCOM a much smaller subset of data to search through, thus reducing load for group calculations. Obviously, there will be scenarios where a broader class is needed. I’m not saying to never do this, but there is value in putting some thought into what you are trying to accomplish, how to do it, and whether or not your solution is efficient. Efficiency matters in large environments.

- For larger data sets, try to reduce the number of OR statements, for example by using a single broader string for contains, matches wildcard, or matches regular expression checks. The string “ABCDE” will return fewer results than “ABCD”, so the broader string may pull in a few items you then need to exclude, but in a large data set it will be more efficient if you can avoid running additional iterations of that function.

- For smaller dynamic groups, use the equals operator, as it effectively gets rewritten to a simple SQL statement. This allows for a direct query to get the exact data needed instead of a more expensive query that has to loop through the entire data set.
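One possible way to cut down on OR statements is to fold several "contains" strings into a single regular expression that uses alternation. The Python sketch below only demonstrates that the two forms select the same names. The `combine_contains` helper and the server names are hypothetical, and we did not verify that a combined expression behaves or performs this way inside a SCOM group definition, so treat it as an idea to test rather than a confirmed fix.

```python
import re


def combine_contains(substrings):
    """Build one pattern equivalent to 'contains A OR contains B OR ...'."""
    # re.escape keeps characters such as '.' literal, as 'contains' would.
    return "|".join(re.escape(s) for s in substrings)


separate = ["SQLPRD", "SQLDEV", "SQLTST"]     # three OR-ed conditions
combined = [combine_contains(separate)]       # one condition

# Hypothetical object names.
names = ["NYC-SQLPRD-01", "NYC-WEB-01", "LON-SQLTST-07", "LON-SQL.DEV-02"]

by_or = {n for s in separate for n in names if s in n}
by_regex = {n for n in names if re.search(combined[0], n)}

print(combined[0])                                       # SQLPRD|SQLDEV|SQLTST
print(by_or == by_regex, len(separate), len(combined))   # True 3 1
```

If each condition really does cost one pass over the data, as it appeared to in our testing, three conditions collapse into one pass here. Regular expression syntax differs between engines, so an expression built this way should be checked before it goes anywhere near a production group.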

Comments

- Anonymous

July 21, 2017

https://rburri.wordpress.com/2009/01/14/dynamic-group-membership-authoring-and-performance-impact-on-rms/

- Anonymous

July 21, 2017

I did a quick search on this when I wrote it up, so my apologies for not seeing it. This was a fun little exercise as it's not often I get to nerd it up with a customer. That said, this is a good read and well worth reading. I'm not sure how much has changed now that OpsMgr 2012 no longer uses the RMS, but certainly worth the read.

- Anonymous

July 29, 2017

My dated post does still apply, albeit the group calculation is now done by one of the members of the "All Management Servers" pool. Long gone are the days of RMS as a single point of failure. However, that makes spotting the exception events trickier. Nathan's post does provide great insight into what is going on behind the scenes on the SQL end. Gone nerdy indeed and I love it! The list of things to consider when doing dynamic group calculation is great. From my side I'd just add that "maxDepth" can be used to make the heavy lifting CLR call at least a little bit lighter when doing contains / contained calls (or at least I hope it does - maybe one could use Nathan's script to verify that...)
