VMM Script Series, Part 7 – Separate VMs

I’m publishing some of the scripts I have written for VMM. Each is based on a specific customer ask or a conversation that led me to lose sleep trying to solve the problem myself. I am very interested in your feedback, especially if you would like to offer a change to improve efficiency or effectiveness.

Separate VMs

There isn’t a really good way in the Failover Clustering console to prevent 2 VMs from migrating to the same host. For example, let’s say you have 2 VMs that share a SQL cluster, or an NLB web solution, and to provide fault tolerance you would like to ensure that the two VMs are always on separate hardware. That way, if there is ever a physical hardware outage on one of the hosts, the service will still be available with no downtime. I set out to solve this problem. My first instinct was to leverage PRO or even just an auto-resolving alert in OpsMgr, but I realized that at minimum I was going to need a script that ran on the client to check whether the 2 VMs were co-located. Well, if a script is going to run, it might as well solve the problem right then and there, since we know we always want the issue resolved.

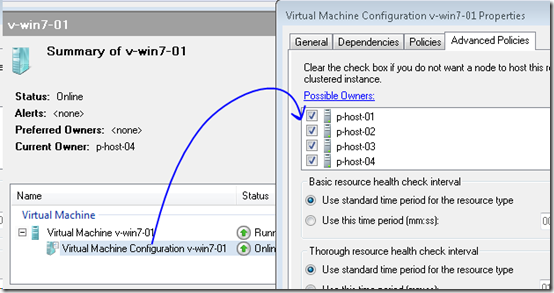

Before we go any further, understand there is another way to solve this. If you view the Properties dialog of the VM settings in the Failover Clustering console, you can prevent the application resource from ever coming online on specific nodes. You could easily allow 1 VM to come online only on 2 specific nodes, and the other VM to come online only on 2 other specific nodes. If you have a large cluster, this option is worth considering because it leaves no chance of the VMs ever ending up co-located. In a small cluster, you may not have enough nodes to follow this method and still provide adequate hosts for failover. This is why I wrote the script. If I were an admin trying to implement a solution like this, I would consider all my options given what I know about the environment.
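For reference, the possible-owner restriction described above can also be set from PowerShell with the FailoverClusters module instead of the dialog. This is only a sketch: the resource names ("Virtual Machine v-win7-01") and the node names are assumptions, so check what your cluster actually reports before running anything like it.

```powershell
Import-Module FailoverClusters

# Assumed resource and node names; list the real ones with
# Get-ClusterResource and Get-ClusterNode first.
Get-ClusterResource "Virtual Machine v-win7-01" | Set-ClusterOwnerNode -Owners "node1", "node2"
Get-ClusterResource "Virtual Machine v-win7-02" | Set-ClusterOwnerNode -Owners "node3", "node4"

# Show the possible owners that are now configured for the first VM.
Get-ClusterResource "Virtual Machine v-win7-01" | Get-ClusterOwnerNode
```

With owner lists that do not overlap, the two VMs can never land on the same node, which is the trade-off discussed above: full separation at the cost of fewer failover targets per VM.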

This is the dialog:

So the alternate method I have been testing is to allow the VM to move to any node, but schedule a script on the VMM server to check in and determine whether the VMs are on the same node. If they are, the script will Live Migrate one of them to another host using Best Placement. The VMs are listed as items in an array at the top of the script. In the case below, you see I selected “v-win7-01” and “v-win7-02”. I have scheduled the script in Windows Task Scheduler to run every 60 seconds.
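The co-location check itself is just a grouping operation. Here is a minimal sketch of the idea that runs without VMM: the objects are stand-ins for what Get-VM returns, and the host names and the third VM are made up for illustration.

```powershell
# Stand-in objects. In the real script these come from Get-VM, and VMHost
# is a host object rather than a string. "v-win7-03" and the host names
# are hypothetical.
$vms = @(
    (New-Object PSObject -Property @{ Name = "v-win7-01"; VMHost = "host-a" }),
    (New-Object PSObject -Property @{ Name = "v-win7-02"; VMHost = "host-a" }),
    (New-Object PSObject -Property @{ Name = "v-win7-03"; VMHost = "host-b" })
)

# Group by host and keep only the hosts holding more than one monitored VM.
$coLocated = @($vms | Group-Object -Property VMHost | Where-Object { $_.Count -gt 1 })

foreach ($group in $coLocated) {
    $names = ($group.Group | ForEach-Object { $_.Name }) -join ", "
    Write-Output ("{0} holds {1} monitored VMs: {2}" -f $group.Name, $group.Count, $names)
}
```

Any host that shows up in the result needs all but one of its listed VMs moved elsewhere.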

In other words, this does not guarantee the VMs will never end up on the same node; it only reduces that exposure to a few minutes. I say a few minutes because if the VM is moved outside of VMM, it could take a minute for VMM to discover the change to the VM properties, and then the script would run on the next 60-second interval. If for some reason the VM cannot be moved, the VMs stay together. In a smaller environment, this is actually a desirable outcome: if only 1 node is available in the cluster, both VMs are still allowed to come online, even though there is a risk involved.
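One way to make the "cannot be moved" case explicit is to check for a usable target before attempting the migration. The sketch below uses stand-in rating objects rather than real Get-VMHostRating output. The helper name Select-TargetHost is hypothetical, and treating a rating of 0 as "not suitable" is an assumption you should confirm against your own VMM ratings.

```powershell
# Hypothetical helper: return the name of the best-rated host other than the
# current one, or $null when no other host is suitable.
function Select-TargetHost {
    param($Ratings, [string]$CurrentHost)

    # Assumption: a rating of 0 means the host is not a valid target.
    $candidates = @($Ratings |
        Where-Object { $_.Name -ne $CurrentHost -and $_.Rating -gt 0 } |
        Sort-Object -Property Rating -Descending)

    if ($candidates.Count -eq 0) { return $null }
    return $candidates[0].Name
}

# Stand-in rating data with made-up host names.
$ratings = @(
    (New-Object PSObject -Property @{ Name = "host-a"; Rating = 5 }),
    (New-Object PSObject -Property @{ Name = "host-b"; Rating = 3 }),
    (New-Object PSObject -Property @{ Name = "host-c"; Rating = 0 })
)

$target = Select-TargetHost -Ratings $ratings -CurrentHost "host-a"
if ($target) {
    Write-Output "Would move to $target"
} else {
    Write-Output "No other host available; leaving the VMs together"
}
```

When the helper returns nothing, the script can simply skip the move and try again on the next scheduled run.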

Notes

Hint: when you set up the Scheduled Task, you actually want to run powershell.exe and pass the script (with full UNC path, if applicable) as an argument.
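If you would rather create the task from a prompt than click through Task Scheduler, something like the following should work. The UNC path and task name are placeholders I made up, and the task will run under whatever account creates it unless you add /RU; treat it as a starting point.

```powershell
# Hypothetical UNC path and task name; replace with your own.
$scriptPath = "\\fileserver\scripts\SeparateVMs.ps1"
$taskName   = "SeparateVMs"

# The program to run is powershell.exe; the script is passed with -File.
$action = "powershell.exe -NoProfile -File $scriptPath"

# Create a task that repeats every minute.
schtasks.exe /Create /TN $taskName /TR $action /SC MINUTE /MO 1
```

Depending on your execution policy, a script on a UNC path may be blocked, so you may also need to sign the script or adjust the policy on the VMM server.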

In each of the scripts for this series, I have noted any manually assigned variables at the top of the script just under the header. For this script you will want to update your VMM server name.

In each of the scripts for this series, I have added a line to load the VMM snap-in. This way it can be run from any machine where the VMM console is installed, including a workstation, by right-clicking the file and choosing “run”. If you run the script from within VMM, you will get a few lines of error text saying the snap-in is already loaded. You can either ignore this, or comment out the line if it bothers you.
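A third option is to load the snap-in only when it is not already present in the session. This is a small sketch of that guard, not something the script above currently does:

```powershell
$snapIn = "Microsoft.SystemCenter.VirtualMachineManager"

# Get-PSSnapin returns nothing (and, with SilentlyContinue, no error text)
# when the snap-in has not been added to the current session.
if (-not (Get-PSSnapin -Name $snapIn -ErrorAction SilentlyContinue)) {
    Add-PSSnapin $snapIn
}
```

With this in place the same file runs cleanly both from a plain PowerShell window and from the VMM command shell.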

Screen Shots

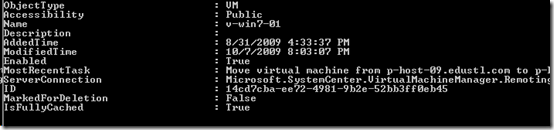

Output of move-vm when running the script manually.

Script

Disclaimer: this script is not supported in any way. I have posted the code rather than the .ps1 file so that you can review it, modify it to make it your own, and test it before trusting it in a production environment. It is now your code; I am not responsible for its use.

    # ------------------------------------------------------------------------------
    # Separate VMs
    # ------------------------------------------------------------------------------
    # blogs.technet.com/offcampus
    # version 1.0
    #
    # Description
    # Useful as a scheduled task on the VMM server to separate 2 VMs across hosts
    #
    # ------------------------------------------------------------------------------

    # All variables in the script with customizable values are mapped here for convenience
    $VMMServerName = "v-vmm-01"
    $HostGroupName = "All Hosts"
    $VMGroup = "v-win7-01", "v-win7-02"

    # Load Snap-Ins
    Add-PSSnapin Microsoft.SystemCenter.VirtualMachineManager

    # Begin Script
    Get-VMMServer $VMMServerName | out-null

    # Look up each listed VM, then keep only the hosts that hold more than one of them
    $VM = foreach ($Item in $VMGroup){get-vm | ? {$_.name -eq $Item}}
    $List = $VM | group-object -property "VMHost" | ? {$_.count -gt 1}

    foreach ($Names in $List) {
        # Move all but one of the co-located VMs; the last VM in the group stays put
        $Cycle=1
        while ($Cycle -lt $Names.count) {
            # Pick a different VM on each pass (the original always picked the first one)
            $VMToMove = $Names.group[$Cycle - 1]
            # Rate every other host in the host group, best rating first
            $VMHostRating = Get-VMHostRating -VM $VMToMove -VMHostGroup $HostGroupName -ismigration | ? {$_.Name -ne $VMToMove.VMHost} | sort -property rating -descending
            # Live Migrate to the top-rated host without waiting for the job to finish
            Move-VM -vm $VMToMove -vmhost $VMHostRating[0].name -RunAsynchronously -BlockLMIfHostBusy -StartVMOnTarget
            $Cycle++
        }
    }