The SHRINKDATABASE Evil

I mentioned in my previous Fragmentation post to not use the SRHINKDATABASE during the maintenance, and to switch off auto-shrink option. Some of you probably believe me and just don’t use it, but some of you wait an explanation and don’t accept advises based on promises :)

Well, let me prove you what the shrinkdatabase command can do for you to make your life.. everything else but not easier. The command actually sound very good – why not shrink the file; it has so much free space in the middle, when I delete some records or a whole table; I need the disk space, and I could make my database compact; etc. Those are the questions you could ask yourself and think that shrinkdatabase will be an answer, but neither of them actually are resolved using shrinkdatabase. Totally the opposite result is achieved. Actually I didn’t realize what it is doing until my MCM Training where we did some demos and Kimberly mentioned it in the class. I decided to use some of them and to add some more. So let’s do some testing:

1. Lets create a database ShrinkDemo

CREATE DATABASE ShrinkDemo;

GO

USE ShrinkDemo;

GO

SET NOCOUNT ON;

GO

2. Let’s create a table in the beginning of the database and fill with data

-- Create the 10MB first table in the begining of the data file

CREATE TABLE firstinfile(

c1 INT IDENTITY, c2 VARCHAR(8000))

GO

-- Fill up the firstinfile table

DECLARE @a INT;

SELECT @a = 1;

WHILE (@a < 1280) -- insert approx 10MB

BEGIN

INSERT INTO firstinfile VALUES (

REPLICATE ('a', 5000));

SELECT @a = @a + 1;

END;

GO

3. Create the second table which will be after the first in the database file

CREATE TABLE secondinfile(

c1 INT IDENTITY, c2 VARCHAR(8000))

GO

--create an index

CREATE CLUSTERED INDEX second_cl

ON secondinfile(c1);

GO

-- Fill in the secondinfile table

DECLARE @a INT;

SELECT @a = 1;

WHILE (@a < 1280) -- insert approx 10MB

BEGIN

INSERT INTO secondinfile VALUES (

REPLICATE ('a', 5000));

SELECT @a = @a + 1;

END;

GO

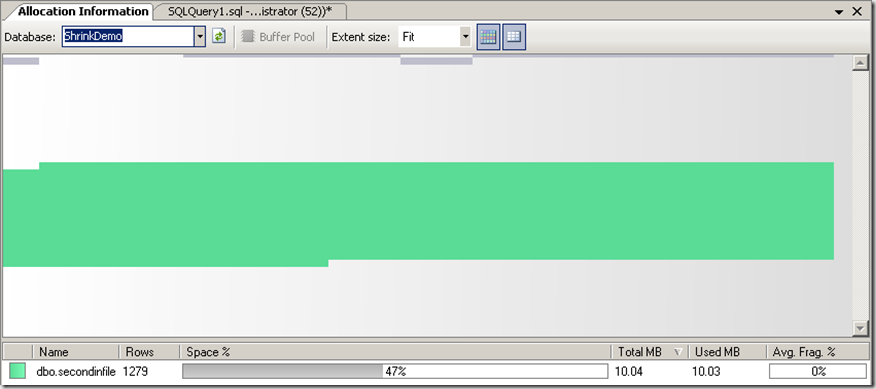

4. Now you can use the Allocation Info add-in () It is not very precise but you can still use it to take a look at your objects fragmentation inside the database.

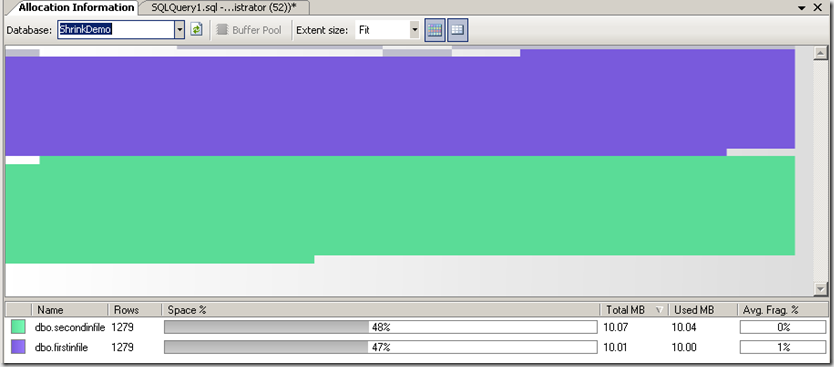

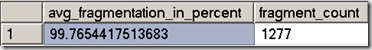

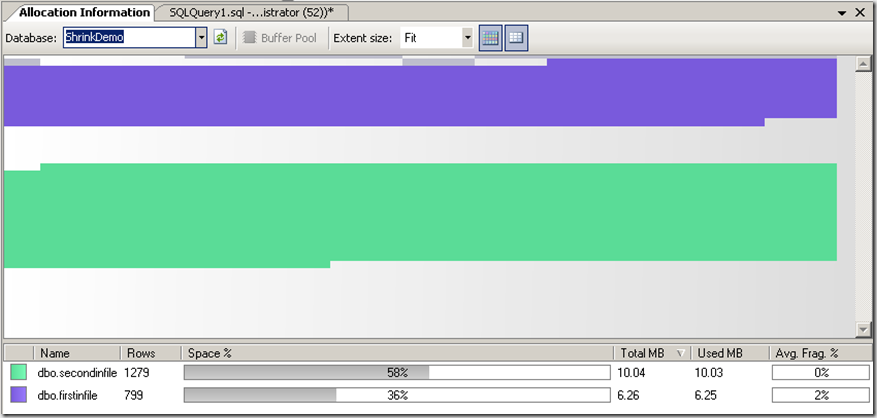

I like this picture! Now you can recognize the placement inside the data file of both tables one after another, and see that the Avg Frag % is around 0, they are not fragmented in this moment.

We can check fragmentation using this code and the DMVs also

SELECT

avg_fragmentation_in_percent,

fragment_count

FROM sys.dm_db_index_physical_stats (

DB_ID ('ShrinkDemo'),

OBJECT_ID ('secondinfile'),

1,

NULL,

'LIMITED');

GO

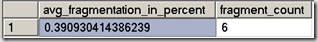

The result is the same

5. Now lets remove the firstinfile table creating a 10MB emtpy space in the beginning of the file

The secondinfile table is still 0% fragmented.

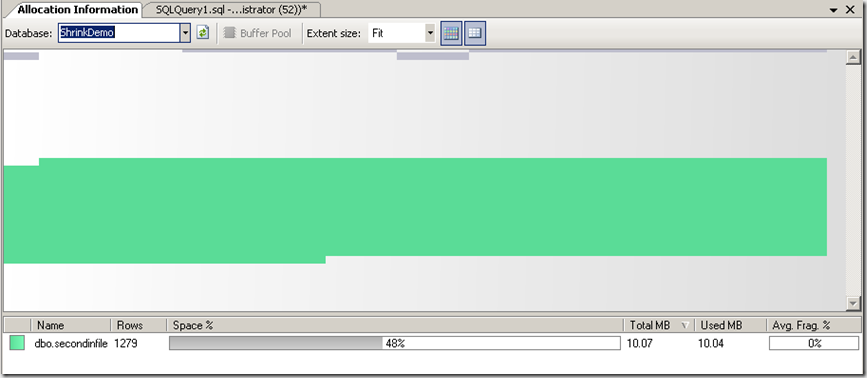

6. Now the next ‘logical’ action could be let’s shrink the database to get rid of the empty space.

DBCC SHRINKDATABASE (ShrinkDemo);

GO

Now what it the result of the srhinking?

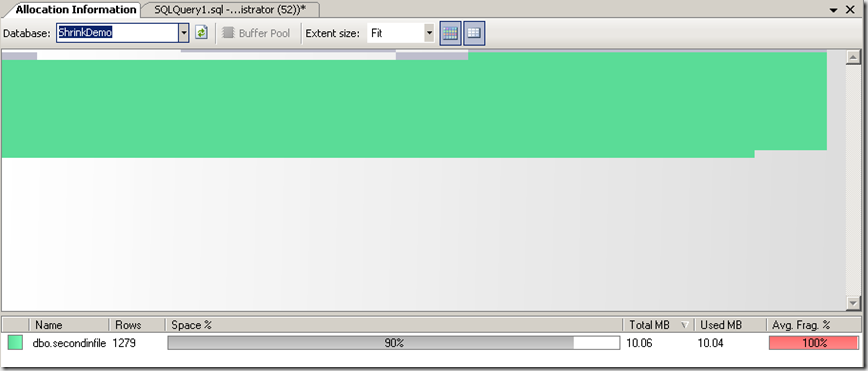

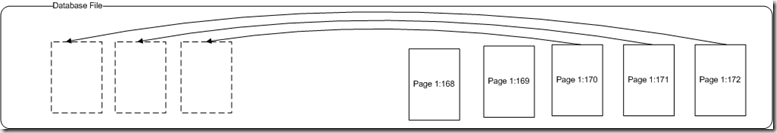

Well, the secondinfile table is in the beginnig of the file, BUT note the Avg Frag % !! It’s 100%! Right after creating a Clustered index for this object!

Here is the result from the DMV query

1277 are total number of fragments (number of blocks with continuing pages in order). We have a total of 1279 rows, which means that almost every page is a separate fragment, they are not in continuous order.

Why is this fragmentation occurs after shrinking the database. It is actually a logical scan fragmentation when the order of pages in the data file does not confirm to the order in allocation map of the object. Which means the object pages are totally mixed and not in continuous order as they should be. This prevents from executing an optimized read ahead reading of pages, such destructing the reading performance of scans. The result is such because the shrinkdatabase do the following – it gets the last page of the object and move it at the beginning of the file or empty space, it then takes the page before the last one and move it after the first was placed, and so on. I tried to visualize it on the picture bellow:

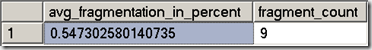

So, we ended up with totally fragmented table just after creating the clustered index. To make the picture even more complicated I will continue with focusing on the size of the database. After shrinking the database is 11MB, which means it has been reduced in size and it doesn't have any empty space in it. But achieving the desired size reducing we gain fragmentation. So we have to fix this further. To eliminate the fragmentation I will reorganize the index, in this case I could use index reorganize, because it eliminates just the logical fragmentation. and orders object pages.

7. So I execute the following

alter index second_cl on secondinfile reorganize

As a result

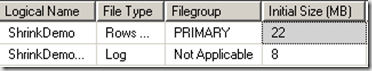

But what happened to the database size? It actually increased to 13MB, which is not too much because of reorganizing the index. But if I choose further to rebuild the index the database size increases to 22MB, which is approx 1.5 times the table. So we are actually reached the initial size the database was before shrinking (containing 10MB table and 10MB empty space) which mean we did nothing but making unnecessary efforts and actions.

More over, the allocation map of the database object looks like in the picture bellow. It is because rebuilding index creates a parallel indexing Btree which it populates, after that it switches it as a new cluster index. That’s why we need a free space in the database, else the SQL server will increase the database allocating and initializing new chunks.

And the final curious think is what would happen with allocation objects map if I create again the firstinfile table and populate it with 799 rows. Back to the beginning :)

Well, you probably have some questions at this time

Why there is an auto-shrink option?

Honestly I don’t know. It is absolutely un-useful. Imagine this shrink action on every 30 min executing on your database. It could be a disaster for your database IO operations, fragmentation and respectively performance. It is probably one of those legacy options that remain trough versions. Anyway, don’t ever switch this option on.

When actually should I use dbcc shrinkdatabase?

Well, I really couldn’t find a use case for shrinkdatabase. But there is a scenario to use shrinkfile statement. For example: you have deleted a large amount of data in your data file and some small objects remain. Then you can create new filegroup with a new data file (.ndf) in it, you rebuild clustered index on your existing objects on the new filegroup. This rebuild actually moves objects to the new filegroup. Then you can use shrinkfile with emtpyfile option to truncate your old data file, and then remove it from the database.

How can I deal with empty space inside the database?

You don’t have to. The empty space in the database will be used in your further operations as showed above. Empty space is not an issue. If it is a large empty area as the example above it will be used for the next created object. If it is a small empty space as soon as you rebuild indexes it will be outside of the object pages. So don’t worry about empty spaces in your database, monitor the object fragmentation and fix it regularly. If you insist to remove those spaces use the shrinkfile as explained in the above question.

Comments

Anonymous

January 01, 2003

PingBack from http://www.keyongtech.com/5222341-calling-a-stored-procedure-fromAnonymous

May 16, 2011

Hi, thanks for this - came across whilst googling. Very useful.