Transfer Learning for Text using Deep Learning Virtual Machine (DLVM)

This post is by Anusua Trivedi, Data Scientist, and Wee Hyong Tok, Data Scientist Manager, at Microsoft.

Motivation

Modern machine learning models, especially deep neural networks, can often benefit quite significantly from transfer learning. In computer vision, deep convolutional neural networks trained on large image classification datasets such as ImageNet have proved useful for initializing models on other vision tasks, such as object detection (Zeiler and Fergus, 2014).

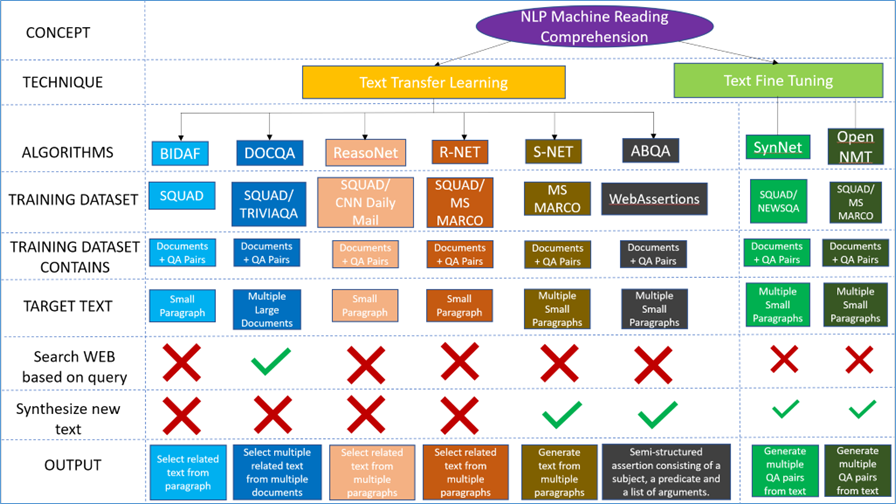

But how can we leverage transfer learning for text? In this blog post, we attempt a comprehensive study of the existing text transfer learning literature in the research community. We explore eight popular machine reading comprehension (MRC) algorithms (Figure 1), and we evaluate and compare six of them: BIDAF, DOCQA, ReasoNet, R-NET, SynNet and OpenNMT. We initialize our models with weights pre-trained on different source question answering (QA) datasets and show how standard transfer learning can be applied to a large target corpus. For the test corpus, we chose the book Future Computed by Harry Shum and Brad Smith.

We compared the performance of the transfer learning approach for creating a QA system for this book using these pretrained MRC models. In our evaluation scenario, the Document-QA model outperforms the other transfer learning approaches, namely the BIDAF, ReasoNet and R-NET models. You can test the Document-QA model scenario using the Jupyter notebook here.

We also compared the performance of the fine-tuning approach for creating a QA corpus for this book using two of these pretrained MRC models. In our evaluation scenario, the OpenNMT model outperforms the SynNet model. You can test the OpenNMT model scenario using the Jupyter notebook here.

Introduction

In natural language processing (NLP), domain adaptation has traditionally been an important topic for syntactic parsing (McClosky et al., 2010) and named entity recognition (Chiticariu et al., 2010), among others. With the popularity of distributed representations, pre-trained word embedding models such as word2vec (Mikolov et al., 2013) and GloVe (Pennington et al., 2014) are also widely used for natural language tasks.

Question answering is a long-standing challenge in NLP, and the community has introduced several paradigms and datasets for the task over the past few years. These paradigms differ from each other in the type of questions and answers and in the size of the training data, which ranges from a few hundred to millions of examples.

In this post, we are particularly interested in the context-aware QA paradigm, where the answer to each question can be obtained by referring to its accompanying context (i.e., a paragraph or a list of sentences). For human beings, reading comprehension is a basic task, performed daily. As early as elementary school, we can read an article and answer questions about its key ideas and details. But for AI, full reading comprehension is still an elusive goal. Therefore, building machines that can perform machine reading comprehension is of great interest.

Machine Reading Comprehension

MRC is about answering a query about a given context paragraph. MRC requires modeling complex interactions between the context and the query. Recently, attention mechanisms have been successfully extended to MRC. Typically, these methods use attention to focus on a small portion of the context and summarize it with a fixed-size vector, couple attentions temporally, and/or often form a unidirectional attention. It has been shown that these MRC models perform well for transfer learning and finetuning of text for a new domain.
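As a rough illustration of the unidirectional attention described above, the NumPy sketch below scores each context word against a single query vector, turns the scores into weights with a softmax, and collapses the context into one fixed-size vector. The dot-product scoring function and the toy dimensions are assumptions chosen for brevity; the published MRC models use learned and considerably more elaborate attention layers.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = x - np.max(x, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


def attend(context: np.ndarray, query: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Summarize a (T, d) context matrix into one d-dimensional vector.

    context: one row per context word (T words, d-dimensional embeddings).
    query:   a single d-dimensional query vector.
    Returns the attention weights, shape (T,), and the summary, shape (d,).
    """
    scores = context @ query      # (T,) dot-product relevance of each word
    weights = softmax(scores)     # (T,) non-negative, sums to 1
    summary = weights @ context   # (d,) attention-weighted average of the rows
    return weights, summary


rng = np.random.default_rng(0)
context = rng.normal(size=(6, 4))  # 6 toy "words" with 4-dim embeddings
query = rng.normal(size=4)         # a toy query vector
weights, summary = attend(context, query)
print(weights.round(3), summary.shape)
```

However many words the context contains, the output is a single d-dimensional vector. BiDAF, one of the models we evaluate, was designed to avoid this kind of early summarization by letting attention flow in both directions between the context and the query.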

Why Are MRC Approaches Important for Enterprises?

The use of chatbots within enterprises has been on the rise for some time now. Research and industry have turned toward conversational AI approaches to advance such chatbot scenarios, especially in complex use cases such as banking, insurance and telecommunications. One of the major challenges for conversational AI is the need to understand complex sentences of human speech in the same way that humans do. Real human conversation is never straightforward: it is full of imperfections consisting of multi-string words, abbreviations, fragments, mispronunciations and a host of other issues.

MRC is an integral component for solving the conversational AI problems we face today. Current MRC approaches are able to answer objective questions such as "What causes rain?" with high accuracy, and such approaches can be used in real-world applications such as customer service. MRC can be used both to navigate and to comprehend such "give-and-take" interactions. Some common applications of MRC in business include:

- Translating conversations from one language to another.

- Automatic QA capability across different domains.

- Automatic reply of emails.

- Extraction of embedded information from conversations, for targeted ads/promotions.

- Personalized customer service.

- Creating personality and knowledge for bots, based on conversation domains.

Such intelligent conversational interfaces are the simplest way for businesses to interact with devices, services, customers, suppliers and employees everywhere. Intelligent assistants built using MRC approaches can be taught and continue to learn every day. Business impact can include cost reduction by increasing self-service, improving end-user experience/satisfaction, delivering relevant information faster, and increasing compliance with internal procedures.

In this blog post, we evaluate different MRC approaches for providing automatic QA capability across different domains.

MRC Transfer Learning

Recently, several researchers have explored various approaches to attack the MRC transfer learning problem. Their work has been a key step towards developing some scalable solutions to extend MRC to a wider range of domains.

Currently, most state-of-the-art machine reading systems are built on supervised training data: they are trained end-to-end on examples that contain not only the articles but also manually labeled questions about the articles and the corresponding answers. With these examples, the deep learning-based MRC model learns to understand the questions and infer the answers from the article, which involves multiple steps of reasoning and inference. For MRC transfer learning, we consider six models, as shown in Figure 1.

MRC Fine Tuning

Despite the great progress of transfer learning using MRC, one key problem has been overlooked until recently: how does one build an MRC system for a very niche domain?

As noted above, most state-of-the-art machine reading systems are trained end-to-end on supervised data: articles together with manually labeled questions about them and the corresponding answers. From these examples, deep learning-based MRC models learn to understand the questions and infer the answers from the article, which involves multiple steps of reasoning and inference. This kind of MRC transfer learning works very well for generic articles. However, for many niche domains or verticals, such supervised training data does not exist.

For example, if we need to build a new machine reading system to help doctors find valuable information about a new disease, there may be many documents available, but there is a lack of manually labeled questions about such articles and their corresponding answers. This challenge is magnified by the need to build a separate MRC system for each disease and by the rapidly increasing volume of literature. It is therefore crucial to figure out how to transfer an MRC system to a niche domain where no manually labeled questions and answers are available, but a body of documents exists.

The idea of generating synthetic data to augment insufficient training data has been explored before. For the target task of translation, Sennrich et al. (2016) proposed generating synthetic translations from real sentences to refine an existing machine translation system. However, unlike machine translation, tasks like MRC require synthesizing both questions and answers for an article. Moreover, while the question is a syntactically fluent natural language sentence, the answer is mostly a salient semantic concept in the paragraph, such as a named entity, an action, or a number. Since the answer has a different linguistic structure than the question, it may be more appropriate to view answers and questions as two distinct types of data. A novel model called SynNet was proposed by Golub et al. (2017) to address this critical need. OpenNMT also has a fine-tuning method, implemented by Xinya Du et al. (2017).

Figure 1: MRC Transfer Learning literature survey

Note: icons in the figure indicate whether each feature is present or not present.

Training the MRC Models

We use the Deep Learning Virtual Machine (DLVM) as the compute environment, with an NVIDIA Tesla K80 GPU and the CUDA and cuDNN libraries. The DLVM is a specially configured variant of the Data Science Virtual Machine (DSVM) that makes it more straightforward to use GPU-based VM instances for training deep learning models. It is supported on Windows Server 2016 and the Ubuntu Data Science Virtual Machine. It shares the same core VM images (and hence all the rich toolsets) as the DSVM but is configured to make deep learning easier. All experiments were run on a Linux DLVM with 2 GPUs. We use TensorFlow, and Keras with a TensorFlow backend, to build the models. We installed all the dependencies in the DLVM environment with pip.

Prerequisites

For each model, follow Instructions.md in GitHub to download the code and install the dependencies.

Experimentation Steps

Once the code is set up in the DLVM:

- We run the code for training the model.

- This produces a trained model.

- We then run the scoring code to test the accuracy of the trained model.
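The post does not say which accuracy metric the scoring step reports. For extractive QA models trained on SQuAD, a common choice is exact match (EM) and token-level F1 between the predicted span and a reference answer, so the sketch below assumes that setup. Its normalization (lowercasing, stripping punctuation and the articles a/an/the, collapsing whitespace) follows the spirit of the official SQuAD evaluation script, but it is a simplified re-implementation, not that script.

```python
import re
import string
from collections import Counter

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def f1_score(prediction: str, reference: str) -> float:
    """Token-level F1 between a predicted span and a reference answer."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def evaluate(predictions: list[str], references: list[str]) -> dict[str, float]:
    """Average EM and F1, as percentages, over paired predictions/references."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return {"exact_match": 0.0, "f1": 0.0}
    n = len(predictions)
    em = sum(exact_match(p, r) for p, r in zip(predictions, references))
    f1 = sum(f1_score(p, r) for p, r in zip(predictions, references))
    return {"exact_match": 100.0 * em / n, "f1": 100.0 * f1 / n}


print(evaluate(["the Eiffel Tower", "1889"], ["Eiffel Tower", "in 1889"]))
```

For the two toy pairs, one prediction matches exactly and the other only partially, giving an exact match of 50.0 and an F1 of about 83.3. F1 is usually the more forgiving of the two, which matters for answers whose span boundaries are ambiguous.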

For all code and related details, please refer to our GitHub link here.

Operationalize Trained MRC Models on DLVM Using Python Flask API

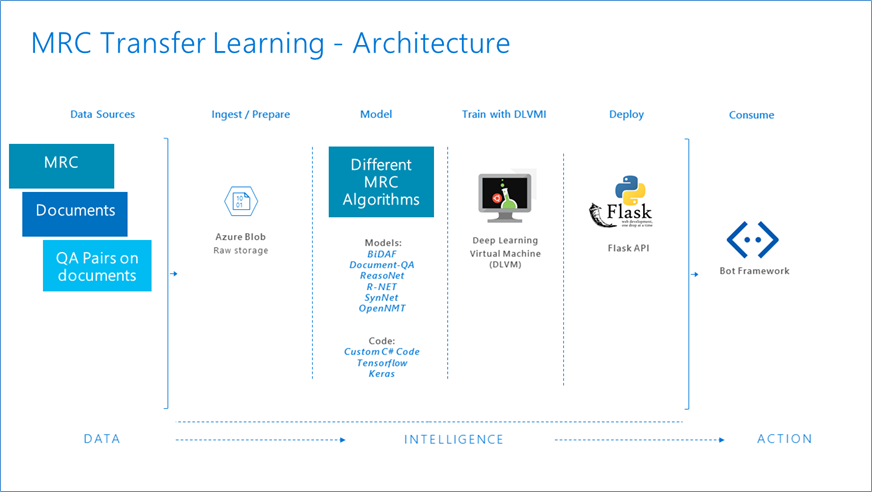

Operationalization is the process of publishing models and code as web services and consuming these services to produce business results. The AI models can be deployed to a local DLVM using a Python Flask API. To operationalize an AI model on the DLVM, we can use JupyterHub on the DLVM. You can follow steps similar to those listed in this notebook for each model. The DLVM model deployment architecture diagram is shown in Figure 2 below.

Figure 2. Model deployment architecture
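A minimal sketch of the Flask side of this architecture is shown below. The `/score` route, the JSON field names, and the `answer_question` function are hypothetical placeholders, not taken from our notebooks; in a real deployment `answer_question` would call whichever trained MRC model was loaded on the DLVM.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def answer_question(context: str, question: str) -> str:
    """Hypothetical stand-in for a trained MRC model's scoring function.

    It just returns the first sentence of the context so the service runs
    end to end; replace it with a call into the real model.
    """
    return context.split(".")[0].strip()


@app.route("/score", methods=["POST"])
def score():
    """Accept JSON with 'context' and 'question'; return the predicted answer."""
    payload = request.get_json(silent=True)
    if not isinstance(payload, dict):
        payload = {}
    context = payload.get("context")
    question = payload.get("question")
    if not context or not question:
        return jsonify({"error": "both 'context' and 'question' are required"}), 400
    answer = answer_question(context, question)
    return jsonify({"question": question, "answer": answer})
```

To serve it on the DLVM, call `app.run(host="0.0.0.0", port=5000)` from a script (binding to 0.0.0.0 makes the service reachable from outside the VM, so restrict network access accordingly), then POST a JSON body with `context` and `question` fields to `http://<vm-address>:5000/score`.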

Evaluation Approach

For our comparison study, we wanted to take different MRC models trained on different datasets and test them on a single large corpus. For the purposes of this blog post, we use six MRC model approaches (BIDAF, DOCQA, ReasoNet, R-NET, SynNet and OpenNMT) to create a QA bot for a large corpus using the trained MRC models, and we then compare the results.

For our test corpus, as noted earlier, we use the book Future Computed by Harry Shum and Brad Smith. We converted the PDF of the book to Word format and removed all images and diagrams, so our test corpus consisted only of text.
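Several of the models we tested work best on short passages, so a whole book generally has to be broken into smaller pieces before it is fed to them. The sketch below assumes the extracted text has been saved as plain text with blank lines between paragraphs (an assumption; the post only says the book was converted to Word format with images removed) and greedily packs paragraphs into chunks under a word budget. The 200-word default is an arbitrary illustrative value.

```python
import re


def split_into_chunks(text: str, max_words: int = 200) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of at most
    max_words words; a paragraph longer than max_words is split on word
    boundaries."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for para in paragraphs:
        words = para.split()
        for i in range(0, len(words), max_words):
            piece = words[i:i + max_words]
            # Start a new chunk if this piece would overflow the current one.
            if current and current_len + len(piece) > max_words:
                chunks.append("\n\n".join(current))
                current, current_len = [], 0
            current.append(" ".join(piece))
            current_len += len(piece)
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Usage would look like `split_into_chunks(open("future_computed.txt", encoding="utf-8").read())`, where the file name is hypothetical. Packing whole paragraphs together, rather than cutting at a fixed word count, keeps most answer spans from being split across two chunks.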

BIDAF, DOCQA, R-NET, SynNet and OpenNMT have open GitHub resources available to reproduce the paper results. We used these open GitHub links to train the models and extended this code wherever necessary for our comparison study. For the ReasoNet paper, we reached out to the authors and got access to their private code for our evaluation work. Please refer to the detailed write-ups below for an evaluation of each MRC model on our test corpus.

- Part 1 - Evaluating the Bi-Directional Attention Flow (BIDAF) Model

- Part 2 - Evaluating the Document-QA Model

- Part 3 - Evaluating the ReasoNet Model

- Part 4 - Evaluating the R-NET Model

- Part 5 - Evaluating the SynNet Model

- Part 6 - Evaluating the OpenNMT Model

Our comparison work is summarized in Table 1 below.

| Model | Training Dataset Used | Training Dataset Size | Testing Dataset | PROs | CONs | Relevant Application Scenario |
|---|---|---|---|---|---|---|
| BiDAF | SQuAD | 100K+ questions posed by crowd workers on a set of Wikipedia articles | Text Corpus created from "Future Computed" e-book | Easy to train and test. | Works well only on a small paragraph. | Customer Service |
| Document-QA | TriviaQA | 650K question-answer-evidence triples | Text Corpus created from "Future Computed" e-book | Works on multiple large documents. | As this model does not give a single answer, it might be the case the most probable answer is assigned a lower priority by the algorithm, which might not make any sense at all. | Knowledge Bots |
| ReasoNet | SQuAD | 100K+ questions posed by crowd workers on a set of Wikipedia articles | Text Corpus created from "Future Computed" e-book | Can dynamically determine whether to continue the comprehension process after digesting intermediate results, or to terminate reading. | Works well only on multiple small paragraphs | QA on Manuals |
| R-NET | MS-MARCO | 100K+ Bing User QA pairs | Text Corpus created from "Future Computed" e-book | Out-performs ReasoNet on SQuAD | Labeled data use becomes the bottleneck for better performance | Personalized Recommendation based on Bing Search |
| SynNet | SQuAD | 100K+ questions posed by crowd workers on a set of Wikipedia articles | Maluuba NewsQA Corpus | Successfully generated QA pairs from News articles | Needs a lot of manual data processing for test corpuses, and the steps are not clear from the open GitHub code | Generate new MRC corpus |
| OpenNMT | MS-MARCO | 100K+ Bing User QA pairs | Text Corpus created from "Future Computed" e-book | Get most accurate results on a niche domain without any additional training data | The training code is not available open source | Personality Bots |

Table 1: Comparing different MRC algorithms

Learnings From Our Evaluation Work

In this blog post, we investigated the performance of four MRC transfer learning methodologies from the literature, pretrained on the SQuAD, TriviaQA and MS-MARCO datasets. We compared the performance of the transfer learning approach for creating a QA system for the book Future Computed using these pretrained MRC models. Please note that the comparisons here are restricted to our evaluation scenario only. The results may differ for other documents or scenarios.

Our evaluation scenario shows that the OpenNMT fine-tuning approach outperforms plain transfer learning MRC mechanisms on domain-specific datasets. However, for generic large articles, the Document-QA model outperforms the BIDAF, ReasoNet and R-NET models. We compare the performance in more detail below.

Pros/Cons of Using the BiDAF Model for Transfer Learning

Pros

The BiDAF model is easy to train and test (thanks to AllenAI for making all the code available on GitHub).

Cons

The BiDAF model has very restricted usage. It works well only on a small paragraph. Given a larger paragraph or many small paragraphs, this model usually takes a long time and comes back with a probable span as an answer which might not make any sense at all.

Resource Contribution in GitHub

https://github.com/antriv/Transfer_Learning_Text/tree/master/Transfer_Learning/bi-att-flow

Pros/Cons of Using the Document-QA Model for Transfer Learning

Pros

The Document-QA model is very easy to train and test (thanks to AllenAI for making all the code available on GitHub). It does a better job than the BiDAF model we explored earlier: given multiple larger documents, it usually takes little time to produce multiple probable spans as answers.

Cons

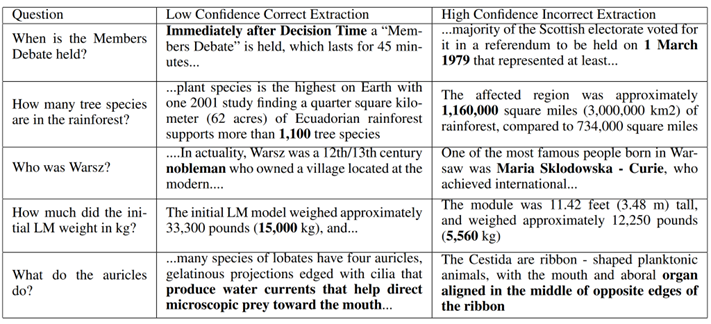

However, because Document-QA returns several candidate spans rather than a single answer, the algorithm may rank the correct answer below other candidates, and the top-ranked span might not make any sense at all. We hypothesize that if the model only sees paragraphs that contain answers, it might become too confident in heuristics or patterns that are only effective when it is known a priori that an answer exists. For example, in Table 2 below (adapted from the paper), we observe that the model assigns high confidence values to spans that strongly match the category of the answer, even if the question words do not match the context. This might work passably well if an answer is present, but it can lead to highly over-confident extractions in other cases.
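The over-confidence problem can be reproduced with a toy calculation. If span scores are turned into probabilities with a softmax inside each paragraph separately, the best span in a paragraph that does not contain the answer can still look very confident, because it is only compared with other spans from the same paragraph. The scores below are invented for illustration and do not come from Document-QA; normalizing over all paragraphs jointly is included only as a point of comparison.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of raw scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def best_span(candidates: dict[str, float]) -> tuple[str, float]:
    """Top span and its probability when normalizing over `candidates` only."""
    spans = list(candidates)
    probs = softmax([candidates[s] for s in spans])
    i = max(range(len(spans)), key=probs.__getitem__)
    return spans[i], probs[i]


# Invented raw span scores, for illustration only. Paragraph A contains the
# correct answer; paragraph B merely contains a span of the right type
# (a year) that does not answer the question.
para_a = {"1962": 4.0, "Apollo": 3.5, "NASA": 1.0}
para_b = {"1998": 2.0, "report": -1.0, "team": -2.0}

# Per-paragraph normalization: each paragraph's best span is scored in isolation.
a_span, a_conf = best_span(para_a)
b_span, b_conf = best_span(para_b)
print(f"A: {a_span} ({a_conf:.2f})  B: {b_span} ({b_conf:.2f})")

# Normalizing over all candidates jointly ranks spans by their raw scores.
joint_span, joint_conf = best_span({**para_a, **para_b})
print(f"joint: {joint_span} ({joint_conf:.2f})")
```

With per-paragraph normalization, the wrong year in paragraph B gets a confidence of about 0.94, versus about 0.60 for the correct span in paragraph A, which mirrors the pattern in Table 2. The joint normalization ranks the correct span first because the raw scores are compared directly.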

Resource Contribution in GitHub

https://github.com/antriv/Transfer_Learning_Text/tree/master/Transfer_Learning/document-qa

Table 2: Examples from SQuAD where a paragraph-level model was less confident in a correct extraction from one paragraph (left) than in an incorrect extraction from another (right).

Pros/Cons of Using the ReasoNet Model for Transfer Learning

Pros

ReasoNets make use of multiple turns to effectively exploit and then reason over the relation among queries, documents, and answers. With the use of reinforcement learning, ReasoNets can dynamically determine whether to continue the comprehension process after digesting intermediate results, or to terminate reading.
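The multi-turn reading loop with a termination gate can be sketched as a toy. This is not the ReasoNet code (the implementation we used is private): the state repeatedly attends over a memory of context vectors, a termination probability is computed from the state at each step, and reading stops once that probability crosses a threshold or a step limit is reached. ReasoNet learns its gate with reinforcement learning; here the gate weights `gate_w`, the averaging state update, and the 0.5 threshold are all hypothetical fixed choices.

```python
import math

Vector = list[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(x: float) -> float:
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def attend(state: Vector, memory: list[Vector]) -> Vector:
    """Softmax-weighted average of memory vectors, scored against the state."""
    scores = [dot(state, m) for m in memory]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * m[j] for w, m in zip(weights, memory)) / total
            for j in range(len(memory[0]))]


def reason(query: Vector, memory: list[Vector], gate_w: Vector,
           max_steps: int = 5, threshold: float = 0.5) -> tuple[Vector, int]:
    """Alternate between a termination check and an attention step.

    Returns the final state (which an answer module would decode) and the
    number of steps taken before stopping.
    """
    state = list(query)
    for step in range(1, max_steps + 1):
        # Termination gate: probability of stopping given the current state.
        if sigmoid(dot(gate_w, state)) > threshold:
            return state, step
        # Keep reading: attend over memory and update the state. A simple
        # average stands in for ReasoNet's learned recurrent update.
        context = attend(state, memory)
        state = [(s + c) / 2 for s, c in zip(state, context)]
    return state, max_steps
```

The point of the gate is that easy questions can stop after one step while harder ones keep reading; in this toy, how many steps are taken depends only on the fixed `gate_w` and the evolving state.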

Cons

It's hard to recreate results from this paper, and no open-source code is available for it. The ReasoNet model has very restricted usage: it works well only on a small paragraph. Given a larger paragraph, this model usually takes a long time and comes back with a probable span as an answer which might not make any sense at all.

Resource Contribution in GitHub

We added some demo code for this work, but no public GitHub code is available for the model itself.

Pros/Cons of Using the R-NET Model for Transfer Learning

Pros

Besides SQuAD, this model can also be trained on MS-MARCO. In MS-MARCO, every question has several corresponding passages, so we simply concatenate all passages of one question in the order given in the dataset. Secondly, the answers in MS-MARCO are not necessarily subspans of the passages. We therefore choose the span with the highest evaluation score against the reference answer as the gold span during training, and predict the highest-scoring span as the answer during prediction. On the MS-MARCO dataset, the R-NET model outperforms other competitive baselines such as ReasoNet.
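The MS-MARCO preprocessing just described (concatenating a question's passages and taking the best-matching span as the gold answer) can be sketched as follows. The post does not name the evaluation score, so token-level F1 is used here as an assumption, along with a hypothetical 30-token cap on span length; the R-NET authors' actual metric and limits may differ. The brute-force search costs roughly n * max_len F1 computations for n tokens, which is fine for a sketch but slow for long inputs.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"\w+", text.lower())


def token_f1(candidate: list[str], reference: list[str]) -> float:
    """Token-level F1 overlap between two token lists."""
    overlap = sum((Counter(candidate) & Counter(reference)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(candidate)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


def best_gold_span(passages: list[str], answer: str,
                   max_len: int = 30) -> tuple[list[str], int, int, float]:
    """Concatenate a question's passages in the given order, then return the
    tokens plus (start, end, score) of the span tokens[start:end] that best
    matches the free-form reference answer."""
    tokens = tokenize(" ".join(passages))
    reference = tokenize(answer)
    best_start, best_end, best_score = 0, 0, 0.0
    for start in range(len(tokens)):
        for end in range(start + 1, min(start + max_len, len(tokens)) + 1):
            score = token_f1(tokens[start:end], reference)
            if score > best_score:
                best_start, best_end, best_score = start, end, score
    return tokens, best_start, best_end, best_score


tokens, start, end, score = best_gold_span(
    ["The tower was built in 1889.", "It is in Paris."],
    "Built in the year 1889",
)
print(tokens[start:end], round(score, 2))
```

For these toy passages, the selected gold span is `built in 1889`, even though the reference answer "Built in the year 1889" never appears verbatim in the text.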

Cons

For a data-driven approach, labeled data might become the bottleneck for better performance. While texts are abundant, it is not easy to find question-passage pairs that match the style of SQuAD. To generate more data, the R-NET authors trained a sequence-to-sequence question generation model on the SQuAD dataset and produced a large amount of pseudo question-passage pairs from English Wikipedia, but their analysis shows that the quality of the generated questions needs improvement. R-NET works well only on a small paragraph. Given a larger paragraph or many small paragraphs, this model usually takes a long time and comes back with a probable span as an answer which might not make any sense at all.

Pros/Cons of Using the SynNet Model for Finetuning

Pros

Using the SynNet model on the NewsQA dataset was very easy, and it generated great QA pairs on that dataset.

Cons

The SynNet model has very restricted usage. It is hard to run the existing open-source code on a custom paragraph or text: it needs a lot of manual data processing, and the steps are not clear from the open GitHub repository. We were therefore unable to evaluate it on our test book corpus.

Pros/Cons of Using the OpenNMT Model for Finetuning

Pros

Using the OpenNMT model, we were able to get the most accurate results to date on a niche domain without any additional training data, approaching the performance of a fully supervised MRC system. OpenNMT works in two stages:

- Answer Synthesis: Given a text paragraph, generate an answer.

- Question Synthesis: Given a text paragraph and an answer, generate a question.
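The two stages can be illustrated with a deliberately naive sketch. Answer synthesis here is a regular-expression heuristic that picks out numbers and multi-word capitalized phrases (echoing the earlier observation that answers tend to be named entities or numbers), and question synthesis is a fixed template that blanks out the answer in its sentence. Both functions are hypothetical stand-ins written for this illustration: the models discussed here use learned neural components rather than hand-written rules, and they produce fluent questions rather than fill-in-the-blank ones.

```python
import re
from typing import Optional

# Toy answer pattern: numbers, or runs of two or more capitalized words.
_ANSWER_PATTERN = re.compile(
    r"\b\d+(?:[.,]\d+)*\b"
    r"|\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)+\b"
)


def synthesize_answers(paragraph: str) -> list[str]:
    """Stage 1 (toy answer synthesis): propose candidate answers in order of
    appearance, without duplicates."""
    return list(dict.fromkeys(_ANSWER_PATTERN.findall(paragraph)))


def synthesize_question(paragraph: str, answer: str) -> Optional[str]:
    """Stage 2 (toy question synthesis): blank out the answer in the first
    sentence that contains it and turn that sentence into a cloze question."""
    for sentence in re.split(r"(?<=[.!?])\s+", paragraph.strip()):
        if answer in sentence:
            return sentence.replace(answer, "___", 1).rstrip(".!?") + "?"
    return None


def synthesize_qa_pairs(paragraph: str) -> list[tuple[str, str]]:
    """Run both stages and collect (question, answer) pairs."""
    pairs = []
    for answer in synthesize_answers(paragraph):
        question = synthesize_question(paragraph, answer)
        if question is not None:
            pairs.append((question, answer))
    return pairs


for question, answer in synthesize_qa_pairs(
    "The Future Computed was written by Harry Shum and Brad Smith. "
    "It was published in 2018."
):
    print(f"Q: {question}  A: {answer}")
```

Generating the answer first and then conditioning the question on it is what lets the pipeline produce aligned (question, answer) pairs from unlabeled text.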

Once we have generated QA pairs for a new domain, we can also train a Seq2Seq model on these QA pairs to build more human-like conversational AI on top of MRC.

Cons

The OpenNMT model training code is not open source. It works well only on a small paragraph. Given a larger paragraph or many small paragraphs, this model usually takes a long time and comes back with a probable span as an answer which might not make any sense at all.

Conclusion

In this blog post, we showed how we used DLVM to train and compare different MRC models for transfer learning. We evaluated four MRC algorithms and compared their performance by creating a QA bot for a corpus using each of the models. In the post, we demonstrate the importance of selecting relevant data using transfer learning. We show that considering the task and domain-specific characteristics and learning an appropriate data selection measure outperforms off-the-shelf metrics. MRC approaches have given AI more comprehension power, but MRC algorithms still don't really understand what they read. For instance, a model doesn't know what "British rock group Coldplay" really is, aside from it being an answer to a Super Bowl-related question. There are many NLP applications that need models that can transfer knowledge to new tasks and adapt to new domains with human-level understanding, and we feel that this is just the beginning of our text transfer learning journey.

Anusua & Wee Hyong

(You can email Anusua at antriv@microsoft.com with questions related to this post.)