The Power of RDMA in Storage Space Direct

About a year ago, I wrote a blog post, "The Power of RDMA in Storage Space Direct", in which I shared the performance improvements I observed (including IOPS and latency) and, especially, the great performance consistency (QoS) provided by RDMA-capable NICs in Windows Server 2016 TP4.

Since there have been many improvements from TP4 to TP5 and then to RTM, I'd like to refresh that content based on the latest version of Windows Server 2016. I have also refreshed the hardware infrastructure, as follows:

Test Environment

Hardware

- ECGCAT-R4-16 (Dell 720, 2 × E5-2650, 192GB memory, 4 × 600GB 15K SAS HDD in RAID10, 2 × EMC P320h 700GB PCIe SSD, 1 Chelsio T580-CR NIC + 1 Mellanox ConnectX-3 NIC)

- ECGCAT-R4-18 (Dell 720, 2 × E5-2650, 192GB memory, 4 × 600GB 15K SAS HDD in RAID10, 2 × EMC P320h 700GB PCIe SSD, 1 Chelsio T580-CR NIC + 1 Mellanox ConnectX-3 NIC)

- ECGCAT-R4-20 (Dell 720, 2 × E5-2650, 192GB memory, 4 × 600GB 15K SAS HDD in RAID10, 2 × EMC P320h 700GB PCIe SSD, 1 Chelsio T580-CR NIC + 1 Mellanox ConnectX-3 NIC)

As you can see from the list above, each node has both a Chelsio T580 NIC (dual port, 40Gb) and a Mellanox ConnectX-3 NIC (dual port, 10Gb). Both NICs support RDMA. However, in this test the Mellanox NICs are used only as ordinary management NICs (management traffic only), and one port of the 40Gb Chelsio T580 is used as the storage NIC. (Actually, that happens automatically, because Storage Spaces Direct automatically uses the most appropriate network.)

Software

Windows Server 2016 Datacenter Edition

Test Case

Raw Performance of Mirrored Space

- 4KB Random IO, 100% Read

- 4KB Random IO, 90% Read, 10% Write

- 4KB Random IO, 70% Read, 30% Write

VM Fleet

- 4KB Random IO, 100% Read

- 4KB Random IO, 90% Read, 10% Write

- 4KB Random IO, 70% Read, 30% Write

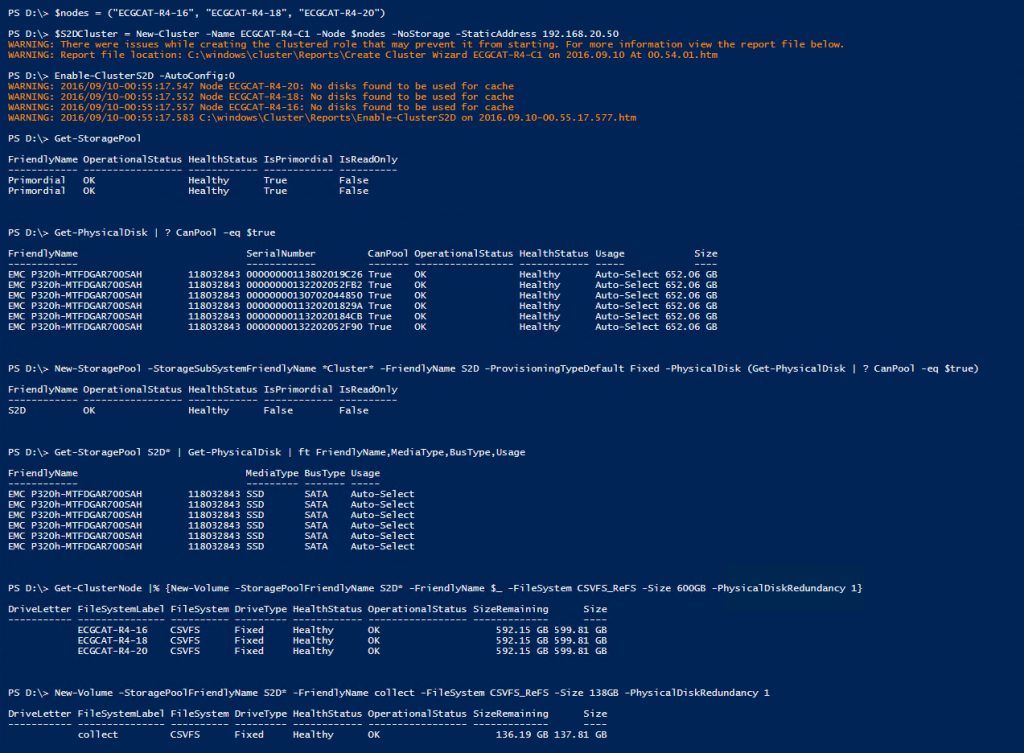

Create a Hyper-Converged Storage Spaces Direct Cluster

Assumption

Before creating the Storage Spaces Direct cluster, I had already joined the three physical machines above to my domain, "infra.lab".

Create Cluster

$nodes = ("ECGCAT-R4-16", "ECGCAT-R4-18", "ECGCAT-R4-20")

# Install Hyper-V, File Services and Failover Clustering on all nodes (-Restart reboots them)
icm $nodes {

Install-WindowsFeature -Name Hyper-V, File-Services, Failover-Clustering -IncludeManagementTools -Restart

}

New-Cluster -Name ECGCAT-R4-C1 -Node $nodes -NoStorage -StaticAddress 192.168.20.50

Enable S2D and Create Space

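# Enable S2D without letting it auto-configure the storage pool
Enable-ClusterS2D -AutoConfig:0

# Create the pool from every poolable disk in the cluster
New-StoragePool -StorageSubSystemFriendlyName *Cluster* -FriendlyName S2D -ProvisioningTypeDefault Fixed -PhysicalDisk (Get-PhysicalDisk | ? CanPool -eq $true)

# Set every pooled disk to AutoSelect usage (no dedicated cache/journal disks)
Get-StoragePool S2D* | Get-PhysicalDisk | Set-PhysicalDisk -Usage AutoSelect

# One 600GB mirrored CSV volume per node, named after the node
Get-ClusterNode |% {New-Volume -StoragePoolFriendlyName S2D* -FriendlyName $_ -FileSystem CSVFS_ReFS -Size 600GB -PhysicalDiskRedundancy 1}

# A fourth volume, "collect", used by VM Fleet
New-Volume -StoragePoolFriendlyName S2D* -FriendlyName collect -FileSystem CSVFS_ReFS -Size 138GB -PhysicalDiskRedundancy 1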

In the example above, I didn't use S2D's auto-configuration because, in some edge cases, S2D might not configure the physical disks' usage correctly. In my case, I don't need any cache devices: all 6 drives are PCIe SSDs, and they are extremely fast.

In addition to the three spaces used for testing (one per node), I also created a fourth space, "collect", which is used for the VM Fleet test.
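As a rough sanity check on the volume sizes above, the short Python sketch below compares the mirror footprint of the four volumes with the raw capacity of the six SSDs. It is an approximation under stated assumptions: -PhysicalDiskRedundancy 1 is taken to mean a two-way mirror (two copies of the data), PowerShell's GB suffix is binary (1GB = 2^30 bytes), each 700GB P320h is assumed to expose 700 × 10^9 bytes, and pool metadata and reserve capacity are ignored.

```python
# Rough capacity check for the S2D pool described above (a sketch, not an exact model).
# Assumptions: two-way mirror (PhysicalDiskRedundancy 1), PowerShell "GB" = 2**30 bytes,
# each "700GB" SSD exposes 700 * 10**9 bytes, no pool metadata or reserve capacity.

GIB = 2**30  # PowerShell's 1GB literal equals 1,073,741,824 bytes


def mirror_footprint_gib(volume_sizes_gib, copies=2):
    """Pool capacity consumed by mirrored volumes: every byte is stored `copies` times."""
    return sum(volume_sizes_gib) * copies


def raw_capacity_gib(drive_count, drive_bytes):
    """Raw capacity of all pooled drives, in GiB."""
    return drive_count * drive_bytes / GIB


volumes = [600, 600, 600, 138]             # three per-node volumes + "collect"
footprint = mirror_footprint_gib(volumes)  # 1938 GiB of data -> 3876 GiB on disk
raw = raw_capacity_gib(6, 700 * 10**9)     # 6 x 700GB PCIe SSD

print(f"volume data:       {sum(volumes)} GiB")
print(f"mirror footprint:  {footprint} GiB")
print(f"raw pool capacity: {raw:.1f} GiB")
print(f"headroom (before metadata/reserve): {raw - footprint:.1f} GiB")
```

Under these assumptions the 1,938GiB of volumes occupy 3,876GiB of a roughly 3,911GiB raw pool, so the pool is nearly full. On a live cluster, Get-StoragePool S2D | Select-Object Size, AllocatedSize reports the real figures.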

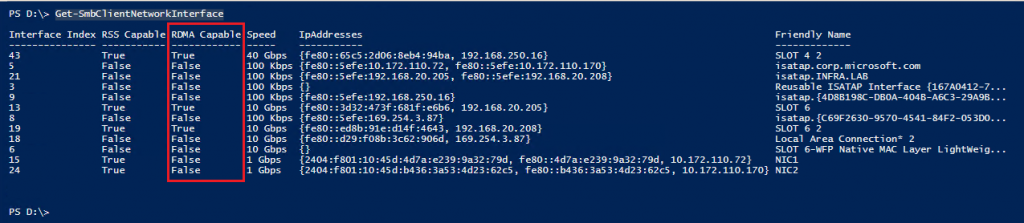

As you can see, creating an S2D cluster with the RTM build is pretty easy. Basically, you need only three cmdlets: New-Cluster, Enable-ClusterS2D and New-Volume. Everything else is automatic. If you use iWARP (like the Chelsio T520/T580 NICs), no RDMA-specific configuration is needed: the driver is already built into Windows Server 2016, and there is no need to configure DCB or enable PFC on the switch. It's pretty much plug and play. You can use the following cmdlet to verify whether your NIC is RDMA capable (check the "RDMA Capable" column):

Get-SmbClientNetworkInterface

Enable/Disable RDMA

I used the following script to disable and then re-enable RDMA on all three nodes:

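$nodes = ("ECGCAT-R4-16", "ECGCAT-R4-18", "ECGCAT-R4-20")

# Disable RDMA (NetworkDirect) on every node, then rebuild the SMB Multichannel connections
icm $nodes {
    Set-NetOffloadGlobalSetting -NetworkDirect Disabled
    Update-SmbMultichannelConnection
}

# Re-enable RDMA on every node and rebuild the SMB Multichannel connections again
icm $nodes {
    Set-NetOffloadGlobalSetting -NetworkDirect Enabled
    Update-SmbMultichannelConnection
}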

Performance of Mirrored Space

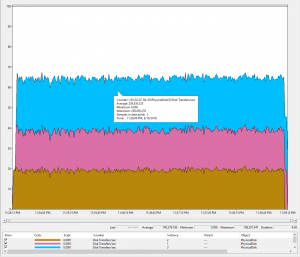

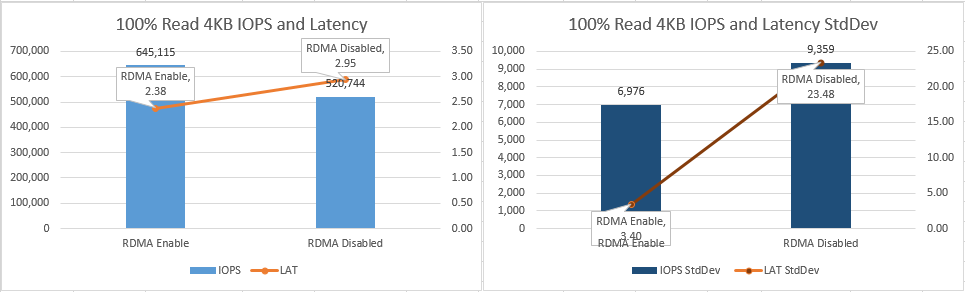

4KB Random IO, 100% Read

I ran the following command on each of the three nodes and captured the performance data with Performance Monitor.

diskspd.exe -c100G -d300 -w0 -t32 -o16 -b4K -r -h -L -D C:\ClusterStorage\VolumeX\IO.dat
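For readers new to DISKSPD, the Python sketch below decodes the switches used in this command and works out the queue depth it produces. The switch descriptions follow the DISKSPD documentation; parse_diskspd is a hypothetical helper that only understands the simple -X<value> form used in this post.

```python
# Decode the DISKSPD switches used above and derive the resulting queue depth.
# parse_diskspd is a hypothetical helper; it handles only the "-X<value>" switches in this post.
import re

FLAG_MEANINGS = {
    "c": "create the target file with this size",
    "d": "test duration in seconds",
    "w": "percentage of write I/Os",
    "t": "threads per target",
    "o": "outstanding I/Os per thread",
    "b": "block size",
    "r": "random I/O aligned to the block size",
    "h": "disable software caching and hardware write caching",
    "L": "collect latency statistics",
    "D": "collect per-interval IOPS statistics (1000 ms by default)",
}


def parse_diskspd(cmd):
    """Split a DISKSPD command into ({switch: value}, target); the target is the last token."""
    tokens = cmd.split()
    switches = {}
    for tok in tokens[1:-1]:
        m = re.fullmatch(r"-([A-Za-z])(\S*)", tok)
        if m:
            switches[m.group(1)] = m.group(2)
    return switches, tokens[-1]


cmd = r"diskspd.exe -c100G -d300 -w0 -t32 -o16 -b4K -r -h -L -D C:\ClusterStorage\VolumeX\IO.dat"
switches, target = parse_diskspd(cmd)
for flag, value in switches.items():
    print(f"-{flag}{value:<5} {FLAG_MEANINGS.get(flag, '?')}")

per_node = int(switches["t"]) * int(switches["o"])  # threads x outstanding I/Os per thread
print("target:", target)
print("outstanding I/Os per node:", per_node)
print("outstanding I/Os on 3 nodes:", 3 * per_node)
```

With -t32 and -o16, each node keeps 32 × 16 = 512 I/Os in flight against its own volume, or 1,536 across the three nodes. The same switches apply to the 90/10 and 70/30 runs; only -w changes.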

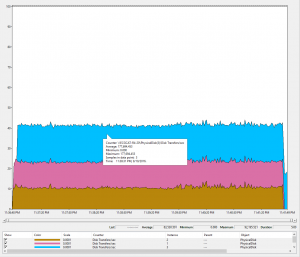

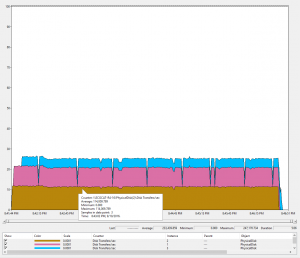

Here are the test results with RDMA enabled and disabled.

Comparing the results above, we can see that in this scenario, with RDMA enabled, IOPS increased by 23.88% and latency decreased by 19.29%. More importantly, the standard deviation of latency decreased by 85.52%, which means RDMA provides not only better performance but also much better consistency. That's critical for enterprise applications.
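Each percentage here is a relative change between the RDMA-disabled and RDMA-enabled runs. The Python sketch below shows that arithmetic. The IOPS totals are hypothetical values chosen only to reproduce the 23.88% above, and the latency samples are invented for illustration; DISKSPD's -L output already reports a latency standard deviation (LatStdDev), whereas this sketch uses statistics.pstdev on raw samples.

```python
# How the relative changes in this post are computed (illustrative sketch).
# All numbers below are hypothetical: the IOPS totals are picked to reproduce the 23.88%
# figure, and the latency samples are invented; they are NOT measurements from this test.
from statistics import mean, pstdev


def pct_change(before, after):
    """Relative change from `before` (RDMA disabled) to `after` (RDMA enabled), in percent."""
    return (after - before) / before * 100


iops_rdma_off, iops_rdma_on = 100_000, 123_880  # hypothetical IOPS totals
latency_rdma_off = [1.0, 1.2, 3.5, 0.9, 2.4]     # hypothetical latencies (ms)
latency_rdma_on = [0.8, 0.9, 1.0, 0.8, 0.9]      # hypothetical latencies (ms)

print(f"IOPS change:          {pct_change(iops_rdma_off, iops_rdma_on):+.2f}%")
print(f"mean latency change:  {pct_change(mean(latency_rdma_off), mean(latency_rdma_on)):+.2f}%")
print(f"latency stdev change: {pct_change(pstdev(latency_rdma_off), pstdev(latency_rdma_on)):+.2f}%")
```

A negative change in mean latency or in its standard deviation is an improvement; a large drop in the standard deviation is what "better consistency" means here.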

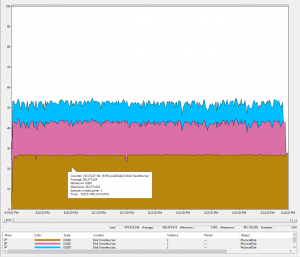

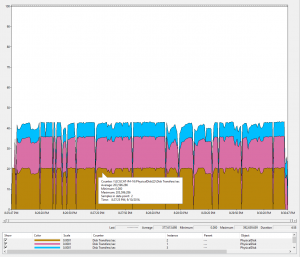

4KB Random IO, 90% Read, 10% Write

I ran the following command on each of the three nodes and captured the performance data with Performance Monitor.

diskspd.exe -c100G -d300 -w10 -t32 -o16 -b4K -r -h -L -D C:\ClusterStorage\VolumeX\IO.dat

In the 90% read / 10% write scenario, RDMA provides an even larger performance improvement: IOPS increased by 32.16% and latency decreased by 24.15%, while the standard deviation of latency decreased by 88.83%.

4KB Random IO, 70% Read, 30% Write

I ran the following command on each of the three nodes and captured the performance data with Performance Monitor.

diskspd.exe -c100G -d300 -w30 -t32 -o16 -b4K -r -h -L -D C:\ClusterStorage\VolumeX\IO.dat

As above, in the 70% read / 30% write scenario the results with RDMA enabled are outstanding: IOPS increased by 63.57% and latency decreased by 38.81%, while the standard deviation of latency decreased by 92.14%.
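Because DISKSPD keeps a fixed number of I/Os outstanding (32 threads × 16 per thread on each node), Little's law (outstanding I/Os = IOPS × average latency) predicts that average latency falls by 1 - 1/(1 + g) when IOPS rises by a fraction g. The Python sketch below applies that to the three IOPS gains reported above. It assumes the queue stayed full for the whole run, so it is only a consistency check, not a model of S2D.

```python
# Little's law cross-check: with a constant queue depth N, N = IOPS * latency,
# so latency_after / latency_before = IOPS_before / IOPS_after = 1 / (1 + gain).


def predicted_latency_drop(iops_gain_pct):
    """Latency reduction (in percent) implied by an IOPS gain at constant queue depth."""
    return (1 - 1 / (1 + iops_gain_pct / 100)) * 100


# (scenario, reported IOPS gain %, reported latency drop %) from the raw-volume tests above
reported = [
    ("100% read", 23.88, 19.29),
    ("90% read / 10% write", 32.16, 24.15),
    ("70% read / 30% write", 63.57, 38.81),
]

for name, gain, drop in reported:
    print(f"{name:<22} predicted {predicted_latency_drop(gain):5.2f}%  reported {drop:5.2f}%")
```

The predicted reductions (19.28%, 24.33% and 38.86%) agree with the reported ones to within about 0.2 percentage points, which suggests that at this queue depth the latency gains are essentially the flip side of the IOPS gains.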

VM Fleet Performance Test

Prepare VM Fleet

If you are not familiar with VM Fleet, you can refer to my previous post.

After installing VM Fleet, I used the following cmdlets to prepare the environment: 8 VMs per node, 24 VMs in total. Each VM has 8 virtual cores and 16GB of memory.

$nodes = ("ECGCAT-R4-16", "ECGCAT-R4-18", "ECGCAT-R4-20")

.\create-vmfleet.ps1 -basevhd "C:\ClusterStorage\collect\VMFleet-ENGWS2016COREG2VM.vhdx" -vms 8 -adminpass User@123 -connectpass User@123 -connectuser "infra\administrator" -nodes $nodes

.\set-vmfleet.ps1 -ProcessorCount 8 -MemoryStartupBytes 16GB -DynamicMemory $false

.\start-vmfleet.ps1

Open a separate window and run ".\watch-cluster.ps1" to monitor the test results.
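Because -DynamicMemory $false gives each VM its full 16GB at startup, it is worth checking that eight VMs fit on each 192GB host. The Python sketch below does that arithmetic with the numbers from this post; the memory left over has to cover the host OS, Hyper-V's per-VM overhead and S2D itself, none of which is modeled here.

```python
# Resource arithmetic for the VM Fleet configuration above
# (3 nodes, 8 VMs per node, 8 vCPUs and 16GB of static memory per VM, 192GB of RAM per host).

NODES = 3
VMS_PER_NODE = 8
VCPUS_PER_VM = 8
MEM_PER_VM_GB = 16
HOST_MEM_GB = 192

total_vms = NODES * VMS_PER_NODE                # 24 VMs in total
vcpus_per_node = VMS_PER_NODE * VCPUS_PER_VM    # 64 vCPUs scheduled on each host
vm_mem_per_node = VMS_PER_NODE * MEM_PER_VM_GB  # 128GB of static VM memory per host
host_left_gb = HOST_MEM_GB - vm_mem_per_node    # left for host OS, Hyper-V overhead and S2D

assert vm_mem_per_node <= HOST_MEM_GB, "static VM memory would not fit on the host"
print(f"{total_vms} VMs, {vcpus_per_node} vCPUs/node, "
      f"{vm_mem_per_node}GB VM memory/node, {host_left_gb}GB left per host")
```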

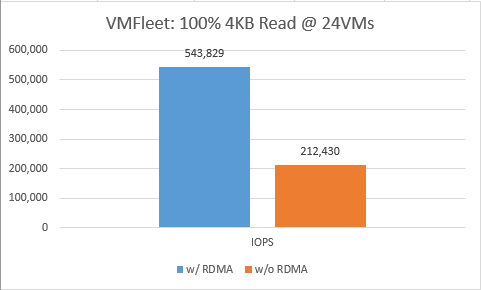

4KB Random IO, 100% Read

Run the following cmdlet to start the test.

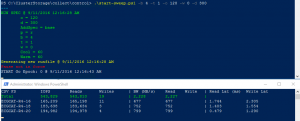

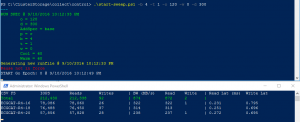

.\start-sweep.ps1 -b 4 -t 1 -o 120 -w 0 -d 300
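The start-sweep.ps1 switches correspond to DISKSPD settings: -b is the block size in KiB, -t the thread count, -o the outstanding I/Os per thread, -w the write percentage and -d the duration in seconds. That mapping is my reading of the VM Fleet scripts, so check the param block of your copy. Assuming each of the 24 VMs runs one DISKSPD instance against its own disk, the Python sketch below compares the total queue depth of this sweep with the raw-volume tests earlier in the post.

```python
# Compare aggregate outstanding I/O between the raw DISKSPD runs and the VM Fleet sweep.
# Assumption: each VM runs one DISKSPD instance with the sweep's -t/-o values.


def queue_depth(instances, threads, outstanding_per_thread):
    """Total I/Os in flight when every instance keeps all of its queues full."""
    return instances * threads * outstanding_per_thread


raw_test = queue_depth(instances=3, threads=32, outstanding_per_thread=16)   # one DISKSPD per node
vm_fleet = queue_depth(instances=24, threads=1, outstanding_per_thread=120)  # one DISKSPD per VM

print(f"raw volume test: {raw_test} outstanding I/Os")
print(f"VM Fleet sweep:  {vm_fleet} outstanding I/Os ({vm_fleet / raw_test:.2f}x)")
```

Under that assumption the sweep keeps 2,880 I/Os in flight versus 1,536 in the raw-volume tests, so the two sets of percentages were measured at different queue depths and are not directly comparable.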

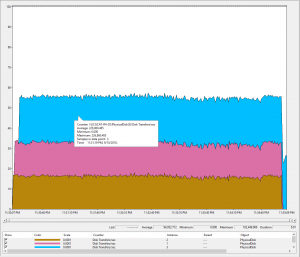

Here is what it looks like. The left screen was captured with RDMA enabled and the right one with RDMA disabled.

Comparing the two results, you can see that RDMA increased IOPS by 156% in this test scenario.

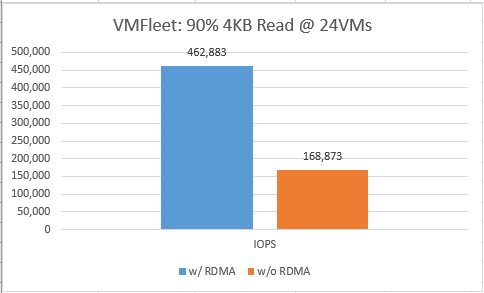

4KB Random IO, 90% Read, 10% Write

Run the following cmdlet to start the test.

.\start-sweep.ps1 -b 4 -t 1 -o 120 -w 10 -d 300

In this case, RDMA provides a 174% IOPS improvement.

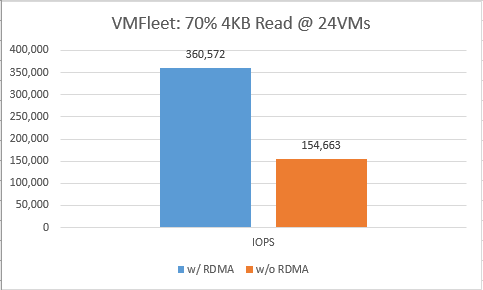

4KB Random IO, 70% Read, 30% Write

Run the following cmdlet to start the test.

.\start-sweep.ps1 -b 4 -t 1 -o 120 -w 30 -d 300

In this case, RDMA provides a 133% IOPS improvement.
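To put the VM Fleet numbers next to the raw-volume numbers, the Python sketch below converts each reported "X% increase" into an IOPS multiplier (1 + X/100). It assumes that "increased by 156%" means the RDMA run delivered 2.56 times the IOPS of the non-RDMA run; all percentages are the ones reported in this post.

```python
# Reported IOPS gains from this post, converted to "RDMA on / RDMA off" multipliers.
# Assumption: "increased by X%" means iops_on = iops_off * (1 + X / 100).

reported_iops_gain_pct = {
    ("raw volume", "100% read"): 23.88,
    ("raw volume", "90/10 read/write"): 32.16,
    ("raw volume", "70/30 read/write"): 63.57,
    ("VM Fleet", "100% read"): 156,
    ("VM Fleet", "90/10 read/write"): 174,
    ("VM Fleet", "70/30 read/write"): 133,
}


def multiplier(gain_pct):
    """IOPS ratio (RDMA enabled / RDMA disabled) implied by a percentage increase."""
    return 1 + gain_pct / 100


for (test, mix), gain in reported_iops_gain_pct.items():
    print(f"{test:<10} {mix:<17} +{gain:>6.2f}%  -> {multiplier(gain):.2f}x")
```

Read this way, every VM Fleet scenario more than doubled IOPS with RDMA, while the raw-volume gains ranged from about 1.24× to 1.64×.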

Conclusion

Based on the tests above, we can say that RDMA improves performance dramatically in I/O-intensive scenarios.

Kudos to Chelsio

Thanks to Chelsio for providing their T580-CR NICs for this validation test.

Comments

- Anonymous

November 01, 2016

Hi, what tool are you using to monitor CSV IO from PowerShell?

- Anonymous

November 14, 2016

https://github.com/Microsoft/diskspd/tree/master/Frameworks/VMFleet
