Everything you wanted to know about SR-IOV in Hyper-V Part 3

So far in this series, we’ve looked at the “why” question, and the hardware aspects of SR-IOV, and identified that to use SR-IOV in Hyper-V it is necessary to have system hardware support in the form of an IOMMU device, and a PCI Express device which has SR-IOV capabilities. Now it’s time to start taking a look at the software aspects. In this part, I’ll look at device drivers.

SR-IOV Device Drivers

I’ve mentioned that a driver model is essential for a total SR-IOV solution. Yet the SR-IOV specifications don’t mention anything about driver models. Hence it has been up to Microsoft to specify the interfaces needed to support VFs and PFs running together in a virtualised environment. You may be asking why we need to update the driver model for SR-IOV when we already have a well-established driver model for networking, in the form of NDIS. If you think about a “traditional” networking device driver, the driver is only responsible for controlling a single device (in the parent partition, or the only instance of Windows when outside of virtualization). That device is what the SR-IOV world refers to as the PF.

Prior to Windows “8”, we’ve only had emulated or software-based devices in Hyper-V virtual machines, and device vendors haven’t needed to concern themselves too much about what is running inside virtual machines. Hyper-V has dealt with the indirect I/O model itself and provided drivers for those virtual devices. However, an SR-IOV device has VFs as well as PFs. With SR-IOV, a part of the vendor’s hardware is exposed inside the virtual machine. As our networking code doesn’t know how to manipulate that piece of hardware directly, we need to load a vendor-supplied driver in the VM.

However, the VF is neither a fully-fledged device nor autonomous. It cannot, for hopefully obvious security reasons, make any decisions about policy and control. It can’t instantiate itself; something else has to do that. It can’t cause another VF to be instantiated. It is transient in the overall lifetime of a given virtual machine guest operating system instance. It can only read and write the parts of device configuration that the PF lets it manipulate, and it can only see the parts of the networking hardware in memory space that are allocated to that VF.

While VFs are transient, the PF is always available (assuming the PCI Express device is enabled, that is). VFs cannot exist without a PF being present. As the PF runs in a trusted domain (the parent partition), the PF can be the arbiter for all policy decisions, and the control point for VF instantiation and tear-down including hardware resource allocation, working in conjunction with the rest of the Hyper-V virtualization stack.
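To make that split of responsibilities concrete, here is a toy sketch in Python. It is not driver code and does not use any NDIS interface; every name in it (`PhysicalFunction`, `VirtualFunction`, `WRITABLE_BY_VF`, the configuration keys) is made up purely for illustration. It only models the rules described above: the PF creates and tears down VFs, hands out hardware queues, and decides which configuration a VF may touch.

```python
# Hypothetical set of configuration keys the PF lets a VF change.
WRITABLE_BY_VF = {"mac_filter"}


class VirtualFunction:
    """Toy VF: transient, and limited to what its PF allows."""

    def __init__(self, pf, vf_id, queues):
        self._pf = pf
        self.vf_id = vf_id
        self.queues = queues
        self.alive = True
        # Illustrative configuration only; not real device registers.
        self.config = {"mac_filter": None, "link_speed": "10G"}

    def write_config(self, key, value):
        if not self.alive:
            raise RuntimeError("VF has been torn down")
        # The VF never decides policy itself; it asks the PF.
        if not self._pf.allows_write(self.vf_id, key):
            raise PermissionError(f"PF does not let VF {self.vf_id} write {key!r}")
        self.config[key] = value


class PhysicalFunction:
    """Toy PF: lives in the trusted parent partition and arbitrates policy."""

    def __init__(self, max_vfs, queues_total):
        self.max_vfs = max_vfs
        self.queues_free = queues_total
        self.vfs = {}

    def create_vf(self, vf_id, queues):
        # Only the PF can instantiate a VF and allocate hardware resources.
        if vf_id in self.vfs:
            raise RuntimeError("VF already exists")
        if len(self.vfs) >= self.max_vfs:
            raise RuntimeError("no VF slots left")
        if queues > self.queues_free:
            raise RuntimeError("not enough hardware queues")
        self.queues_free -= queues
        vf = VirtualFunction(self, vf_id, queues)
        self.vfs[vf_id] = vf
        return vf

    def destroy_vf(self, vf_id):
        # Tear-down returns the VF's queues to the pool.
        vf = self.vfs.pop(vf_id)
        self.queues_free += vf.queues
        vf.alive = False

    def allows_write(self, vf_id, key):
        return vf_id in self.vfs and key in WRITABLE_BY_VF
```

In this sketch a VF object cannot exist without a PF having created it, and once the PF destroys it, any further use of the VF fails. That mirrors the point that VFs are transient while the PF is the control point.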

There are also situations where the VF driver needs to communicate safely with the PF driver. These are not for the I/O path, but for policy and control logic. Microsoft considers a hardware backchannel less secure than letting Hyper-V modulate that channel of communication. By exposing interfaces in Windows for this functionality, we can validate driver behaviour by providing fuzz and penetration testing as part of driver certification, thereby encouraging vendors to take a long hard look at the security model when exposing hardware directly to a virtual machine. This is a critical tenet in our Trustworthy Computing SDL that all Windows components go through.

So what interfaces have we identified at a very high level?

- To allow a PF driver to tell Windows about what SR-IOV capabilities it has

- To allow a PF driver to instantiate and tear-down VFs including provisioning and de-provisioning of hardware queues and filters as required

- To allow a VF driver to send policy and control logic to a PF (and back)

These are the driver interfaces which are documented in the SR-IOV NDIS functions on MSDN.
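The third interface, and the reason we care about fuzz testing it, can be pictured with a small sketch. This is a toy Python model, not the NDIS API: the opcodes, the size limit, the result strings and the names `MediatedChannel`, `pf_handle_message` and `fuzz` are all assumptions made up for illustration. The idea it shows is simply that messages from a VF travel through a software channel rather than a hardware backchannel, and that the PF side treats every byte it receives as untrusted.

```python
import random

MAX_MESSAGE_BYTES = 64        # hypothetical size limit
KNOWN_OPCODES = {0x01, 0x02}  # hypothetical: 0x01 = query, 0x02 = set MAC filter


def pf_handle_message(vf_id, payload):
    """PF-side handler: validate everything that arrives from a VF."""
    if not isinstance(payload, (bytes, bytearray)):
        return "rejected: not bytes"
    if len(payload) == 0 or len(payload) > MAX_MESSAGE_BYTES:
        return "rejected: bad length"
    opcode = payload[0]
    if opcode not in KNOWN_OPCODES:
        return "rejected: unknown opcode"
    # A "set MAC filter" message must be the opcode plus exactly 6 bytes.
    if opcode == 0x02 and len(payload) != 7:
        return "rejected: malformed MAC"
    return "accepted"


class MediatedChannel:
    """Toy software channel between VF drivers and the PF driver."""

    def __init__(self, handler):
        self._handler = handler
        self._attached = set()

    def attach(self, vf_id):
        self._attached.add(vf_id)

    def send(self, vf_id, payload):
        # Messages from VFs the channel does not know about never reach the PF.
        if vf_id not in self._attached:
            return "rejected: unknown VF"
        return self._handler(vf_id, payload)


def fuzz(channel, vf_id, rounds=1000, seed=0):
    """Throw random payloads at the channel and collect the distinct results."""
    rng = random.Random(seed)
    results = set()
    for _ in range(rounds):
        size = rng.randint(0, 2 * MAX_MESSAGE_BYTES)
        payload = bytes(rng.randrange(256) for _ in range(size))
        results.add(channel.send(vf_id, payload))
    return results
```

A well-behaved handler in this model either accepts a message or rejects it with a reason; it never raises an unexpected exception, whatever the VF sends. That is the kind of property fuzz testing is meant to shake out.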

One point I want to touch on about the driver model is that we have left it up to device driver writers to decide how they want to design their drivers. They can use a split driver model, where there are completely separate drivers for the PF and the VF; a combined driver model, where a single driver serves both the PF and the VF; or indeed a virtual bus driver (VBD) if that is what they are familiar with. In other words, although there are new concepts, the step to support SR-IOV is not a huge one from a driver writer’s perspective, as it builds on existing models.

When is a PCI Bus not a PCI Bus?

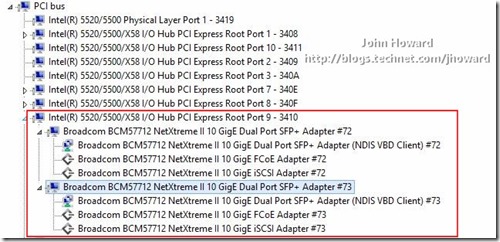

A strange question perhaps. A traditional PCI Express device is typically enumerated on the PCI bus under a PCI Express Root Port. Here’s a screenshot from Device Manager using Windows Server “8” Beta in the parent partition, on a machine which has two dual-port SR-IOV capable networking devices. You can see the PF devices under a PCI Express Root Port.

And the same with a VBD driver in place from a different machine with a single dual-port SR-IOV capable networking device.

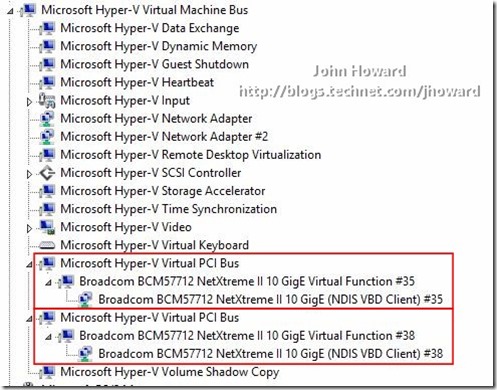

If we were to look at a virtual machine with SR-IOV devices assigned, you would see something subtly different in the device tree. This particular virtual machine has two software network adapters, each backed by a virtual function. The virtual functions are exposed on a virtual PCI Bus under VMBus, as you can see in the following screenshot. This is not an important point from an IT-Pro perspective, but I’ve included it in case you wanted to see the device hierarchy.

And again, with a VBD driver in place (with two VFs assigned to this particular VM):

This part of the blog post series started taking a look at the software side of SR-IOV, particularly from the viewpoint of device vendor driver writers. In summary, in addition to answering the “why” question, so far we’ve identified three dependencies necessary to support SR-IOV in Hyper-V in Windows Server “8” Beta, and hopefully explained why all of these are essential:

- System hardware support in the form of an IOMMU device

- A PCI Express networking device which has SR-IOV capabilities

- A driver model to support both PF and VFs.

In the next part, I’ll look at the next dependency to add to the list.

Cheers,

John.

Comments

Anonymous

January 01, 2003

Hi Daniele - no, we solve this problem. SR-IOV is fully compatible with mobility scenarios including Live Migration. I go into depth on this in part 6. Cheers, John.

Anonymous

January 01, 2003

Hi John, won't exposing hw info to the VMs break the abstraction layer that lets me move VMs to fairly different HW without bothering about device drivers? In your example, what happens if I move my VM to a host with an Intel NIC?

Anonymous

January 01, 2003

SR-IOV in Hyper-V is for networking. It has nothing to do with storage here.

Anonymous

May 22, 2014

Hi,

I want to be sure about a configuration we need to build for a customer. Here is the build for his server:

- Lenovo TS 140 70A4001LUX

- 4 x WD Red 3 TB in RAID 5

- 1 x SC-SA0T11-S1 from SIIG PCIe to 2.5in. SSD + 2 SATA

- 1 x SSD Samsung 840 Evo 250 GB

- Hyper-V server 2012 on the SSD

- Win Srv 2008 R2, Win 7, Win 8, CentOS, Debian and FreeNAS or OpenFiler on VMs

We would rather not write data to virtual disks, since we will need to access the data through the network with or without the VMs running. I found that VT-d or SR-IOV are protocols for writing directly to physical disk volumes.

- To enable SR-IOV, I need the intel NIC I350-T2

Here is where I may be missing something: SR-IOV is meant for accessing PCIe devices, so if my drives are plugged into SATA ports, will the VMs be able to write to them?

Am I missing something in my setup for it to work well?

Anonymous

November 19, 2015

With Windows Server 2016, we're introducing a new feature, called Discrete Device Assignment, in