Azure Network Latency & SQL Server Optimization

During some of my recent partner engagements, I had the opportunity to test in depth Azure network performance and the impact of latency on SQL Server running in Azure Virtual Machines (IaaS VMs). In this blog post I’m going to share some interesting findings, my testing methodology and a comparison with other Cloud providers. Finally, I will show you a little-known and rarely used SQL Server parameter that you can tune to reduce the effect of network latency.

Regarding the VM configuration, for Azure I used two “LARGE” VMs inside the same Virtual Network (VNET), deployed in the “West Europe” Azure datacenter (Amsterdam). I executed all tests on Monday afternoons (November 4, 11, 18 and 25), from 2:00pm to 6:00pm CET. I chose this day and these hours to get good measurements under normal workload conditions on the Azure infrastructure.

Testing in the Cloud

I spent the last two years mainly testing performance in the Cloud, on Azure and some other Cloud providers, and I have to say it is challenging because of the intrinsically “volatile” nature of this kind of platform. So here are my five “Don’t assume…” rules for approaching this kind of activity:

- Don’t assume all datacenters have the same performance:

  - For Azure, I observed roughly the same performance in all datacenters, but for other Cloud providers I found a noticeable difference between European and US datacenters.

- Don’t assume that weekends (Saturday, Sunday) or holiday periods will give you better or more stable results:

  - I cannot explain why (at least officially), but for Azure the worst testing periods, because of high network usage and variation in network performance, are Sunday morning and Sunday afternoon.

- Don’t assume network performance will remain the same over time:

  - In recent months I noticed great performance improvements on Azure that changed my test results almost every month, so be careful and periodically run fresh tests.

- Don’t assume network conditions will be the same on all days and at all hours:

  - Execute the same test series on different days and at different hours over an entire week, from Monday to Sunday;

  - The most stable hours for network testing are in the evening, after 9:00pm, but be careful: around midnight something generally happens (maintenance?) and the network fluctuates.

- Don’t assume that a single test run of 5 minutes is sufficient:

  - For every test, execute at least 20 runs and apply the 95th percentile rule;

  - For packet sizes under 1MB, I recommend sending 1 million packets with the PsPing tool (see below), then reducing to 50k - 100k packets for sizes above 1MB, otherwise the test completion time will be prohibitive;

  - You can use different test parameters, but you should generate enough activity for at least 10 - 20 minutes to get meaningful results;

  - On Azure I noticed larger differences between minimum and maximum latency values; some other Cloud providers seem to have more constant performance.
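The “at least 20 runs, then the 95th percentile” rule can be sketched in a few lines of Python. This is only an illustration: the latency values are made-up sample data, the function name is hypothetical, and the nearest-rank definition of a percentile is an assumption (other definitions interpolate between values and give slightly different numbers).

```python
import math

def percentile_nearest_rank(values, pct):
    """Return the pct-th percentile of values using the nearest-rank method."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    # 1-based rank of the smallest value that covers pct percent of the samples.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

# Hypothetical average latencies (ms) from 20 runs of the same test.
run_latencies_ms = [0.61, 0.58, 0.64, 0.59, 0.60, 0.62, 0.57, 0.63, 0.60, 0.59,
                    0.61, 0.58, 1.95, 0.60, 0.62, 0.59, 0.61, 0.60, 0.58, 0.63]

p95 = percentile_nearest_rank(run_latencies_ms, 95)
worst = max(run_latencies_ms)
print(f"p95 = {p95:.2f} ms, max = {worst:.2f} ms")
```

With 20 runs, the 95th percentile simply discards the single worst run, so one outlier caused by a transient network event does not dominate the reported figure.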

Finally, an important recommendation if you want to run your own network performance tests in Azure: to avoid having your deployment blacklisted, be sure to open a support case with Azure Support, otherwise you may be throttled by the infrastructure’s DDoS protection mechanisms. During my tests, I encountered problems when exceeding 10K packets per second on a single connection, and when the aggregate number of packets to a VIP reached 100K packets per second.
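To plan a test against the packet counts and durations mentioned above, a rough back-of-the-envelope estimate can help. The Python sketch below assumes a strictly sequential request/reply test (one packet in flight at a time, so the send rate is bounded by the round-trip time); that is a simplifying assumption, and the round-trip values are invented for illustration. The function name is hypothetical.

```python
def estimate_sequential_test(packet_count, avg_round_trip_ms):
    """Estimate duration (s) and send rate (packets/s) for a sequential test."""
    if packet_count <= 0 or avg_round_trip_ms <= 0:
        raise ValueError("packet_count and avg_round_trip_ms must be positive")
    duration_s = packet_count * avg_round_trip_ms / 1000
    packets_per_second = 1000 / avg_round_trip_ms
    return duration_s, packets_per_second

# Per-connection threshold reported in the text above.
PER_CONNECTION_LIMIT_PPS = 10_000

# (packet count, assumed average round trip in ms): illustrative values only.
scenarios = [(1_000_000, 0.6), (100_000, 8.0)]

for count, rtt_ms in scenarios:
    duration_s, pps = estimate_sequential_test(count, rtt_ms)
    status = "below" if pps < PER_CONNECTION_LIMIT_PPS else "at or above"
    print(f"{count} packets at {rtt_ms} ms: about {duration_s / 60:.1f} min, "
          f"{pps:.0f} packets/s ({status} the per-connection threshold)")
```

Under these assumptions, a single sequential connection stays well under the per-connection threshold; the risk grows when you run many connections in parallel against the same VIP.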

Azure Network Configuration and Optimization

The first, fairly obvious recommendation for Azure network configuration is to use the same “Affinity Group” (AG) for your Azure Virtual Network, your VMs and your storage placement.

You may be wondering why I’m using an Azure Virtual Network (VNET), since an AG should be enough to place all the VMs that need to communicate with each other as close together as possible, with minimum latency. The reasons are simple if you consider the facts below:

- An AG simply guarantees that all resources will be placed in the same Azure datacenter (DC), but nothing more than that;

- A VNET cannot span multiple Azure host clusters inside the datacenter, so if you join all your VMs to the same VNET, they will be in the same cluster, with the minimum network distance between each other.

What is really surprising is that latency tests across the Azure Load Balancer (LB) using the VIP (Virtual IP, external, through the Azure LB) produced almost the same results as internal network communication using the DIP (Direct IP, internal, no Azure LB). After some internal investigation, I discovered that Azure recently introduced a very nice network optimization: once a connection is established between two VMs, Azure recognizes that the communication is between two internal resources and allows direct communication, as in the case of a network connection using the DIP. This is a huge gain, since your application will be able to connect efficiently to a SQL Server VM (and to any other type of server-side VM) even if it is in a different Cloud Service!

SQL Server Network Latency Analysis

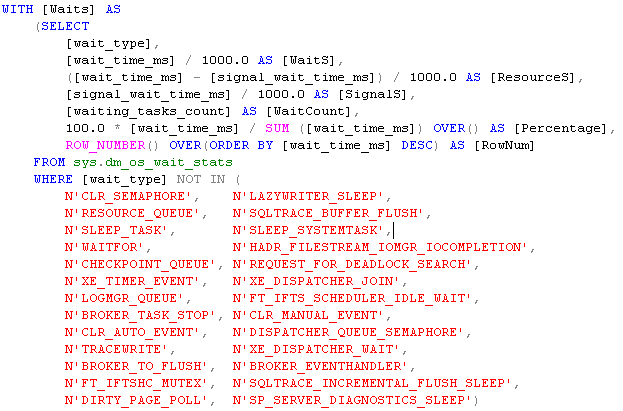

First of all, how can you effectively test and measure network latency in a typical client-server application scenario that uses SQL Server as the backend database? As you probably know, there is no performance counter or trace log that tracks network packet latency, but SQL Server is unique in providing a great yet simple mechanism inside the engine itself: it is called “Wait Types”, and you can query the details using a specific SQL Server Dynamic Management View (DMV) called “sys.dm_os_wait_stats”. If you are not a DBA or are not familiar with the SQL Server world, you can read about this DMV and “Wait Types” at the links below. I also provided a sample query in the attachment to this blog post, and you can find plenty of similar examples on the Internet:

Dynamic Management Views and Functions

http://msdn.microsoft.com/en-us/library/ms188754.aspx

sys.dm_os_wait_stats (Transact-SQL)

http://msdn.microsoft.com/en-us/library/ms179984.aspx

There are many examples on the Internet of queries that analyze SQL Server wait statistics; my favorite is the one below:

Wait statistics, or please tell me where it hurts http://www.sqlskills.com/blogs/paul/wait-statistics-or-please-tell-me-where-it-hurts

Now, to get practical: using the DMV mentioned above, you can look for a very specific wait type logged by the SQL Server engine, called “ASYNC_NETWORK_IO”. This is the official explanation from SQL Server Books Online:

Occurs on network writes when the task is blocked behind the network.

Verify that the client is processing data from the server.

Great! You might think we have the perfect tool to measure network latency. Unfortunately, it is not 100% accurate, for two reasons:

- This particular wait type includes not only network latency, but also the application’s delay in receiving (acknowledging) the query results sent back by SQL Server;

- The latency measured here covers only one direction of the network dialog between the application and SQL Server: latency for traffic going from the application server to SQL Server is not counted.

Even with these limitations, “ASYNC_NETWORK_IO” is a good indicator and, to my knowledge, the best tool available for this purpose, so go ahead and use it! How? Here is a simple list of steps:

- Before running your application workload, reset all wait statistics using the TSQL command below:

DBCC SQLPERF (N'sys.dm_os_wait_stats', CLEAR);

- Now run your workload;

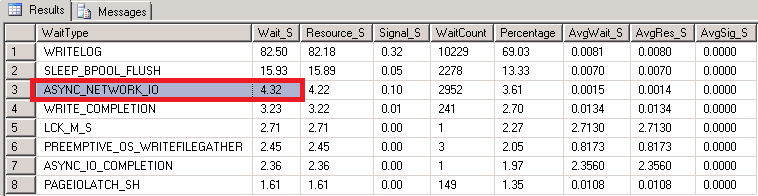

- At the end, run the TSQL query attached to this blog post and search for “ASYNC_NETWORK_IO” as shown in the print screen below (values reported in seconds):

What I described above is a SQL Server specific method to measure network latency with good approximation. In the next section I will show you a generic way to do it, independent of specific application scenarios and architectures.
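Once the wait statistics have been exported, reading them comes down to simple arithmetic. The Python sketch below is not the query attached to this post: it works on hypothetical rows shaped like three of the columns of sys.dm_os_wait_stats (wait_type, waiting_tasks_count, wait_time_ms), with invented numbers, and converts the “ASYNC_NETWORK_IO” entry to seconds, average wait per task and share of the total wait time. The function name is an assumption made for illustration.

```python
# Hypothetical rows, shaped like columns of sys.dm_os_wait_stats (invented values).
rows = [
    {"wait_type": "ASYNC_NETWORK_IO", "waiting_tasks_count": 50000, "wait_time_ms": 12500},
    {"wait_type": "WRITELOG", "waiting_tasks_count": 8000, "wait_time_ms": 5000},
    {"wait_type": "PAGEIOLATCH_SH", "waiting_tasks_count": 1000, "wait_time_ms": 2500},
]

def summarize_wait(rows, wait_type):
    """Summarize one wait type: seconds, average ms per wait, share of all waits."""
    total_ms = sum(r["wait_time_ms"] for r in rows)
    match = next((r for r in rows if r["wait_type"] == wait_type), None)
    if match is None:
        return None
    count = match["waiting_tasks_count"]
    return {
        "wait_s": match["wait_time_ms"] / 1000,
        "avg_wait_ms": match["wait_time_ms"] / count if count else 0.0,
        "share_pct": 100 * match["wait_time_ms"] / total_ms if total_ms else 0.0,
    }

summary = summarize_wait(rows, "ASYNC_NETWORK_IO")
print(summary)
```

Comparing these three numbers before and after a configuration change, on the same workload and after resetting the statistics, is usually more informative than looking at the raw cumulative value alone.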

General Network Latency Test

If you don’t have SQL Server, or you need to run more general network tests, for example to evaluate the quality of a Cloud platform, there is a great tool called “PsPing” by Microsoft Technical Fellow Mark Russinovich, part of the Sysinternals tool suite:

PsTools

http://technet.microsoft.com/en-us/sysinternals/bb896649.aspx

This tool is great for several reasons, but most importantly it can test both network latency and bandwidth. In the context of this blog post, let’s focus on latency. Here is how to run your first network latency test:

- After extracting the whole package, simply copy the “PsPing” executable to your source VM (application server) and to your target VM (database server);

- On the target VM, open a command prompt and run the following command:

psping -s 10.0.0.5:50000

- In this way, the PsPing process on the target VM will wait for a connection from the PsPing process that you will run later on the source VM. In this example, the process listens on the local IP address “10.0.0.5” and on TCP port “50000” (can be changed);

- Now, on the source VM, run the command below to reach the PsPing process that is listening on the target VM:

psping -4 -l 1024 -n 1000000 -h 10 10.0.0.5:50000

- You can learn the meaning of each parameter on the web page where you downloaded the tool. Here, it is enough to know that PsPing will test network latency over IPv4 [-4], using a request size of 1024 bytes [-l 1024] and one million packets [-n 1000000], directed to a VM (where another copy of PsPing is running) whose IP address is [10.0.0.5], over TCP port [50000];

- At the end, you will see an output similar to the one below, with latency results (in milliseconds) grouped into a histogram of 10 buckets [-h 10]:

Nice tool, isn’t it? The major (and probably the only) drawback is that this tool is not application specific, so you may see different results when testing real workloads.

Guest OS Network Optimization

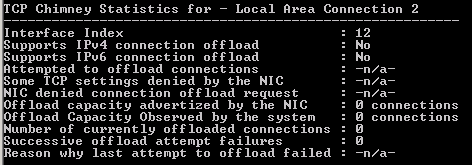

For my tests, I used Windows Server 2012 as the guest operating system. Looking at the network settings, everything appears as in a normal on-premises installation with regard to “TCP Task Offloading”, “Chimney” and “Jumbo Frames” support.

Netsh command to show NIC capabilities: netsh interface tcp show chimneystats <<NIC ID>>

Unfortunately, the virtual NIC used in the Azure VM does not support TCP Task Offloading or Chimney, so there is nothing you can tweak here. Jumbo Frames are disabled by default; I tried enabling them and tested all the available settings, but nothing changed significantly in the latency test results.

SQL Server Optimization

Even if you are familiar with SQL Server, you may never have heard of an instance-wide parameter called “network packet size (B)”, which is only accessible through the SP_CONFIGURE system stored procedure with advanced options turned on. This parameter, with a default value of 4KB, controls the TDS (Tabular Data Stream) protocol packet size that SQL Server uses to communicate with applications. In my 15 years of working with SQL Server, I had to change it only twice, and only for SAP, which needs a special value. You may also have heard many Microsoft SQL experts recommend not changing it.

Well, if you are running SQL Server in an Azure VM, this time you may want to consider tweaking this parameter, since I found it beneficial on the Azure network infrastructure. To measure its impact, I observed wait types, specifically “ASYNC_NETWORK_IO”. Here are the results I obtained testing a real application workload:

- SQL packet size default to 4KB = 13.81 seconds

- SQL packet size changed to 8KB = 9.74 seconds

- SQL packet size changed to 16KB = 6.47 seconds

- SQL packet size changed to 32KB = 0.00 seconds

NOTE: The maximum value for “network packet size (B)” is 32767 bytes on SQL Server 2012 (and many other SQL Server versions), not 32KB as reported for simplicity in the last row above.
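To put the measurements above in proportion, the short Python sketch below computes the relative reduction in “ASYNC_NETWORK_IO” wait time against the 4KB default, and, as a separate illustration, how many packets a payload would need at each size. The wait times are the ones listed above; the exact byte values used for 8KB and 16KB, the 1 MB payload and the function names are assumptions, and the packet count ignores per-packet protocol headers.

```python
import math

# ASYNC_NETWORK_IO wait times (seconds) from the results above, keyed by
# packet size in bytes (8192 and 16384 are assumed values for "8KB" and "16KB").
measured_wait_s = {4096: 13.81, 8192: 9.74, 16384: 6.47, 32767: 0.00}

# Assumed payload for illustration only: a 1 MB result set.
HYPOTHETICAL_RESULT_BYTES = 1024 * 1024

def wait_reduction_pct(baseline_s, value_s):
    """Relative reduction of value_s compared to baseline_s, in percent."""
    return 100 * (baseline_s - value_s) / baseline_s

def packets_needed(payload_bytes, packet_size_bytes):
    """Packets required to carry the payload, ignoring protocol headers."""
    return math.ceil(payload_bytes / packet_size_bytes)

baseline_s = measured_wait_s[4096]
for size_bytes, wait_s in measured_wait_s.items():
    reduction = wait_reduction_pct(baseline_s, wait_s)
    packets = packets_needed(HYPOTHETICAL_RESULT_BYTES, size_bytes)
    print(f"{size_bytes:>5} bytes: wait {wait_s:5.2f} s "
          f"({reduction:5.1f}% lower), {packets} packets per 1 MB")
```

Doubling the packet size halves the number of packets for the same payload, which is consistent with the wait time dropping by roughly 29% at 8KB and 53% at 16KB in my measurements.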

Now, I need to warn you about raising the value of this parameter: don’t assume it comes with no drawbacks. Raise it only if you have serious network performance problems and high “ASYNC_NETWORK_IO” waits. The biggest risk in raising this parameter is an increase in SQL Server multi-page allocations in its VAS (Virtual Address Space), causing memory pressure and fragmentation, so I recommend testing your application workload with the newly configured value for “network packet size (B)”. A safe value to experiment with is 8000 bytes. Details on how to change “network packet size (B)” and additional information can be found at the link below:

Configure the network packet size Server Configuration Option

http://technet.microsoft.com/en-us/library/ms177437.aspx

Finally, if you want to check the value in use for specific connections, you can run the “select * from sys.dm_exec_connections” TSQL query and look at the net_packet_size column. If you want to check the instance-wide value, you can use the “EXEC sp_configure 'network packet size'” TSQL query (with advanced options turned on, as mentioned above).

Final Test Results

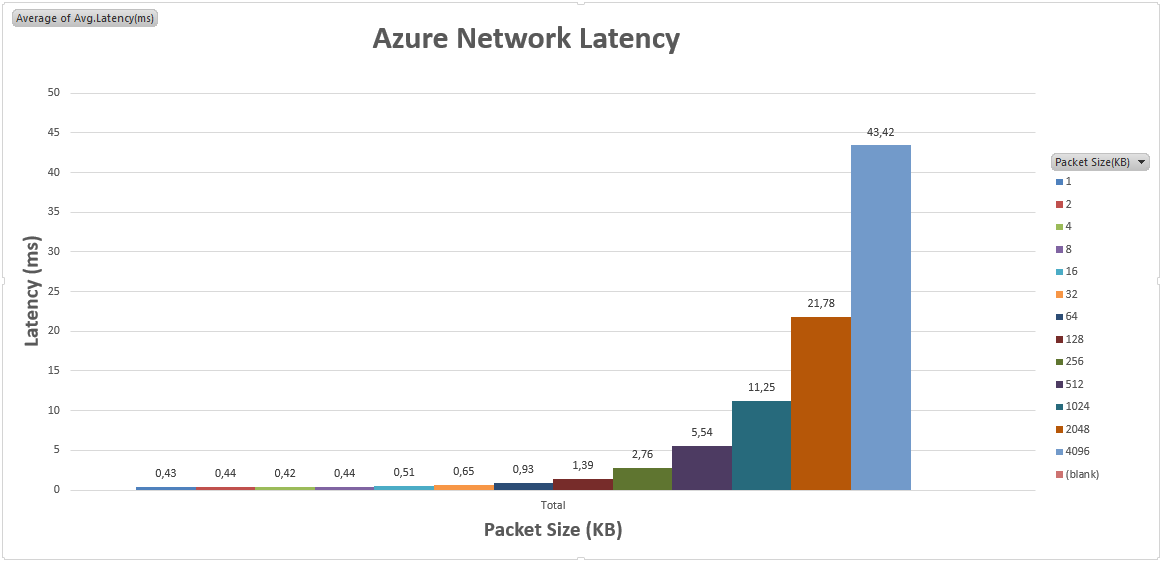

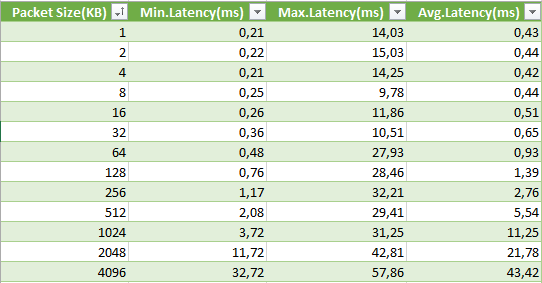

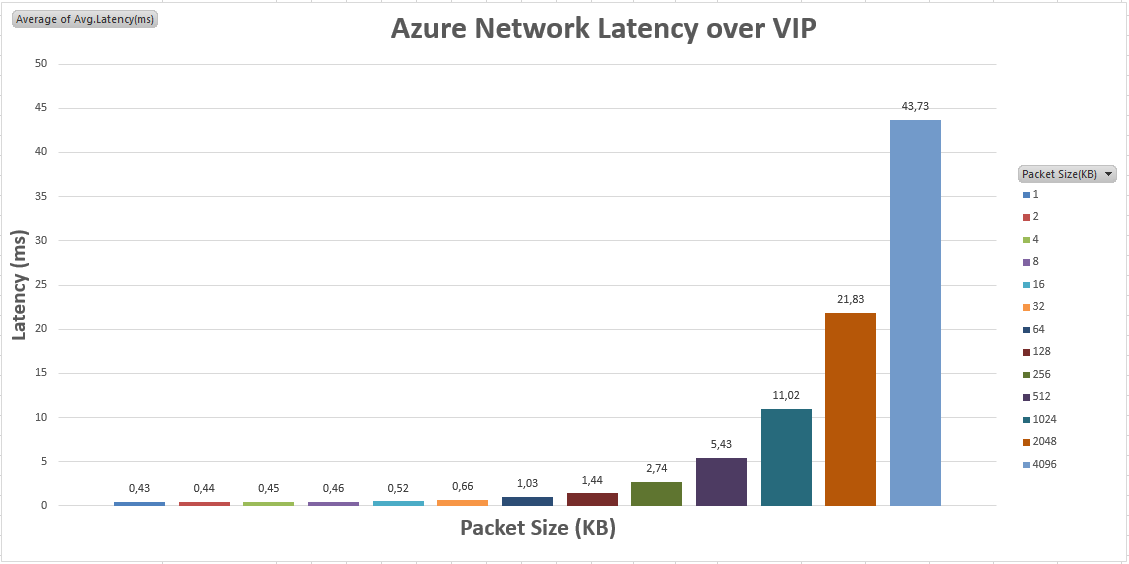

In the following section you can find the results of my tests. There are some very important conclusions we can draw; let me summarize them here and invite you to read the details afterwards:

- Except for the smallest network packet sizes (1KB-2KB), the Azure network clearly outperforms other Cloud provider networks, in some cases with 75% better latency;

- Up to a 64KB packet size, the Azure network is able to maintain sub-millisecond network latency;

- Comparing Azure network latency when traffic flows through the Azure Load Balancer (using the external VIP) with direct communication (using the internal DIP), the results are surprisingly similar, with a maximum overhead, in the worst case, of <0.3ms;

- Based on a real application workload I used as a test case, with over 20 minutes of network activity, using the VIP for application-to-SQL communication introduced only 1-2% overhead in the test completion time.

AZURE Network Latency Results using internal IP (DIP)

AZURE Network Latency Results using external IP (VIP)

I hope this information will be useful to you. If you have come across interesting facts or run other types of tests, please send me a mail at igorpag@microsoft.com; I will be happy to discuss the details with you and consolidate the information in this blog.

Regards.