Monitoring temperature sensors in remote locations with Twilio, Azure Automation and Log Analytics - Part 2

This is part two of my little three-part series on how to monitor sensors in remote locations with Twilio, Azure Automation and OMS. It describes the overall solution architecture, some technical challenges I faced, the solutions I found and the lessons I learned.

For the background of this blog series, please read part one. If you just want to see the result, please go straight to part three.

Overview solution architecture

Based on the background and ideas from part one, this is an overview of the whole solution architecture that I want to create:

Handling text messages with Twilio

Let's start with the text message handling. To use Twilio, you first have to subscribe to the service. Once you have a subscription, you have to buy an SMS-capable mobile phone number. With the subscription you also get an AccountSID and an AuthToken to authenticate against the Twilio service.

Sending text messages

OK, how can we send a text message to an arbitrary recipient? Twilio provides a REST API for this task and documents it very well. That makes translating it into PowerShell quite easy:

function send-twiliosms

{

param($RecipientNumber,

$SMSText )

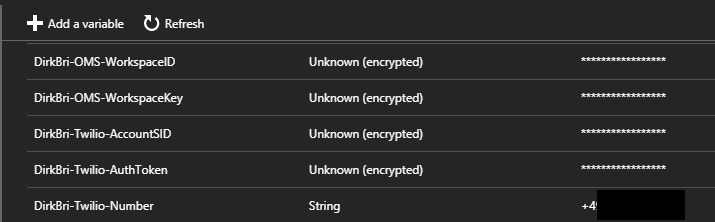

$TwilioSID = Get-AutomationVariable -Name "DirkBri-Twilio-AccountSID"

$TwilioToken = Get-AutomationVariable -Name "DirkBri-Twilio-AuthToken"

$TwilioNumber = Get-AutomationVariable -Name "DirkBri-Twilio-Number"

$secureAuthToken = ConvertTo-SecureString $TwilioToken -AsPlainText -force

$TwilioCredential = New-Object System.Management.Automation.PSCredential($TwilioSID,$secureAuthToken)

$TwilioMessageBody = @{

From = $TwilioNumber

To = $RecipientNumber

Body = $SMSText

}

$TwilioApiEndpoint= "https://api.twilio.com/2010-04-01/Accounts/$TwilioSID/Messages.json"

$Result = Invoke-RestMethod -Uri $TwilioApiEndpoint -Body $TwilioMessageBody -Credential $TwilioCredential -Method "POST" -ContentType "application/x-www-form-urlencoded"

return $Result

}

$Result = send-twiliosms -RecipientNumber $Device -SMSText $dicDevicesToTrigger.item($Device)

write-host $Result

As you can see, I have stored my number, the AccountSID and the AuthToken, along with my OMS workspace information, in (encrypted) Azure Automation variables for better and safer usage.

Receiving text messages

At the beginning of the project I was worried about how I could receive the text messages sent by the devices. Would I have to write some kind of polling mechanism? But then I checked the capabilities of my Twilio service and noticed this:

Twilio can call a Webhook once a message arrives! Yessss! And Azure Automation runbooks can be triggered by a Webhook :)

So receiving text messages is the easiest part. Twilio calls the Azure runbook by invoking the Webhook and hands over the text message in the Webhook data. The RequestBody property contains the text message information:
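To make the hand-over a bit more concrete, here is a minimal sketch of how a runbook could split the RequestBody into its fields. It assumes that Twilio posts the message as URL-encoded form fields such as From, Body and MessageSid and that the runbook receives them in `$WebhookData.RequestBody`. The function name `ConvertFrom-TwilioRequestBody` and the sample values are made up for illustration.

```powershell
# Hypothetical helper: turns a URL-encoded body such as
# "From=%2B4915100000001&Body=Hello+World" into a hashtable.
function ConvertFrom-TwilioRequestBody
{
    param([string]$RequestBody)

    $Fields = @{}
    foreach ($Pair in ($RequestBody -split '&'))
    {
        if ([string]::IsNullOrEmpty($Pair)) { continue }

        # Split only at the first "=" so that values may contain "=" themselves
        $Name, $Value = $Pair -split '=', 2

        # Form encoding uses "+" for spaces; replace it before decoding the %XX escapes
        $Name  = [System.Uri]::UnescapeDataString($Name.Replace('+', ' '))
        $Value = [System.Uri]::UnescapeDataString(([string]$Value).Replace('+', ' '))

        $Fields[$Name] = $Value
    }
    return $Fields
}

# In a runbook the input would be $WebhookData.RequestBody; here a sample string is used
$Sms = ConvertFrom-TwilioRequestBody -RequestBody 'From=%2B4915100000001&Body=Temp+21.5&MessageSid=SM123'
```

After this call, `$Sms.From`, `$Sms.Body` and `$Sms.MessageSid` can be used like normal properties in the rest of the runbook.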

Azure Runbooks

I decided to create two runbooks:

- One runbook that actively triggers the DRH-3015 devices to send their status message

- One runbook to receive all text messages, parse them and create an OMS custom log record out of it

Trigger text message runbook

The trigger runbook consists mainly of the send-twiliosms function explained above. It gets triggered once per day (or as often as you want and would like to pay for the text messages) and sends a trigger to all DRH-3015 devices.

Receive and process text message runbook

This runbook is a bit more complex and performs these tasks:

- Converts the URL-encoded Webhook data from JSON to a PowerShell object with multiple properties like sender, message body, SMS ID etc.

- Checks whether the sender is valid and approved. The runbook will not accept data from unknown numbers (devices) to prevent spam or malicious data.

- Parses the different text message formats and transforms them into a single, standardized hash table.

  If for any reason the device sends a message that cannot be transformed properly (e.g. due to an unknown format), the runbook treats this as an alert and sets the properties of the record accordingly.

  This is an important fallback in case my script has a flaw and cannot handle some specific kind of text message correctly.

- Converts the hash table into a JSON object and sends the JSON object to my OMS workspace using the REST API.
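The sender check and the alert fallback could be sketched roughly like this. Please note the assumptions: the phone numbers, the message format "Temp: 21.5 Humidity: 45", the state values and the function name `ConvertTo-SensorRecord` are all hypothetical. The real devices use their own formats, which are not shown here.

```powershell
# Hypothetical allow-list; a real runbook would probably load it from an Automation variable
$ApprovedSenders = @('+4915100000001', '+4915100000002')

function ConvertTo-SensorRecord
{
    param([string]$Sender, [string]$MessageBody, [string[]]$ApprovedSenders)

    # Do not accept data from unknown numbers
    if ($ApprovedSenders -notcontains $Sender) { return $null }

    $Record = @{
        TwilioSMSBody  = $MessageBody
        IsAlertMessage = $false
    }

    # Assumed message format, for illustration only: "Temp: 21.5 Humidity: 45"
    if ($MessageBody -match 'Temp:\s*(-?\d+(?:\.\d+)?)\s+Humidity:\s*(\d+(?:\.\d+)?)')
    {
        $Record.TemperatureValue = [double]$Matches[1]
        $Record.HumidityValue    = [double]$Matches[2]
        $Record.HealthState      = 'Healthy'   # assumed state value
    }
    else
    {
        # Fallback: anything that cannot be parsed is treated as an alert
        $Record.IsAlertMessage = $true
        $Record.HealthState    = 'Unknown'     # assumed state value
    }
    return $Record
}
```

The point of the design is that an unreadable message never gets dropped silently. It still ends up in OMS, just flagged as an alert together with the raw text message.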

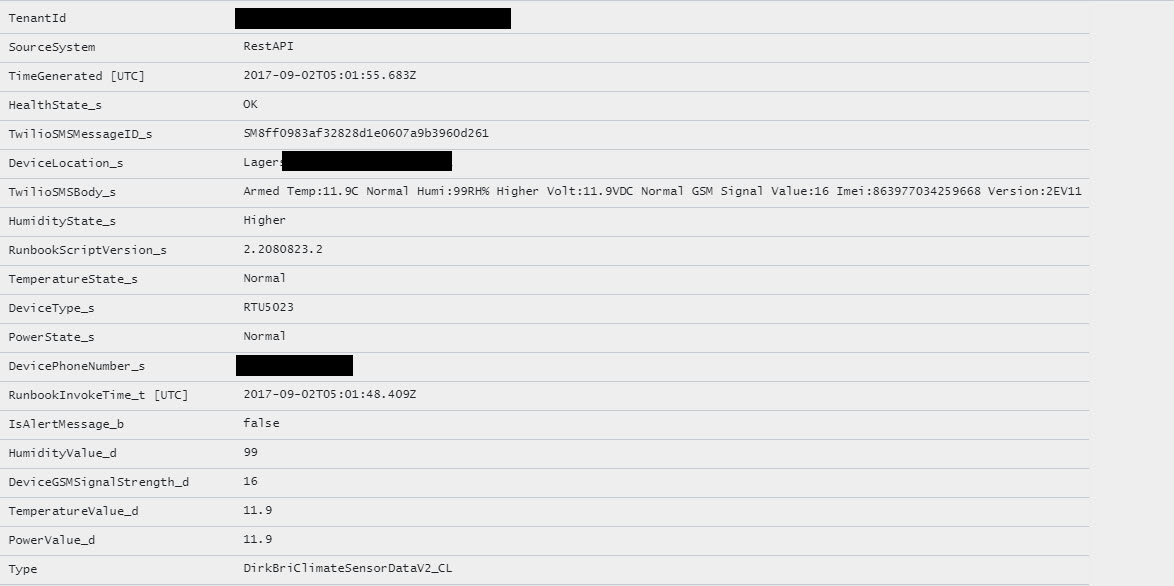

I use a custom OMS log called DirkBriClimateSensorDataV2_CL.

You can find detailed information on how to send data to OMS via PowerShell and the REST-API here and here
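For orientation, this is a condensed sketch of how such an upload can look with the HTTP Data Collector API. It is not my complete runbook code. The function names `Build-OmsSignature` and `Send-OmsRecord` are made up for this example, and the workspace ID and key are assumed to come from Automation variables like the Twilio settings.

```powershell
# Builds the SharedKey authorization header for the HTTP Data Collector API
function Build-OmsSignature
{
    param($WorkspaceId, $SharedKey, $Date, $ContentLength)

    # String to sign: method, body length, content type, x-ms-date header, resource
    $StringToSign = @('POST', $ContentLength, 'application/json', "x-ms-date:$Date", '/api/logs') -join "`n"

    $Hmac = New-Object System.Security.Cryptography.HMACSHA256
    $Hmac.Key = [Convert]::FromBase64String($SharedKey)
    $Hash = $Hmac.ComputeHash([Text.Encoding]::UTF8.GetBytes($StringToSign))
    $EncodedHash = [Convert]::ToBase64String($Hash)

    return ('SharedKey {0}:{1}' -f $WorkspaceId, $EncodedHash)
}

# Sends one record (hash table) to the given custom log
function Send-OmsRecord
{
    param($WorkspaceId, $SharedKey, $LogType, $Record)

    $Body = [Text.Encoding]::UTF8.GetBytes((ConvertTo-Json -InputObject $Record))
    $Date = [DateTime]::UtcNow.ToString('r')   # RFC 1123 date, also used in the signature

    $Headers = @{
        'Authorization' = (Build-OmsSignature -WorkspaceId $WorkspaceId -SharedKey $SharedKey -Date $Date -ContentLength $Body.Length)
        'Log-Type'      = $LogType             # the service appends "_CL" to this name
        'x-ms-date'     = $Date
    }

    $Uri = 'https://{0}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01' -f $WorkspaceId
    Invoke-RestMethod -Uri $Uri -Method Post -ContentType 'application/json' -Headers $Headers -Body $Body
}
```

With this sketch, the log type passed in would be DirkBriClimateSensorDataV2, because the "_CL" suffix is added automatically when the custom log is created.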

All these steps are relatively simple to realize with the incredible power of PowerShell. The most complicated part was designing the layout of the Hash Table, meaning what kind of properties I should send to OMS!

Properties for OMS custom log

Lesson learned

One of the most important lessons I learned during this project was:

You NEED to know what you want to achieve in OMS BEFORE you create the custom log record! That might sound simple, but I guess I created more than five different custom logs before one finally contained all the data that I needed to build my OMS dashboards and create my alerts.

I ended up with this JSON data structure created by the runbook:

A brief explanation of the properties specified:

- HealthState

  Allows me to show the overall health state of each device by showing the current (latest) HealthState value per device.

- HumidityValue, TemperatureValue, PowerValue

  Number values that will allow me to draw charts in OMS.

- HumidityState, TemperatureState, PowerState

  State values that allow me to show the individual component state of each device.

- IsAlertMessage

  Very important property that will be needed to create out-of-band alerts in OMS.

- DeviceType, PhoneNumer, Location

  Content fields to identify the different devices.

- TwilioSMSMessageID, RunbookInvokeTime, TwilioSMSBody, RunbookScriptVersion

  Additional information that will help troubleshooting in case of any issue.
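As a rough illustration, a record with a subset of these properties could be built like this before it is sent to OMS. The property names come from the list above, but every value here (states, numbers, IDs, location) is invented for the example.

```powershell
# Illustrative values only; a real record is filled from the parsed text message
$Record = [ordered]@{
    DeviceType           = 'DRH-3015'
    Location             = 'Example location'
    HealthState          = 'Healthy'
    TemperatureValue     = 21.5
    TemperatureState     = 'Healthy'
    HumidityValue        = 45
    HumidityState        = 'Healthy'
    PowerValue           = 100
    PowerState           = 'Healthy'
    IsAlertMessage       = $false
    TwilioSMSMessageID   = 'SM0000'
    TwilioSMSBody        = 'Example text message'
    RunbookScriptVersion = '1.0'
}

# The JSON string is what finally gets posted to the OMS workspace
$Json = ConvertTo-Json -InputObject $Record
```

Keeping the number values and the state values in separate properties is what makes it possible to chart the former and to filter or alert on the latter.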

Lesson learned

This is a lesson I learned when I started creating SCOM Management Packs: You can never have too much data for debugging and troubleshooting purposes :). By including this data in the OMS log record I can easily track, for example, a text message throughout the whole process, or determine the time difference between sending the data from Azure Automation to OMS and its availability for searching in Log Analytics.

This is what the same JSON data will look like in Azure Log Analytics once transformed into a custom log record:

Based on these properties I will hopefully be able to create the necessary dashboards and alerts in OMS. Let's see this in part three...