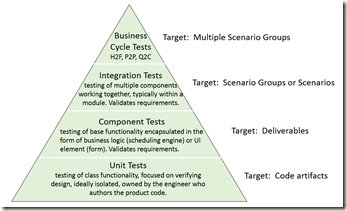

Test Automation Pyramid

Continuing the discussion on automated regression tests, this post focuses on the test granularities that lead to a test suite with the qualities discussed in my last post.

Test granularity refers to the scope of a test: how much of the system under test (SUT) it covers. A variety of terms are used for granularity, typically starting with 'unit' for tests that are narrow in scope. At the other extreme, tests that are broad in scope are typically called 'integration', 'scenario', or 'end to end (E2E)' tests.
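To make the difference in scope concrete, here is a minimal Python sketch using the standard `unittest` module. The system under test (`line_amount`, `apply_discount`, `invoice_total`) is entirely hypothetical and far smaller than a real ERP flow; the point is only that each unit test touches one function, while the broader test exercises the whole flow in a single check.

```python
import unittest


# Hypothetical SUT: two small units composed into a larger flow.
def line_amount(quantity: int, unit_price: float) -> float:
    """Unit: price of a single order line."""
    return quantity * unit_price


def apply_discount(amount: float, percent: float) -> float:
    """Unit: apply a percentage discount to an amount."""
    return amount * (1 - percent / 100)


def invoice_total(lines: list[tuple[int, float]], discount_percent: float) -> float:
    """Broader flow: sum every order line, then discount the subtotal."""
    subtotal = sum(line_amount(q, p) for q, p in lines)
    return round(apply_discount(subtotal, discount_percent), 2)


class UnitTests(unittest.TestCase):
    # Narrow scope: each test exercises one function, so a failure points at it directly.
    def test_line_amount(self):
        self.assertEqual(line_amount(3, 2.5), 7.5)

    def test_apply_discount(self):
        self.assertAlmostEqual(apply_discount(200.0, 10), 180.0)


class IntegrationTest(unittest.TestCase):
    # Broad scope: one test covers the whole flow; a failure could come from any step.
    def test_invoice_total(self):
        self.assertEqual(invoice_total([(3, 2.5), (1, 12.5)], 10), 18.0)
```

Running `python -m unittest` on the file runs both classes. If `test_invoice_total` fails, its name alone does not tell you which step broke, whereas a failing `test_apply_discount` points straight at the discount logic.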

The test pyramid is a simple visualization of the suite's make-up across different granularities. Do an image web search for 'test pyramid' and you'll get lots of examples. For Dynamics AX'7', we used a pyramid with four tiers, from the base up: unit, component, integration, and business cycle tests.

The test suite's base needs to be a large volume of unit and component tests. This is important because these tests score better on every one of the test qualities I discussed in the last blog post: reliable, maintainable, fast, simple, and precise. Hopefully this is self-evident, but for a much longer discussion of the topic, take a look at https://googletesting.blogspot.com/2015/04/just-say-no-to-more-end-to-end-tests.html.

The pyramid also indicates that some broader integration or business cycle tests are important. Even though these tests are more costly, they are very good for building confidence in the product. When they fail, however, they are less useful than granular tests. They are typically less reliable, so a failure may have a cause other than a product issue, and when it is a product issue, it can be challenging to isolate.

The next question you might have is about the ideal ratio between the tiers. That's a good question, and the answer depends on the context of your product, but my starting point is an order of magnitude difference between each tier. For example, having 1,000 unit tests, 100 component tests, 10 integration tests, and 1 business cycle test would be reasonable. We ended up with roughly this distribution for the test suite in AX'7'.
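The order-of-magnitude starting point is simple arithmetic. As a rough sketch (the function name `pyramid_targets` and the default ratio of 10 are illustrative assumptions, not a formula from our process), the target size of each tier can be derived from the size of the base:

```python
# Tiers ordered from the base of the pyramid to the top, as named in this post.
TIERS = ["unit", "component", "integration", "business cycle"]


def pyramid_targets(base_count: int, ratio: int = 10) -> dict[str, int]:
    """Starting heuristic: each tier is `ratio` times smaller than the tier below it."""
    targets = {}
    count = base_count
    for tier in TIERS:
        # Integer division can reach zero for small suites; keep at least one test per tier.
        targets[tier] = max(count, 1)
        count //= ratio
    return targets


print(pyramid_targets(1000))
# {'unit': 1000, 'component': 100, 'integration': 10, 'business cycle': 1}
```

`pyramid_targets(1000)` reproduces the example above. Treat the output as a starting point to adjust for your product, not as a quota.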

This all looks good in theory, and my experience with the last two major releases of AX provides strong evidence that it also works in practice. For AX 2012, our pyramid looked more like an hourglass. We had a lot of unit tests and an almost equal number of integration tests. The integration tests mostly worked through the user interface, and they were relatively slow, unreliable, and very costly to maintain. Their feedback value was significantly diminished because the failure rates for non-product reasons were high.
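One hypothetical way to spot an hourglass early is to compare each tier with the one beneath it and flag any tier that is not smaller. The sketch below does that; the function name `shape_violations` and the sample counts are made up for illustration and are not the actual AX 2012 or AX'7' numbers.

```python
def shape_violations(counts: list[tuple[str, int]]) -> list[str]:
    """Flag tiers that have at least as many tests as the tier directly below them.

    `counts` is ordered from the base of the pyramid to the top.
    An empty list means every tier is smaller than the one beneath it.
    """
    violations = []
    # Walk adjacent (lower, upper) pairs of tiers.
    for (lower_name, lower), (upper_name, upper) in zip(counts, counts[1:]):
        if upper >= lower:
            violations.append(f"{upper_name} ({upper}) >= {lower_name} ({lower})")
    return violations


# Hypothetical counts, for illustration only.
pyramid = [("unit", 1000), ("component", 100), ("integration", 10), ("business cycle", 1)]
hourglass = [("unit", 1000), ("component", 100), ("integration", 900), ("business cycle", 20)]

print(shape_violations(pyramid))    # []
print(shape_violations(hourglass))  # ['integration (900) >= component (100)']
```

Comparing adjacent tiers is a deliberately coarse check: it looks only at counts and says nothing about reliability or maintenance cost, which is where the AX 2012 integration tests really hurt.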

For AX'7', we have some new testability features (future post fodder!) and, as mentioned above, our suite follows the pyramid. The test suite runs at least an order of magnitude faster, and our pass rate is consistently above 99.5%. Maintenance cost is significantly lower and feedback value is significantly higher. We've also moved a large number of the tests further upstream in our development process to catch product and test issues earlier.