The Basics of Securing Applications: Part 3 - Secure Design and Analysis in Visual Studio 2010 and Other Tools

In this post, we’re continuing our One on One with Visual Studio conversation from May 26 with Canadian Developer Security MVP Steve Syfuhs, The Basics of Securing Applications. If you’ve just joined us, the conversation builds on the previous post, so check that out (links below) and then join us back here. If you’ve already read the previous post, welcome!

Part 1: Development Security Basics

Part 2: Vulnerabilities

Part 3: Secure Design and Analysis in Visual Studio 2010 and Other Tools (This Post)

In this session of our conversation, The Basics of Securing Applications, Steve shows us how we can identify potential security issues in our applications by using Visual Studio and other security tools.

Steve, back to you.

Every once in a while someone says to me, “hey Steve, got any recommendations on tools for testing security in our applications?” It's a pretty straightforward question, but I find it difficult to answer because people are often looking for something that will solve all of their security problems or, more likely, all the problems they know they don't know about. As we talked about last week (check out last week’s discussion), security is hard to get right, and there are a lot of things we don't necessarily think about when building applications.

Last time we only touched on four out of ten types of vulnerabilities that can be mitigated through the use of frameworks. That means that the other six need to be dealt with another way. For reference, here is the full list of ten:

- Injection

- Cross-Site Scripting (XSS)

- Broken Authentication and Session Management

- Insecure Direct Object References

- Cross-Site Request Forgery (CSRF)

- Security Misconfiguration

- Insecure Cryptographic Storage

- Failure to Restrict URL Access

- Insufficient Transport Layer Protection

- Unvalidated Redirects and Forwards

None of these can actually be fixed by tools either. Well, that's a bit of a downer. Let me rephrase that a bit: these vulnerabilities cannot be fixed by tools, but some can be found by tools. This brings up an interesting point. There is a major difference between identifying vulnerabilities with tools and fixing vulnerabilities with tools. We will fix vulnerabilities by writing secure code, but we can find vulnerabilities with the use of tools.

Remember what I said in our conversation two weeks ago (paraphrased): the SDL is about defense in depth. This is a concept of providing or creating security measures at multiple layers of an application. If one security measure fails, there will be another one that hasn't failed to continue to protect the application or data. It stands to reason then that there is no single thing we can do to secure our applications and, by extension, no single tool we can use to find all of our vulnerabilities.

There are different types of tools to use at different points in the development lifecycle. In this article we will look at tools that we can use within three of the seven stages of the lifecycle: design, implementation, and verification.

Within these stages we will look at some of their processes, and some tools to simplify the processes.

Design

Next to training, design is probably the most critical stage of developing a secure application because bugs that show up here are the most expensive to fix throughout the lifetime of the project.

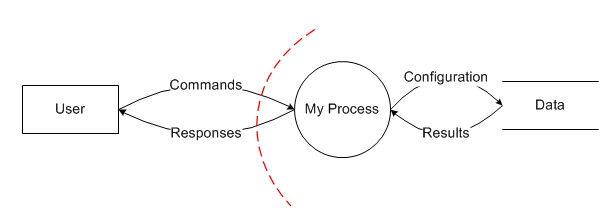

Once we've defined our secure design specifications, e.g. the privacy or cryptographic requirements, we need to create a threat model that defines all the different ways the application can be attacked. It's important for us to create such a model because an attacker will absolutely create one, and we don't want to be caught with our pants down. This usually goes hand in hand with architecting the application, as changes to either will usually affect the other. Here is a simple threat model:

It shows the flow of data to and from different pieces of the overall system, with a security boundary (red dotted line) separating the user's access and the process. This boundary could, for example, show the endpoint of a web service.

However, this is only half of it. We need to actually define the threat. This is done through what's called the STRIDE approach. STRIDE is an acronym that is defined as:

| Name | Security Property | Huh? |
| --- | --- | --- |
| Spoofing | Authentication | Are you really who you say you are? |
| Tampering | Integrity | Has this data been compromised or modified without knowledge? |
| Repudiation | Non-repudiation | The ability to prove (or disprove) the validity of something like a transaction. |
| Information Disclosure | Confidentiality | Are you only seeing data that you are allowed to see? |
| Denial of Service | Availability | Can you access the data or service reliably? |
| Elevation of Privilege | Authorization | Are you allowed to actually do something? |

We then analyze each point on the threat model for STRIDE. For instance:

| Element | Spoofing | Tampering | Repudiation | Info Disclosure | DoS | Elevation |
| --- | --- | --- | --- | --- | --- | --- |
| Data | True | True | True | True | False | True |

It's a bit of a contrived model because it's fairly vague, but it makes us ask these questions:

- Is it possible to spoof a user when modifying data? Yes: There is no authentication mechanism.

- Is it possible to tamper with the data? Yes: the data can be modified directly.

- Can you prove the change was done by someone in particular? No: there is no audit trail.

- Can information be leaked out of the configuration? Yes: you can read the data directly.

- Can you disrupt service of the application? No: the data is always available (actually, this isn't well described – a big problem with threat models which is a discussion for another time).

- Can you access more than you are supposed to? Yes: there is no authorization mechanism.
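The grid and the questions above can also be written down as a small data structure, which makes it easier to list the open threats for each element. This is only a minimal sketch: the `ElementThreats` class and its fields are hypothetical names made up for illustration, and they are not the file format or API of any SDL tool.

```python
from dataclasses import dataclass

# STRIDE threat names mapped to the security property each one violates,
# copied from the table in this post.
STRIDE = {
    "Spoofing": "Authentication",
    "Tampering": "Integrity",
    "Repudiation": "Non-repudiation",
    "Information Disclosure": "Confidentiality",
    "Denial of Service": "Availability",
    "Elevation of Privilege": "Authorization",
}


@dataclass
class ElementThreats:
    """One row of the threat grid (hypothetical structure for illustration)."""
    name: str
    exposed: dict  # threat name -> True if the element is exposed to it

    def open_threats(self):
        # Keep STRIDE order so the output lines up with the grid columns.
        return [t for t in STRIDE if self.exposed.get(t, False)]


# The "Data" row from the example grid.
data = ElementThreats("Data", {
    "Spoofing": True,
    "Tampering": True,
    "Repudiation": True,
    "Information Disclosure": True,
    "Denial of Service": False,
    "Elevation of Privilege": True,
})

for threat in data.open_threats():
    print(f"{data.name}: {threat} puts {STRIDE[threat]} at risk")
```

Each line printed is a threat that still needs a mitigation, which is the same conclusion the questions lead to.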

For more information you can check out the Patterns and Practices Team article on creating a threat model.

This can get tiresome very quickly. Thankfully Microsoft came out with a tool called the SDL Threat Modeling tool. You start with a drawing board to design your model (like the one shown above) and then you define its STRIDE characteristics:

Which is basically just a grid of fill-in-the-blanks which opens up into a form:

Once you create the model, the tool will auto-generate a few well-known characteristics of the types of interactions between elements and provide a bunch of useful questions to ask for less common interactions. It's at this point that we can start to get a feel for where the application weaknesses exist, or will exist. If we compare this to our six vulnerabilities above, we've done a good job of finding a couple. We've already come across an authentication problem and potentially a problem with direct access to data. Next week, we will look at ways of fixing these problems.

After a model has been created we need to define the attack surface of the application, and then we need to figure out how to reduce the attack surface. This is a fancy way of saying that we need to know all the different ways that other things can interact with our application. This could be a set of APIs, or generic endpoints like port 80 for HTTP:

Attack surface could also include changes in permissions to core Windows components. Essentially, we need to figure out the changes our application introduces to the known state of the computer it's running on. Aside from analysis of the threat model, there is no easy way to do this before the application is built. Don't fret just yet though, because there are quite a few tools available to us during the verification phase which we will discuss in a moment. Before we do that though, we need to actually write the code.

Implementation

Visual Studio 2010 has some pretty useful features that help with writing secure code. First are the code analysis tools:

There is a rule set specifically for security:

If you open it you are given a list of 51 rules to validate whenever you run the test. This encompasses a few of the OWASP top 10, as well as some .NET specifics.

When we run the analysis we are given a set of warnings:

They are a good sanity check whenever you build the application, or check it into source control.

In prior versions of Visual Studio you had to run FxCop to analyze your application, but Visual Studio 2010 calls into FxCop directly. These rules were migrated from CAT.NET, a plugin for Visual Studio 2008. There is a V2 version of CAT.NET in beta hopefully to be released shortly.

If you are writing unmanaged code, there are a couple of extras that are available to you too.

One major source of security bugs in unmanaged code is a well-known set of functions that manipulate memory and are easy to call incorrectly. These are collectively called the banned functions, and Microsoft has released a header file that deprecates them:

    #pragma deprecated (_mbscpy, _mbccpy)
    #pragma deprecated (strcatA, strcatW, _mbscat, StrCatBuff, StrCatBuffA, StrCatBuffW, StrCatChainW, _tccat, _mbccat)

Microsoft also released an ATL template that allows you to restrict which domains or security zones your ActiveX control can execute.

Finally, there is a set of code analysis rules for unmanaged code as well. You can enable these to run on build:

These tools should be run often, and the warnings should be fixed as they appear because when we get to verification, things can get ugly.

Verification

In theory, if we have followed the guidelines for the phases of the SDL, verification of the security of the application should be fairly tame. In the previous section I said things can get ugly; what gives? Well, verification is sort of the Rinse-and-Repeat phase. It's testing. We write code, we test it, we get someone else to test it, we fix the bugs, and repeat.

The SDL has certain requirements for testing. If we don't meet these requirements, it gets ugly. Therefore we want to get as close as possible to secure code during the implementation phase. For instance, if you are writing a file format parser you have to run it through 100,000 different files of varying integrity. In the event that something catastrophically bad happens on one of these malformed files (which equates to it doing anything other than failing gracefully), you need to fix the bug and re-run the 100,000 files – preferably with a mix of new files as well. This may not seem too bad, until you realize how long it takes to process 100,000 files. Imagine it takes 1 second to process a single file:

100,000 files * 1 second per file = 100,000 seconds

100,000 seconds / 60 seconds per minute = ~1,667 minutes

1,667 minutes / 60 minutes per hour = ~28 hours
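The same back-of-the-envelope estimate can be scripted so it is easy to re-run with your own numbers. This is just a sketch of the arithmetic above; the one-second-per-file figure is the assumption from the text, and `fuzz_run_hours` is a made-up helper name.

```python
def fuzz_run_hours(files: int, seconds_per_file: float) -> float:
    """Estimate how many hours a single sequential pass over the files takes."""
    total_seconds = files * seconds_per_file
    total_minutes = total_seconds / 60
    return total_minutes / 60


hours = fuzz_run_hours(files=100_000, seconds_per_file=1.0)
print(f"{hours:.1f} hours")  # prints "27.8 hours"
```

Every bug fix means another full pass, so the total cost is this number multiplied by however many times you have to start over.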

This is a time consuming process. Luckily Microsoft has provided some tools to help. First up is MiniFuzz.

Fuzzing is the process of taking a good copy of a file and manipulating the bits in different ways; some random, some specific. This file is then passed through your custom parser… 100,000 times. To use this tool, set the path of your application in the process to fuzz path, and then a set of files to fuzz in the template files path. MiniFuzz will go through each file in the templates folder and randomly manipulate them based on aggressiveness, and then pass them to the process.
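To make the idea concrete, here is a minimal sketch of the random half of that process: take a known-good template, flip a few bits, and feed the result to a parser. It does not show how MiniFuzz itself works internally. The `parse` function is a hypothetical stand-in for your own parser, the `DEMO` file format is invented for this example, and `aggressiveness` here simply means the fraction of bytes that get a bit flipped.

```python
import random


def mutate(template: bytes, aggressiveness: float, rng: random.Random) -> bytes:
    """Return a copy of the template with random single-bit flips applied."""
    data = bytearray(template)
    if not data:
        return bytes(data)
    # Always flip at least one bit so every sample differs from the template
    # when only one flip is made.
    flips = max(1, int(len(data) * aggressiveness))
    for _ in range(flips):
        position = rng.randrange(len(data))
        data[position] ^= 1 << rng.randrange(8)  # flip one random bit
    return bytes(data)


def parse(blob: bytes) -> int:
    """Hypothetical parser: 4-byte magic 'DEMO', 1-byte length, then payload."""
    if len(blob) < 5 or blob[:4] != b"DEMO":
        raise ValueError("bad header")
    length = blob[4]
    if len(blob) - 5 < length:
        raise ValueError("truncated payload")
    return length


def fuzz(template: bytes, iterations: int, aggressiveness: float = 0.05, seed: int = 0):
    """Run the parser over mutated samples and count how each one fails."""
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    graceful = crashes = 0
    for _ in range(iterations):
        sample = mutate(template, aggressiveness, rng)
        try:
            parse(sample)
        except ValueError:
            graceful += 1  # rejected cleanly, which is what we want
        except Exception:
            crashes += 1   # anything else is a bug to investigate
    return graceful, crashes


TEMPLATE = b"DEMO\x03abc"
graceful, crashes = fuzz(TEMPLATE, iterations=1000)
print(f"rejected cleanly: {graceful}, crashes: {crashes}")
```

The important distinction is in the two `except` branches: a clean rejection of a malformed file is a pass, while any other exception counts as the kind of failure that sends you back to fix the bug and re-run the set.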

Regular expressions also run into a similar testing requirement, so there is a Regex Fuzzer too.

An often overlooked part of the development process is the compiler. Sometimes we compile our applications with the wrong settings. BinScope Binary Analyzer can help us as it will verify a number of things like compiler versions, compiler/linker flags, proper ATL headers, use of strongly named assemblies, etc. You can find more information on these tools on the SDL Tools site.

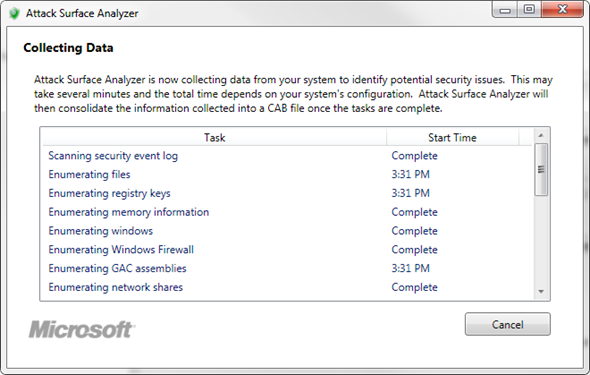

Finally, we get back to attack surface analysis. Remember how I said there aren't really any tools to help with analysis during the design phase? Luckily, there is a pretty useful tool available to us during the verification phase, aptly named the Attack Surface Analyzer. It's still in beta, but it works well.

The analyzer goes through a set of tests and collects data on different aspects of the operating system:

The analyzer is run twice: once before your application is installed or deployed, and once after. The analyzer will then do a delta on all of the data it collected and return a report showing the changes.
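The before-and-after comparison is essentially a set difference per category. The sketch below shows that idea only; the snapshot layout, the category names, and the `DemoAgent` service are invented for illustration and do not reflect the data the Attack Surface Analyzer actually collects or how it stores it.

```python
def snapshot_delta(before: dict, after: dict) -> dict:
    """Return what was added and removed in each category between two snapshots."""
    delta = {}
    for category in sorted(set(before) | set(after)):
        old = before.get(category, set())
        new = after.get(category, set())
        added, removed = new - old, old - new
        if added or removed:
            delta[category] = {"added": sorted(added), "removed": sorted(removed)}
    return delta


# Hypothetical snapshots taken before and after installing an application.
baseline = {"open_ports": {80}, "services": {"W3SVC"}}
installed = {"open_ports": {80, 8080}, "services": {"W3SVC", "DemoAgent"}}

report = snapshot_delta(baseline, installed)
for category, changes in report.items():
    print(category, changes)
```

Everything that shows up under `added` is new attack surface that the application introduced, so each entry is a candidate for removal or lockdown.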

The goal is to reduce attack surface. Therefore we can use the report as a baseline to modify the default settings to reduce the number of changes.

It turns out that our friends on the SDL team released a new tool just hours ago. It's the Web Application Configuration Analyzer v2, so while not technically a new tool, it's a new version of a tool. Cool! Let's take a look:

It's essentially a rules engine that checks for certain conditions in the web.config files for your applications. It works by looking at all the web sites and web applications in IIS and scanning through the web.config files for each one.
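A rules engine of this kind can be sketched in a few lines: each rule is a function that inspects the parsed configuration and returns pass or fail. The two rules below (debug compilation and custom errors) are illustrative picks of well-known web.config settings, not a list of what the Web Application Configuration Analyzer actually checks, and the rule names and `scan` function are hypothetical.

```python
import xml.etree.ElementTree as ET


def debug_disabled(root) -> bool:
    """Pass unless <compilation debug="true"> is present."""
    node = next(root.iter("compilation"), None)
    return node is None or node.get("debug", "false").lower() != "true"


def custom_errors_not_off(root) -> bool:
    """Pass unless <customErrors mode="Off"> is present.

    A missing element is treated as passing in this sketch.
    """
    node = next(root.iter("customErrors"), None)
    return node is None or node.get("mode", "").lower() != "off"


# Each rule is a (description, check function) pair.
RULES = [
    ("Debug compilation disabled", debug_disabled),
    ("Custom errors not turned off", custom_errors_not_off),
]


def scan(config_xml: str) -> dict:
    """Run every rule against one web.config document."""
    root = ET.fromstring(config_xml)
    return {name: check(root) for name, check in RULES}


SAMPLE = """<configuration>
  <system.web>
    <compilation debug="true" />
    <customErrors mode="Off" />
  </system.web>
</configuration>"""

results = scan(SAMPLE)
for name, passed in results.items():
    print(("PASS" if passed else "FAIL"), name)
```

Keeping the rules as a plain list means a new check is one more function and one more entry, which is roughly why a rules engine is a good fit for configuration scanning.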

The best part is that you can scan multiple machines at once, so you know your entire farm is running with the same configuration files. It doesn't take very long to do the scan of a single system, and when you are done you can view the results:

It's interesting to note that this was a scan of my development machine (don't ask why it's called TroyMcClure), and there are quite a number of failed tests. All developers should run this tool on their development machines, not only for their own security, but so that their applications can be developed in the most secure environment possible.

Final Thoughts

It's important to remember that tools will not secure our applications. They can definitely help find vulnerabilities, but we cannot rely on them to solve all of our problems.

Looking forward to continuing the conversation.

About Steve Syfuhs

Steve Syfuhs is a bit of a Renaissance Kid when it comes to technology: part developer, part IT Pro, part consultant working for ObjectSharp. Steve spends most of his time in the security stack with special interests in Identity and Federation. He recently received a Microsoft MVP award in Developer Security. You can find his ramblings about security at www.steveonsecurity.com.