Mahout with HDInsight

My name is Sudhir and I work on the Microsoft HDInsight support team. The other day my colleague Dan and I were discussing Mahout, which got me thinking about how it can be used with HDInsight.

[* Note: HDInsight 3.1 comes with the Mahout package installed. If you are using HDInsight 3.1, you can skip parts of this post, such as uploading the Mahout jar file. Please have a look here to find out how to run a Mahout job on HDInsight 3.1.]

I investigated further how Mahout can be used with HDInsight, and I feel it's good information to share. First I tried running it from the head node over RDP, and then I tried PowerShell. Running Mahout from the head node is not a recommended approach: if the cluster gets reimaged, any configuration changes made on the node are lost. So I skipped that approach and focused on PowerShell.

Before I start, I want to mention that Mahout is not supported by Microsoft.

If you want to read more about Mahout, click here. I'll be using the RecommenderJob class for this example. More information about the class can be found here.

Here are step-by-step instructions for using Mahout on HDInsight through PowerShell.

Copy the following files to a folder on your local machine:

- https://repo2.maven.org/maven2/org/apache/mahout/mahout-core/0.8/mahout-core-0.8-job.jar: the Mahout job jar
- https://github.com/rawatsudhir/Samples/blob/master/ItemID.txt: contains userID, itemID and preference value
- https://github.com/rawatsudhir/Samples/blob/master/users.txt: contains the userIDs to generate recommendations for
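Before uploading, it can help to check that the preference file has the shape RecommenderJob reads. The sketch below assumes each line of ItemID.txt is `userID,itemID[,value]` with integer IDs (Mahout also accepts a tab as the separator). `Test-MahoutPreferenceLine` is a hypothetical helper name, not part of Mahout or Azure PowerShell.

```powershell
# Hypothetical helper: returns $true when a line looks like userID,itemID[,value]
# with integer IDs and an optional numeric value (comma- or tab-separated).
function Test-MahoutPreferenceLine {
    param([string]$Line)

    $fields = $Line.Trim() -split '[,\t]'
    if ($fields.Count -lt 2 -or $fields.Count -gt 3) { return $false }

    # Mahout uses long (64-bit integer) user and item IDs.
    [long]$id = 0
    if (-not [long]::TryParse($fields[0], [ref]$id)) { return $false }
    if (-not [long]::TryParse($fields[1], [ref]$id)) { return $false }

    # The preference value is optional; when present it must be a number.
    if ($fields.Count -eq 3) {
        [double]$value = 0
        $styles = [System.Globalization.NumberStyles]::Float
        $culture = [System.Globalization.CultureInfo]::InvariantCulture
        if (-not [double]::TryParse($fields[2], $styles, $culture, [ref]$value)) { return $false }
    }
    return $true
}
```

For example, `Get-Content .\ItemID.txt | Where-Object { -not (Test-MahoutPreferenceLine $_) }` lists any lines that do not match (blank lines included).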

Next, upload the files above. Open a PowerShell window and run the script below once for each file.

```powershell
$subscriptionName = "<subscription name>"
$storageAccountName = "<storage account>"
$containerName = "<container>"
$fileName = "<Location\FileName>"

# Upload the file under the mahout folder
$blobName = "<mahout/FileName>"

# Select the subscription and get the storage account key
Select-AzureSubscription -SubscriptionName $subscriptionName
$storageAccountKey = Get-AzureStorageKey $storageAccountName | %{ $_.Primary }

# Create the storage context object
$destContext = New-AzureStorageContext -StorageAccountName $storageAccountName -StorageAccountKey $storageAccountKey

# Copy the file from the local workstation to the blob container
Set-AzureStorageBlobContent -File $fileName -Container $containerName -Blob $blobName -Context $destContext
```
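Since the upload script has to be run once per file, a small loop saves some repetition. This is a sketch: `Get-MahoutUploadPlan` and `Publish-MahoutFiles` are hypothetical helper names, and the second function assumes the Azure PowerShell storage cmdlets and a storage context such as `$destContext` from the script above.

```powershell
# Hypothetical helper: pairs each local file with a blob name under the mahout/ folder,
# keeping the local file name (and its case) as the blob name.
function Get-MahoutUploadPlan {
    param([string[]]$LocalFiles, [string]$Folder = "mahout")

    foreach ($file in $LocalFiles) {
        [pscustomobject]@{
            File = $file
            Blob = "$Folder/" + [System.IO.Path]::GetFileName($file)
        }
    }
}

# Hypothetical helper: uploads every planned file with Set-AzureStorageBlobContent.
# -Force overwrites an existing blob without prompting.
function Publish-MahoutFiles {
    param([object[]]$Plan, [string]$Container, $Context)

    foreach ($item in $Plan) {
        Set-AzureStorageBlobContent -File $item.File -Container $Container -Blob $item.Blob -Context $Context -Force
    }
}
```

A possible call, with made-up local paths: `$plan = Get-MahoutUploadPlan -LocalFiles "C:\mahout\mahout-core-0.8-job.jar", "C:\mahout\ItemID.txt", "C:\mahout\users.txt"` followed by `Publish-MahoutFiles -Plan $plan -Container $containerName -Context $destContext`. Taking the blob name from the local file name keeps ItemID.txt spelled exactly as the job script expects, which matters because blob names are case-sensitive.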

Next, copy the script below (or get it from here) to submit the Mahout job.

```powershell
# Cluster name
$clusterName = "<ClusterName>"

# Subscription name
$subscriptionName = "<SubscriptionName>"
$containerName = "<containerName>"
$storageAccountName = "<StorageAccountName>"

Select-AzureSubscription -SubscriptionName $subscriptionName

# Assumes mahout-core-0.8-job.jar was uploaded to the mahout folder
$mahoutJob = New-AzureHDInsightMapReduceJobDefinition -JarFile "wasb://$containerName@$storageAccountName.blob.core.windows.net/mahout/mahout-core-0.8-job.jar" -ClassName "org.apache.mahout.cf.taste.hadoop.item.RecommenderJob"

# Add the similarity class name argument (-s is short for --similarityClassname)
$mahoutJob.Arguments.Add("-s")

# Add the similarity measure to use; other similarity class names can be used as well
$mahoutJob.Arguments.Add("SIMILARITY_COOCCURRENCE")

# Add the input file argument
$mahoutJob.Arguments.Add("-i")

# Add the location of the input file, stored on Windows Azure Storage Blob.
# Blob names are case-sensitive, so this must match the uploaded name (ItemID.txt).
$mahoutJob.Arguments.Add("wasb://$containerName@$storageAccountName.blob.core.windows.net/mahout/ItemID.txt")

# Add the usersFile argument
$mahoutJob.Arguments.Add("--usersFile")

# Add the users file location
$mahoutJob.Arguments.Add("wasb://$containerName@$storageAccountName.blob.core.windows.net/mahout/users.txt")

# Add the output argument
$mahoutJob.Arguments.Add("--output")

# Add the output location; the results are written here
$mahoutJob.Arguments.Add("wasb://$containerName@$storageAccountName.blob.core.windows.net/mahout/output")

# Start the job
$mahoutJobProcessing = Start-AzureHDInsightJob -Cluster $clusterName -JobDefinition $mahoutJob

# Wait for the job to complete (timeout: one hour)
Wait-AzureHDInsightJob -Job $mahoutJobProcessing -WaitTimeoutInSeconds 3600

# Show the job's standard error output, if any
Get-AzureHDInsightJobOutput -Cluster $clusterName -JobId $mahoutJobProcessing.JobId -StandardError
```
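The eight `Arguments.Add` calls in the job script can also be built as one list, which makes it easier to switch the similarity measure. A minimal sketch, assuming the same `mahout` folder layout in the container; `Get-RecommenderJobArguments` is a hypothetical helper. Mahout 0.8 also lists other predefined measures such as SIMILARITY_LOGLIKELIHOOD and SIMILARITY_COSINE, but check the RecommenderJob documentation for the version you use.

```powershell
# Hypothetical helper: returns the RecommenderJob arguments used in the job script,
# with the similarity measure as a parameter.
function Get-RecommenderJobArguments {
    param(
        [string]$Container,
        [string]$Account,
        [string]$Similarity = "SIMILARITY_COOCCURRENCE"
    )

    # All input and output paths live under the mahout folder of the container.
    $root = "wasb://$Container@$Account.blob.core.windows.net/mahout"

    @(
        "-s", $Similarity,
        "-i", "$root/ItemID.txt",
        "--usersFile", "$root/users.txt",
        "--output", "$root/output"
    )
}
```

After creating `$mahoutJob`, the individual calls could then be replaced by `Get-RecommenderJobArguments -Container $containerName -Account $storageAccountName | ForEach-Object { [void]$mahoutJob.Arguments.Add($_) }`.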

Run the script above and wait for the job to complete.

Once the job is done, the results can be found in the output directory (mahout/output in the container).

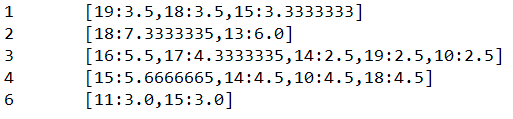

The output lists each userID with its recommended itemIDs and their scores.
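To work with the results in PowerShell, each output line can be split into user, item and score. This sketch assumes the usual RecommenderJob text output, one line per user of the form `userID<TAB>[itemID:score,itemID:score,...]`; `ConvertFrom-RecommenderOutput` is a hypothetical helper name.

```powershell
# Hypothetical helper: turns a RecommenderJob output line such as
# "3<TAB>[103:4.5,102:3.0]" into one object per recommended item.
function ConvertFrom-RecommenderOutput {
    param([Parameter(ValueFromPipeline = $true)][string]$Line)

    process {
        if ([string]::IsNullOrWhiteSpace($Line)) { return }

        # Split into the user ID and the bracketed recommendation list.
        $userId, $list = $Line -split "`t", 2
        if ($null -eq $list) { return }

        foreach ($pair in $list.Trim('[]'.ToCharArray()) -split ',') {
            if ($pair -eq '') { continue }
            $itemId, $score = $pair -split ':', 2
            [pscustomobject]@{
                UserId = [long]$userId
                ItemId = [long]$itemId
                Score  = [double]::Parse($score, [System.Globalization.CultureInfo]::InvariantCulture)
            }
        }
    }
}
```

For example, after downloading an output file (Hadoop typically names them part-r-00000 and so on), `Get-Content .\part-r-00000 | ConvertFrom-RecommenderOutput | Sort-Object UserId` gives one row per recommendation.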

Clean-up:

The job leaves intermediate data in a temp folder (/user/hdp/temp). To delete it, RDP to the head node and run `hadoop fs -rmr -skipTrash /user/hdp/temp`, or use any storage tool you are familiar with to delete the temp folder.

Tips:

- Make sure you understand the algorithm you are going to use.

- Check the input format the algorithm expects.

- You may want to prepare your data to match the input required by the algorithm.
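As an aid to the first tip: SIMILARITY_COOCCURRENCE, used in this example, rates two items as more similar the more users have preferences for both. The sketch below only illustrates that counting on a few in-memory lines in the ItemID.txt format; it is not Mahout's distributed implementation, and `Get-ItemCooccurrence` is a hypothetical name.

```powershell
# Illustration only: for every pair of items, count how many users have a
# preference for both. This mirrors the idea behind SIMILARITY_COOCCURRENCE.
function Get-ItemCooccurrence {
    param([string[]]$Lines)

    # Group item IDs by user ID (lines are userID,itemID[,value]).
    $itemsByUser = @{}
    foreach ($line in $Lines) {
        $fields = $line -split '[,\t]'
        $user = $fields[0]
        if (-not $itemsByUser.ContainsKey($user)) {
            $itemsByUser[$user] = New-Object 'System.Collections.Generic.HashSet[string]'
        }
        [void]$itemsByUser[$user].Add($fields[1])
    }

    # Count each unordered item pair once per user. Items are sorted as strings
    # so that a pair always gets the same key, e.g. "101|102".
    $counts = @{}
    foreach ($items in $itemsByUser.Values) {
        $sorted = @($items | Sort-Object)
        for ($i = 0; $i -lt $sorted.Count; $i++) {
            for ($j = $i + 1; $j -lt $sorted.Count; $j++) {
                $key = "$($sorted[$i])|$($sorted[$j])"
                $counts[$key] = 1 + [int]$counts[$key]
            }
        }
    }
    $counts
}
```

With two users who both rated items 101 and 102, and only one of whom also rated 103, the pair 101|102 gets a count of 2 and 101|103 a count of 1, so 102 would rank above 103 as a neighbour of 101.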

Thanks to Bill and Sunil for reviewing this blog post.

Happy Learning!

Sudhir Rawat

Comments

- Anonymous (March 30, 2014): The comment has been removed.

- Anonymous (April 06, 2014): Hi Rob, thanks for the note. When I started working on this, mahout-core-0.8-job was available, so I used that. I was busy and didn't notice 0.9. Your note brought it to my attention, and I tried mahout-core-0.9-job for the same scenario described above; everything worked as expected. I am planning to explore more and will share my findings. Thanks and Regards, Sudhir