Overview of Microsoft Office SharePoint Server 2007 Enterprise Search

SharePoint Search Architecture:

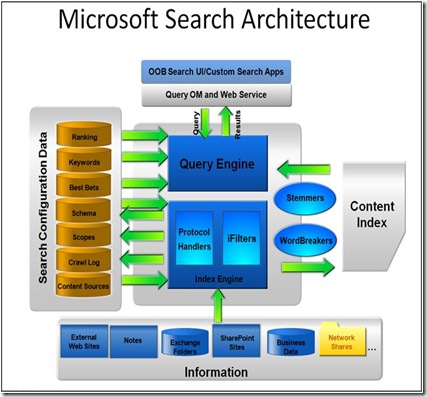

Search is implemented using the Shared Services Provider (SSP) architecture in SharePoint. The parts of the architecture you need to be aware of are the content sources, configuration data, protocol handlers, index engine, IFilters, content index, property store, query engine, end-user interface, and platform services. The figure above shows the architecture, which we walk through in more detail in the next few paragraphs.

Content sources define the locations, security, and formats that you want to crawl and index. Out of the box, WSS crawls WSS content, while Microsoft Office SharePoint Server can crawl SharePoint sites, web sites, file shares, Exchange Server public folders, Lotus Notes, and Documentum and FileNet repositories. With the federation capabilities recently added to SharePoint, you can extend search beyond these content sources to any source that supports the OpenSearch standard.
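To make the OpenSearch point concrete: an OpenSearch source publishes a URL template containing placeholders such as `{searchTerms}` (required) and `{startIndex?}` (optional, marked with `?`), and a client fills that template in to issue a query. The following is a minimal sketch of such a template expander, written against the OpenSearch 1.1 placeholder rules as we understand them. The `expand_template` helper and the example.com URL are hypothetical illustrations, not part of SharePoint's federation API.

```python
import re
from typing import Dict
from urllib.parse import quote

# Matches OpenSearch placeholders such as {searchTerms} or {startIndex?},
# including an optional namespace prefix such as {geo:box?}.
_PARAM = re.compile(r"\{([A-Za-z_][\w.-]*(?::[A-Za-z_][\w.-]*)?)(\?)?\}")


def expand_template(template: str, values: Dict[str, object]) -> str:
    """Fill an OpenSearch URL template (hypothetical helper).

    Required placeholders ({name}) must have a value; optional ones
    ({name?}) become an empty string when no value is supplied.
    Every value is percent-encoded before substitution.
    """
    def substitute(match: "re.Match[str]") -> str:
        name, optional = match.group(1), match.group(2)
        if name in values:
            return quote(str(values[name]), safe="")
        if optional:
            return ""
        raise KeyError(f"missing required OpenSearch parameter: {name}")

    return _PARAM.sub(substitute, template)


template = "http://example.com/search?q={searchTerms}&start={startIndex?}&format=rss"
print(expand_template(template, {"searchTerms": "quarterly report"}))
# http://example.com/search?q=quarterly%20report&start=&format=rss
```

Percent-encoding every value with `safe=""` is a conservative choice for this sketch; substituting an empty string for unfilled optional placeholders follows the OpenSearch 1.1 draft's convention.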

Crawling Walkthrough:

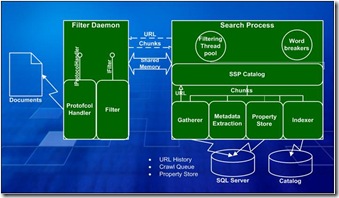

When a crawl is requested . . .

- The indexer retrieves the start address of the content source

- The start address is prefixed with the protocol used to access the content

- The appropriate protocol handler is invoked to traverse the content source

- During traversal, the handler identifies the content nodes it needs to index

- The protocol handler invokes the IFilter associated with each content node's type

- The IFilter identifies and extracts properties from the content node

- The protocol handler supplements the IFilter data with additional property information

- The data associated with the content node is added to the index

- The index “delta” propagates to the search servers
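The crawl steps above can be sketched as a small pipeline. This is a toy, in-memory model, not SharePoint's implementation: `ContentNode`, `InMemoryFileHandler`, `text_ifilter`, the `IFILTERS` registry, and `crawl` are all hypothetical names, and a plain dict stands in for the content index. It shows the two dispatch decisions in the walkthrough: the protocol prefix of the start address selects the protocol handler, and each node's type selects the IFilter.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List
from urllib.parse import urlparse


@dataclass
class ContentNode:
    url: str
    doc_type: str  # file type used to pick an IFilter, e.g. "txt"
    raw: str       # stand-in for the document's bytes


def text_ifilter(node: ContentNode) -> Dict[str, str]:
    """Hypothetical IFilter: first line becomes the title, the rest is body text."""
    lines = node.raw.splitlines()
    return {"title": lines[0] if lines else "", "body": " ".join(lines[1:])}


# Hypothetical registry mapping a content node type to its IFilter.
IFILTERS: Dict[str, Callable[[ContentNode], Dict[str, str]]] = {"txt": text_ifilter}


class InMemoryFileHandler:
    """Hypothetical protocol handler for file:// addresses, backed by a dict."""

    def __init__(self, tree: Dict[str, List[ContentNode]]):
        self.tree = tree

    def traverse(self, start_address: str) -> Iterator[ContentNode]:
        # A real handler would walk folders recursively; here each start
        # address maps directly to the nodes found under it.
        yield from self.tree.get(start_address, [])

    def extra_properties(self, node: ContentNode) -> Dict[str, str]:
        # Properties the handler supplies on top of what the IFilter extracts.
        return {"url": node.url, "type": node.doc_type}


def crawl(start_address: str, handlers: Dict[str, InMemoryFileHandler],
          index: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, str]]:
    """Crawl one start address and return the index delta it produced."""
    # The protocol prefix (scheme) of the start address selects the handler.
    handler = handlers[urlparse(start_address).scheme]
    delta: Dict[str, Dict[str, str]] = {}
    for node in handler.traverse(start_address):
        ifilter = IFILTERS.get(node.doc_type)
        # With no registered IFilter, only handler-supplied properties are kept.
        props = ifilter(node) if ifilter else {}
        props.update(handler.extra_properties(node))
        delta[node.url] = props
    index.update(delta)  # add the crawled data to the index
    return delta         # the delta is what would propagate to query servers


tree = {"file://server/share": [
    ContentNode("file://server/share/a.txt", "txt", "Budget\nQ3 numbers"),
    ContentNode("file://server/share/b.bin", "bin", "binary data"),
]}
index: Dict[str, Dict[str, str]] = {}
delta = crawl("file://server/share", {"file": InMemoryFileHandler(tree)}, index)
print(delta["file://server/share/a.txt"]["title"])  # Budget
```

Keeping handler-supplied properties even when no IFilter matches is a choice made for this sketch. Returning the per-crawl `delta` separately from the full index mirrors the last step, where only the changes propagate.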

Crawl Overview Diagram:

Index Propagation:

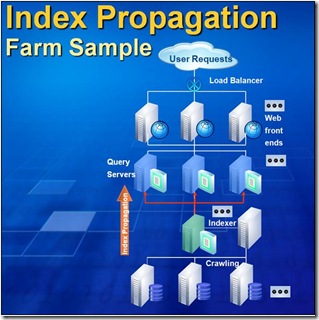

Communication between the index server and the query servers works as follows (see the diagram above):

- Index server

- Content

- Master index, plus many small files called shadow indexes

- The master index is the main catalog.

- Shadow indexes are created during the crawl.

- During the crawl process, the crawler loads batches of data into memory; these are what are called shadow indexes.

- Regardless of how heavy the crawl is, the index server copies the shadow index files to the query servers.

- The index server then notifies the search database that propagation has completed.

- The query service has a thread that polls the database to detect changes. It loads the new shadow files into memory, which is why you can run a query even while a crawl is in progress.

- A master merge then applies the changes to the master index: the shadow indexes are consolidated into a new master index file, which is then saved.

- The master merge does not take place in the query process.

- There is usually around a 10% index

- What happens if a query server goes offline, or a new query server is added?

- If a query server is down, the index server keeps track of the shadow indexes it has not yet received and copies them over when the server comes back up.

- The propogation happens only when there are differences between the content in the shadow index and the master index. There is a new master merge process that is the same in SQL 2005

- For capacity planning, make sure you allow for the shadow indexes: they need additional disk space.
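The propagation notes above can be condensed into a toy model. Everything here is a hypothetical illustration under simplifying assumptions: `IndexServer` and its methods are invented names, dicts stand in for index files, a lookup consults the newest shadow index first and then the master index, and a master merge folds shadows in oldest-first so newer entries win. It also models the offline case: shadows queue per query server and are copied once that server comes back.

```python
from itertools import count
from typing import Dict, List, Optional, Set


class IndexServer:
    """Toy model (hypothetical) of a master index plus shadow indexes."""

    def __init__(self, query_servers: List[str]):
        self.master: Dict[str, str] = {}               # doc URL -> indexed text
        self.shadows: Dict[int, Dict[str, str]] = {}   # shadow id -> small index
        self._ids = count()                            # increasing shadow ids
        self.online: Set[str] = set(query_servers)
        # Shadow ids that each query server has not received yet.
        self.pending: Dict[str, List[int]] = {qs: [] for qs in query_servers}

    def add_shadow(self, batch: Dict[str, str]) -> Dict[str, List[int]]:
        """Save one crawl batch as a shadow index, then propagate it."""
        shadow_id = next(self._ids)
        self.shadows[shadow_id] = dict(batch)
        for ids in self.pending.values():
            ids.append(shadow_id)
        return self.propagate()

    def propagate(self) -> Dict[str, List[int]]:
        """Copy pending shadows to online query servers; offline ones stay queued."""
        sent: Dict[str, List[int]] = {}
        for qs, ids in self.pending.items():
            if qs in self.online and ids:
                sent[qs] = ids[:]
                ids.clear()
        return sent

    def set_online(self, qs: str, online: bool) -> Dict[str, List[int]]:
        """Mark a query server up or down; a returning server is caught up."""
        if online:
            self.online.add(qs)
            return self.propagate()
        self.online.discard(qs)
        return {}

    def lookup(self, url: str) -> Optional[str]:
        """Queries see shadow data before a merge: newest shadow first, then master."""
        for shadow_id in sorted(self.shadows, reverse=True):
            if url in self.shadows[shadow_id]:
                return self.shadows[shadow_id][url]
        return self.master.get(url)

    def master_merge(self) -> None:
        """Consolidate all shadows into a new master index, then drop them."""
        if any(self.pending.values()):
            # Simplification: re-seeding a lagging query server is not modeled.
            raise RuntimeError("a query server has not received all shadows")
        new_master = dict(self.master)
        for shadow_id in sorted(self.shadows):  # oldest first, so newer wins
            new_master.update(self.shadows[shadow_id])
        self.master = new_master
        self.shadows.clear()


idx = IndexServer(["qs1", "qs2"])
idx.set_online("qs2", False)                 # qs2 goes offline
print(idx.add_shadow({"http://a": "v1"}))    # {'qs1': [0]}
print(idx.lookup("http://a"))                # v1, served from a shadow index
print(idx.set_online("qs2", True))           # {'qs2': [0]}, caught up on return
idx.master_merge()
print(idx.master, idx.shadows)               # {'http://a': 'v1'} {}
```

Building `new_master` as a fresh dict rather than mutating the old one mirrors the note that a master merge creates and saves a new file. The sketch refuses to merge while a query server is still behind, because handing a lagging server the new master index is outside what it models.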