What is Azure Data Lake?

Azure Data Lake includes all the capabilities required to make it easy for developers, data scientists, and analysts to store data of any size, shape and speed, and do all types of processing and analytics across platforms and languages. It removes the complexities of ingesting and storing all of your data while making it faster to get up and running with batch, streaming, and interactive analytics. Azure Data Lake works with existing IT investments for identity, management, and security for simplified data management and governance. It also integrates seamlessly with operational stores and data warehouses so you can extend current data applications. We’ve drawn on the experience of working with enterprise customers and running some of the largest scale processing and analytics in the world for Microsoft businesses like Office 365, Xbox Live, Azure, Windows, Bing and Skype.

Azure Data Lake solves many of the productivity and scalability challenges that prevent you from maximizing the value of your data assets with a service that’s ready to meet your current and future business needs.

A quick overview of Azure Data Lake is available in this video.

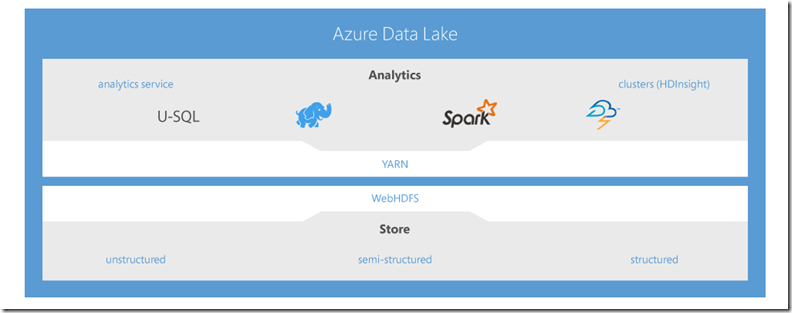

In short, Azure Data Lake is made up of two parts:

Azure Data Lake Store: Azure Data Lake Store is an enterprise-wide, hyper-scale repository for big data analytic workloads. It enables you to capture data of any size, type, and ingestion speed in one single place for operational and exploratory analytics. Azure Data Lake Store can be accessed from Hadoop (available with an HDInsight cluster) using the WebHDFS-compatible REST APIs. It is specifically designed to enable analytics on the stored data and is tuned for performance in data analytics scenarios. Out of the box, it includes all the enterprise-grade capabilities (security, manageability, scalability, reliability, and availability) essential for real-world enterprise use cases.

Azure Data Lake Analytics: Azure Data Lake Analytics is a new service built to make big data analytics easy. This service lets you focus on writing, running, and managing jobs rather than operating distributed infrastructure. Instead of deploying, configuring, and tuning hardware, you write queries to transform your data and extract valuable insights. The analytics service can handle jobs of any scale; you simply set the dial for how much power you need. You pay for a job only while it is running, which keeps it cost-effective. The analytics service supports Azure Active Directory, so you can manage access and roles in a way that is integrated with your on-premises identity system. It also includes U-SQL, a language that unifies the benefits of SQL with the expressive power of user code.

These two services integrate with many other Azure services, such as Azure HDInsight (Hadoop) and Visual Studio, to name a few.

Azure Data Lake Store can ingest any type of data, whether structured, semi-structured, or unstructured. You can then run analytics on top of it to make intelligent business decisions without ever managing servers, doing infrastructure plumbing, or writing custom code to get insights from your data. Queries are written in a simple SQL-like language called U-SQL.
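To make the WebHDFS-compatible REST access mentioned earlier a little more concrete, here is a minimal Python sketch that only builds a request URL and does not contact any service. It assumes the account is exposed at `<account>.azuredatalakestore.net` under the standard WebHDFS prefix `/webhdfs/v1`. The helper name `webhdfs_url`, the account `contosolake`, and the folder path are all made up for illustration.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(account: str, path: str, op: str, **params: str) -> str:
    """Build a WebHDFS-style REST URL for a Data Lake Store account.

    Assumption: the account is reachable at <account>.azuredatalakestore.net
    and exposes the standard WebHDFS path prefix /webhdfs/v1.
    """
    host = f"{account}.azuredatalakestore.net"
    # Normalise to exactly one leading slash and percent-encode unsafe characters.
    clean_path = "/" + quote(path.strip("/"))
    # The WebHDFS operation (for example LISTSTATUS or OPEN) goes in the query string.
    query = urlencode({"op": op, **params})
    return f"https://{host}/webhdfs/v1{clean_path}?{query}"


# "contosolake" and the folder name are placeholders, not real resources.
list_url = webhdfs_url("contosolake", "/raw/clickstream", "LISTSTATUS")
print(list_url)
```

A real request would also need to carry credentials for the account; that part is deliberately left out of this sketch.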

Below is the high-level architecture of Azure Data Lake:

How do I get Started?

As of this writing, Azure Data Lake is in Public Preview, which means Microsoft does not offer an SLA until the service reaches general availability (GA).

Below are some excellent resources to help you gain a deep understanding of Azure Data Lake and start exploring the power of big data in action without writing any code:

- How To - Create an Azure Data Lake Store Account

- How To - Use the Data Explorer to Manage Data in Azure Data Lake Store

- How To - Connect Azure Data Lake Analytics to Azure Data Lake Store

- How To - Access Azure Data Lake Store via Data Lake Analytics

- How To - Connect Azure HDInsight to Azure Data Lake Store

- How To - Access Azure Data Lake Store via Hive and Pig

- How To - Use DistCp (Hadoop Distributed Copy) to copy data to and from Azure Data Lake Store

- How To - Use Apache Sqoop to move data between relational sources and Azure Data Lake Store

- How To - Data Orchestration using Azure Data Factory for Azure Data Lake Store

- Core Concepts - Securing Data in the Azure Data Lake Store
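Several of the resources above cover moving data into the store. As a rough illustration of the DistCp case, the Python sketch below composes, but does not run, a `hadoop distcp` command line. It assumes the store is addressed with an `adl://<account>.azuredatalakestore.net/...` URI and that the source data sits in Azure Blob storage behind a `wasb://` URI. The helper names and every account, container, and folder name are placeholders.

```python
import shlex


def adl_uri(account: str, path: str) -> str:
    """Return an adl:// URI for a path in a Data Lake Store account (assumed scheme)."""
    return f"adl://{account}.azuredatalakestore.net/{path.lstrip('/')}"


def distcp_command(source_uri: str, dest_uri: str) -> list:
    """Compose the argument list for a Hadoop DistCp copy between two URIs."""
    return ["hadoop", "distcp", source_uri, dest_uri]


# All account, container, and folder names below are placeholders.
source = "wasb://logs@contosostorage.blob.core.windows.net/2016/01"
dest = adl_uri("contosolake", "/raw/logs/2016/01")
command = distcp_command(source, dest)

# Print the command for review; it would normally be run on a cluster node.
print(shlex.join(command))
```

Keeping the command as a list of arguments makes it straightforward to pass to `subprocess.run` later without worrying about shell quoting.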

For more up-to-date information on Azure Data Lake, please use our product Learning Site.

Is Azure Data Lake compatible with Open Source Software?

Yes. Azure Data Lake is integrated with Apache OSS products such as Sqoop, MapReduce, Hive, Storm, and Mahout. For a detailed list of Azure Data Lake's compatibility with OSS, please visit here.