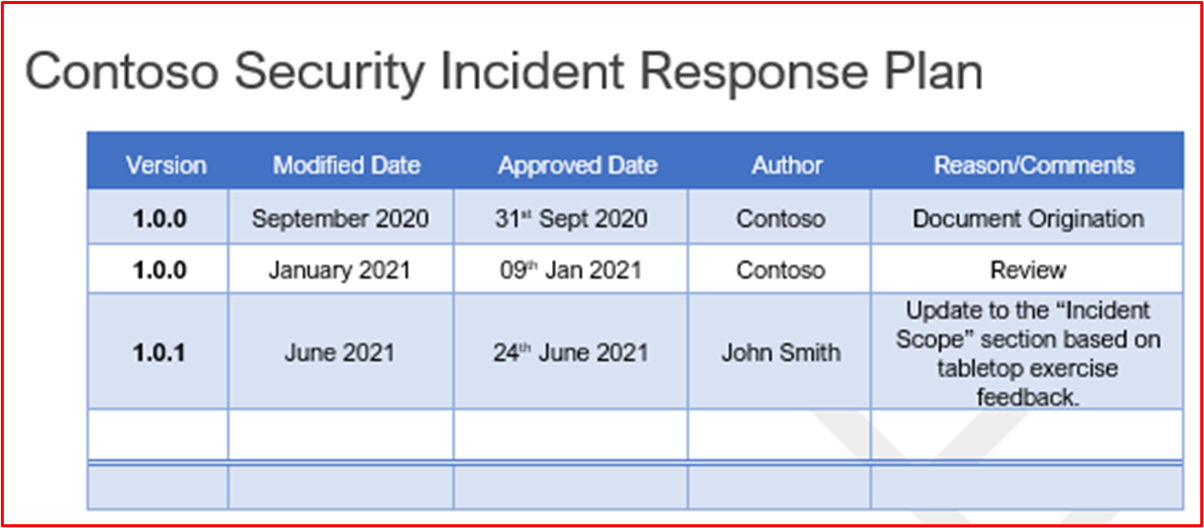

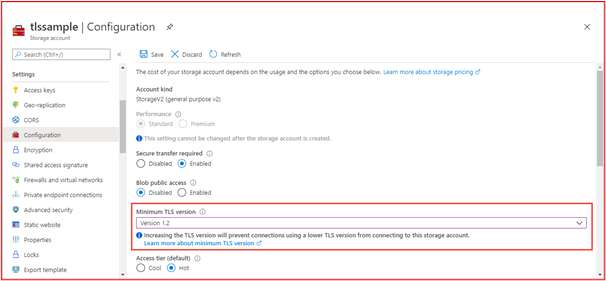

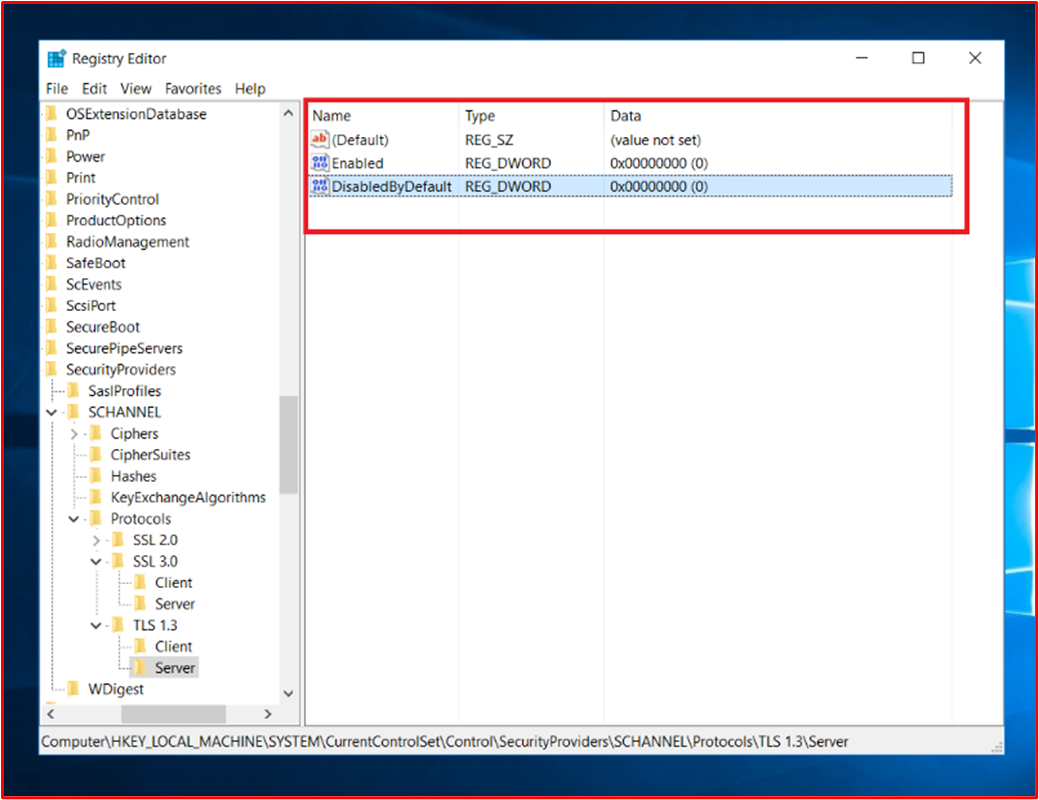

Microsoft 365 Certification - Sample Evidence Guide

Overview

This guide has been created to provide ISVs with examples of the type of evidence and level of detail required for each of the Microsoft 365 Certification controls. The examples shared in this document aren't the only evidence that can be used to demonstrate that controls are being met; they act only as a guideline for the type of evidence required.

Note: The actual interfaces, screenshots, and documentation used to satisfy the requirements will vary depending on product use, system setup, and internal processes. In addition, where policy or procedure documentation is required, the ISV must send the ACTUAL documents and not screenshots, as may be shown in some of the examples.

There are two sections in the certification that require submissions:

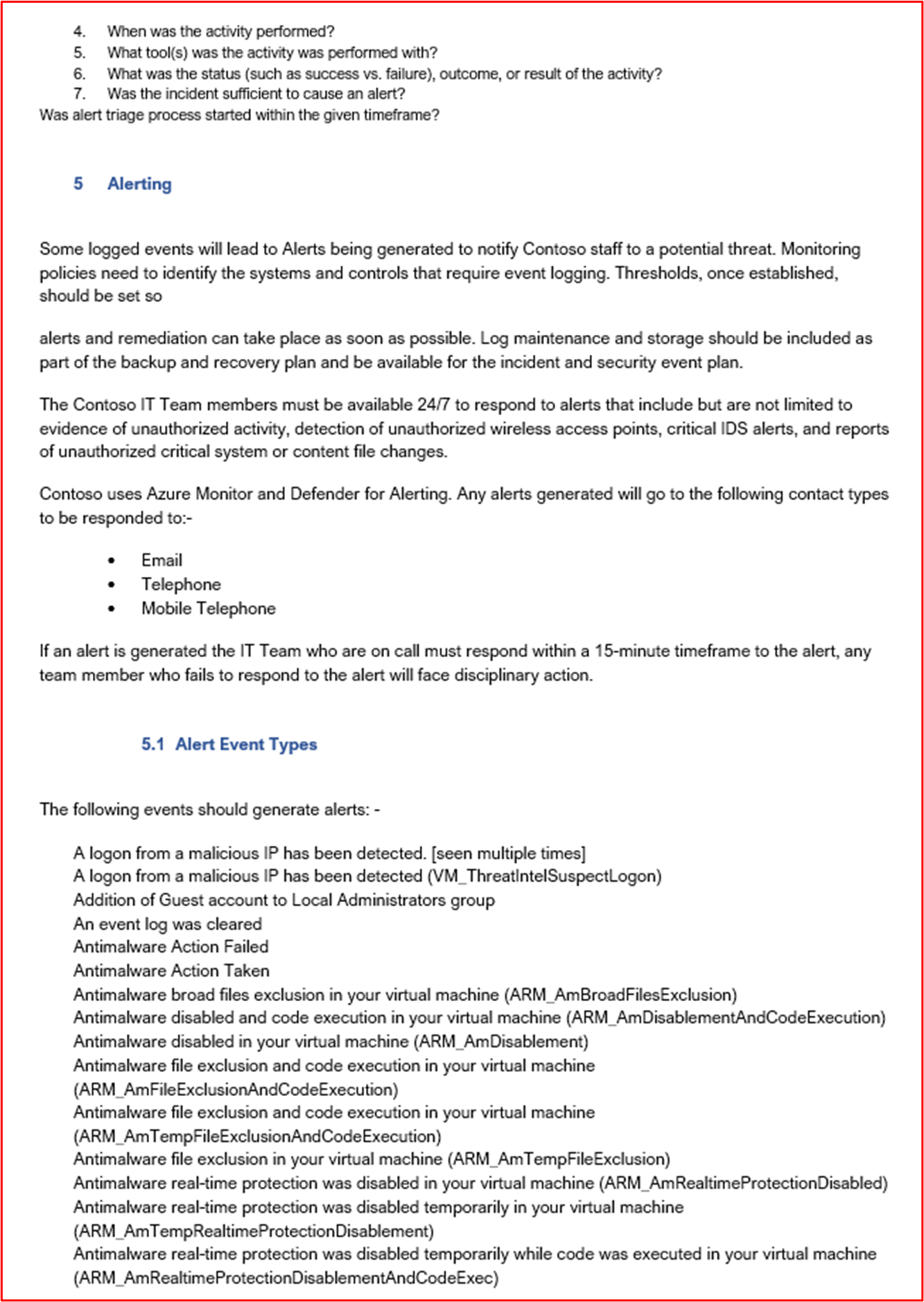

- The Initial Document Submission: a small set of high-level documents required for scoping your assessment.

- The Evidence Submission: the full set of evidence required for each control in-scope for your certification assessment.

Tip

Try the App Compliance Automation Tool for Microsoft 365 (ACAT) for an accelerated path to Microsoft 365 Certification; it automates evidence collection and control validation. Learn more about which controls are fully automated by ACAT.

Structure

This document maps directly to the controls you'll be presented with during your certification in Partner Center. The guidance provided within this document is structured as follows:

- Security Domain: The three security domains that all controls are grouped into: Application Security, Operational Security, and Data Security and Privacy.

- Control(s): The assessment activity description. These controls and their associated numbers (No.) are taken directly from the Microsoft 365 Certification Checklist.

- Intent: Why the security control is included within the program and the specific risk it aims to mitigate. This information is intended to give ISVs the reasoning behind each control, so they better understand the types of evidence that need to be collected and what they must pay attention to when producing their evidence.

- Example Evidence Guidelines: Guidance for the Evidence Collection Tasks on the Microsoft 365 Certification Checklist spreadsheet. These show ISVs examples of the type of evidence the Certification Analyst can use to make a confident determination that a control is in place and maintained. They're by no means exhaustive.

- Evidence Example: Example screenshots and images of potential evidence captured against each of the controls within the Microsoft 365 Certification Checklist spreadsheet, specifically for the Operational Security and Data Security and Privacy security domains (tabs within the spreadsheet). Any information highlighted with red arrows and boxes within the examples is there to further aid your understanding of the requirements necessary to meet a control.

Security Domain: Application Security

Control 1 - Control 16:

The Application Security domain controls can be satisfied with a penetration test report issued within the last 12 months showing that your app has no outstanding vulnerabilities. The only required submission is a clean report by a reputable independent company.

Security Domain: Operational Security / Secure Development

The ‘Operational Security / Secure Development’ security domain is designed to ensure ISVs implement a strong set of security mitigation techniques against the myriad of threats posed by activity groups. It's designed to protect the operating environment and software development processes so that ISVs build secure environments.

Malware Protection - Anti-Virus

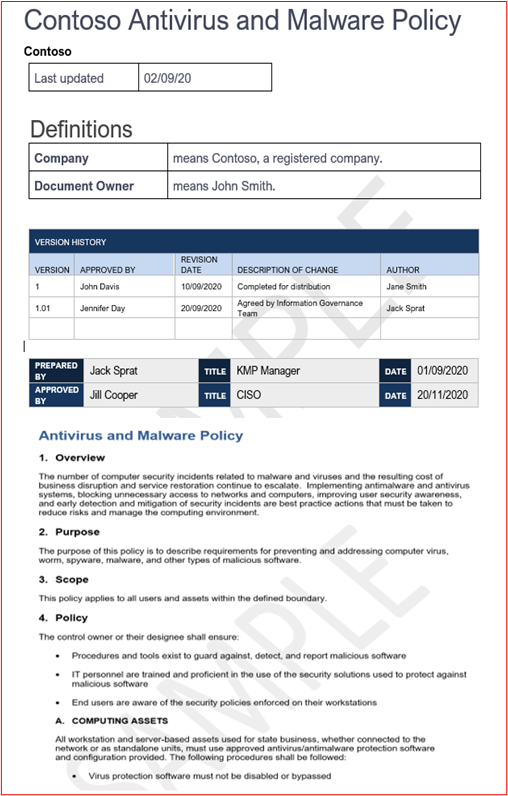

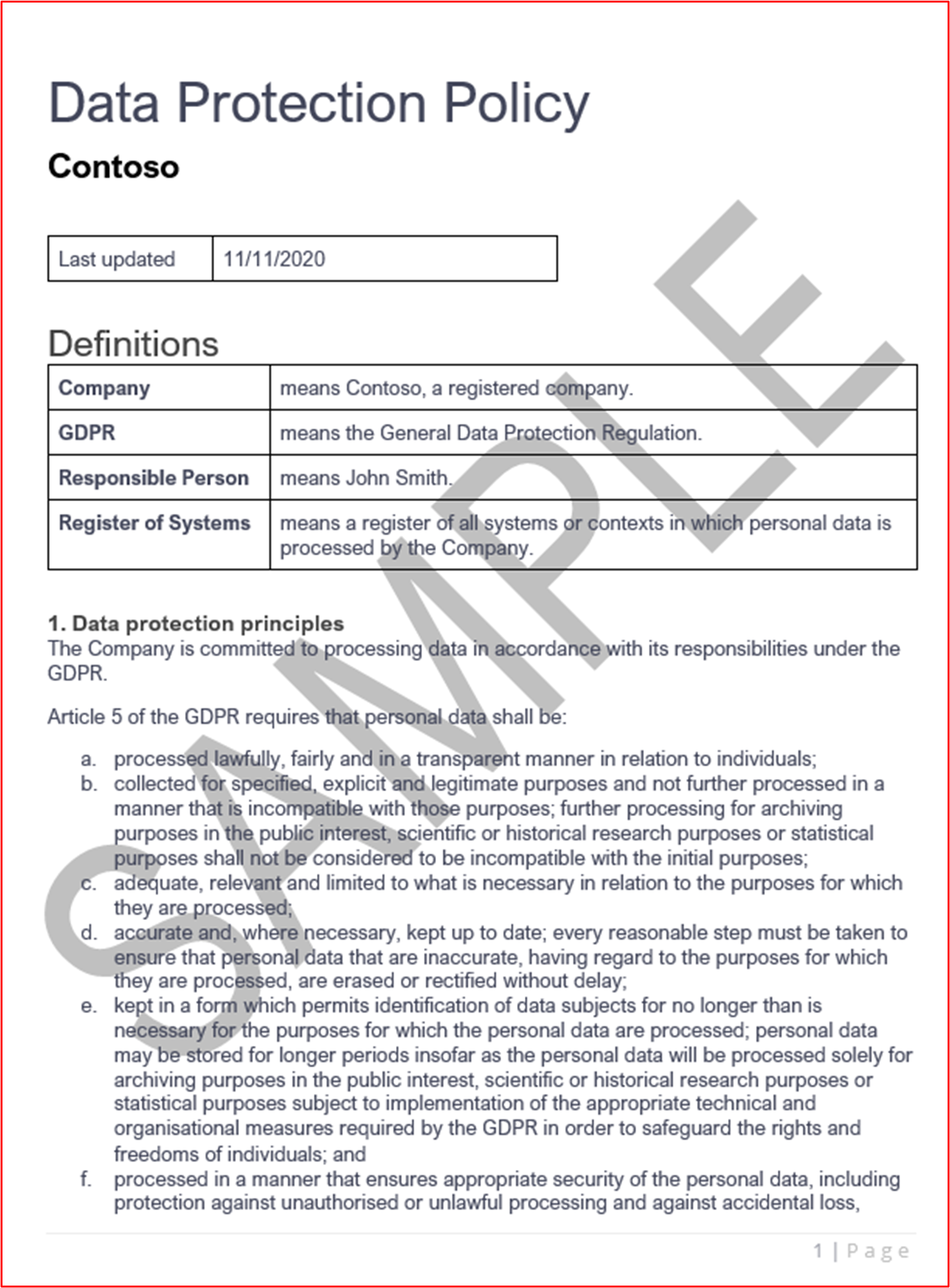

Control #1: Provide policy documentation that governs anti-virus practices and procedures.

- Intent: The intent of this control is to assess an ISV’s understanding of the issues they face when considering the threat from computer viruses. By using industry best practices to develop an anti-virus policy and processes, an ISV provides a resource tailored to their organization’s ability to mitigate the risks posed by malware, sets out best practices in virus detection and elimination, and gives evidence that the documented policy provides security guidance for the organization and its employees. Documenting a policy and procedure for how the ISV deploys anti-malware defenses ensures the consistent rollout and maintenance of this technology in reducing the risk of malware to the environment.

- Example Evidence Guidelines: Provide a copy of your anti-virus/anti-malware policy detailing the processes and procedures implemented within your infrastructure to promote anti-virus/anti-malware best practices.

- Example Evidence:

Note: This screenshot shows a policy/process document. The expectation is for ISVs to share the actual supporting policy/procedure documentation and not simply provide a screenshot.

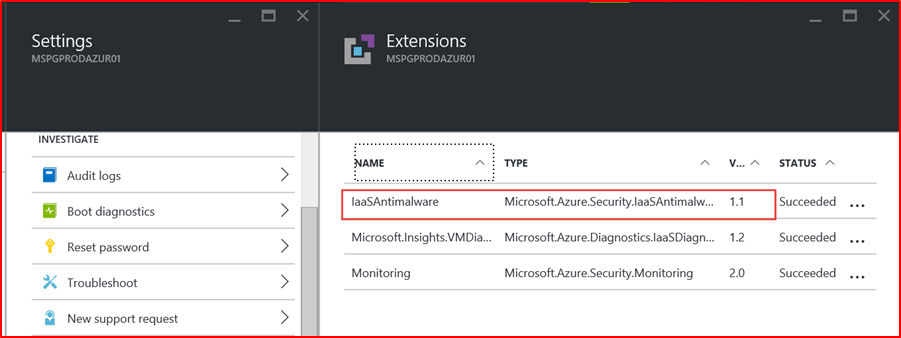

Control #2: Provide demonstrable evidence that anti-virus software is running across all sampled system components.

- Intent: It's important to have anti-virus (AV), or other anti-malware defenses, running in your environment to protect against cyber security risks that you may or may not be aware of, as potentially damaging attacks are increasing in both sophistication and number. Deploying AV to all system components that support its use will help mitigate some of the risk of malware being introduced into the environment. It takes only a single unprotected endpoint to provide a vector of attack for an activity group to gain a foothold into the environment. AV should therefore be used as one of several layers of defense to protect against this type of threat.

- Example Evidence Guidelines: To prove that an active instance of AV is running in the assessed environment, provide a screenshot for every device in the sample that supports the use of anti-virus, showing that the anti-virus process is running and the anti-virus software is active. Alternatively, if you have a centralized management console for anti-virus, you may be able to demonstrate this from that console. If using the management console, be sure to evidence in a screenshot that the sampled devices are connected and working.

- Evidence Example 1: The below screenshot has been taken from Azure Security Center; it shows that an anti-malware extension has been deployed on the VM named "MSPGPRODAZUR01".

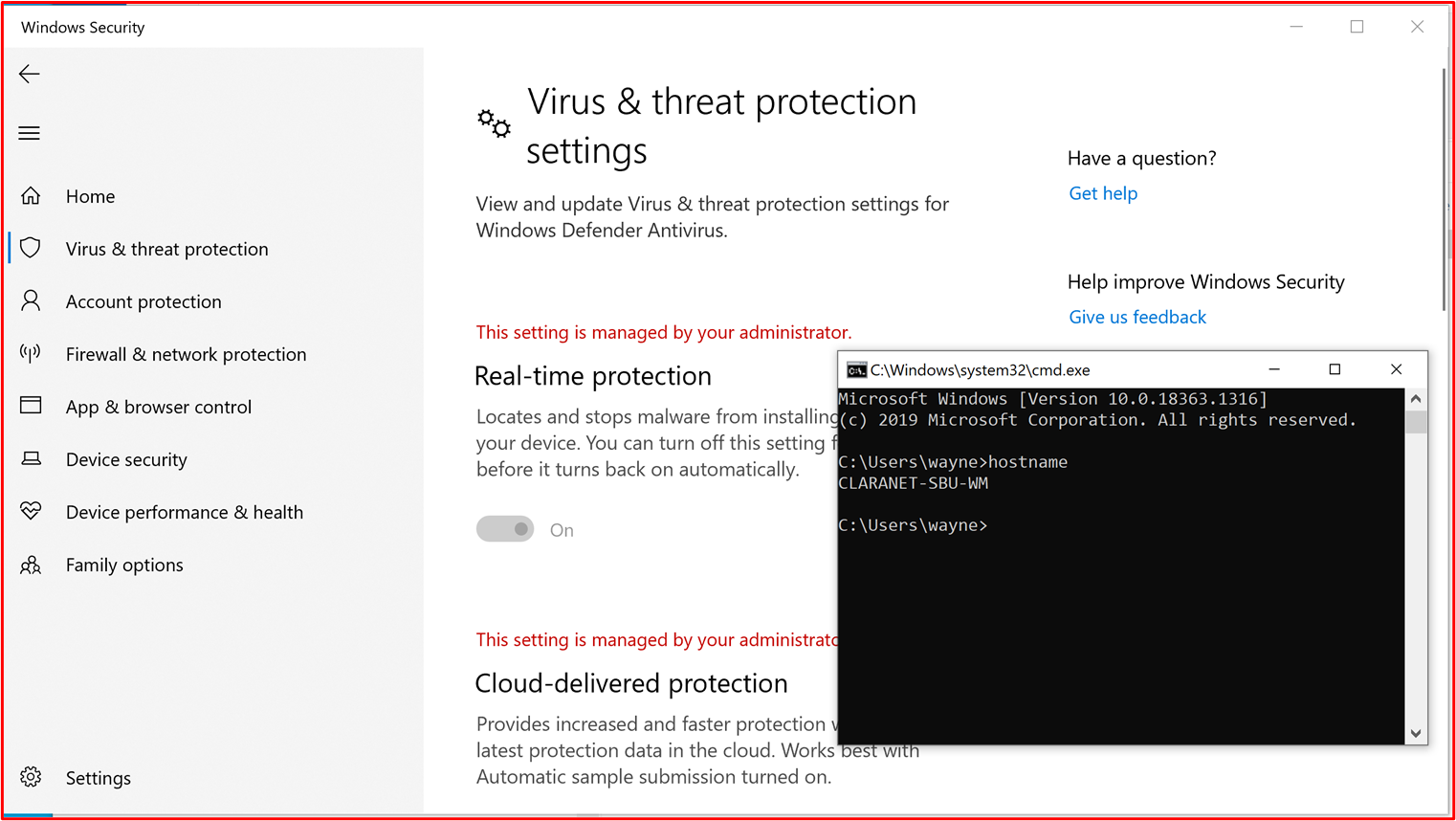

- Evidence Example 2

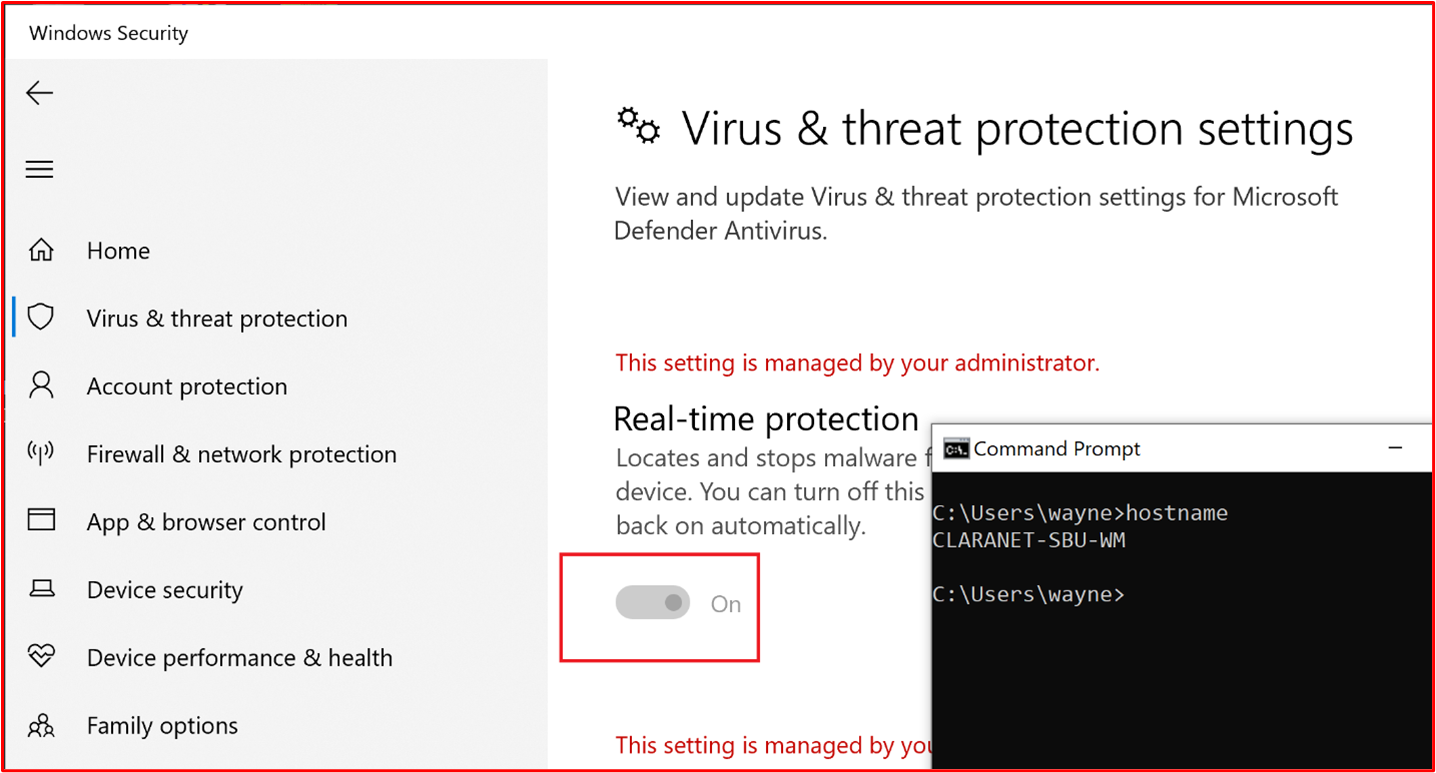

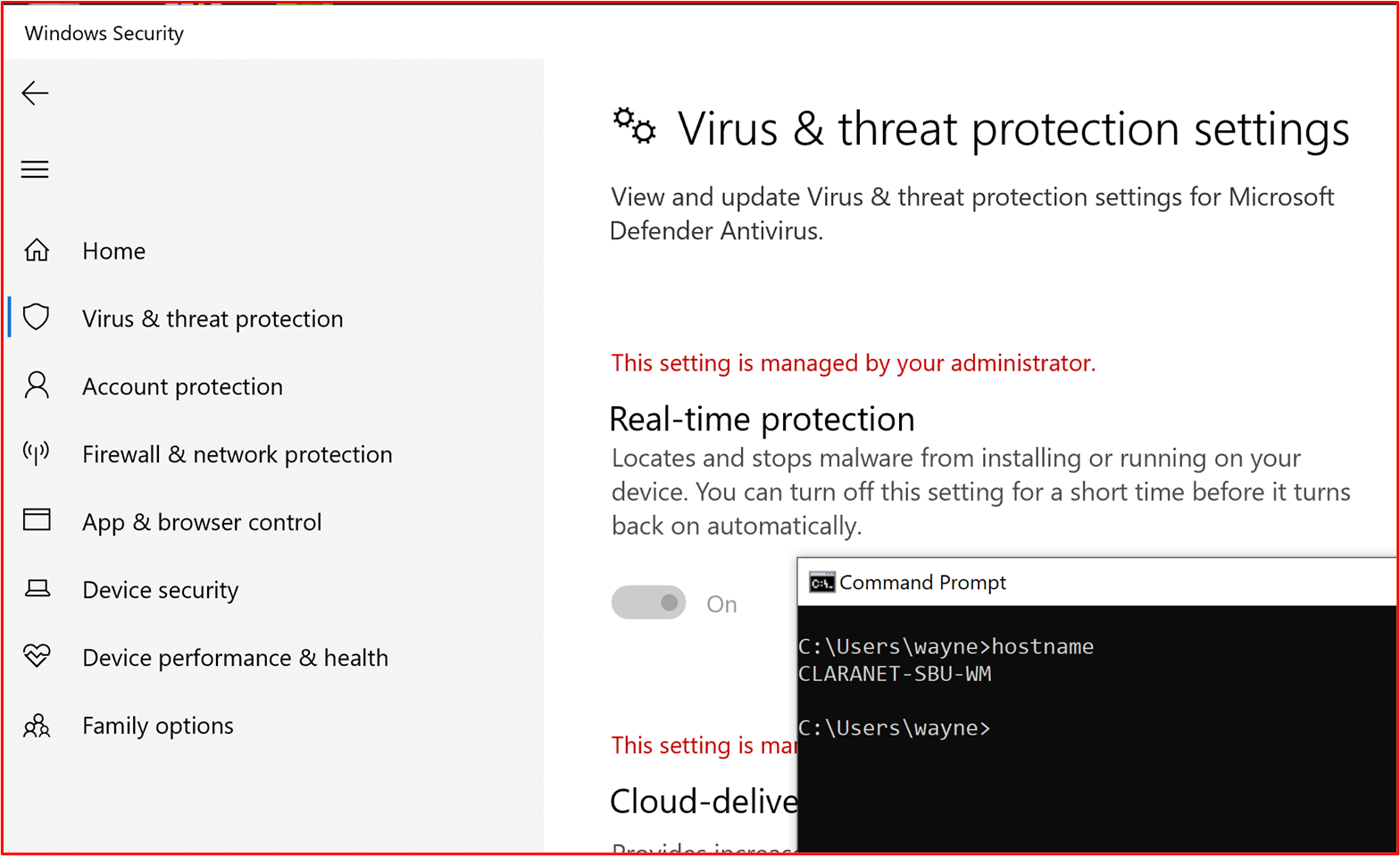

The below screenshot has been taken from a Windows 10 device, showing that "Real-time protection" is switched on for the host name "CLARANET-SBU-WM".

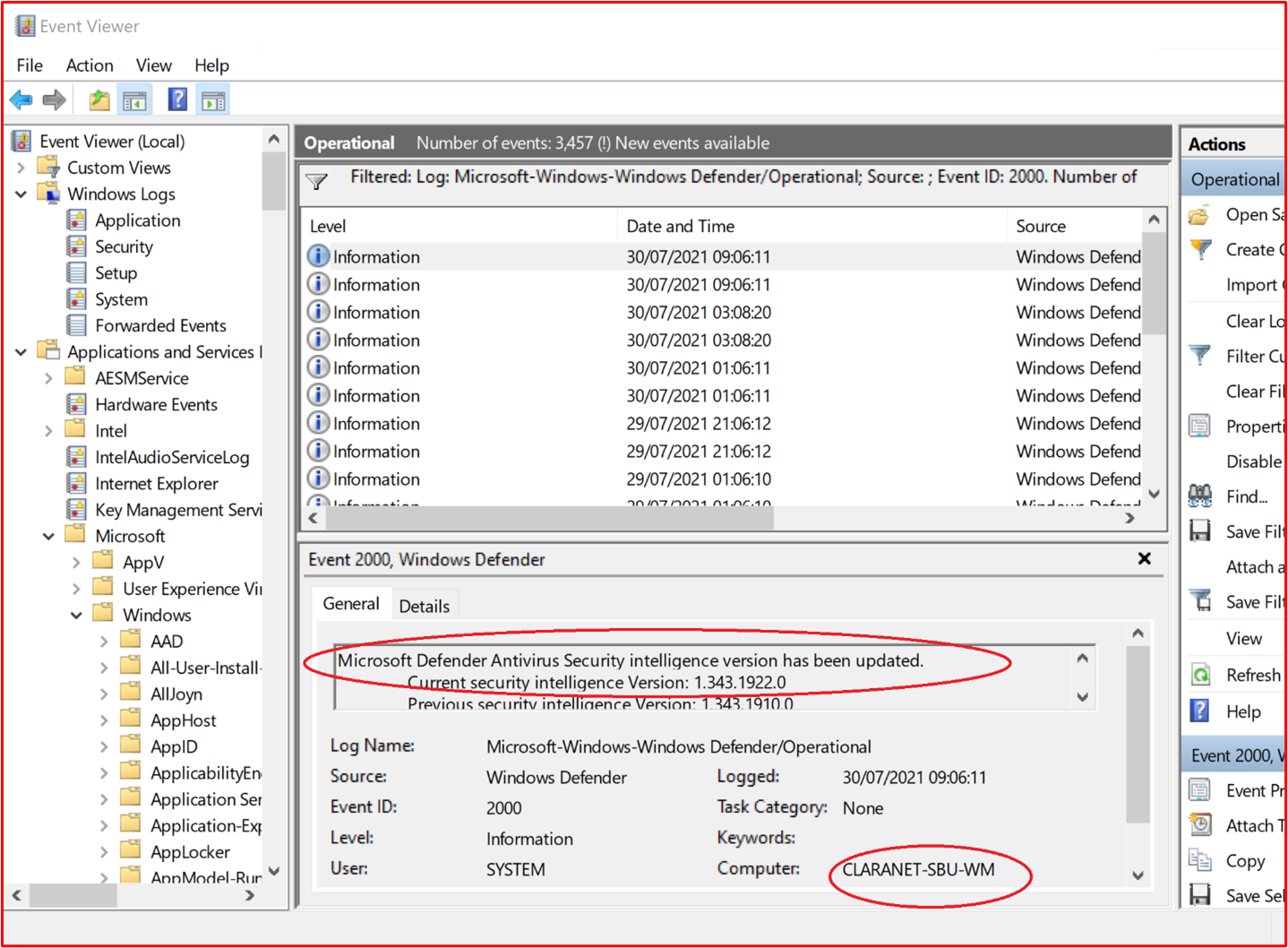

Control #3: Provide demonstrable evidence that anti-virus signatures are up to date across all environments (within 1 day).

- Intent: Hundreds of thousands of new malware samples and potentially unwanted applications (PUA) are identified every day. To provide adequate protection against newly released malware, AV signatures need to be updated regularly.

- This control exists to ensure that the ISV has taken into consideration the security of the environment and the effect that outdated AV can have on security.

- Example Evidence Guidelines: Provide anti-virus log files from each sampled device, showing that updates are applied daily.

- Example Evidence: The following screenshot shows Microsoft Defender updating at least daily, evidenced by 'Event 2000, Windows Defender', which is logged when the signatures are updated. The hostname is shown, demonstrating that this was taken from the in-scope system "CLARANET-SBU-WM".

Note: The evidence provided would need to include an export of the logs to show daily updates over a longer time period. Some anti-virus products generate update log files, which should be supplied; otherwise, export the logs from Event Viewer.
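Once the update events have been exported, checking them for daily coverage can be scripted before submission. The following is a minimal Python sketch, assuming the export has already been reduced to a list of ISO 8601 timestamps (one per signature update); the function name `updates_are_daily` and the input format are illustrative, not part of any anti-virus product.

```python
from datetime import datetime, timedelta

# Maximum allowed age between signature updates (the control's "within 1 day").
MAX_GAP = timedelta(days=1)


def updates_are_daily(timestamps: list[str], as_of: str) -> bool:
    """Return True if consecutive signature updates are never more than one
    day apart and the most recent update is no older than one day at `as_of`.

    Assumption: `timestamps` holds ISO 8601 strings exported from the AV
    product's update log or from Event Viewer (hypothetical input format).
    """
    if not timestamps:
        return False  # no updates at all cannot demonstrate daily updating
    times = sorted(datetime.fromisoformat(t) for t in timestamps)
    # Check every interval between consecutive updates.
    for earlier, later in zip(times, times[1:]):
        if later - earlier > MAX_GAP:
            return False
    # The latest update must also be recent relative to the review date.
    return datetime.fromisoformat(as_of) - times[-1] <= MAX_GAP


if __name__ == "__main__":
    log = ["2021-08-01T03:00:00", "2021-08-02T02:55:00", "2021-08-03T02:50:00"]
    print(updates_are_daily(log, as_of="2021-08-03T18:00:00"))  # True
```

The check looks at every gap rather than only the latest update, because a single missed day anywhere in the exported period would undermine the "daily" claim.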

Control #4: Provide demonstrable evidence that anti-virus is configured to perform on-access scanning or periodic scanning across all sampled system components.

Note: If on-access scanning isn't enabled, then a minimum of daily scanning and alerting MUST be enabled.

- Intent: The intention of this control is to ensure that malware is identified quickly to minimize the effect it may have on the environment. Where on-access scanning is carried out and coupled with automatic blocking of malware, this will help stop infections by malware known to the anti-virus software. Where on-access scanning isn't desirable due to the risk of false positives causing service outages, suitable daily (or more frequent) scanning and alerting mechanisms need to be implemented to ensure a timely response to malware infections and minimize damage.

- Example Evidence Guidelines: Provide a screenshot for every device in the sample that supports anti-virus, showing that anti-virus is running on the device and is configured for on-access (real-time) scanning, OR provide a screenshot for every device in the sample showing that periodic scanning is enabled for daily scanning, that alerting is configured, and the last scan date.

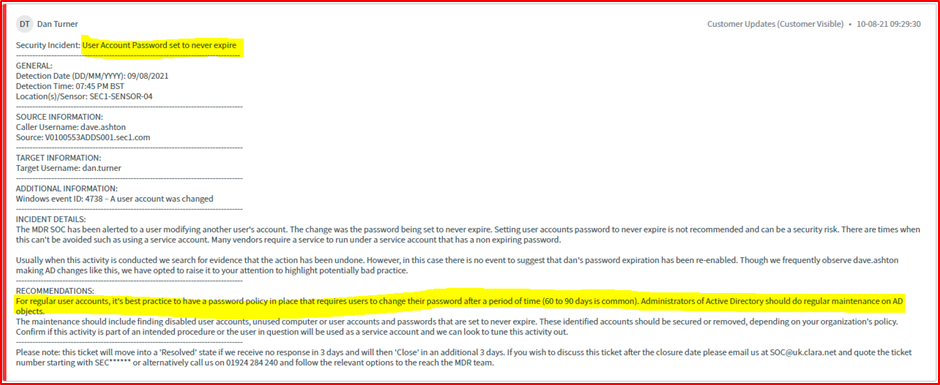

- Example Evidence: The following screenshot shows Real-time protection is enabled for the host, "CLARANET-SBU-WM".
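The on-access-or-daily rule in the note above can be expressed as a simple check per sampled device. This Python sketch assumes the settings have been collected into a hypothetical `AvConfig` record; treating a last scan older than one day as a failure is our interpretation of the daily-scanning requirement, not wording from the control.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AvConfig:
    """Hypothetical record of one sampled device's anti-virus settings."""
    hostname: str
    on_access_enabled: bool
    daily_scan_enabled: bool
    alerting_enabled: bool
    last_scan: Optional[datetime]


def meets_scanning_control(cfg: AvConfig, as_of: datetime) -> bool:
    """On-access scanning alone satisfies the control; otherwise daily
    scanning AND alerting must both be enabled and the last scan must be
    no older than one day (our assumed reading of "daily")."""
    if cfg.on_access_enabled:
        return True
    return (
        cfg.daily_scan_enabled
        and cfg.alerting_enabled
        and cfg.last_scan is not None
        and as_of - cfg.last_scan <= timedelta(days=1)
    )


if __name__ == "__main__":
    now = datetime(2021, 8, 3, 18, 0)
    devices = [
        AvConfig("CLARANET-SBU-WM", True, False, False, None),
        AvConfig("HOST-B", False, True, False, now - timedelta(hours=6)),  # no alerting
    ]
    for d in devices:
        print(d.hostname, meets_scanning_control(d, now))
```

"HOST-B" is a hypothetical device that scans daily but has no alerting, so it fails the control.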

Control #5: Provide demonstrable evidence that anti-virus is configured to automatically block malware or quarantine and alert across all sampled system components.

Intent: The sophistication of malware is evolving all the time, along with the varying degrees of devastation it can bring. The intent of this control is either to stop malware from executing, and therefore from delivering its potentially devastating payload, or, if automatic blocking isn't an option, to limit the amount of time malware can wreak havoc by alerting on and immediately responding to the potential infection.

Example Evidence Guidelines: Provide a screenshot for every device in the sample that supports anti-virus, showing that anti-virus is running on the machine and is configured to automatically block malware, or to quarantine and alert.

Example Evidence 1: The following screenshot shows the host "CLARANET-SBU-WM" is configured with real-time protection on for Microsoft Defender Antivirus. As the setting says, this locates and stops malware from installing or running on the device.

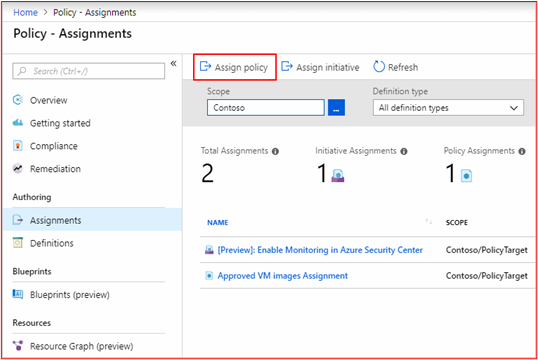

Control #6: Provide demonstrable evidence that applications are approved prior to being deployed.

Intent: With application control, the organization will approve each application/process that is permitted to run on the operating system. The intent of this control is to ensure that an approval process is in place to authorize which applications/processes can run.

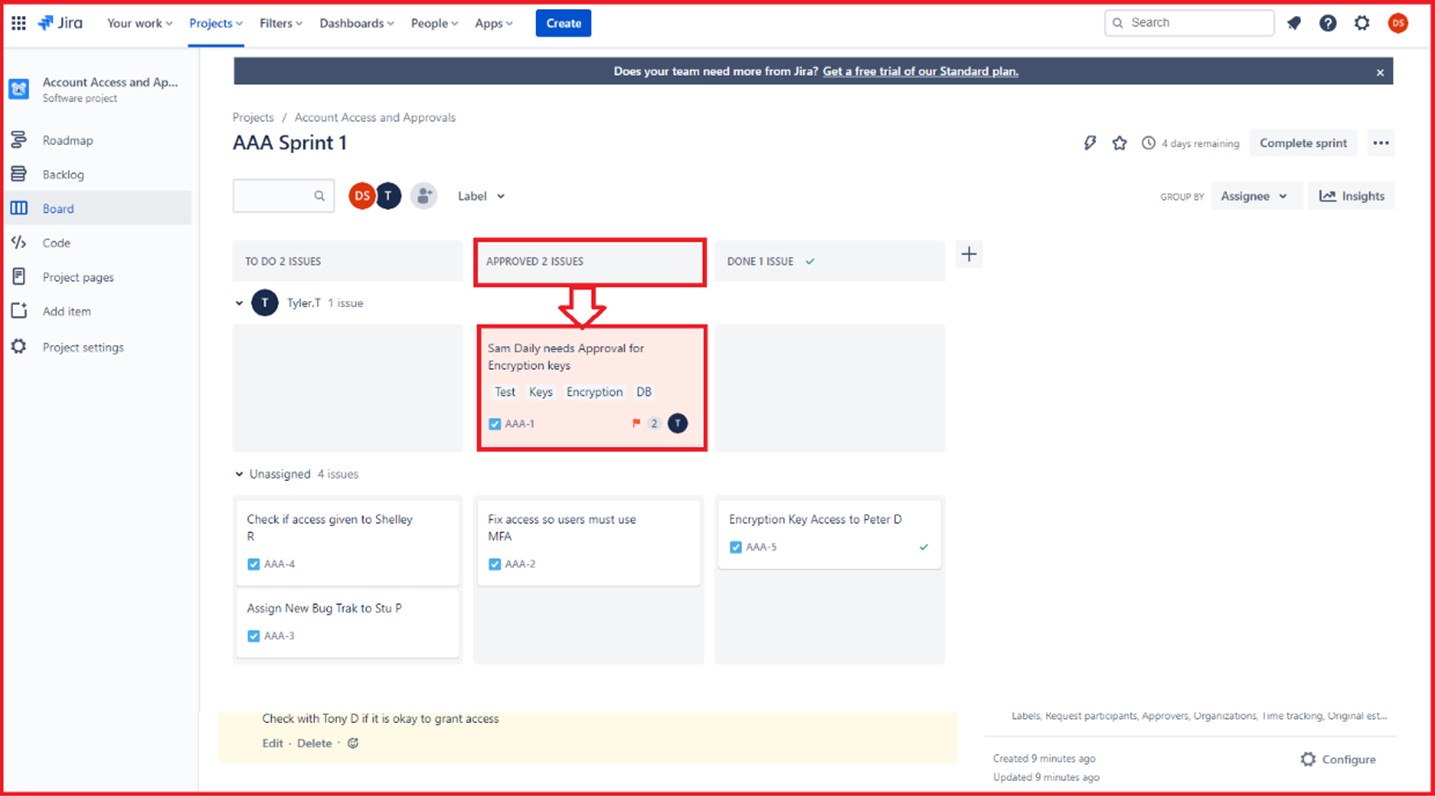

Example Evidence Guidelines: Evidence can be provided showing that the approval process is being followed. This may be provided with signed documents, tracking within change control systems or using something like Azure DevOps or JIRA to track these requests and authorization.

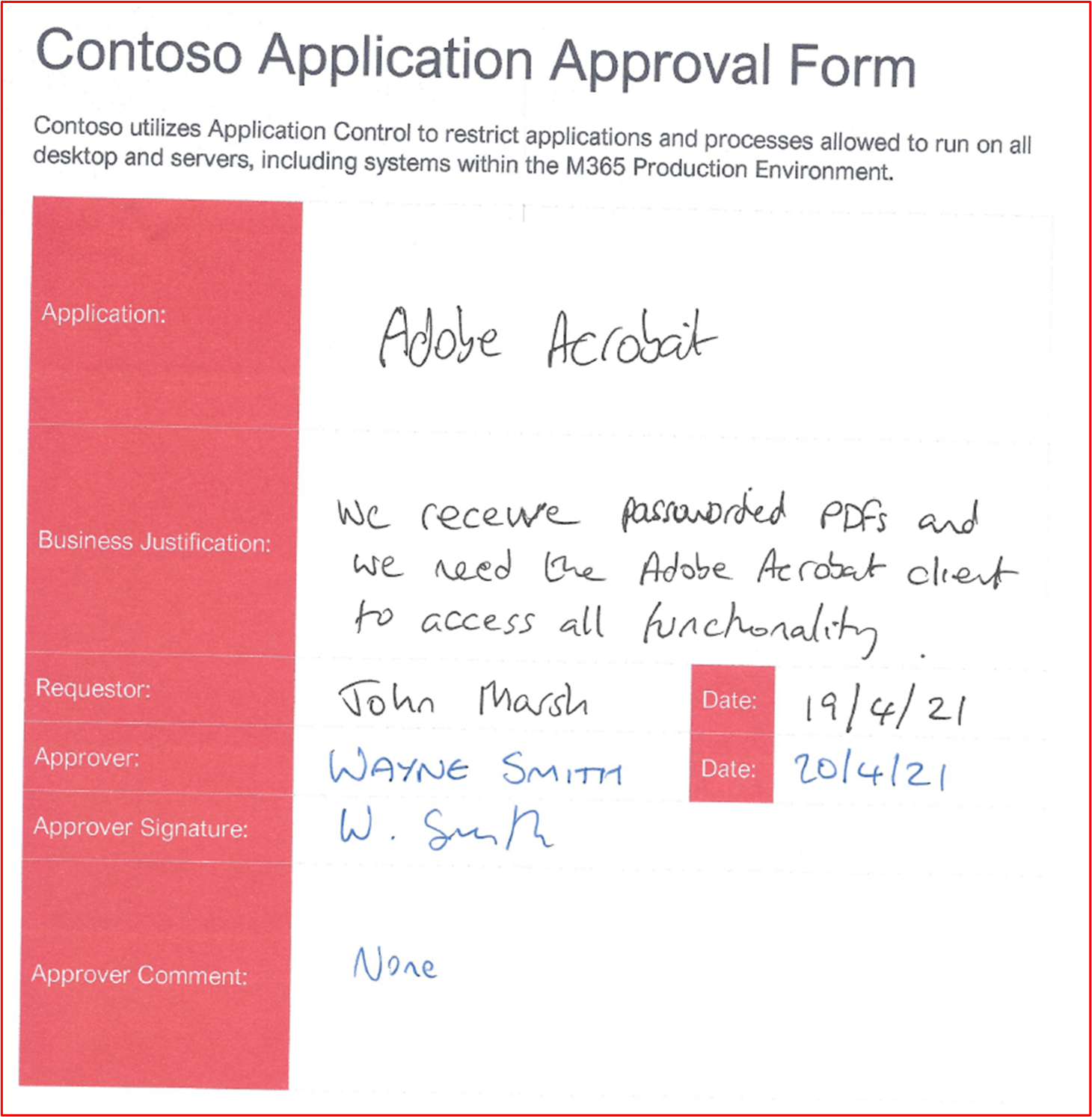

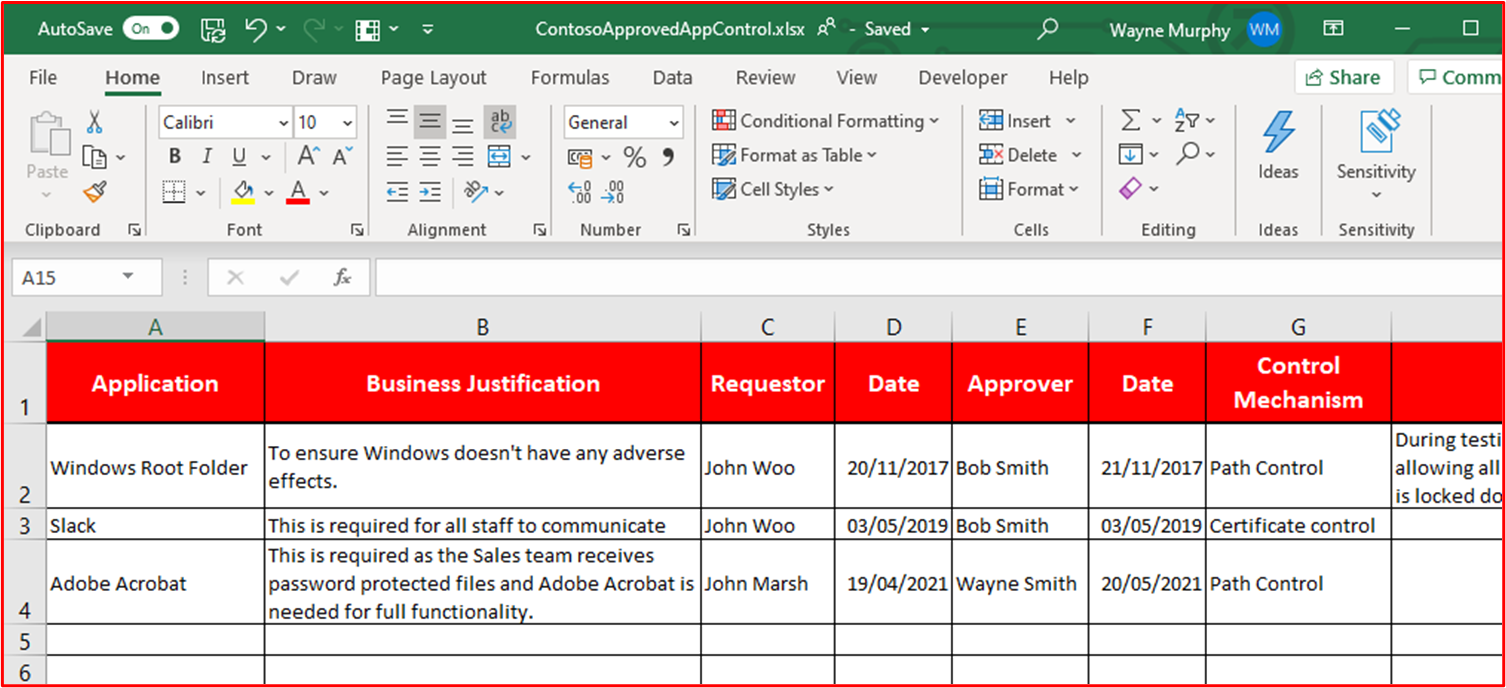

Example Evidence: The following screenshot demonstrates management approval, showing that each application permitted to run within the environment follows an approval process. This is a paper-based process at Contoso; however, other mechanisms may be used.

Control #7: Provide demonstrable evidence that a complete list of approved applications with business justification exists and is maintained.

Intent: It's important that organizations maintain a list of all applications that have been approved, along with information on why the application/process has been approved. This will help ensure the configuration stays current and can be reviewed against a baseline to ensure unauthorized applications/processes aren't configured.

Example Evidence Guidelines: Supply the documented list of approved applications/processes along with the business justification.

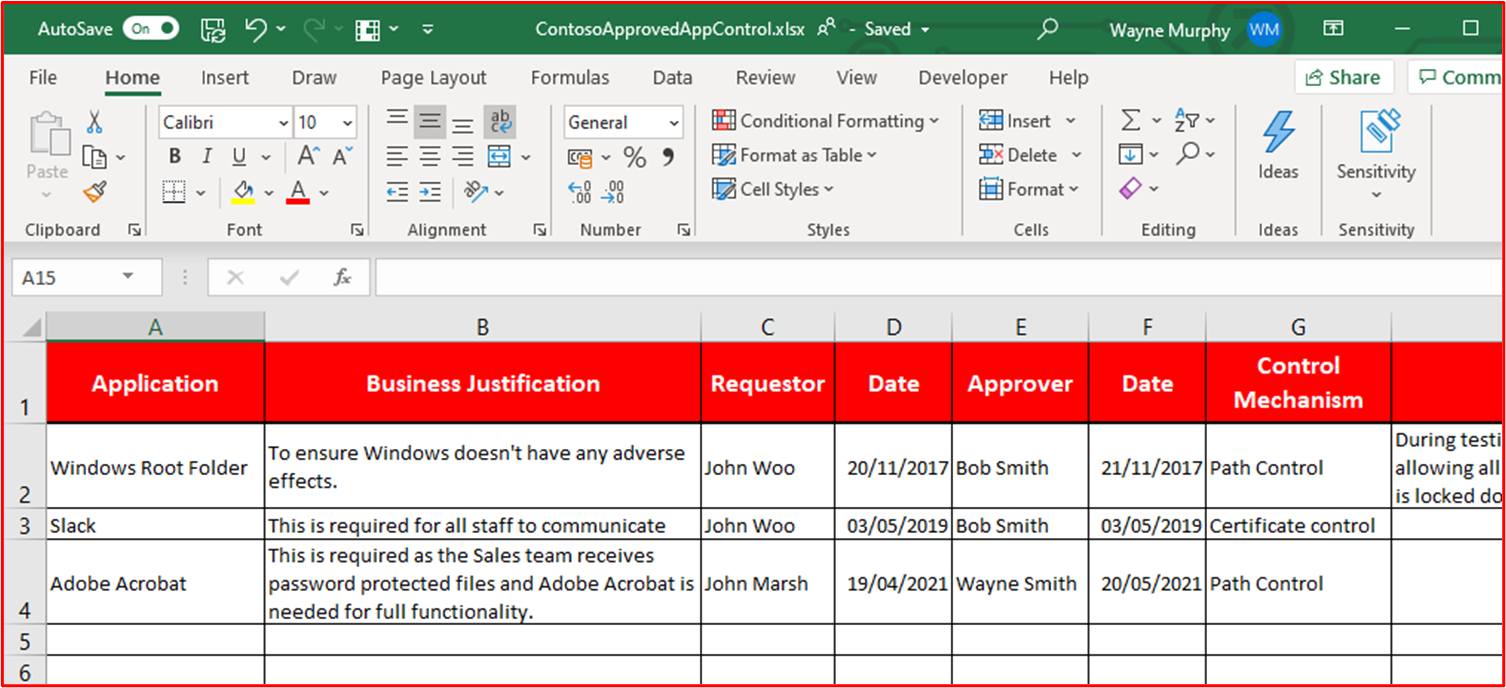

Example Evidence: The following screenshot lists the approved applications with business justification.

Note: This screenshot shows a document. The expectation is for ISVs to share the actual supporting document and not to provide a screenshot.
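Reviewing a device against the approved list, as described in the intent above, amounts to a set comparison. A minimal Python sketch follows; the `APPROVED` mapping, its entries, and the helper names are hypothetical examples, and in practice the observed process names would come from your inventory or application control tooling.

```python
# Hypothetical approved-application list: process name -> business justification.
APPROVED = {
    "chrome.exe": "Standard web browser for staff",
    "sqlservr.exe": "Database engine for the in-scope application",
    "legacytool.exe": "",
}


def unapproved(observed: set[str], approved: dict[str, str]) -> set[str]:
    """Return processes seen on a device that aren't on the approved list."""
    return observed - set(approved)


def missing_justification(approved: dict[str, str]) -> list[str]:
    """Return approved entries that have no recorded business justification."""
    return sorted(name for name, why in approved.items() if not why.strip())


if __name__ == "__main__":
    seen_on_device = {"chrome.exe", "sqlservr.exe", "putty.exe"}
    print(unapproved(seen_on_device, APPROVED))   # {'putty.exe'}
    print(missing_justification(APPROVED))        # ['legacytool.exe']
```

Both checks matter for this control: the list must cover what actually runs, and every entry on it must carry a business justification.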

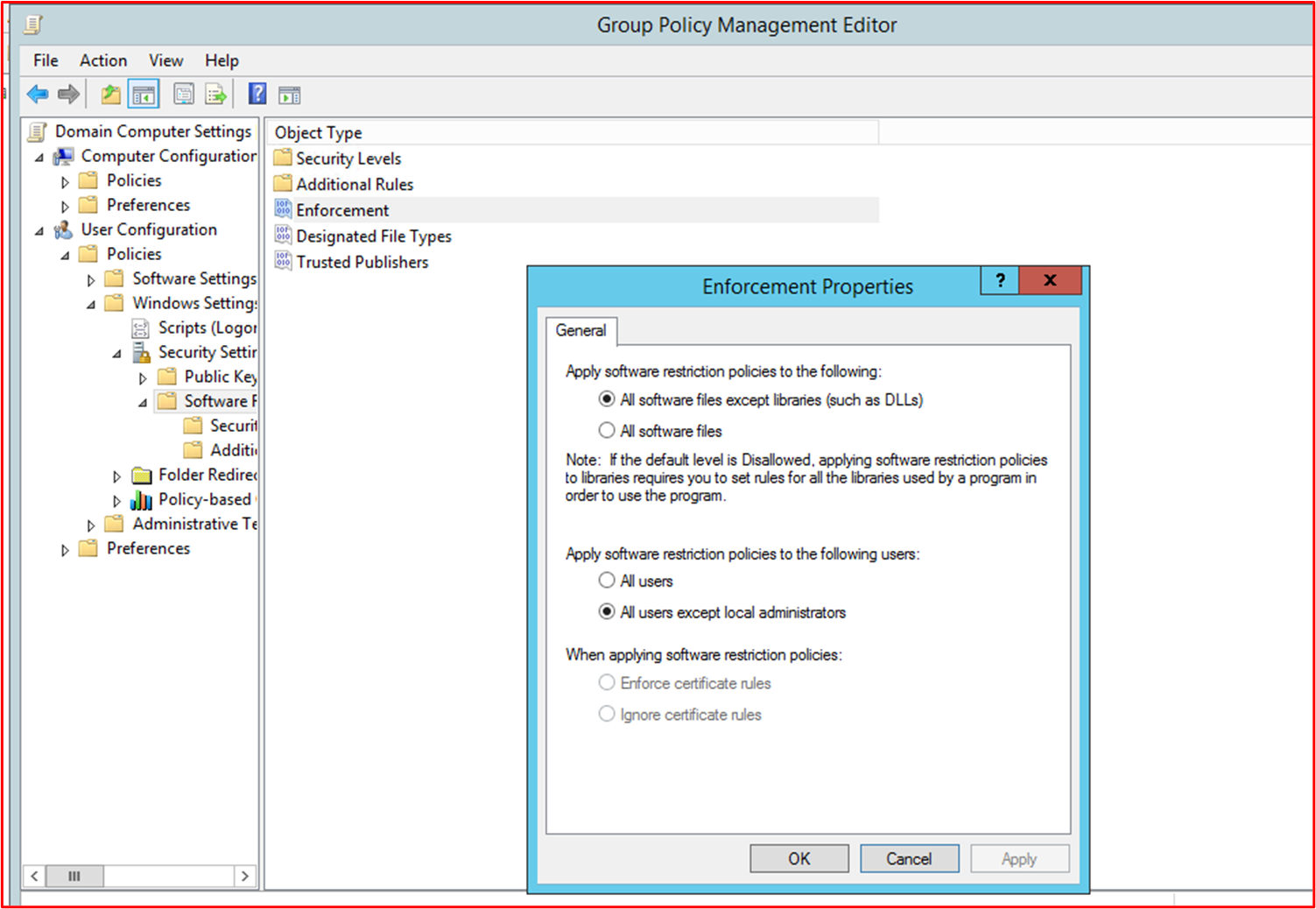

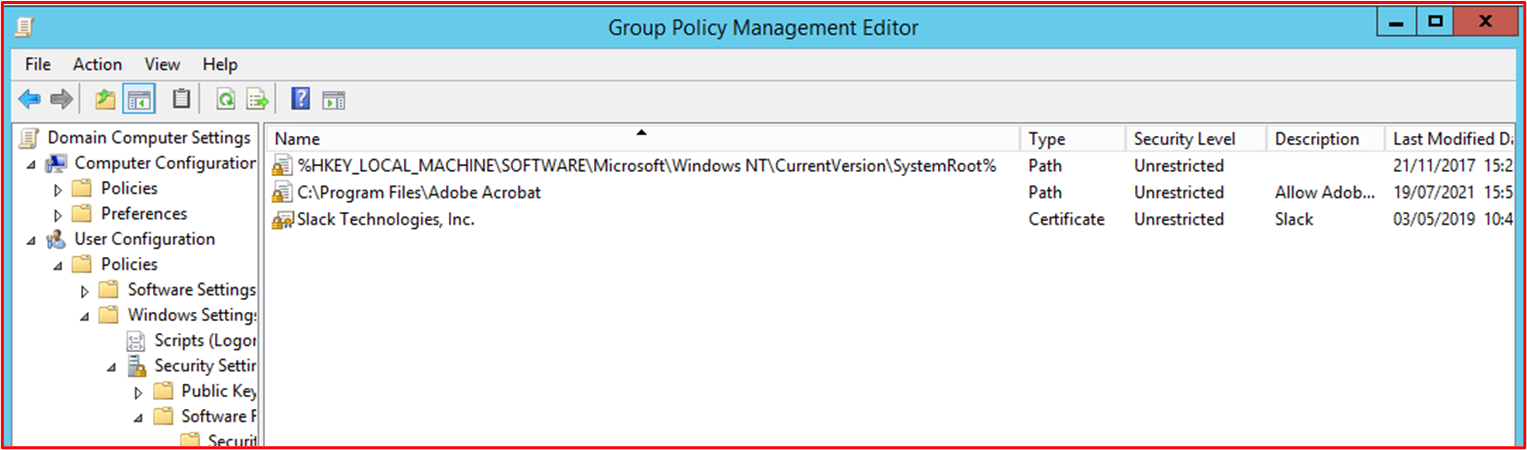

Control #8: Provide supporting documentation detailing that application control software is configured to meet specific application control mechanisms.

Intent: The configuration of the application control technology should be documented along with a process for maintaining the technology, that is, how to add and delete applications/processes. As part of this documentation, the type of mechanism used should be detailed for each application/process. This will feed into the next control to ensure the technology is configured as documented.

Example Evidence Guidelines: Provide supporting documentation detailing how application control has been set up and how each application/process has been configured within the technology.

Example Evidence: The following screenshot lists the control mechanism used to implement application control. You can see below that one app uses certificate rules and the others use file path rules.

Note: This screenshot shows a document. The expectation is for ISVs to share the actual supporting document and not to provide a screenshot.

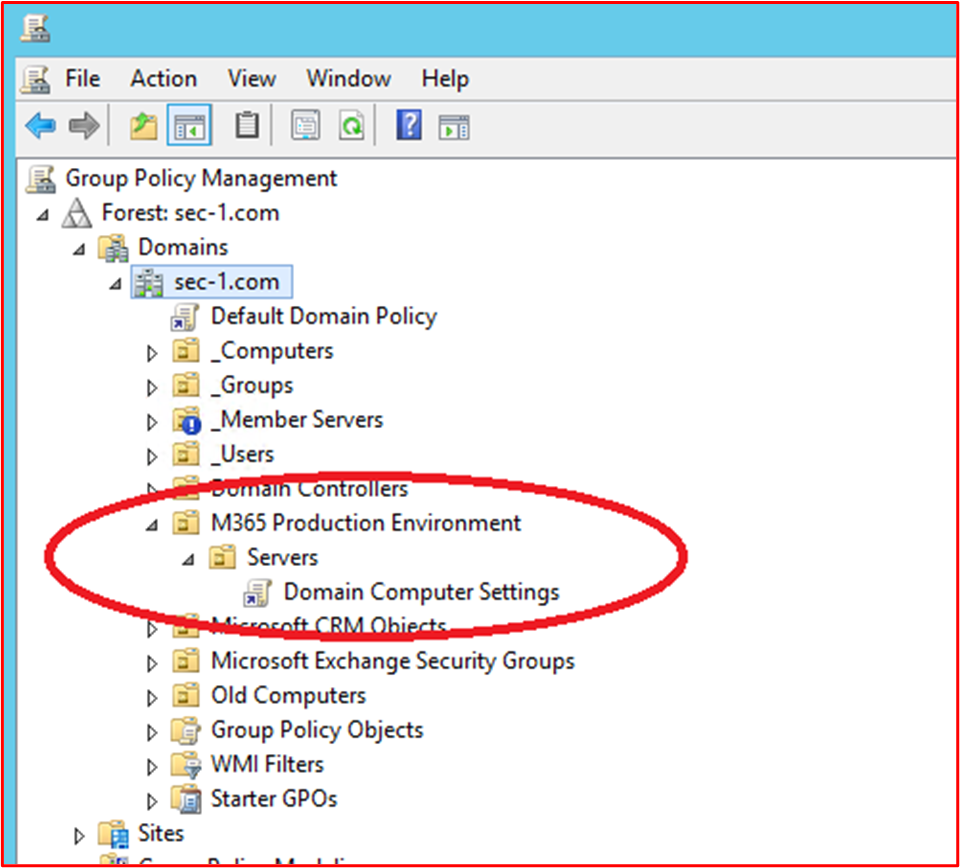

Control #9: Provide demonstrable evidence that application control is configured as documented on all sampled system components.

Intent: The intent of this is to validate that application control is configured across the sample as per the documentation.

Example Evidence Guidelines: Provide a screenshot for every device in the sample to show that it has application controls configured and activated. This should show machine names, the groups they belong to, and the application control policies applied to those groups and machines.

Evidence Example: The following screenshot shows a Group Policy object with Software Restriction Policies enabled.

This next screenshot shows the configuration in line with the control above.

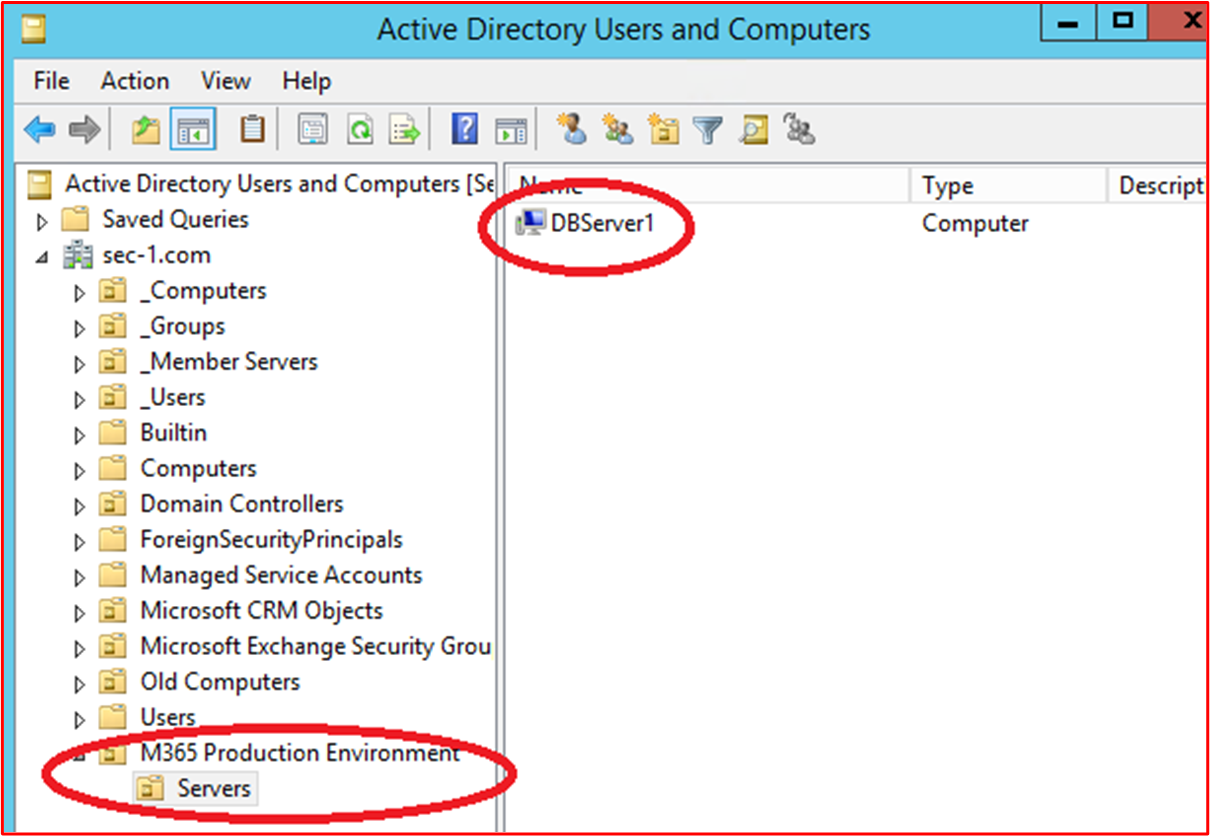

This next screenshot shows the Microsoft 365 environment and the in-scope computers to which this GPO, 'Domain Computer Settings', is applied.

This final screenshot shows that the in-scope server "DBServer1" is within the OU shown in the screenshot above.

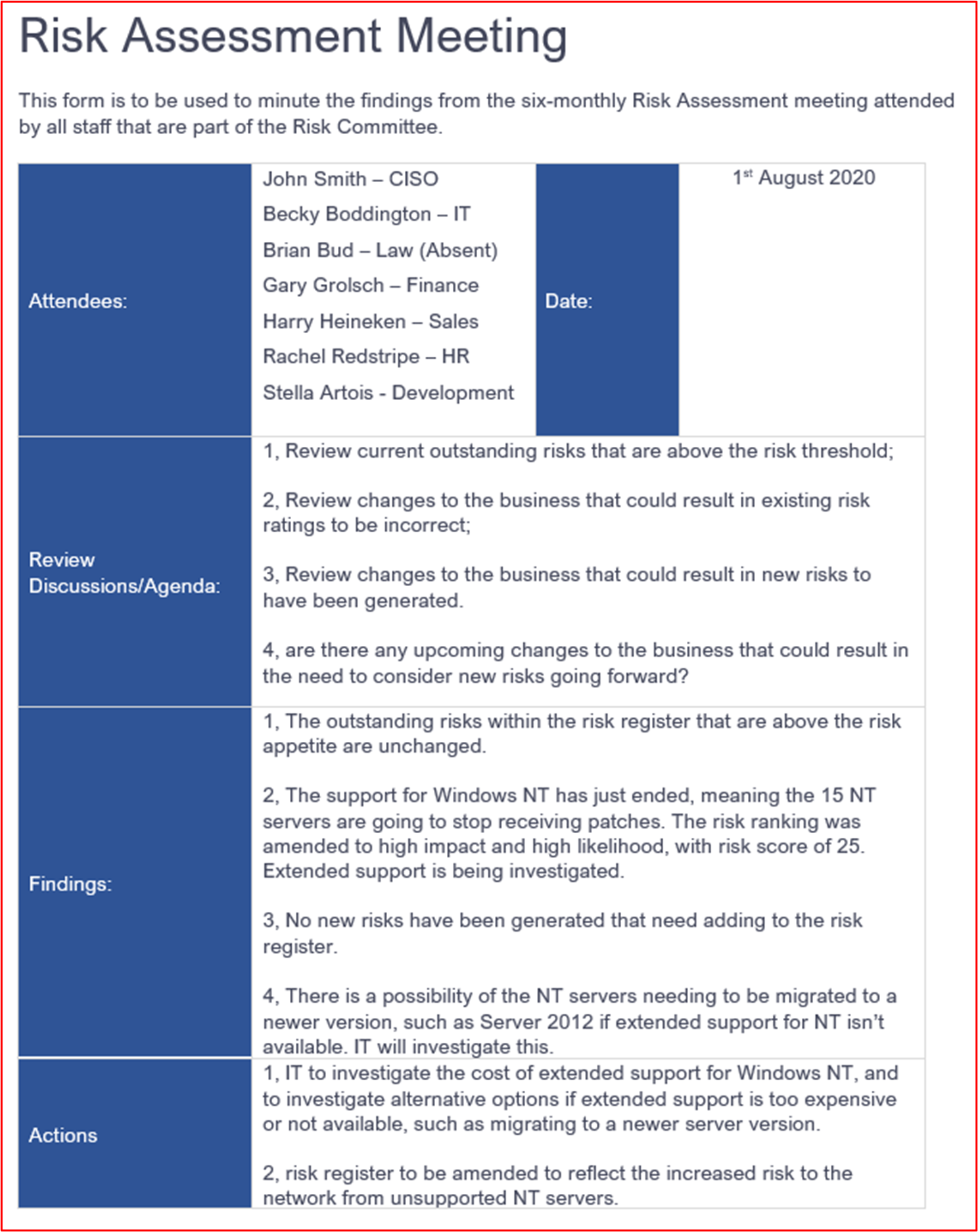

Patch Management – Risk Ranking

The swift identification and remediation of security vulnerabilities helps to minimize the risks of an activity group compromising the environment or application. Patch management is split into two sections: risk ranking and patching. These three controls cover the identification of security vulnerabilities and ranking them according to the risk they pose.

This security control group is in scope for Platform-as-a-Service (PaaS) hosting environments since the application/add-in third-party software libraries and code base must be patched based upon the risk ranking.

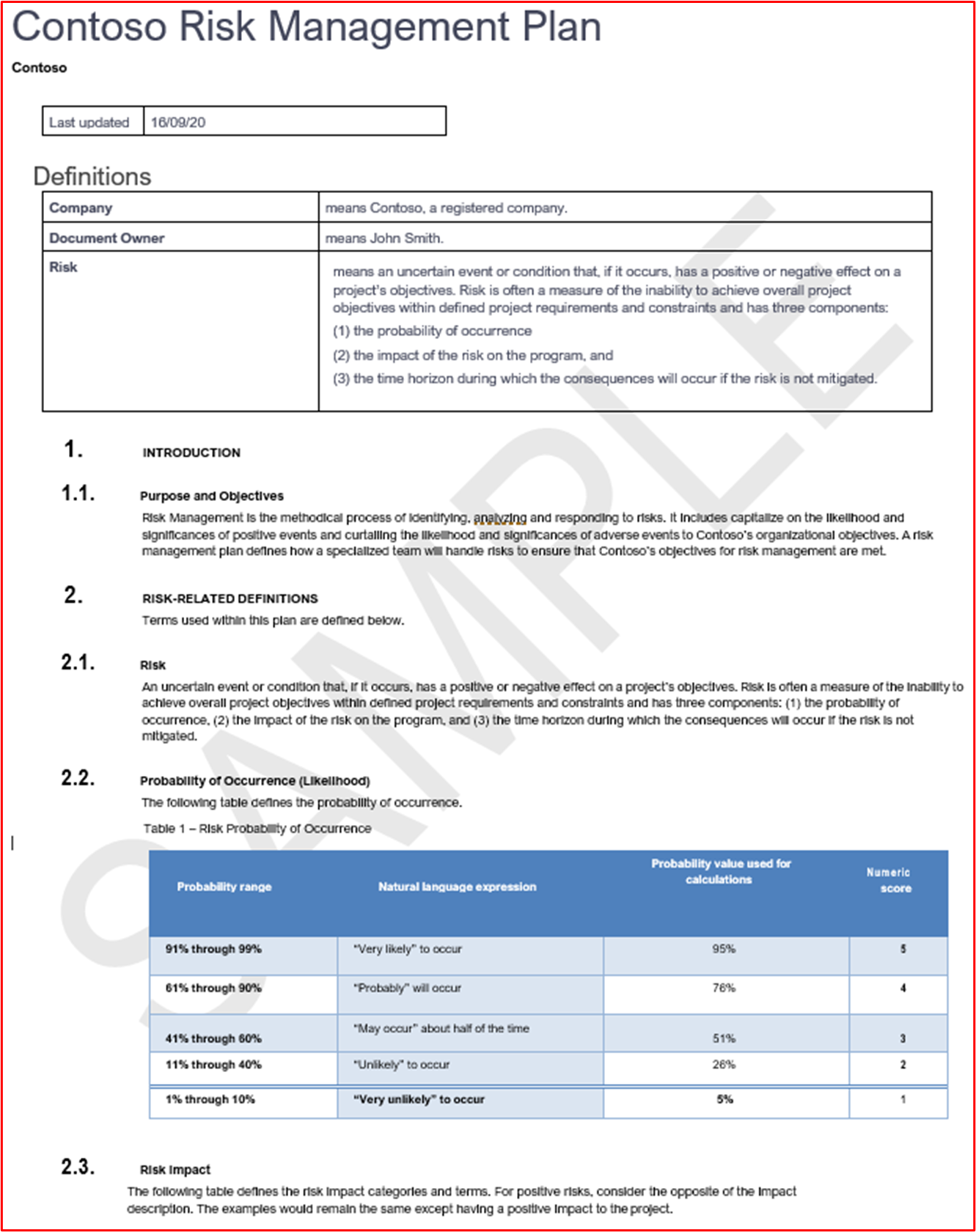

Control #10: Supply policy documentation that governs how new security vulnerabilities are identified and assigned a risk score.

- Intent: The intent of this control is to have supporting documentation to ensure security vulnerabilities are identified quickly, reducing the window of opportunity activity groups have to exploit them. A robust mechanism needs to be in place to identify vulnerabilities covering all the system components in use by the organization; for example, operating systems (Windows Server, Ubuntu, etc.), applications (Tomcat, MS Exchange, SolarWinds, etc.), and code dependencies (AngularJS, jQuery, etc.). Organizations need not only to ensure the timely identification of vulnerabilities within the estate but also to rank any vulnerabilities accordingly, so that remediation is carried out within a suitable timeframe based on the risk each vulnerability presents.

Note: Even if you're running within a purely Platform-as-a-Service environment, you still have a responsibility to identify vulnerabilities within your code base, including third-party libraries.

Example Evidence Guidelines: Supply the supporting documentation (not screenshots).

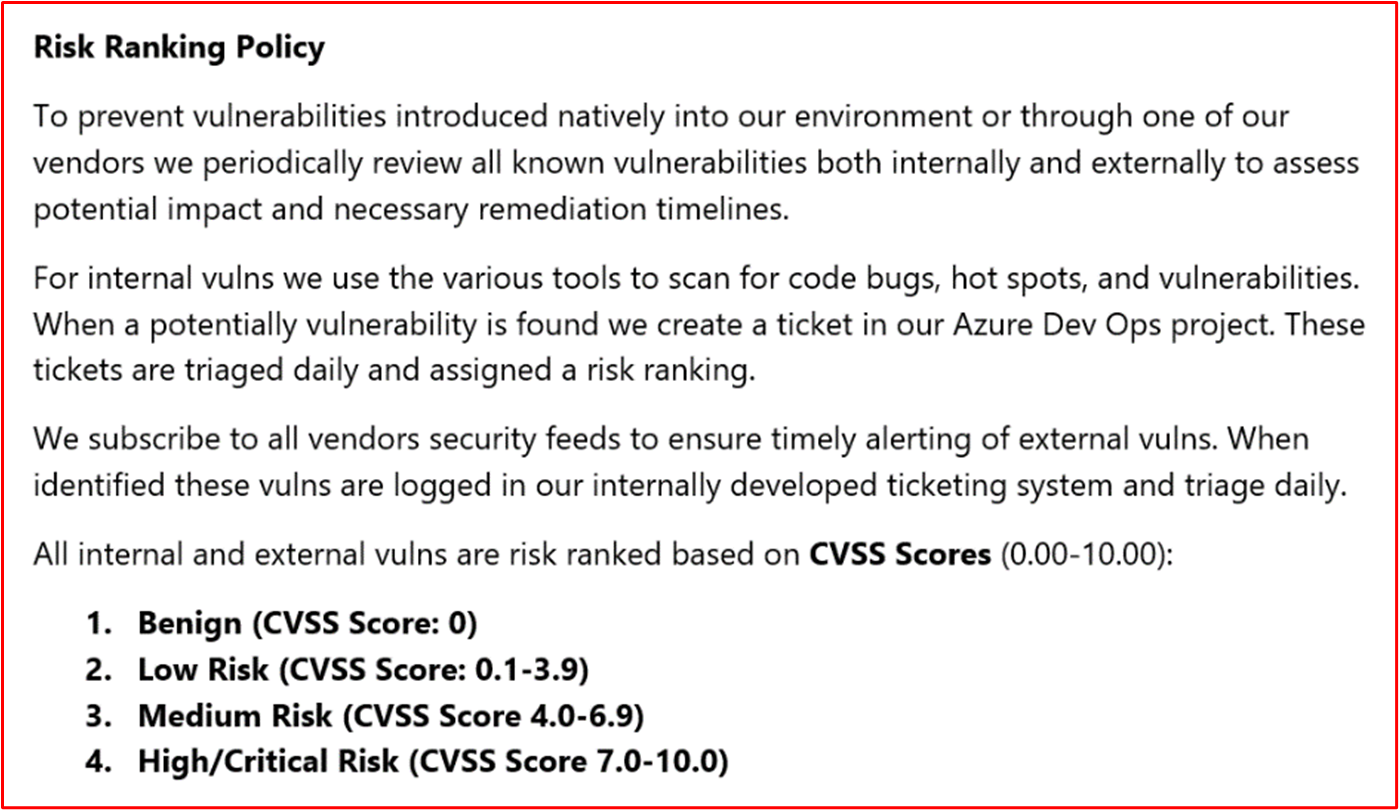

Example Evidence: This screenshot shows a snippet of a risk ranking policy.

Note: This screenshot shows a policy/process document. The expectation is for ISVs to share the actual supporting policy/procedure documentation and not provide a screenshot.

Control #11: Provide evidence of how new security vulnerabilities are identified.

Intent: The intent of this control is to ensure the process is being followed and is robust enough to identify new security vulnerabilities across the environment. This isn't limited to operating systems; it may include applications running within the environment and any code dependencies.

Example Evidence Guidelines: Evidence may be provided by showing subscriptions to mailing lists; manual reviews of security sources for newly released vulnerabilities (these would need to be adequately tracked with timestamps of the activities, for example, with JIRA or Azure DevOps); or tooling that finds out-of-date software (for example, Snyk when looking for out-of-date software libraries, or Nessus using authenticated scans that identify out-of-date software).

Note: If using Nessus, scans would need to be run regularly to identify vulnerabilities quickly. We recommend at least weekly.

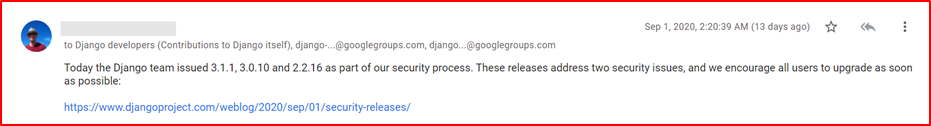

- Example Evidence: This screenshot demonstrates that a mailing group is being used to be notified of security vulnerabilities.

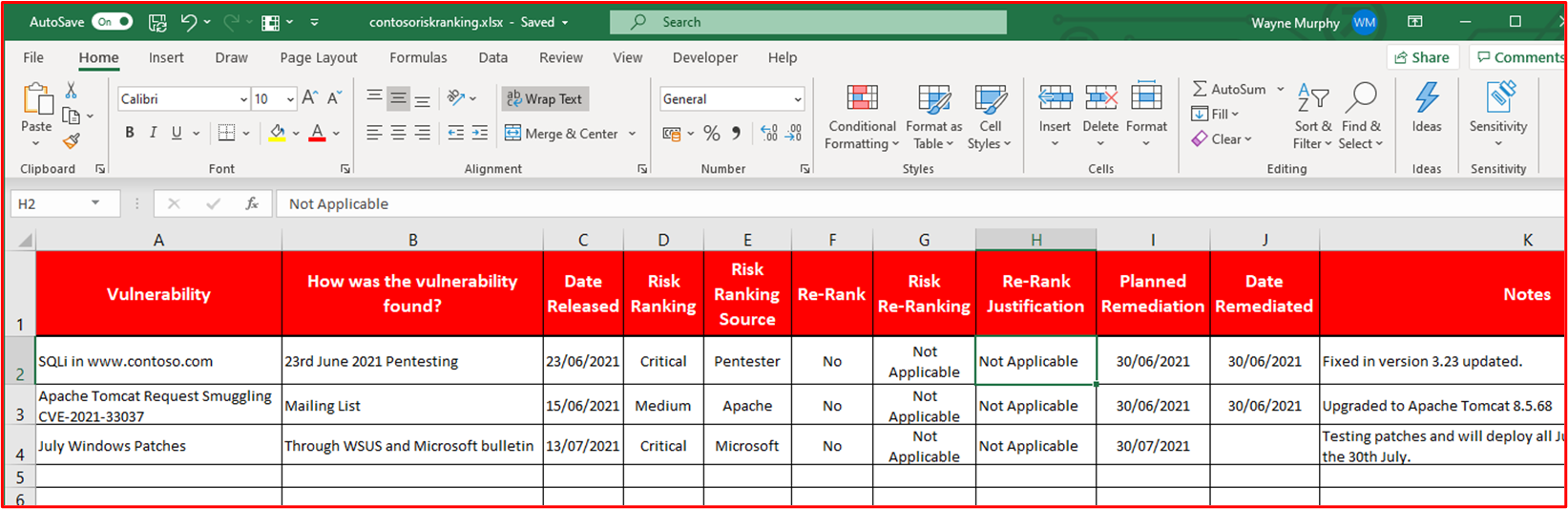

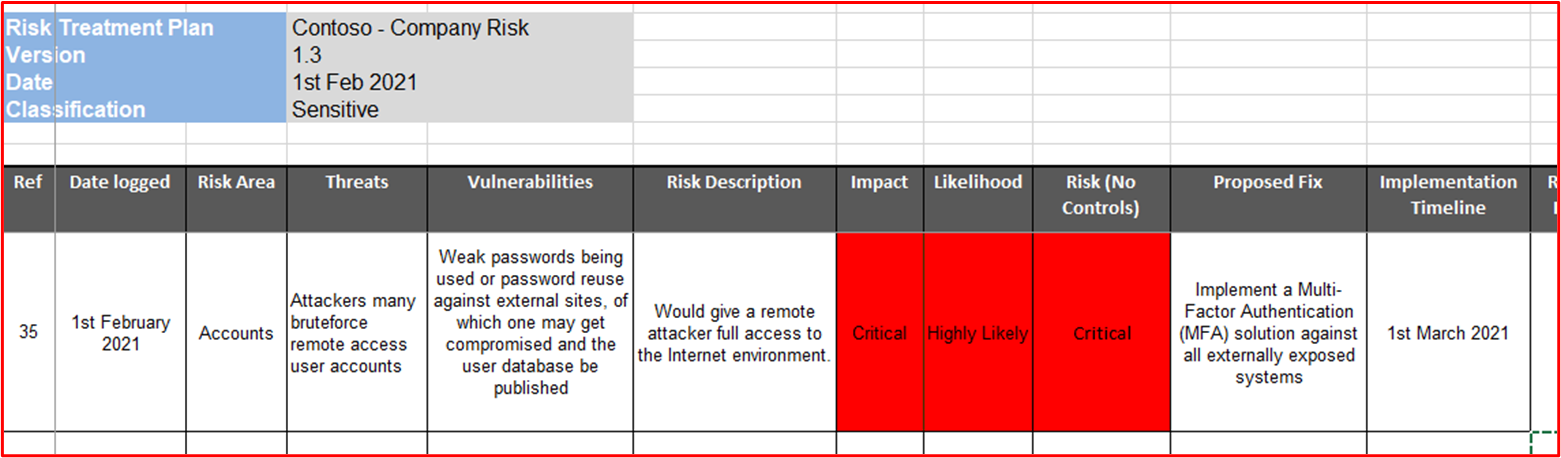
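Tools such as Snyk or Nessus perform this comparison for you, but the underlying idea of flagging out-of-date components can be sketched in a few lines. This Python example assumes purely numeric dotted version strings and a hypothetical mapping of component to first fixed version; the component names and version numbers are illustrative only, not real advisory data.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Parse a purely numeric dotted version such as '9.0.50'.

    Assumption: versions with suffixes (for example '1.1.1k') aren't handled."""
    return tuple(int(part) for part in version.split("."))


def is_older(installed: str, fixed: str) -> bool:
    """Compare versions, padding with zeros so '1.2' and '1.2.0' are equal."""
    a, b = parse_version(installed), parse_version(fixed)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b


def out_of_date(installed: dict[str, str], first_fixed: dict[str, str]) -> list[str]:
    """Return components whose installed version predates the first fixed version."""
    return [
        name
        for name, version in sorted(installed.items())
        if name in first_fixed and is_older(version, first_fixed[name])
    ]


if __name__ == "__main__":
    # Illustrative inventory and advisory data only.
    inventory = {"tomcat": "9.0.50", "jquery": "3.6.0"}
    advisories = {"tomcat": "9.0.52", "jquery": "3.5.0"}
    print(out_of_date(inventory, advisories))  # ['tomcat']
```

Versions are compared numerically rather than as strings, since a string comparison would wrongly rank '9.0.9' above '9.0.10'.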

Control #12: Provide evidence demonstrating that all vulnerabilities are assigned a risk ranking once identified.

Intent: Patching needs to be based upon risk: the riskier the vulnerability, the quicker it needs to be remediated. Risk ranking of identified vulnerabilities is an integral part of this process. The intent of this control is to ensure there's a documented risk ranking process that is being followed, so that all identified vulnerabilities are suitably ranked based upon risk. Organizations usually use the CVSS (Common Vulnerability Scoring System) rating provided by vendors or security researchers. If an organization relies on CVSS, it's recommended that a re-ranking mechanism is included within the process to allow the organization to change the ranking based upon an internal risk assessment. Sometimes a vulnerability may not be applicable due to the way the application has been deployed within the environment. For example, a Java vulnerability may be released that impacts a specific library that isn't used by the organization.

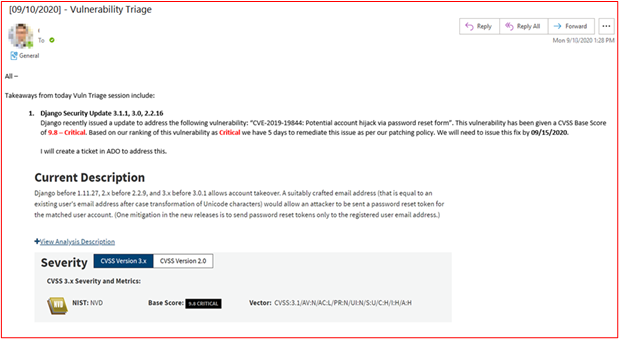

Example Evidence Guidelines: Provide evidence by way of screenshot or other means, for example, DevOps/Jira, which demonstrates that vulnerabilities are going through the risk ranking process and being assigned an appropriate risk ranking by the organization.

Example Evidence: This screenshot shows risk ranking occurring within column D and re-ranking in columns F and G, should the organization perform a risk assessment and determine that the risk can be downgraded. Evidence of re-ranking risk assessments would need to be supplied as supporting evidence.
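Where CVSS is used as the starting point, the mapping from a CVSS v3.x base score to a qualitative rating is fixed by the specification (None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0). The Python sketch below applies that mapping and then allows an internal re-ranking only when a risk-assessment justification is recorded; the `final_ranking` helper and that rule are assumptions about how a re-ranking process might be enforced, not a prescribed implementation.

```python
from typing import Optional


def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def final_ranking(score: float, override: Optional[str] = None,
                  justification: str = "") -> str:
    """Return the CVSS-based rating unless an internal re-ranking is supplied.

    Hypothetical rule: a re-ranking is accepted only with a documented risk
    assessment, since that assessment must be supplied as supporting evidence."""
    if override is None:
        return cvss_severity(score)
    if not justification.strip():
        raise ValueError("re-ranking requires a documented risk assessment")
    return override


if __name__ == "__main__":
    print(final_ranking(9.8))  # Critical
    print(final_ranking(9.8, "Low", "Affected library isn't deployed"))  # Low
```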

Patch Management – Patching

The below controls cover the patching element of Patch Management. To maintain a secure operating environment, applications/add-ons and supporting systems must be suitably patched. A suitable timeframe between identification (or public release) and patching needs to be managed to reduce the window of opportunity for a vulnerability to be exploited by an activity group. The Microsoft 365 Certification doesn't stipulate a patching window; however, Certification Analysts will reject timeframes that aren't reasonable.

This security control group is in scope for Platform-as-a-Service (PaaS) hosting environments since the application/add-in third-party software libraries and code base must be patched based upon the risk ranking.

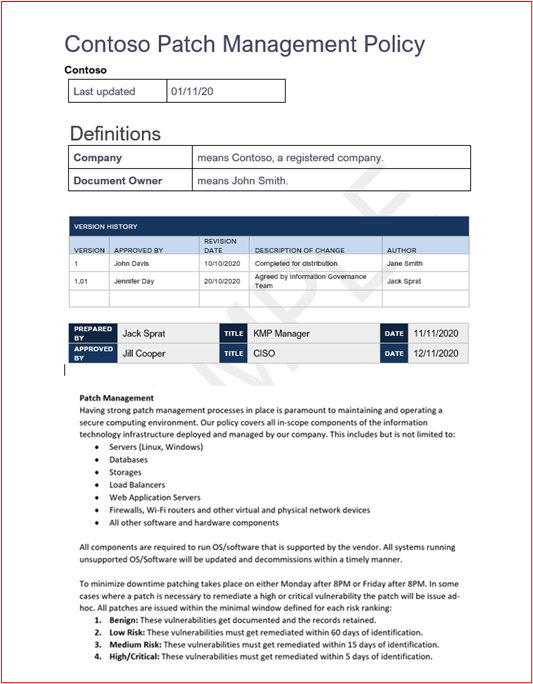

Control #13: Provide policy documentation for patching of in-scope system components that includes suitable minimal patching timeframe for critical, high, and medium risk vulnerabilities; and decommissioning of any unsupported operating systems and software.

Intent: Patch management is required by many security compliance frameworks, for example, PCI DSS, ISO 27001, and NIST SP 800-53. The importance of good patch management can't be overstated: patches correct security and functionality problems in software and firmware and mitigate vulnerabilities, reducing the opportunities for exploitation. The intent of this control is to minimize the window of opportunity an activity group has to exploit vulnerabilities that may exist within the in-scope environment.

Example Evidence Guidelines: Provide a copy of all policies and procedures detailing the process for patch management. This should include a section on a minimal patching window, and that unsupported operating systems and software must not be used within the environment.

Example Evidence: Below is an example policy document.

Note: This screenshot shows a policy/process document. The expectation is for ISVs to share the actual supporting policy/procedure documentation and not simply provide a screenshot.

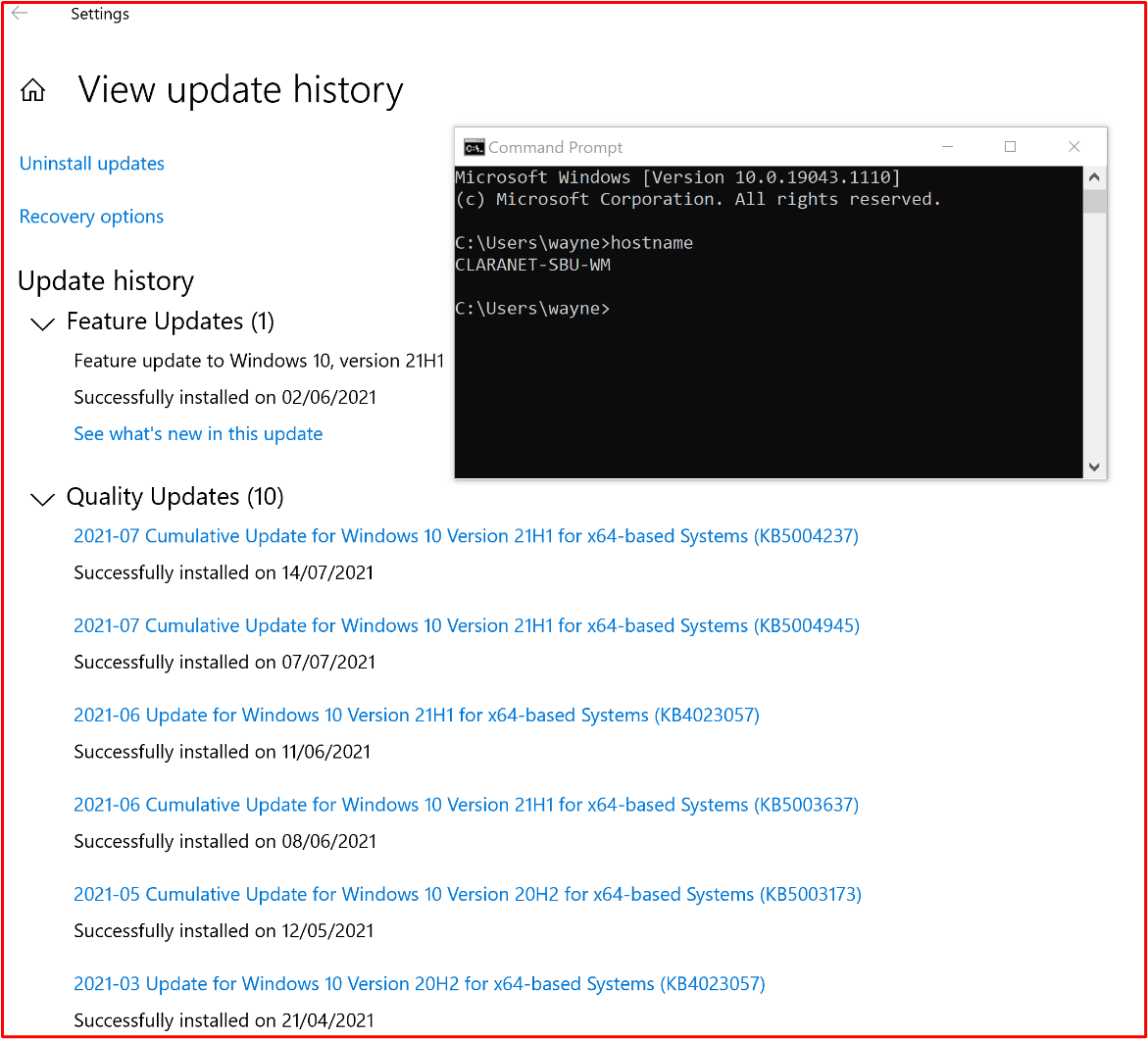

Control #14: Provide demonstrable evidence that all sampled system components are being patched.

Note: Include any software/third-party libraries.

Intent: Patching vulnerabilities ensures that the differing modules that form part of the information technology infrastructure (hardware, software, and services) are kept up to date and free from known vulnerabilities. Patching needs to be carried out as soon as possible to minimize the potential for a security incident between the release of vulnerability details and patching. This is even more critical where vulnerabilities are known to be exploited in the wild.

Example Evidence Guidelines: Provide a screenshot for every device in the sample and supporting software components showing that patches are installed in line with the documented patching process.

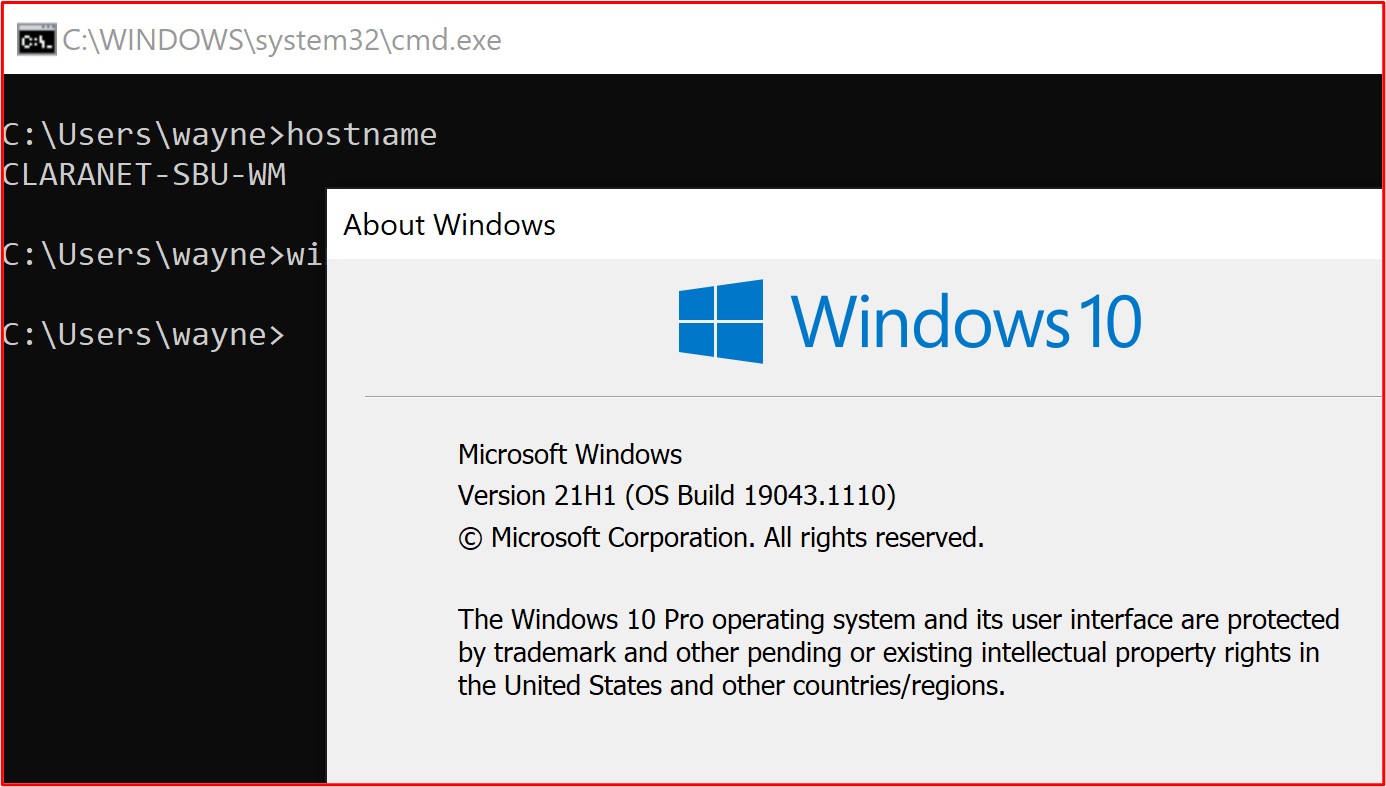

Example Evidence: The following screenshot shows that the in-scope system component "CLARANET-SBU-WM" is carrying out Windows updates in line with the patching policy.

Note: Patching of all the in-scope system components needs to be evidenced. This includes OS updates, application/component updates (for example, Apache Tomcat, OpenSSL), software dependencies (for example, jQuery, AngularJS), and so on.

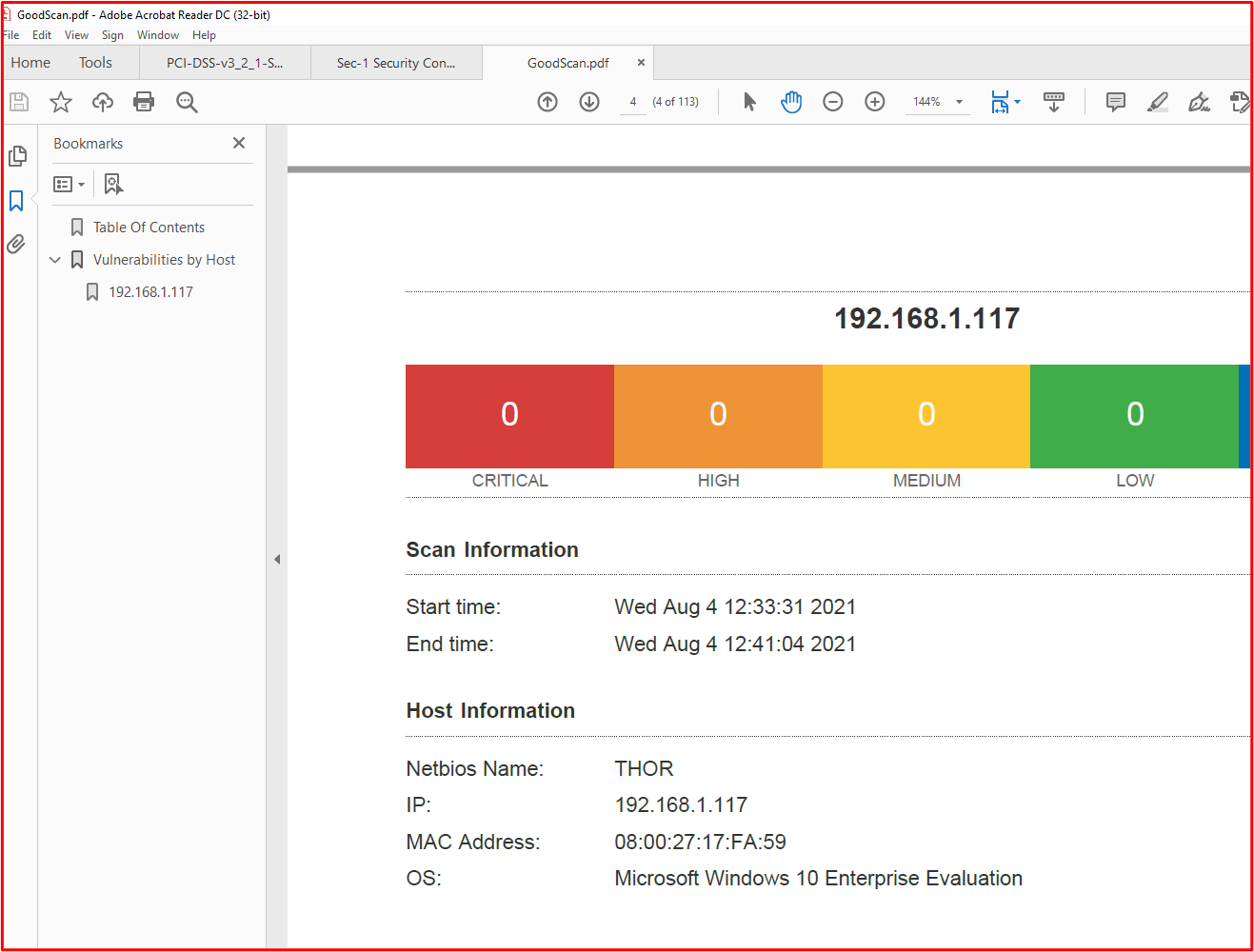

Control #15: Provide demonstrable evidence that any unsupported operating systems and software components aren't used within the environment.

Intent: Software that isn't being maintained by vendors will, over time, accumulate known vulnerabilities that aren't fixed. Therefore, unsupported operating systems and software components must not be used within production environments.

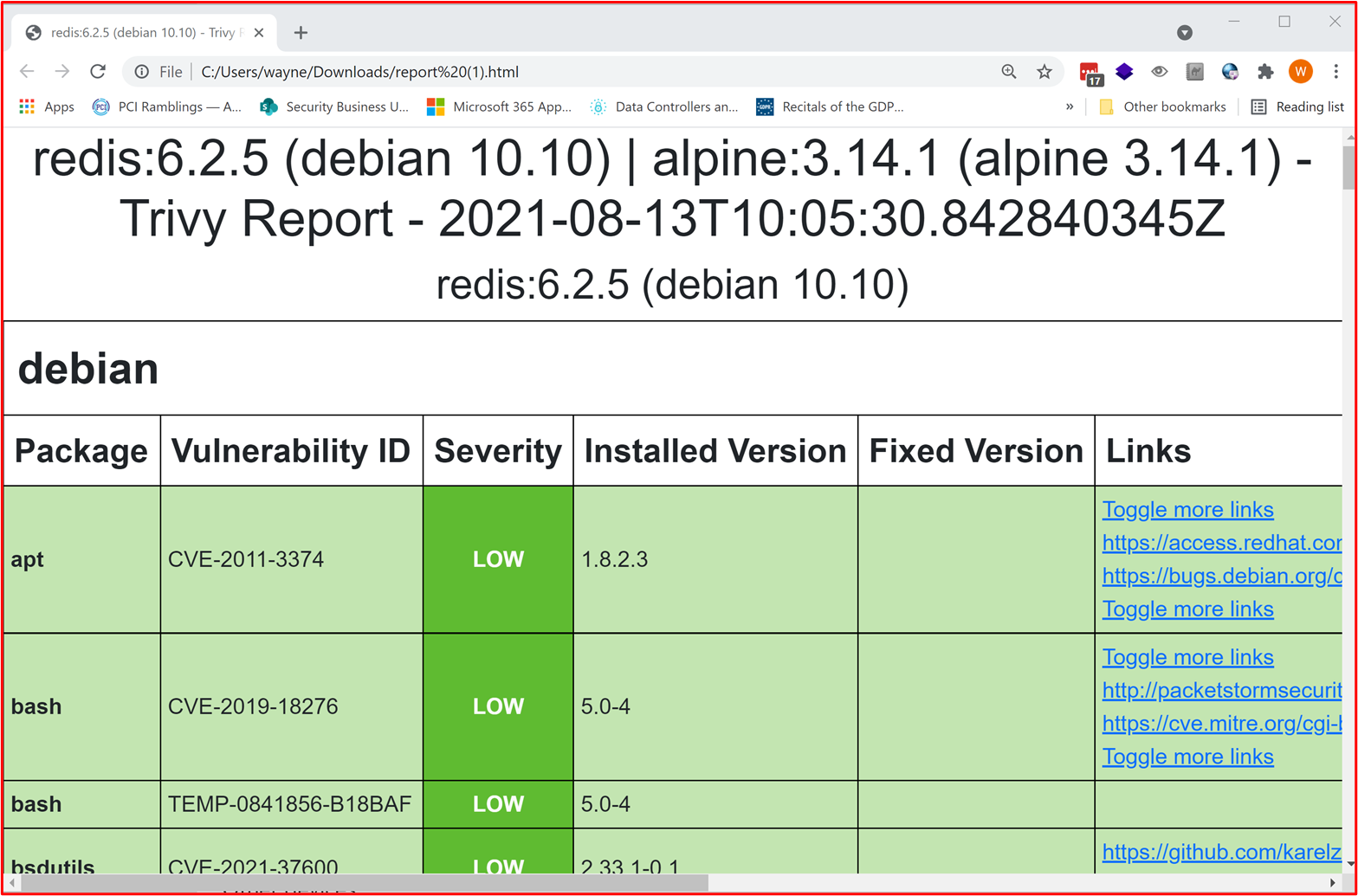

Example Evidence Guidelines: Provide a screenshot for every device in the sample showing the version of OS running (including the server's name in the screenshot). In addition, provide evidence that software components running within the environment are running supported versions. This may be done by providing the output of internal vulnerability scan reports (provided that authenticated scanning is included) and/or the output of tools that check third-party libraries, such as Snyk, Trivy, or NPM Audit. If running in PaaS only, only third-party library patching needs to be covered by the patching control groups.

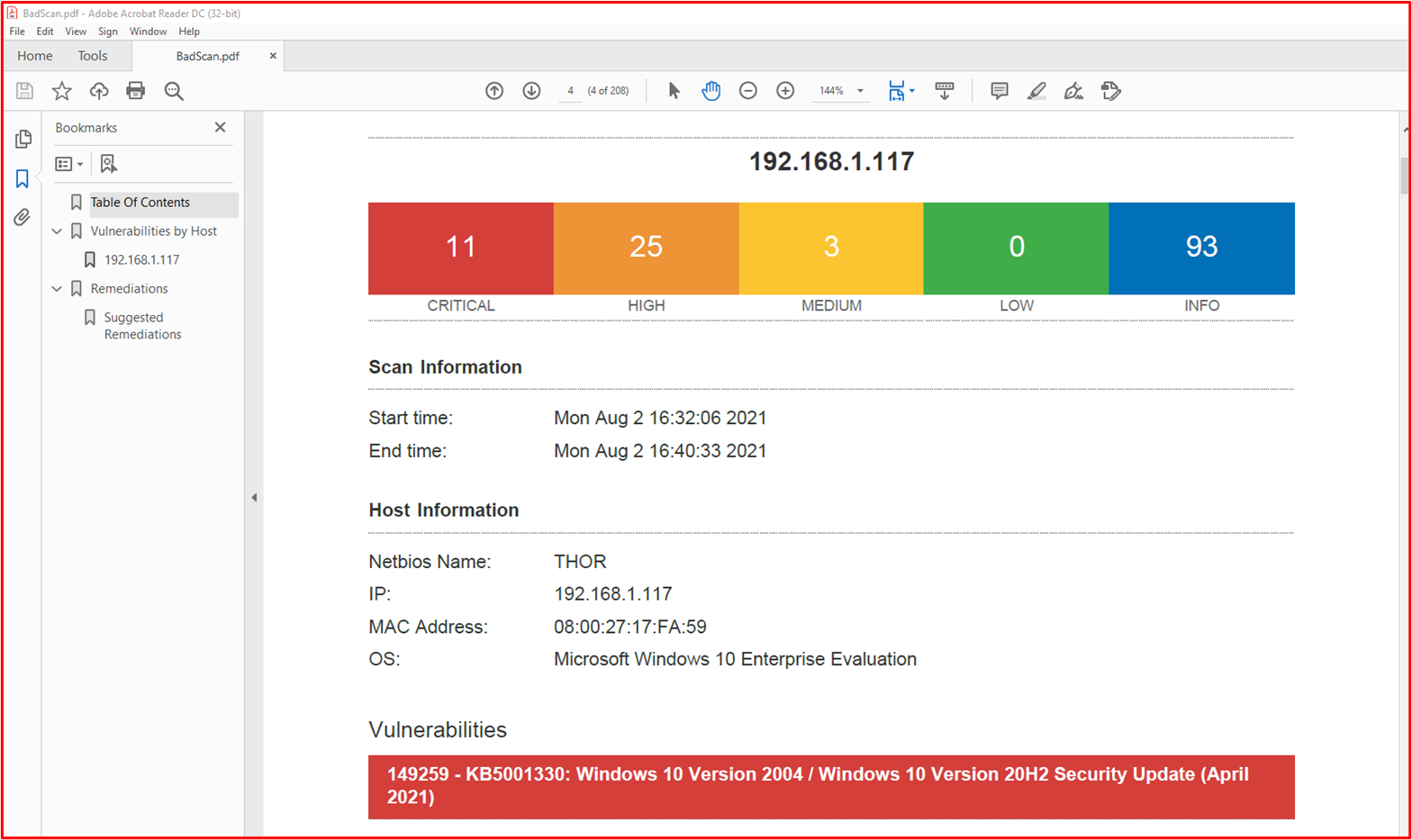

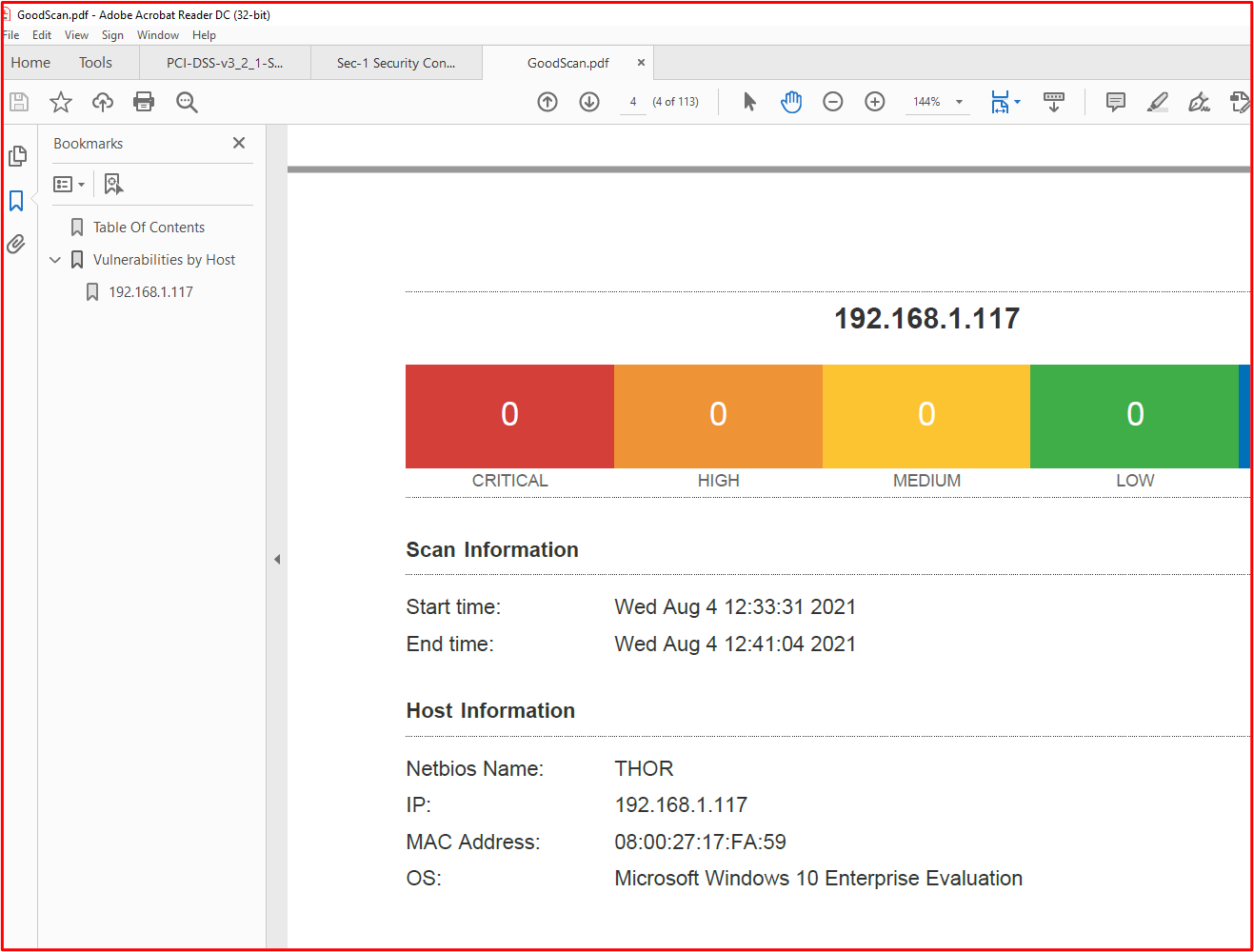

Example Evidence: The following evidence shows that the in-scope system component THOR is running software that is supported by the vendor since Nessus hasn't flagged any issues.

Note: The complete report must be shared with the Certification Analysts.

- Example Evidence 2

This screenshot shows that the in-scope system component "CLARANET-SBU-WM" is running on a supported Windows version.

- Example Evidence 3

The following screenshot is of the Trivy output; the complete report doesn't list any unsupported applications.

Note: The complete report must be shared with the Certification Analysts.

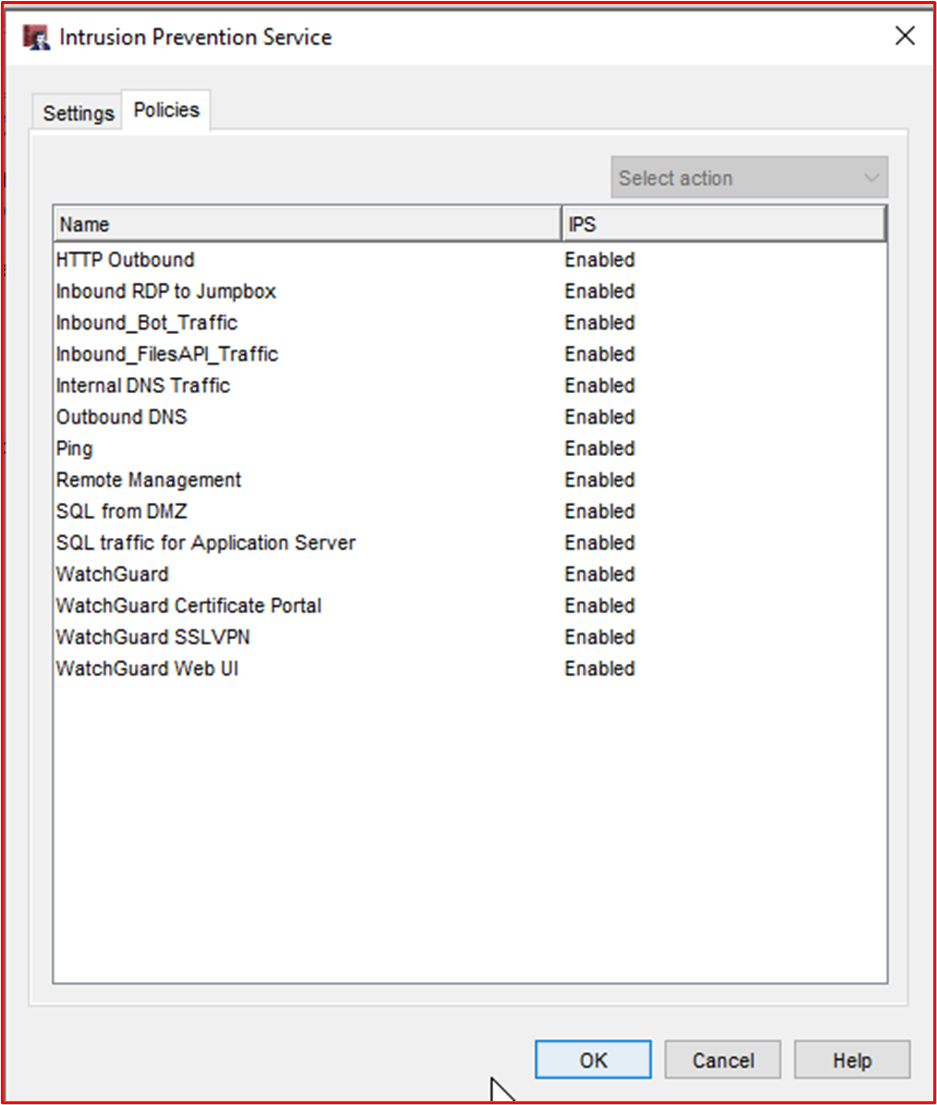

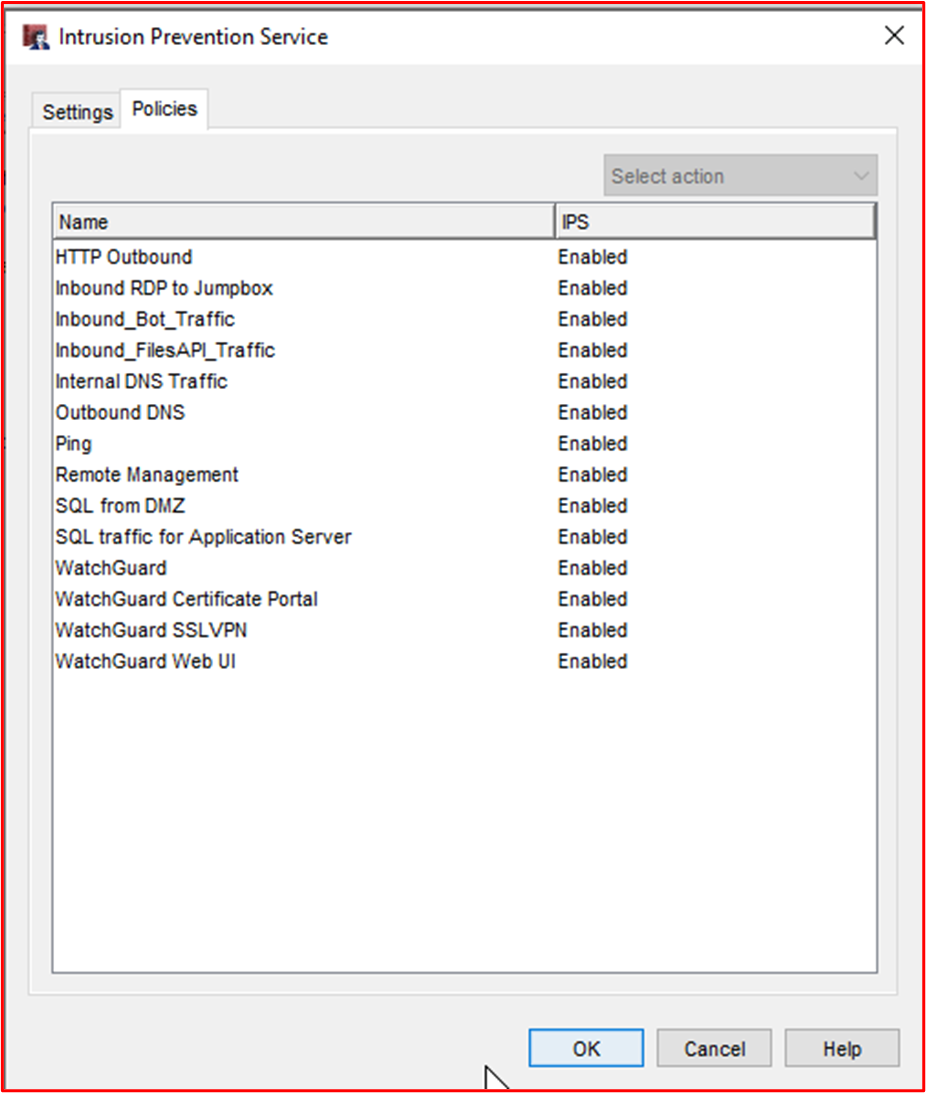

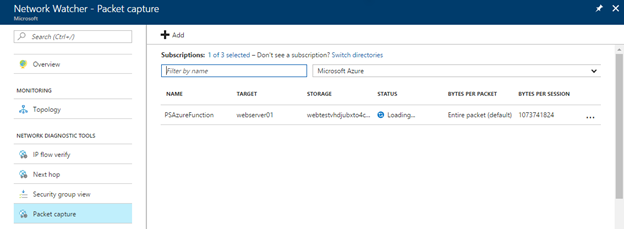
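Checking a host inventory against vendor lifecycle dates can also be scripted. This is a minimal Python sketch; the product names and dates in `END_OF_SUPPORT` are hypothetical placeholders to be replaced with the vendor's published lifecycle data, and hosts with no lifecycle entry are deliberately flagged for manual confirmation.

```python
from datetime import date

# Hypothetical lifecycle data: replace with the vendor's published end-of-support dates.
END_OF_SUPPORT = {
    "ExampleOS 1": date(2020, 1, 14),
    "ExampleOS 2": date(2029, 1, 9),
}


def unsupported_hosts(inventory: dict[str, str], as_of: date) -> list[str]:
    """Return hosts whose OS is past end of support or has no lifecycle entry."""
    flagged = []
    for host, os_name in sorted(inventory.items()):
        eos = END_OF_SUPPORT.get(os_name)
        # Unknown products are flagged so someone confirms their support status.
        if eos is None or as_of > eos:
            flagged.append(host)
    return flagged


if __name__ == "__main__":
    hosts = {"THOR": "ExampleOS 2", "LEGACY01": "ExampleOS 1", "LAB02": "UnknownOS"}
    print(unsupported_hosts(hosts, as_of=date(2021, 8, 2)))  # ['LAB02', 'LEGACY01']
```

"LEGACY01" and "LAB02" are hypothetical host names used only to show both failure cases.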

Vulnerability Scanning

By introducing regular vulnerability assessments, organizations can detect weaknesses and insecurities within their environments which may provide an entry point for a malicious actor to compromise the environment. Vulnerability scanning can help to identify missing patches or misconfigurations within the environment. By regularly conducting these scans, an organization can provide appropriate remediation to minimize the risk of a compromise due to issues that are commonly picked up by these vulnerability scanning tools.

Control #16: Provide the quarterly infrastructure and web application vulnerability scanning reports. Scanning needs to be carried out against the entire public footprint (IP addresses and URLs) and internal IP ranges.

Note: This MUST include the full scope of the environment.

Intent: Vulnerability scanning looks for possible weaknesses in an organization's computer systems, networks, and web applications to identify holes that could potentially lead to security breaches and the exposure of sensitive data. Vulnerability scanning is often required by industry standards and government regulations, for example, the PCI DSS (Payment Card Industry Data Security Standard).

A report by SecurityMetrics entitled "2020 Security Metrics Guide to PCI DSS Compliance" states that 'on average it took 166 days from the time an organization was seen to have vulnerabilities for an attacker to compromise the system. Once compromised, attackers had access to sensitive data for an average of 127 days'. This control is therefore aimed at identifying potential security weaknesses within the in-scope environment.

Example Evidence Guidelines: Provide the full scan report(s) for each quarter's vulnerability scans that have been carried out over the past 12 months. The reports should clearly state the targets to validate that the full public footprint is included, and where applicable, each internal subnet. Provide ALL scan reports for EVERY quarter.

Example Evidence: Supply the scan reports from the scanning tool being used. Each quarter's scanning reports should be supplied for review. Scanning needs to include all of the environment's system components: every internal subnet and every public IP address/URL that is available to the environment.
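To confirm that no quarter is missing before submitting, the scan dates can be grouped by calendar quarter. The Python sketch below assumes calendar quarters (January, April, July, October) and treats the past 12 months as the four quarters ending with the quarter containing the review date; both are assumptions to adjust if your scanning cadence is defined differently.

```python
from datetime import date


def quarter_of(d: date) -> tuple[int, int]:
    """Return (year, quarter) using calendar quarters starting Jan/Apr/Jul/Oct."""
    return d.year, (d.month - 1) // 3 + 1


def missing_quarters(scan_dates: list[date], as_of: date) -> list[tuple[int, int]]:
    """Return which of the four quarters ending with `as_of`'s quarter had no scan."""
    year, quarter = quarter_of(as_of)
    expected = []
    for _ in range(4):
        expected.append((year, quarter))
        quarter -= 1
        if quarter == 0:  # step back into the previous year
            year, quarter = year - 1, 4
    covered = {quarter_of(d) for d in scan_dates}
    # Report oldest first so gaps read chronologically.
    return [q for q in reversed(expected) if q not in covered]


if __name__ == "__main__":
    scans = [date(2020, 11, 3), date(2021, 2, 10), date(2021, 8, 2)]
    print(missing_quarters(scans, as_of=date(2021, 8, 15)))  # [(2021, 2)]
```

A gap reported here means a quarter's report is missing from the submission; it doesn't check that each report covers the full public footprint and every internal subnet, which still has to be confirmed from the report targets.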

Control #17: Provide demonstrable evidence that vulnerabilities identified during vulnerability scanning are remediated in line with your documented patching timeframe.

Intent: Failure to identify, manage, and remediate vulnerabilities and misconfigurations quickly can increase an organization's risk of a compromise leading to potential data breaches. Correctly identifying and remediating issues is important for an organization's overall security posture and environment, and is in line with the best practices of various security frameworks, for example, ISO 27001 and PCI DSS.

Example Evidence Guidelines: Provide suitable artifacts (for example, screenshots) showing that a sample of vulnerabilities discovered by vulnerability scanning were remediated in line with the patching windows already supplied in Control 13 above.

Example Evidence: The following screenshot shows a Nessus scan of the in-scope environment (a single machine in this example named "THOR") showing vulnerabilities on the 2nd August 2021.

The following screenshot shows that the issues were resolved 2 days later, which is within the patching window defined within the patching policy.

Note: For this control, Certification Analysts need to see vulnerability scan reports and remediation for each quarter over the past twelve months.

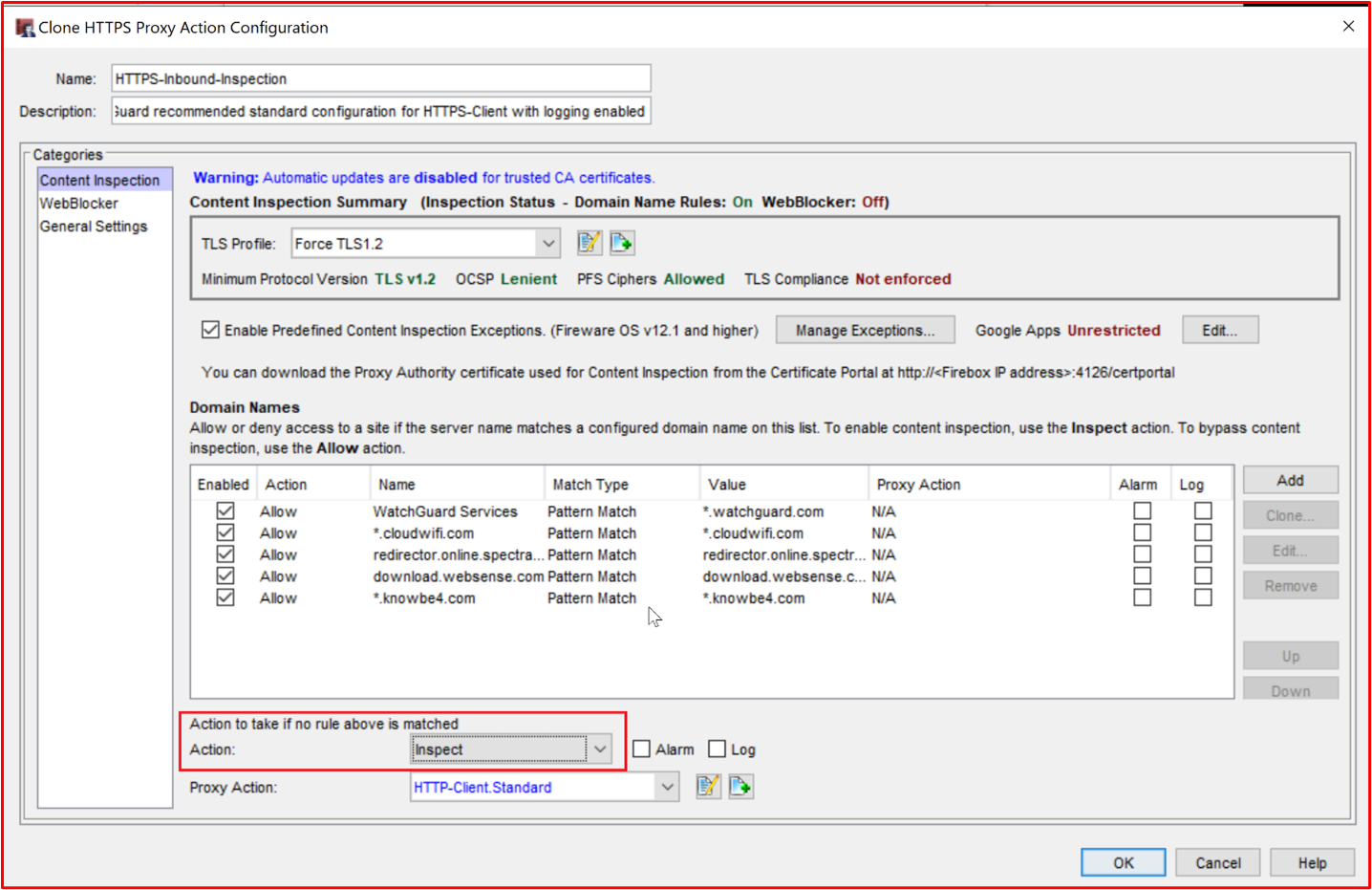

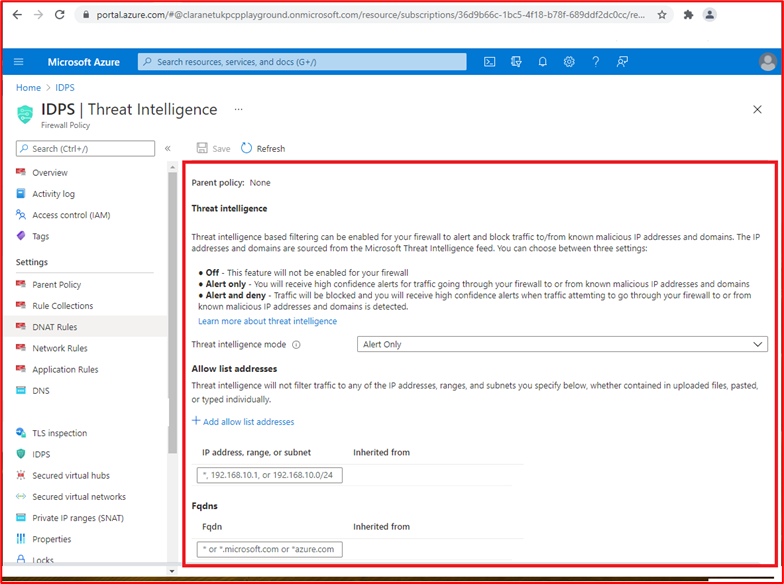

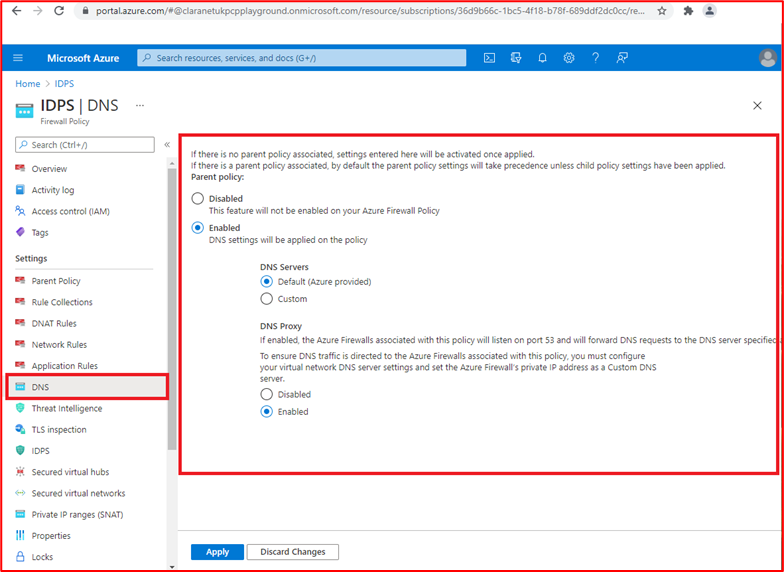
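The comparison in the Nessus example above, between the date a finding was identified and the date it was resolved, can be checked against the policy windows. In this Python sketch the values in `PATCH_WINDOW_DAYS` are hypothetical and should be replaced with the windows from your own patching policy (Control 13); the example also assumes, for illustration only, that the 2 August 2021 findings were ranked Critical.

```python
from datetime import date

# Hypothetical windows in days; use the values from your own patching policy.
PATCH_WINDOW_DAYS = {"Critical": 14, "High": 30, "Medium": 60}


def remediated_in_window(ranking: str, identified: date, resolved: date) -> bool:
    """Return True if the fix was applied within the policy window for the ranking."""
    if ranking not in PATCH_WINDOW_DAYS:
        raise KeyError(f"no patching window defined for {ranking!r}")
    elapsed = (resolved - identified).days
    return 0 <= elapsed <= PATCH_WINDOW_DAYS[ranking]


if __name__ == "__main__":
    # Findings from 2 August 2021 resolved 2 days later (ranking assumed Critical).
    print(remediated_in_window("Critical", date(2021, 8, 2), date(2021, 8, 4)))  # True
```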

Firewalls

Firewalls often provide a security boundary between trusted (internal network), untrusted (Internet), and semi-trusted (DMZ) environments. They're usually the first line of defense within an organization's defense-in-depth security strategy, designed to control traffic flows for ingress and egress services and to block unwanted traffic. These devices must be tightly controlled to ensure they operate effectively and are free from misconfiguration that could put the environment at risk.

Control #18: Provide policy documentation that governs firewall management practices and procedures.

Intent: Firewalls are an important first line of defense in a layered security (defense-in-depth) strategy, protecting environments against less trusted network zones. Firewalls typically control traffic flows based upon IP addresses and protocols/ports; more feature-rich firewalls can also provide additional "application layer" defenses by inspecting application traffic to safeguard against misuse, vulnerabilities, and threats based upon the applications being accessed. These protections are only as good as the configuration of the firewall, so strong firewall policies and support procedures need to be in place to ensure firewalls are configured to provide adequate protection of internal assets. For example, a firewall with a rule to allow ALL traffic from ANY source to ANY destination is just acting as a router.

Example Evidence Guidelines: Supply your full firewall policy/procedure supporting documentation. This document should cover all the points below and any additional best practices applicable to your environment.

Example Evidence: Below is an example of the kind of firewall policy document we require (this is a demo and may not be complete).

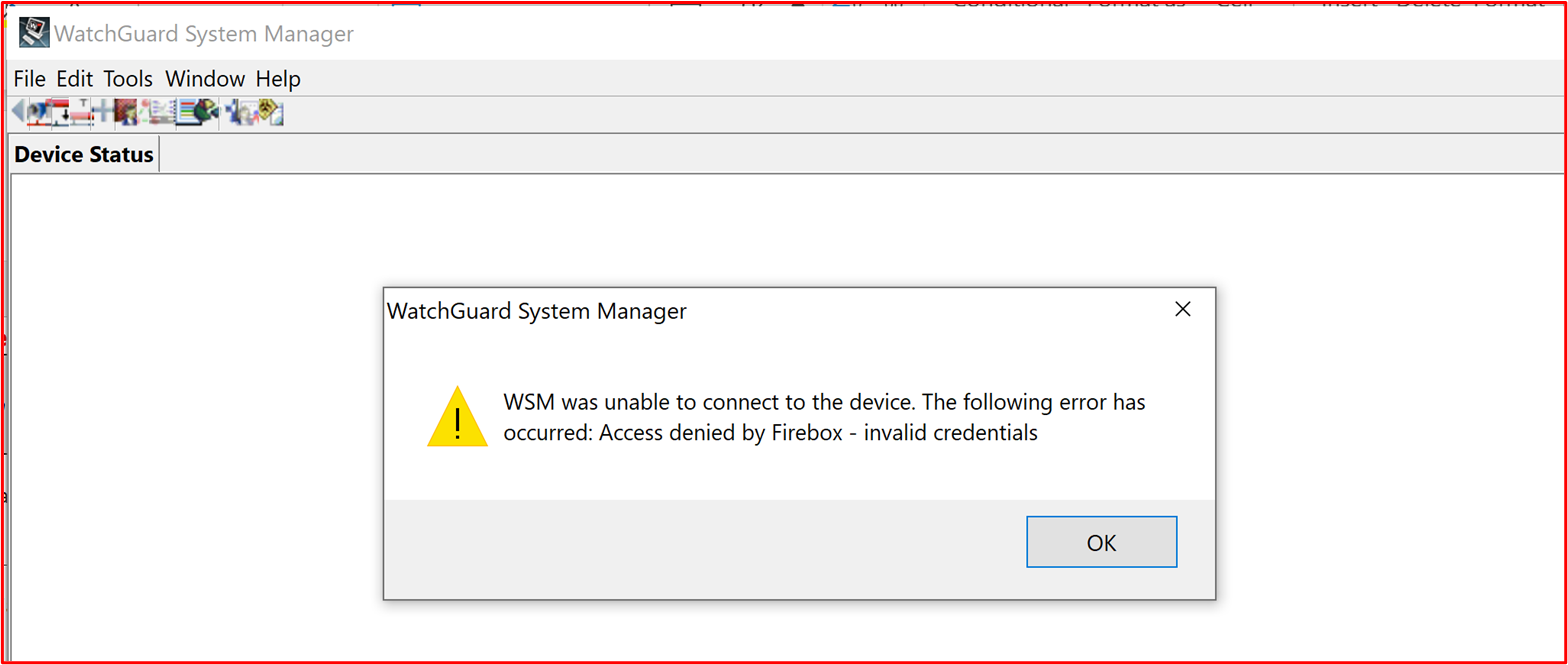
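One policy point that is easy to check mechanically is the any-to-any example from the intent above. This Python sketch flags allow rules whose source, destination, and service are all "any"; the `Rule` record is a hypothetical, simplified representation, since every firewall vendor exports its rule base in its own format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified firewall rule; real exports vary by vendor."""
    name: str
    action: str       # "allow" or "deny"
    source: str       # CIDR, zone name, or "any"
    destination: str  # CIDR, zone name, or "any"
    service: str      # for example "tcp/443", or "any"


def overly_permissive(rules: list[Rule]) -> list[str]:
    """Return names of allow rules matching any source, destination, and service."""
    return [
        r.name
        for r in rules
        if r.action.lower() == "allow"
        and all(field.lower() == "any" for field in (r.source, r.destination, r.service))
    ]


if __name__ == "__main__":
    rule_base = [
        Rule("web-in", "allow", "any", "203.0.113.10", "tcp/443"),
        Rule("temp-test", "allow", "any", "any", "any"),
        Rule("default-deny", "deny", "any", "any", "any"),
    ]
    print(overly_permissive(rule_base))  # ['temp-test']
```

The deny-all rule is intentionally not flagged: an any-to-any rule is only a problem when it allows traffic.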

Control #19: Provide demonstrable evidence that any default administrative credentials are changed prior to installation into production environments.

Intent: Organizations need to be mindful of vendor-provided default administrative credentials, which are set when the device or software is first configured. Default credentials are often published by vendors and can provide an external activity group with an opportunity to compromise an environment. For example, a simple Internet search for the default iDRAC (Integrated Dell Remote Access Controller) credentials will reveal root/calvin as the default username and password, which would give someone remote access to server management. The intent of this control is to ensure environments aren't susceptible to attack through default vendor credentials that haven't been changed during device/application hardening.

Example Evidence Guidelines

This can be evidenced over a screensharing session where the Certification Analyst can try to authenticate to the in-scope devices using default credentials.

Example Evidence

The below screenshot shows what the Certification Analyst would see from an invalid username / password from a WatchGuard Firewall.

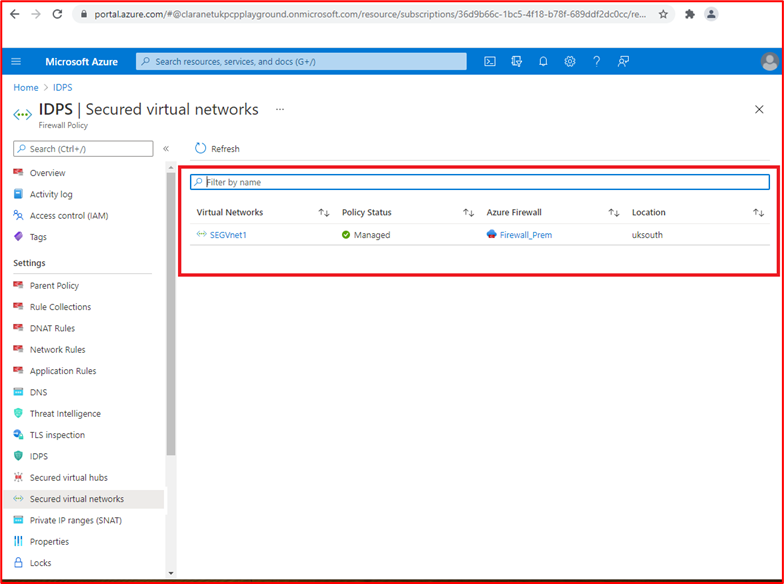

Control #20: Provide demonstrable evidence that firewalls are installed on the boundary of the in-scope environment, and between the perimeter network (also known as DMZ, demilitarized zone, or screened subnet) and internal trusted networks.

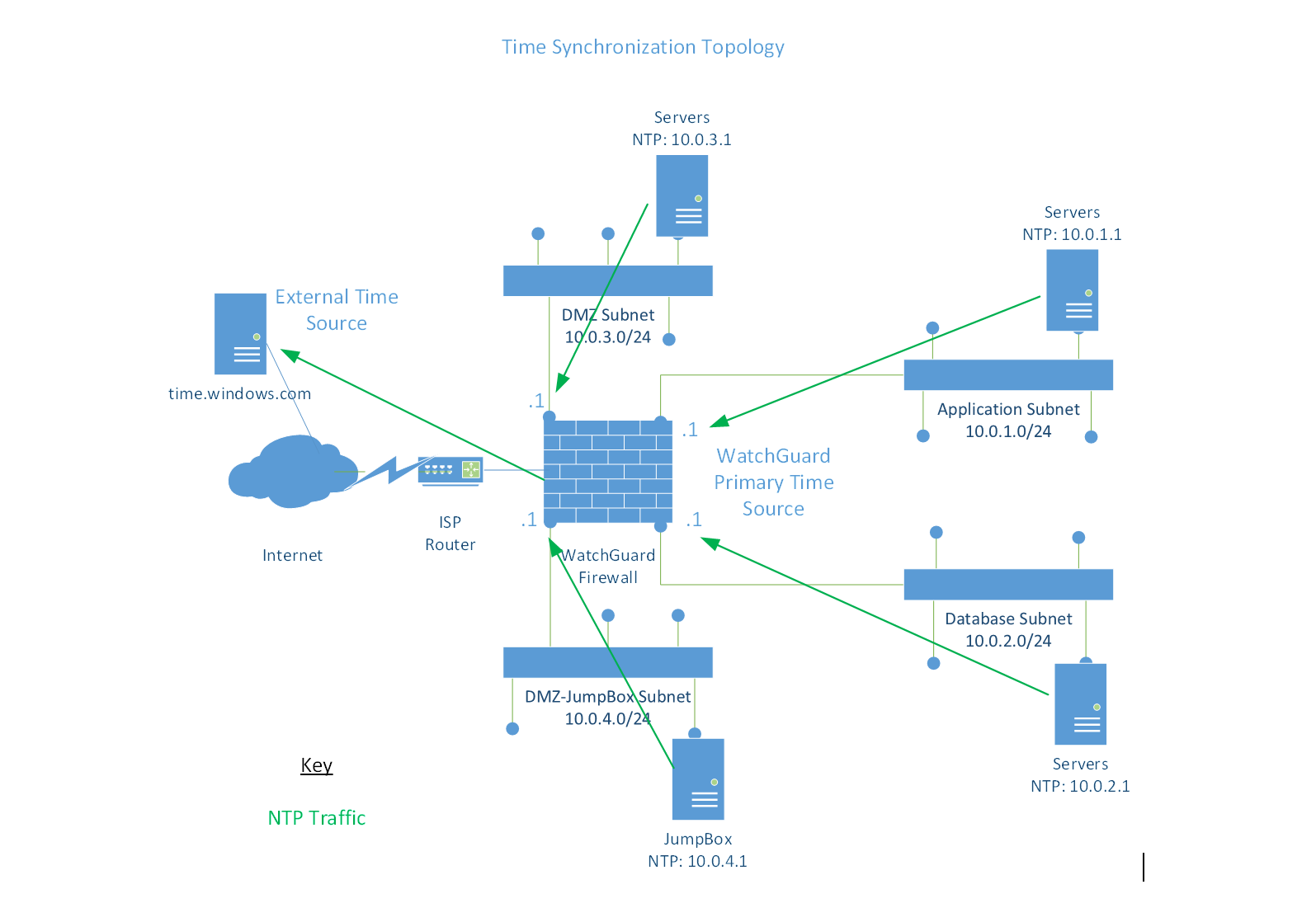

Intent: Firewalls provide the ability to control traffic between network zones of different security levels. Since all environments are Internet connected, firewalls need to be installed on the boundary, that is, between the Internet and the in-scope environment. Additionally, firewalls need to be installed between the less trusted DMZ networks and internal trusted networks. DMZs are typically used to serve traffic from the Internet and are therefore targets of attack. By implementing a DMZ and using a firewall to control traffic flows, a compromise of the DMZ won't necessarily mean a compromise of the internal trusted networks and corporate/customer data. Adequate logging and alerting should be in place to help organizations quickly identify a compromise and minimize the opportunity for the activity group to further compromise the internal trusted networks. The intent of this control is to ensure there's adequate control between trusted and less trusted networks.

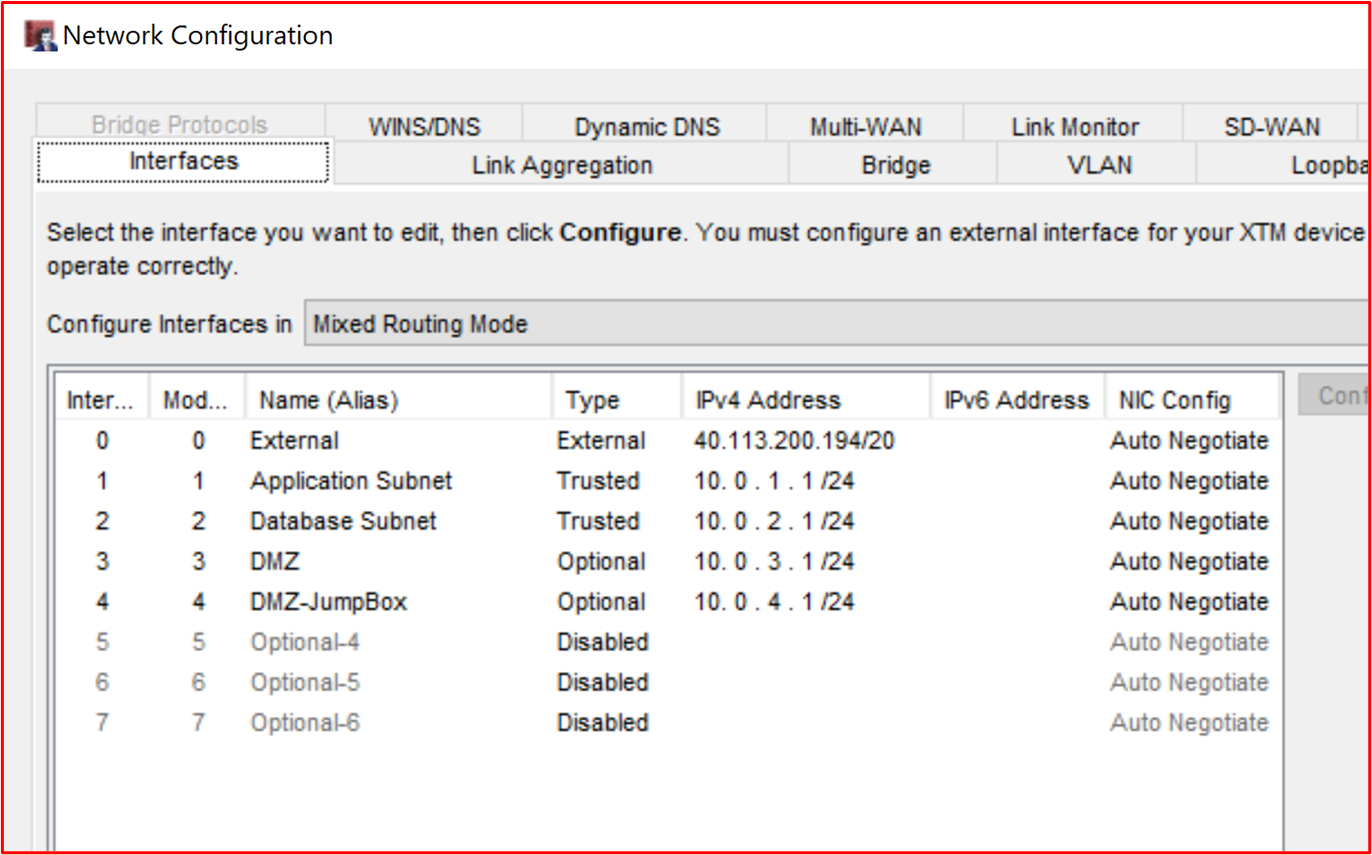

Example Evidence Guidelines: Evidence should be provided by way of firewall configuration files or screenshots demonstrating that a DMZ is in place. This should match the supplied architectural diagrams demonstrating the different networks supporting the environment. A screenshot of the network interfaces on the firewall, coupled with the network diagram already supplied as part of the Initial Document Submission should provide this evidence.

Example Evidence: Below is a screenshot of a WatchGuard firewall demonstrating two DMZs: one for the inbound services (named DMZ), and the other serving the jumpbox (bastion host).

Control 21: Provide demonstrable evidence that all public access terminates in the demilitarized zone (DMZ).

Intent: Resources that are publicly accessible are open to a myriad of attacks. As already discussed above, the intent of a DMZ is to segment less trusted networks from trusted internal networks, which may contain sensitive data. A DMZ is deemed less trusted because there's a much greater risk that publicly accessible hosts will be compromised by external activity groups. Public access should always terminate in these less trusted networks, which are adequately segmented by the firewall to help protect internal resources and data. The intent of this control is to ensure all public access terminates within these less trusted DMZs; if resources on the trusted internal networks were public facing, a compromise of these resources would give an activity group a foothold into the network where sensitive data is held.

Example Evidence Guidelines

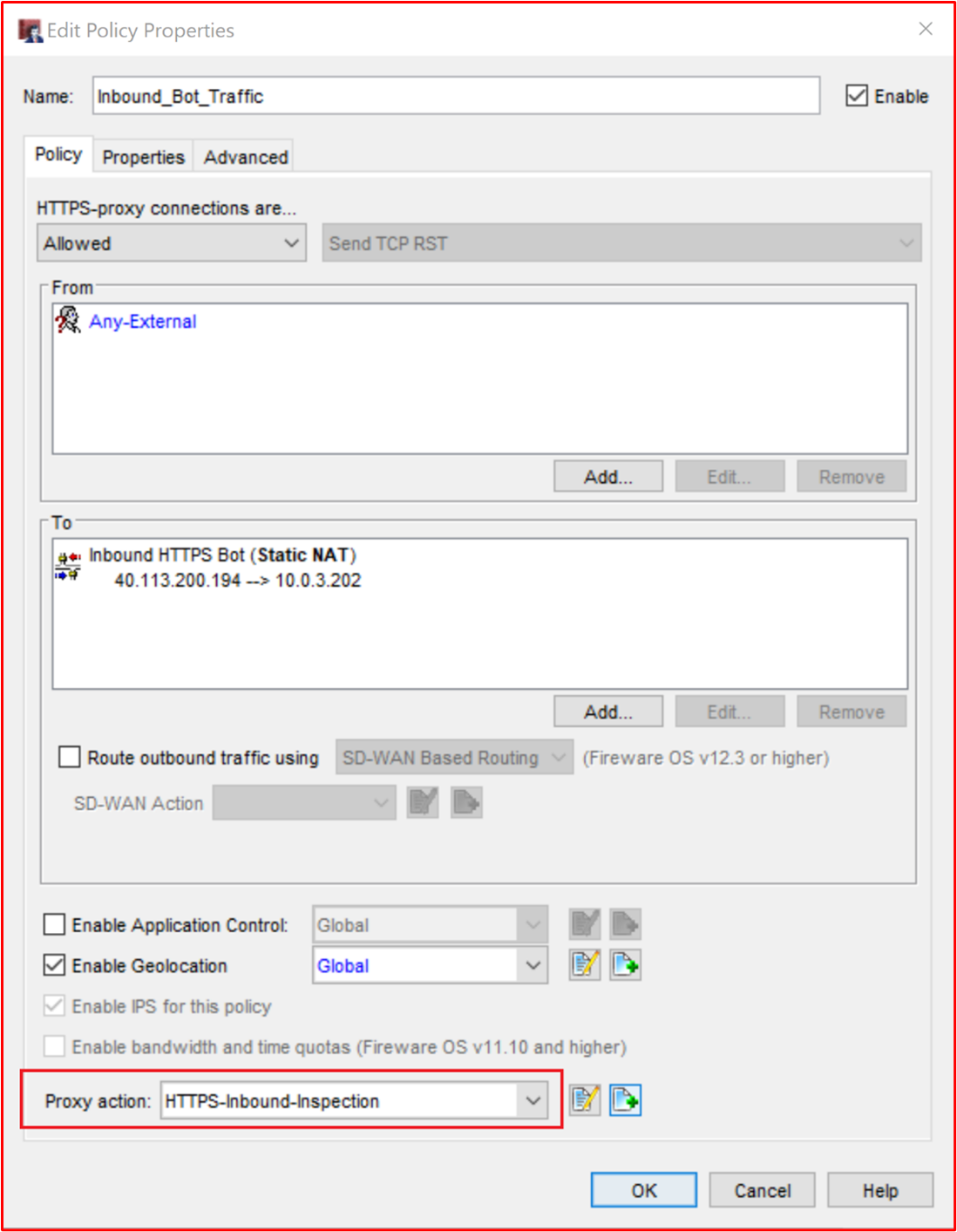

Evidence for this could be firewall configurations that show the inbound rules and where these rules terminate, either by routing public IP addresses to the resources or by applying NAT (Network Address Translation) to the inbound traffic.

Example Evidence

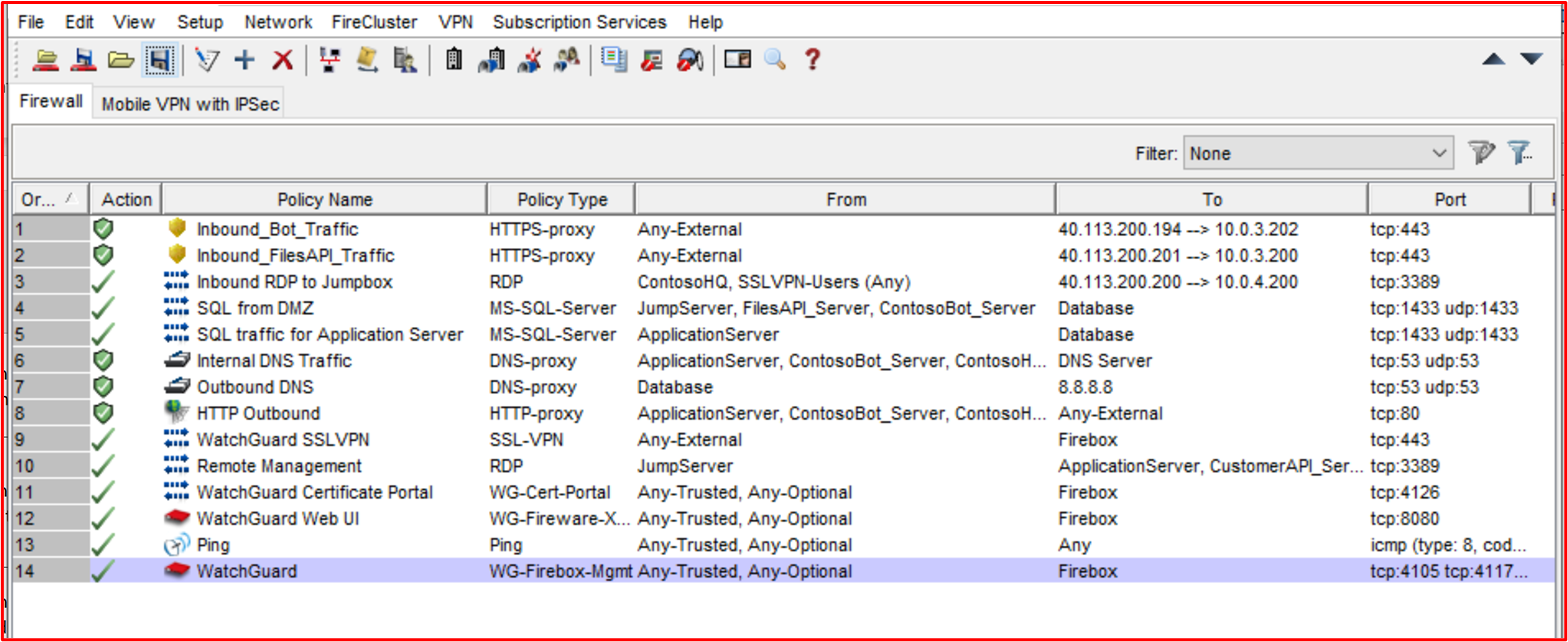

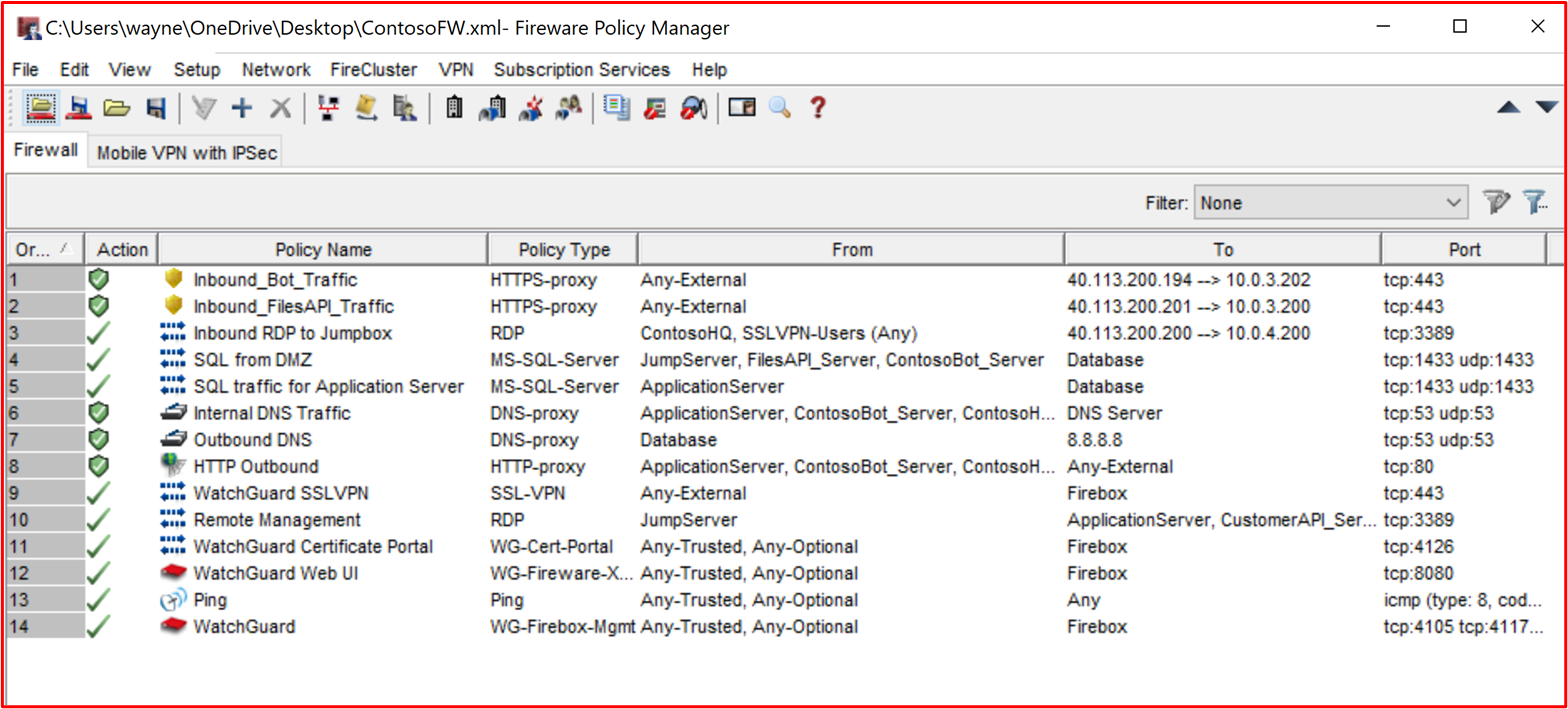

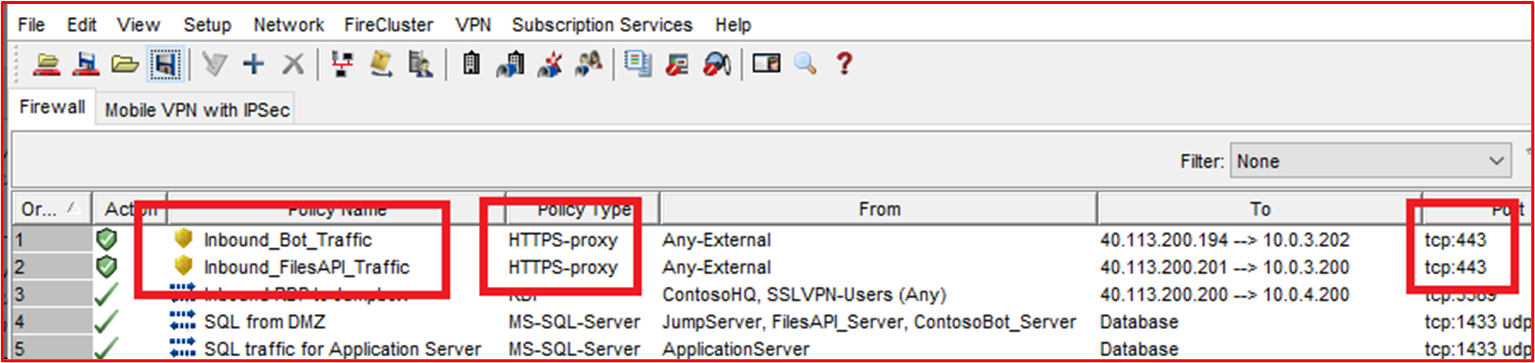

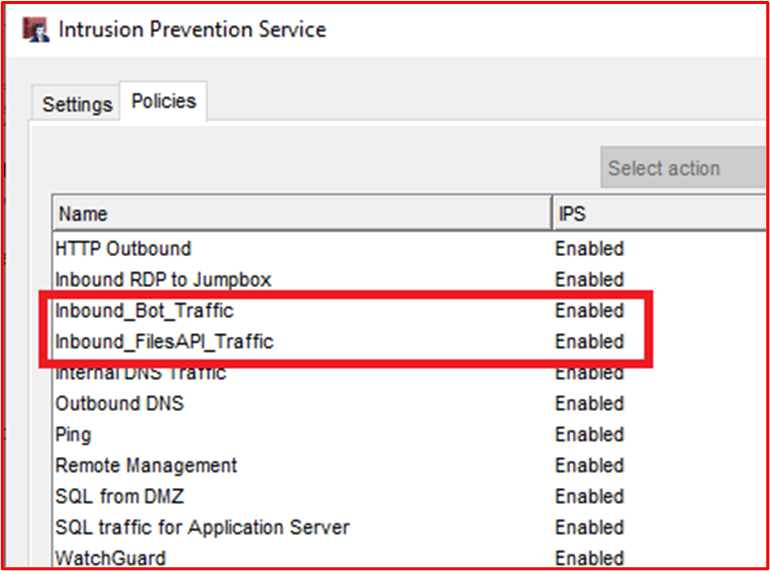

In the screenshot below, there are three incoming rules, each showing the NAT to the 10.0.3.x and 10.0.4.x subnets, which are the DMZ subnets.
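If the inbound NAT rules can be exported, a short script can cross-check that every NAT target falls inside a DMZ subnet before the screenshots are taken. The following is a minimal sketch, assuming the 10.0.3.x and 10.0.4.x DMZ subnets from the example are /24 networks and that the rule names and target addresses (all hypothetical) have been transcribed from the firewall export.

```python
import ipaddress
from typing import Dict

# DMZ subnets from the example above; the /24 prefix length is an assumption.
DMZ_SUBNETS = [
    ipaddress.ip_network("10.0.3.0/24"),
    ipaddress.ip_network("10.0.4.0/24"),
]


def non_dmz_nat_targets(nat_rules: Dict[str, str]) -> Dict[str, str]:
    """Return inbound NAT rules whose internal target lies outside every DMZ subnet."""
    offending = {}
    for rule_name, target in nat_rules.items():
        address = ipaddress.ip_address(target)
        if not any(address in subnet for subnet in DMZ_SUBNETS):
            offending[rule_name] = target
    return offending


# Hypothetical rule names and NAT targets, transcribed by hand from a firewall export.
inbound_nat = {
    "HTTPS-to-web": "10.0.3.10",
    "HTTPS-to-api": "10.0.3.11",
    "SSH-to-jumpbox": "10.0.4.5",
}
print(non_dmz_nat_targets(inbound_nat))  # {} means every rule terminates in a DMZ
```

An empty result supports the evidence; any rule returned points to public access terminating on an internal trusted network.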

Control 22: Provide demonstrable evidence that all traffic permitted through the firewall goes through an approval process.

Intent: Since firewalls are a defensive barrier between untrusted traffic and internal resources, and between networks of different trust levels, they need to be securely configured so that only traffic necessary for business operations is permitted. Allowing an unnecessary or overly permissive traffic flow can introduce weaknesses in the defenses at the boundary of these network zones. Establishing a robust approval process for all firewall changes reduces the risk of introducing a rule that poses a significant risk to the environment. Verizon's 2020 Data Breach Investigations Report highlights that "Errors", which include misconfigurations, is the only action type that has been consistently increasing year over year.

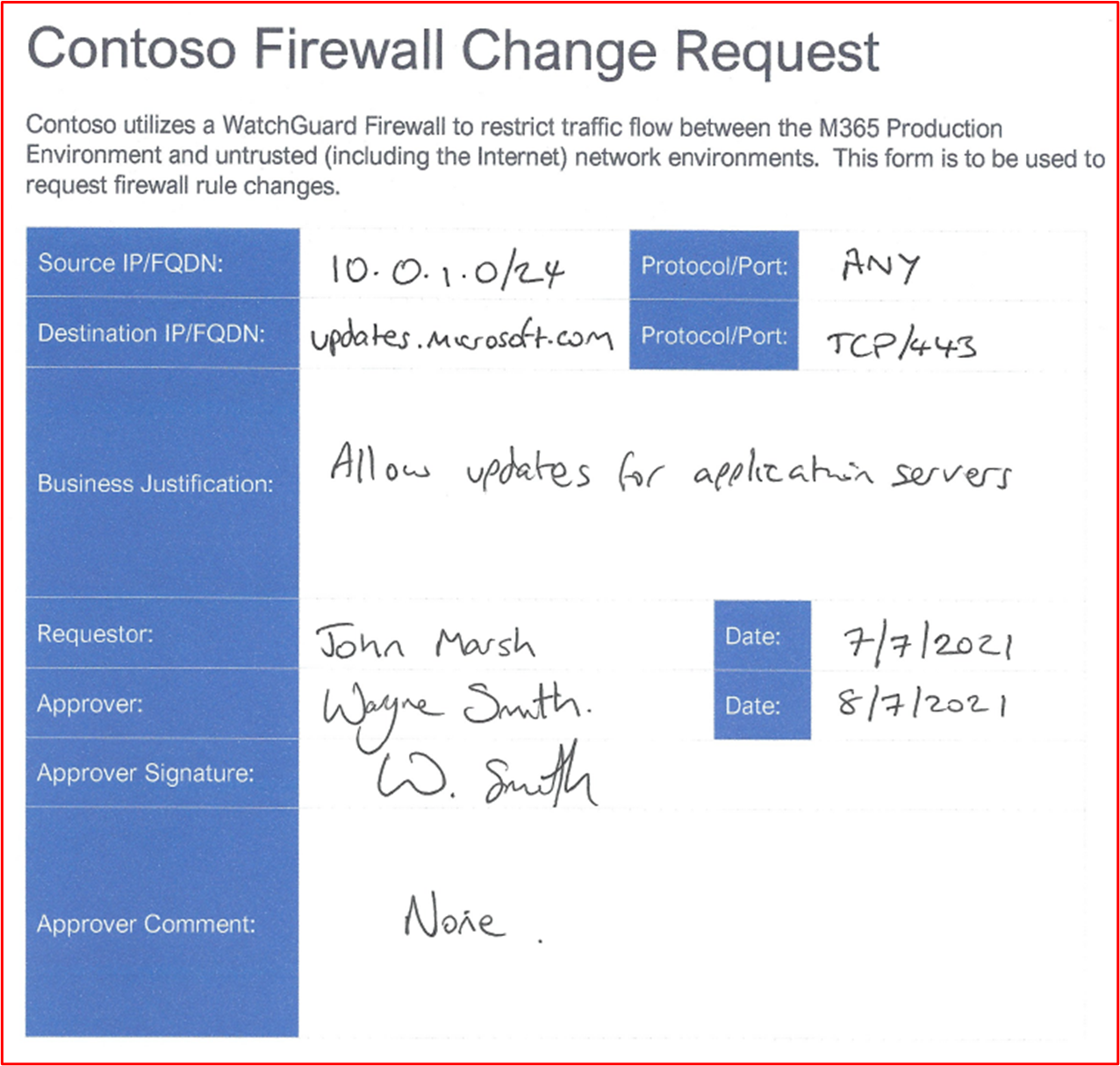

Example Evidence Guidelines: Evidence can be in the form of documentation showing a firewall change request being authorized, such as minutes from a CAB (Change Advisory Board) meeting or records from a change control system that tracks all changes.
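When a change control system is used, the rule base can also be reconciled against approved changes so that no rule exists without an approval behind it. The following is a minimal sketch, assuming (hypothetically) that each rule's description field records the change ticket ID that introduced it, and that the set of approved ticket IDs can be exported from the change control system.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class FirewallRule:
    name: str
    change_ref: Optional[str]  # change ticket ID recorded in the rule's description, if any


def unapproved_rules(rules: List[FirewallRule], approved_changes: Set[str]) -> List[str]:
    """Return the names of rules that don't reference an approved change request."""
    return [rule.name for rule in rules if rule.change_ref not in approved_changes]


# Hypothetical rule base and approved change IDs exported from a change control system.
rules = [
    FirewallRule("HTTPS-to-web", "CHG-101"),
    FirewallRule("SSH-to-jumpbox", "CHG-102"),
    FirewallRule("Temp-Allow-All", None),
]
approved = {"CHG-101", "CHG-102"}
print(unapproved_rules(rules, approved))  # ['Temp-Allow-All']
```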

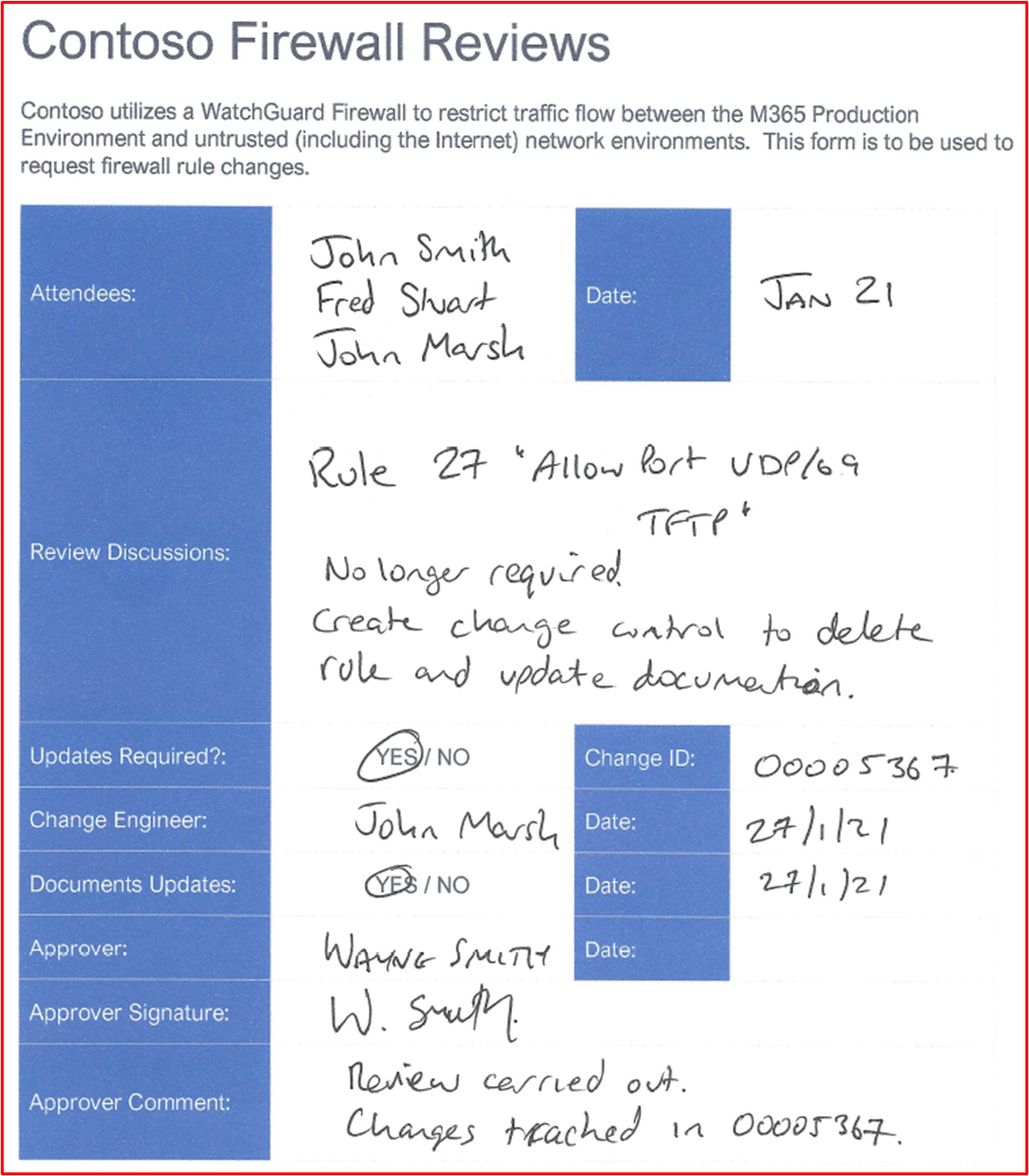

Example Evidence: The following screenshot shows a firewall rule change being requested and authorized using a paper-based process. This could also be achieved through a tool such as DevOps or Jira.

Control 23: Provide demonstrable evidence that the firewall rule base is configured to drop traffic not explicitly defined.

Intent: Most firewalls process rules top-down, looking for a matching rule. If a rule matches, that rule's action is applied and all further rule processing stops. If no matching rule is found, the traffic is typically denied by default. If a firewall doesn't drop traffic by default when no rule matches, the rule base must include a "Deny All" rule at the end of ALL firewall rule lists. The intent of this control is to ensure that firewalls don't fall into a default allow state when processing the rules, which would allow traffic that hasn't been explicitly defined.
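The first-match behavior described above, and the reason a final "Deny All" rule matters when the default is allow, can be illustrated with a simplified model. This is a minimal sketch, not any vendor's rule engine; the rule fields, addresses, and the `default_action` parameter are assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    action: str           # "allow" or "deny"
    source: str           # CIDR block; "0.0.0.0/0" means any source
    destination: str      # CIDR block; "0.0.0.0/0" means any destination
    port: Optional[int]   # None matches any port


def evaluate(rules: List[Rule], src: str, dst: str, port: int, default_action: str = "deny") -> str:
    """Return the action of the first matching rule, or default_action if none match."""
    for rule in rules:
        if (ipaddress.ip_address(src) in ipaddress.ip_network(rule.source)
                and ipaddress.ip_address(dst) in ipaddress.ip_network(rule.destination)
                and (rule.port is None or rule.port == port)):
            return rule.action  # first match wins; later rules are never evaluated
    return default_action  # nothing matched, so the firewall's default applies


rules = [Rule("allow", "0.0.0.0/0", "10.0.3.10/32", 443)]
deny_all = Rule("deny", "0.0.0.0/0", "0.0.0.0/0", None)

print(evaluate(rules, "203.0.113.7", "10.0.3.10", 443))                         # allow
print(evaluate(rules, "203.0.113.7", "10.0.3.10", 22))                          # deny
print(evaluate(rules, "203.0.113.7", "10.0.3.10", 22, default_action="allow"))  # allow
print(evaluate(rules + [deny_all], "203.0.113.7", "10.0.3.10", 22, default_action="allow"))  # deny
```

The third call shows unmatched traffic slipping through on a default-allow device; appending the explicit "Deny All" rule in the fourth call closes that gap.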

Example Evidence Guidelines: Evidence can be provided by way of the firewall configuration, or by screenshots of all the firewall rules showing a "Deny All" rule at the end. If the firewall drops traffic that doesn't match a rule by default, supply a screenshot of all the firewall rules and a link to the vendor's administrative guide confirming that the firewall drops all unmatched traffic by default.

Example Evidence: Below is a screenshot of the WatchGuard firewall rule base, which demonstrates that no rules are configured to permit all traffic. There's no deny rule at the end because the WatchGuard firewall drops traffic that doesn't match a rule by default.

The following WatchGuard Help Center page, https://www.watchguard.com/help/docs/help-center/en-US/Content/en-US/Fireware/policies/policies_about_c.html, includes the following information:

Control 24: Provide demonstrable evidence that the firewall supports only strong cryptography on all non-console administrative interfaces.

Intent: To mitigate man-in-the-middle attacks against administrative traffic, all non-console administrative interfaces should support only strong cryptography. The main intent of this control is to protect the administrative credentials as the non-console connection is set up. It can also help protect against eavesdropping on the connection, whether to replay administrative functions to reconfigure the device or as part of reconnaissance.

Example Evidence Guidelines: Provide the firewall configuration if it includes the cryptographic settings of the non-console administrative interfaces (not all devices expose these as configurable options). If these settings aren't within the configuration, you may be able to issue commands to the device to display what is configured for these connections. Some vendors publish this information in articles, which may also be a way to evidence it. Finally, you may need to run tools that output which encryption is supported.

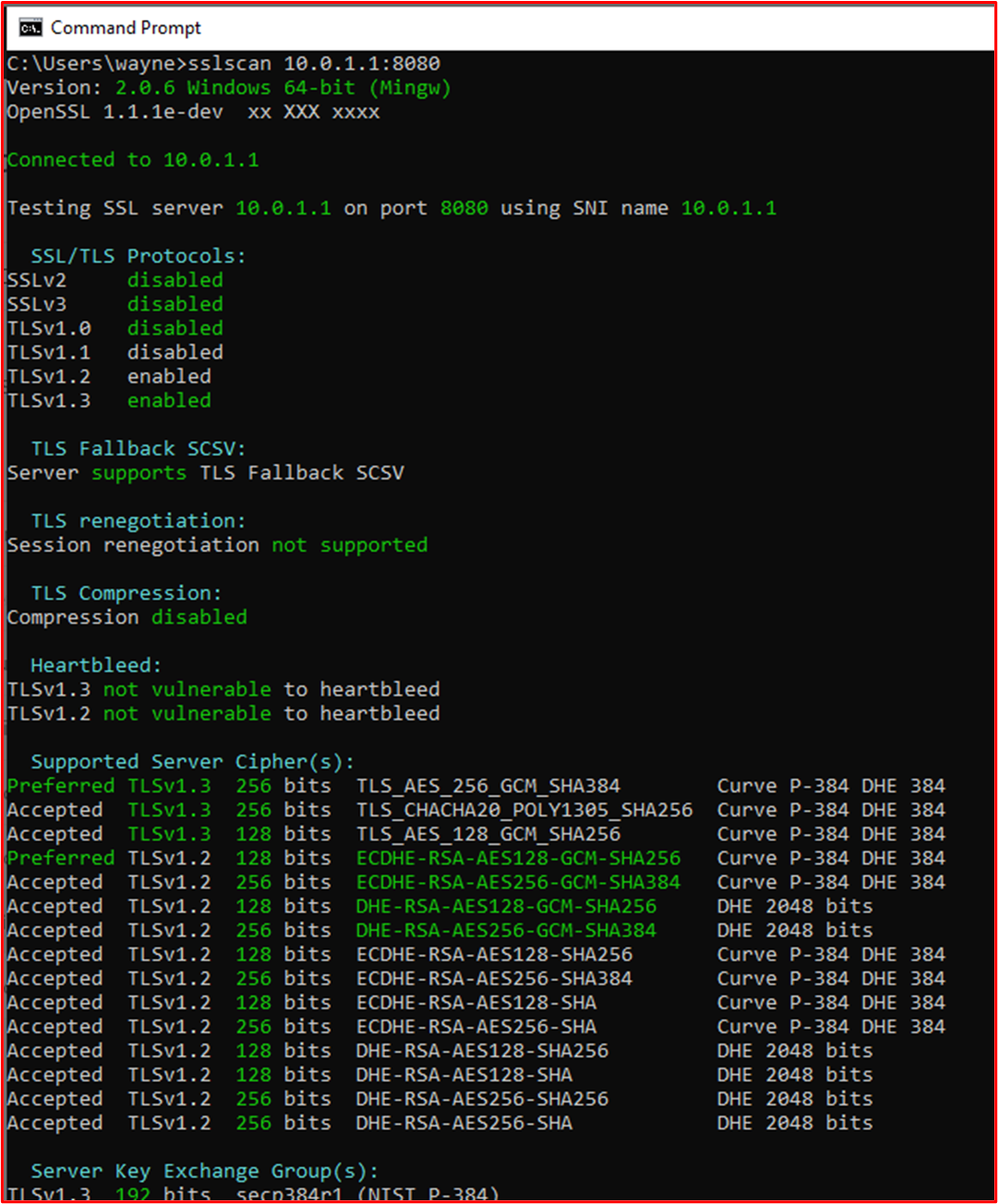

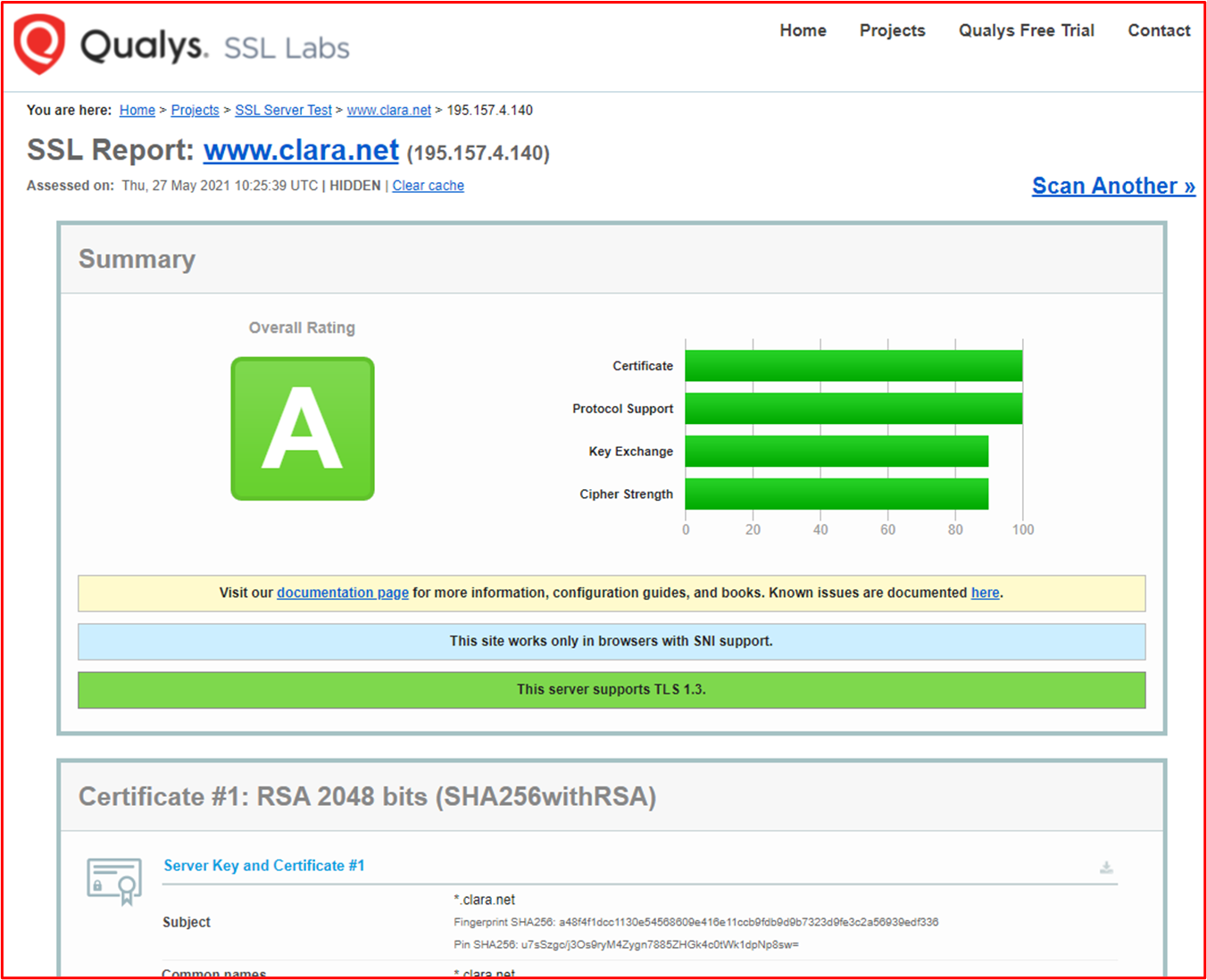

Example Evidence: The screenshot below shows the output of SSLScan against the Web Admin interface of the WatchGuard firewall on TCP port 8080. It shows that only TLS 1.2 or above is supported, with a minimum cipher strength of 128-bit AES.
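Where SSLScan output is long, it can be checked against the policy automatically before being submitted. The following is a minimal sketch that assumes cipher lines in the shape `Accepted  TLSv1.2  128 bits  <cipher>` (the exact format may vary between SSLScan versions), and treats TLS 1.2/1.3 with at least 128-bit ciphers as the policy, matching the example above. The sample text is hypothetical, not real WatchGuard output.

```python
import re
from typing import List, Tuple

# Assumed sslscan line shape, e.g. "Accepted  TLSv1.2  128 bits  ECDHE-RSA-AES128-GCM-SHA256".
CIPHER_LINE = re.compile(r"^(Preferred|Accepted)\s+(\S+)\s+(\d+)\s+bits\s+(\S+)")
ANSI_ESCAPE = re.compile(r"\x1b\[[0-9;]*m")  # strips terminal colour codes, if present
ALLOWED_PROTOCOLS = {"TLSv1.2", "TLSv1.3"}
MIN_BITS = 128


def weak_ciphers(scan_output: str) -> List[Tuple[str, int, str]]:
    """Return (protocol, bits, cipher) for every accepted cipher that breaks the policy."""
    findings = []
    for raw_line in scan_output.splitlines():
        line = ANSI_ESCAPE.sub("", raw_line).strip()
        match = CIPHER_LINE.match(line)
        if match is None:
            continue  # not a cipher line (headers, certificate details, etc.)
        _, protocol, bits, cipher = match.groups()
        if protocol not in ALLOWED_PROTOCOLS or int(bits) < MIN_BITS:
            findings.append((protocol, int(bits), cipher))
    return findings


# Hypothetical excerpt; only the last line breaks the policy.
sample = """
Preferred TLSv1.2  256 bits  ECDHE-RSA-AES256-GCM-SHA384   Curve P-256 DHE 256
Accepted  TLSv1.2  128 bits  ECDHE-RSA-AES128-GCM-SHA256   Curve P-256 DHE 256
Accepted  TLSv1.0  128 bits  AES128-SHA
"""
print(weak_ciphers(sample))  # [('TLSv1.0', 128, 'AES128-SHA')]
```

An empty list for each administrative interface supports the evidence; the raw scan output should still be submitted alongside it.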

Note: The WatchGuard firewalls also support administrative functions using SSH (TCP Port 4118) and WatchGuard System Manager (TCP Ports 4105 & 4117). Evidence of these non-console administrative interfaces would also need to be provided.

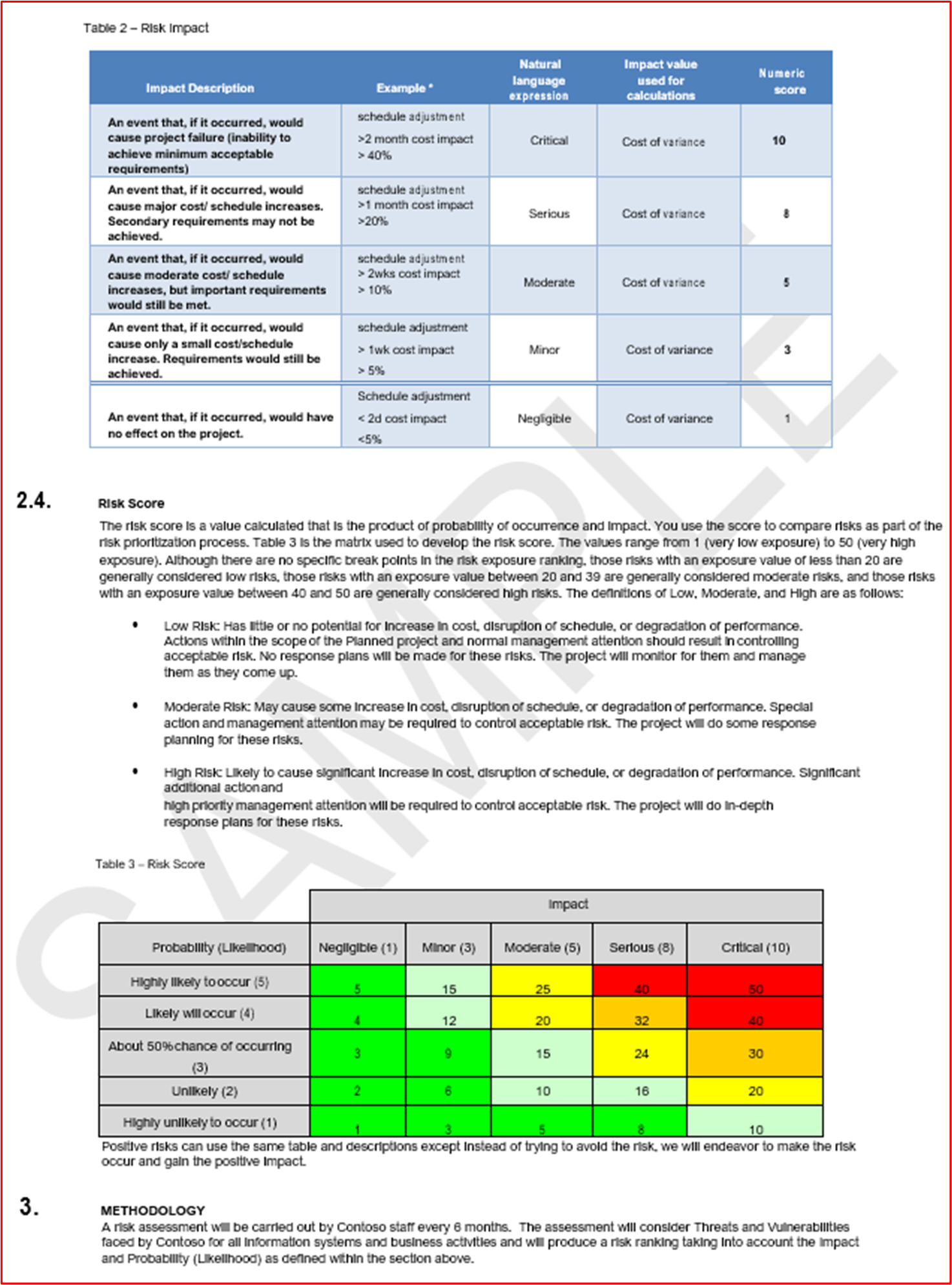

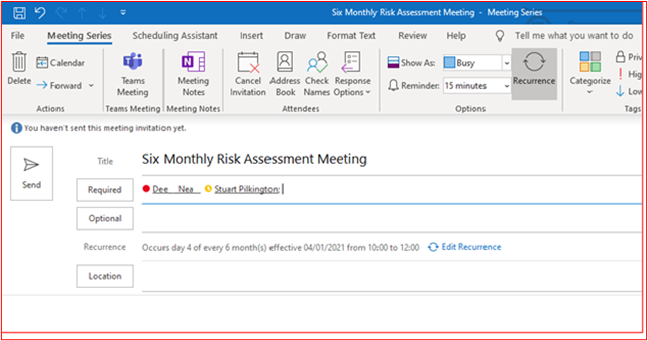

Control 25: Provide demonstrable evidence that you're performing firewall rule reviews at least every 6 months.

Intent: Over time, there's a risk of configuration creep in system components within the in-scope environment. This can introduce insecurities or misconfigurations that increase the risk of compromise to the environment. Configuration creep can be introduced for numerous reasons, such as temporary changes to aid troubleshooting, temporary changes for ad-hoc functional changes, or quick fixes to issues, which can sometimes be overly permissive due to the pressure to fix things quickly. As an example, you may introduce a temporary "Allow All" firewall rule to overcome an urgent issue. The intent of this control is twofold: firstly, to identify misconfigurations that may introduce insecurities, and secondly, to identify firewall rules that are no longer needed and can therefore be removed, for example, where a service has been retired but its firewall rule has been left behind.

Example Evidence Guidelines: Evidence needs to demonstrate that the review meetings have been occurring. This can be done by sharing meeting minutes of the firewall reviews and any additional change control evidence that shows actions taken as a result of the reviews. Ensure the dates are present, as we need to see a minimum of two of these meetings (that is, one every six months).
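Before submitting review minutes, the dates can be checked to confirm there are at least two reviews and that no gap between consecutive reviews exceeds six months. The following is a minimal sketch using only the standard library; the specific days used in the example are hypothetical (the example evidence only gives January and July 2021).

```python
import calendar
from datetime import date
from typing import Iterable


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year, month = d.year + month_index // 12, month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def reviews_meet_cadence(review_dates: Iterable[date], max_months: int = 6) -> bool:
    """True if there are at least two reviews and no gap between them exceeds max_months."""
    dates = sorted(review_dates)
    if len(dates) < 2:
        return False
    return all(later <= add_months(earlier, max_months)
               for earlier, later in zip(dates, dates[1:]))


print(reviews_meet_cadence([date(2021, 1, 15), date(2021, 7, 12)]))  # True
print(reviews_meet_cadence([date(2021, 1, 15), date(2021, 8, 1)]))   # False
```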

Example Evidence: The following screenshot shows evidence of a Firewall review taking place in Jan 2021.

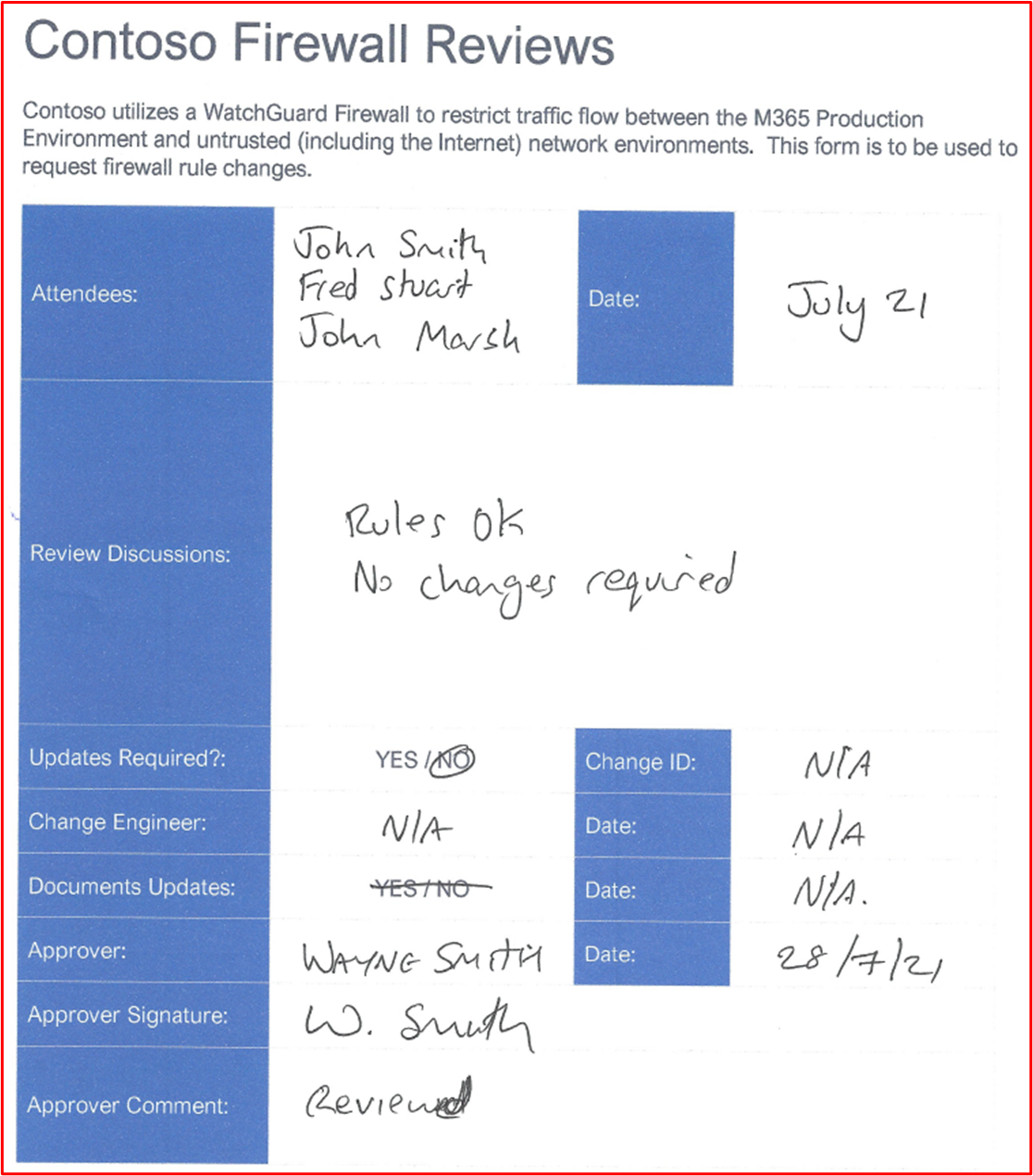

The following screenshot shows evidence of a Firewall review taking place in July 2021.

Firewalls – WAFs

It's optional to deploy a Web Application Firewall (WAF) into the solution. If a WAF is used, it will count as extra credit in the scoring matrix within the 'Operational Security' security domain. WAFs filter and monitor web traffic between the Internet and published web applications to identify web-application-specific attacks. Web applications can suffer from many attacks specific to web applications, such as SQL Injection (SQLi), Cross Site Scripting (XSS), and Cross Site Request Forgery (CSRF/XSRF), and WAFs are designed to protect against these types of malicious payloads to help protect web applications from attack and potential compromise.

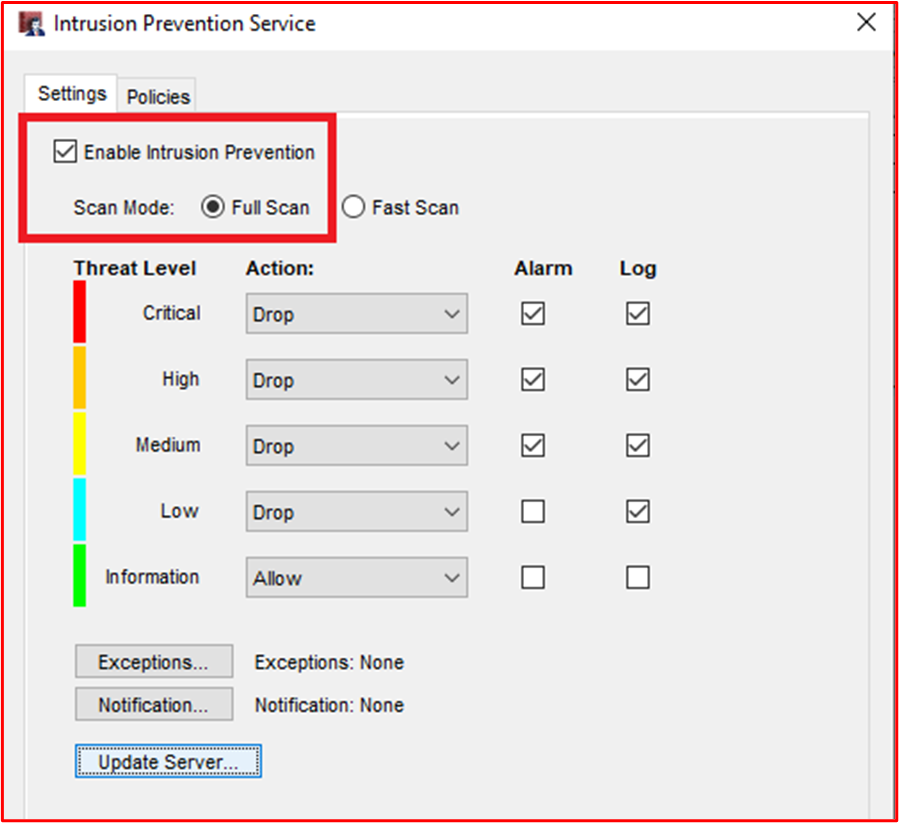

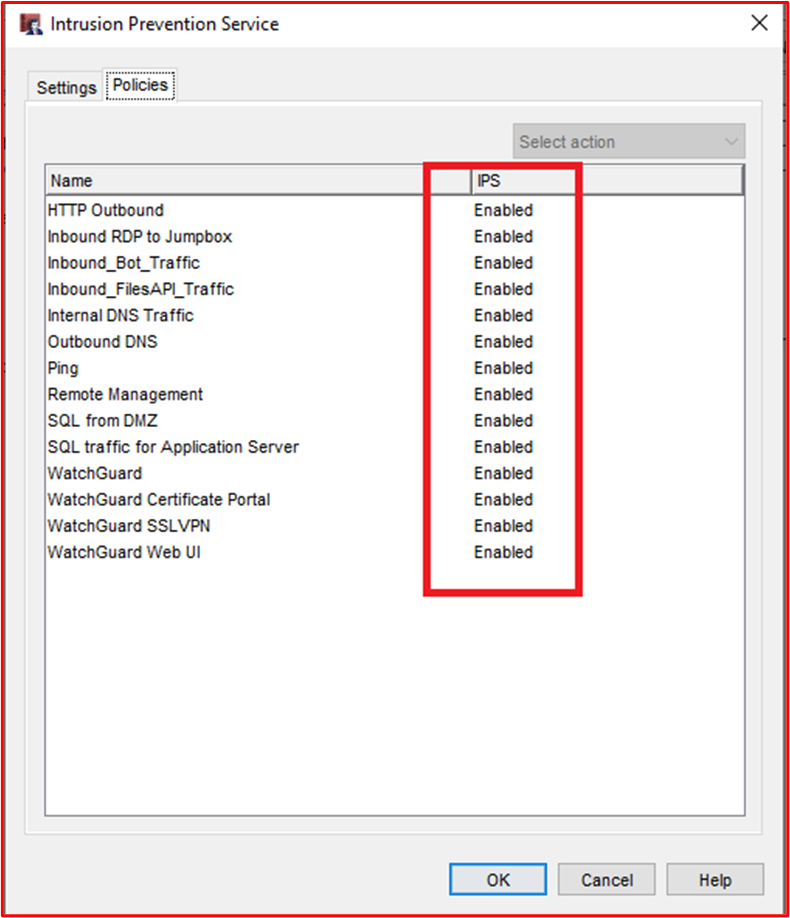

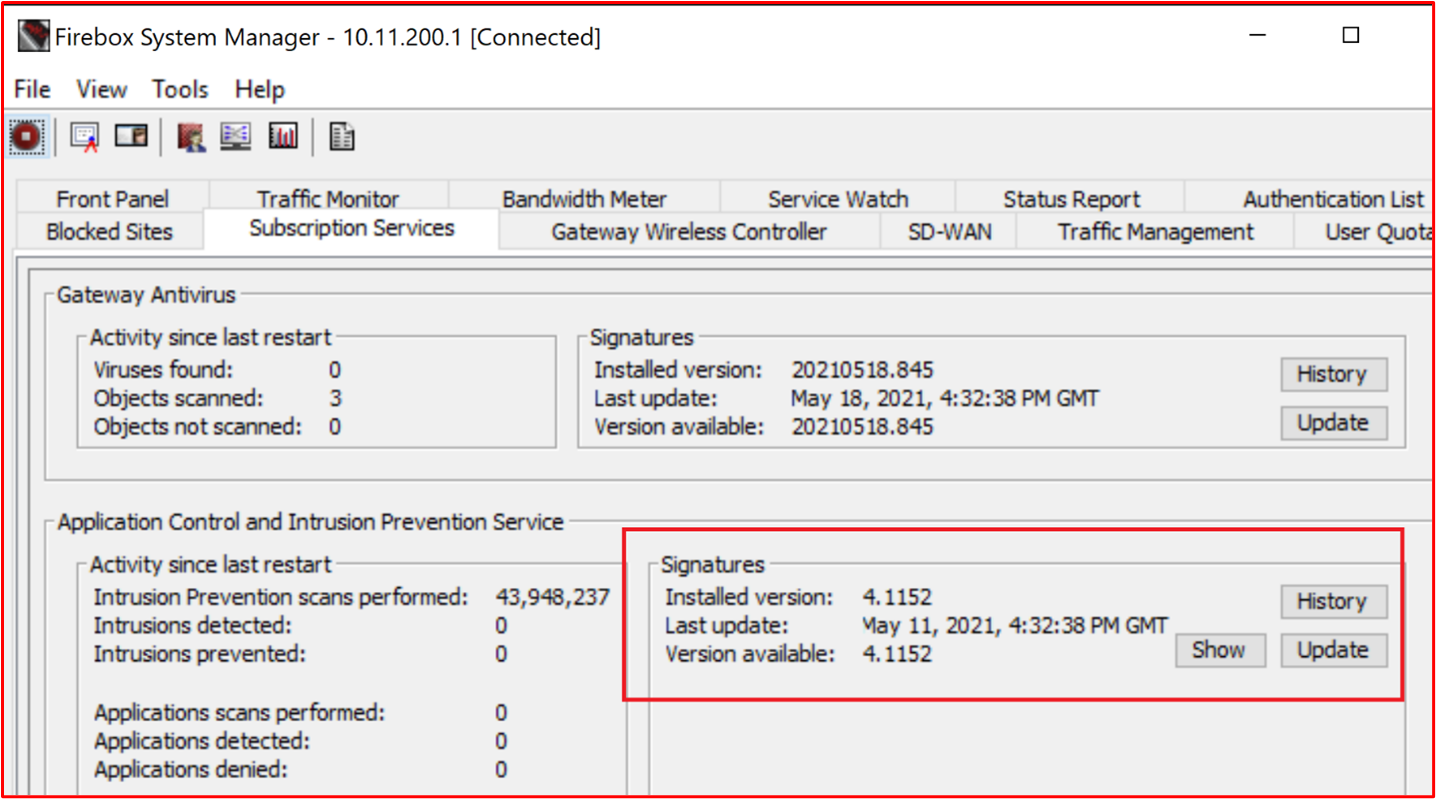

Control 26: Provide demonstrable evidence that the Web Application Firewall (WAF) is configured to actively monitor, alert, and block malicious traffic.

Intent: This control is in place to confirm that the WAF is in place for all incoming web connections, and that it's configured to either block or alert to malicious traffic. To provide an additional layer of defense for web traffic, WAFs need to be configured for all incoming web connections, otherwise, external activity groups could bypass the WAFs designed to provide this additional layer of protection. If the WAF isn't configured to actively block malicious traffic, the WAF needs to be able to provide an immediate alert to staff who can quickly react to the potential malicious traffic to help maintain the security of the environment and stop the attacks.

Example Evidence Guidelines: Provide configuration output from the WAF which highlights the incoming web connections being served and that the configuration actively blocks malicious traffic or is monitoring and alerting. Alternatively, screenshots of the specific settings can be shared to demonstrate an organization is meeting this control.

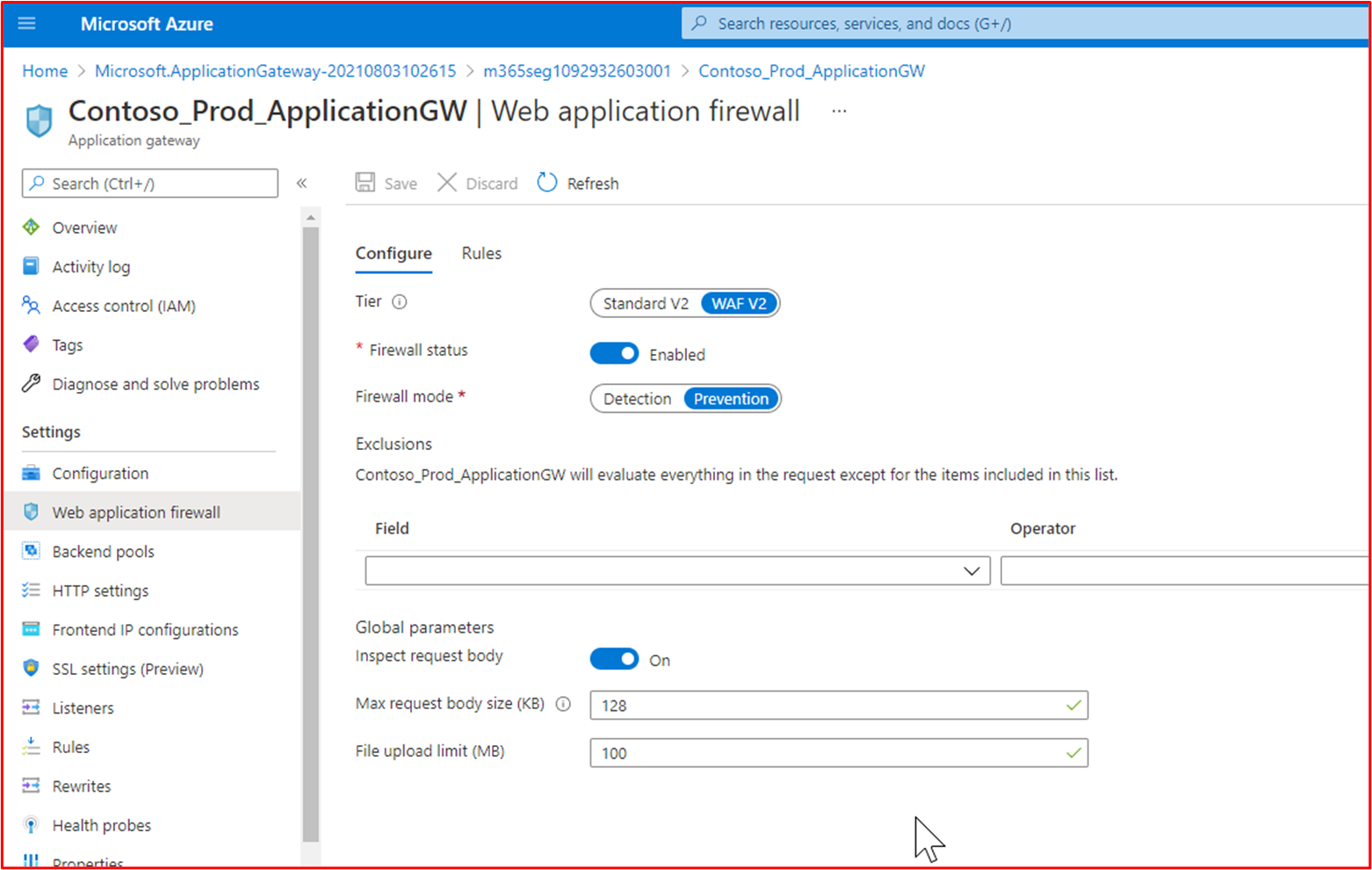

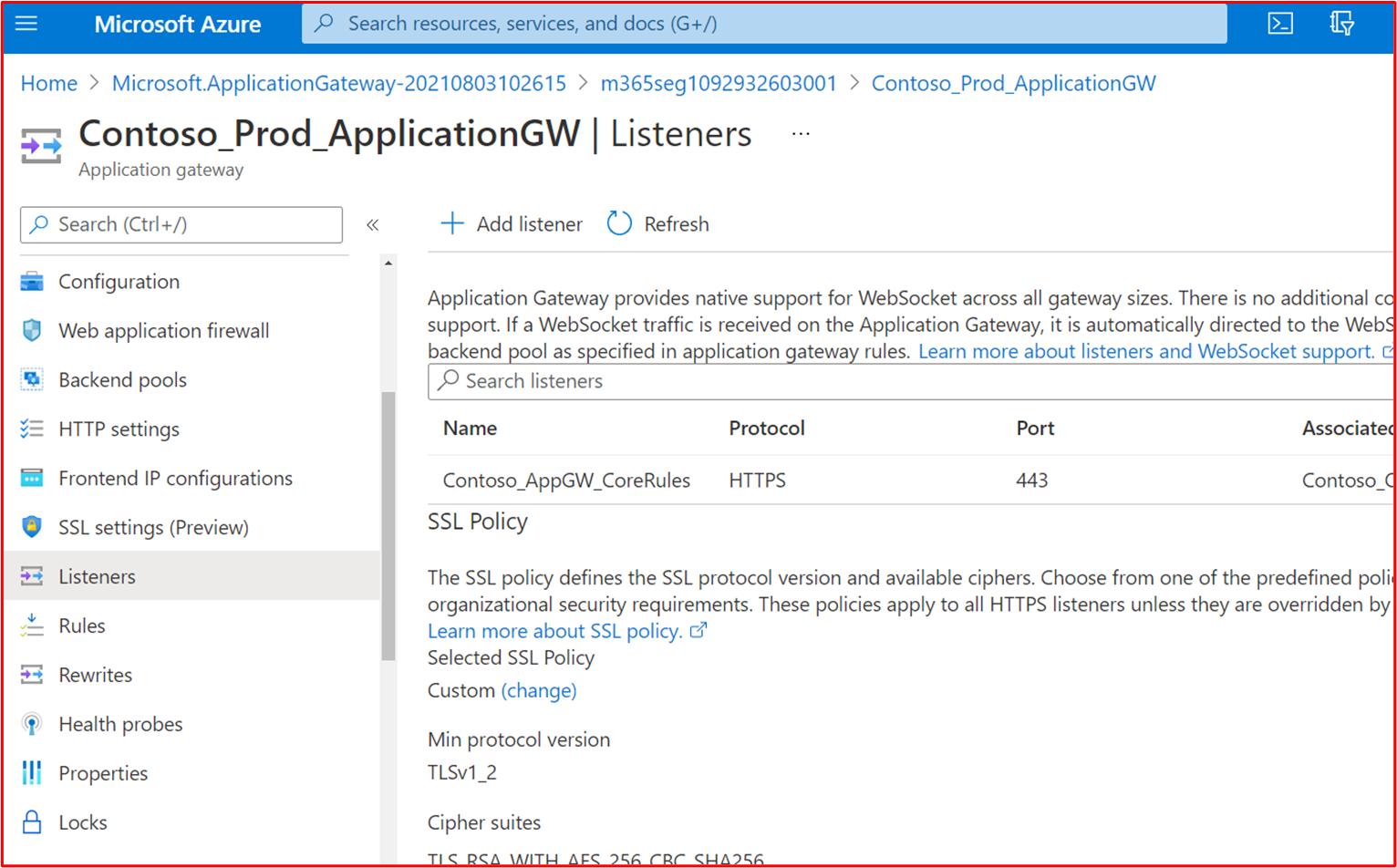

Example Evidence: The following screenshots show that the Contoso Production Azure Application Gateway WAF policy is enabled and configured for 'Prevention' mode, which actively drops malicious traffic.

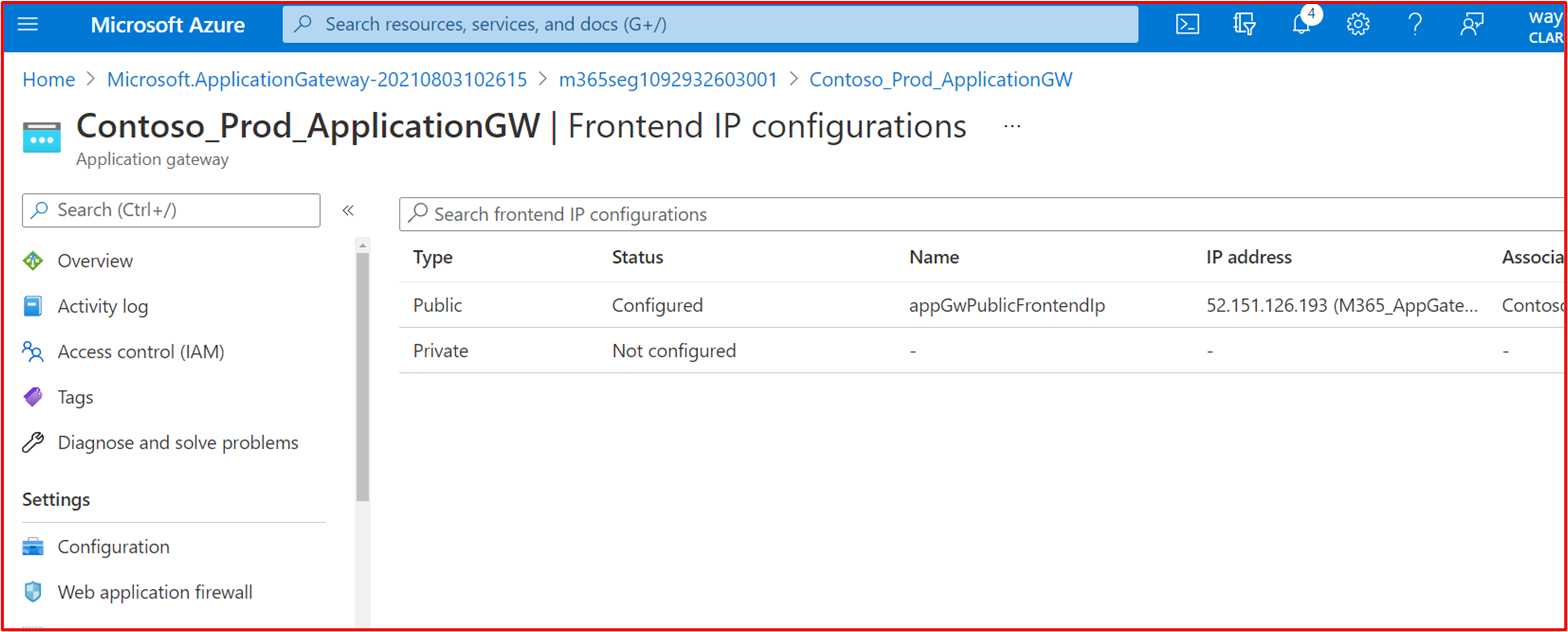

The screenshot below shows the frontend IP configuration.

Note: Evidence should demonstrate all public IPs used by the environment to ensure all ingress points are covered, which is why this screenshot is also included.

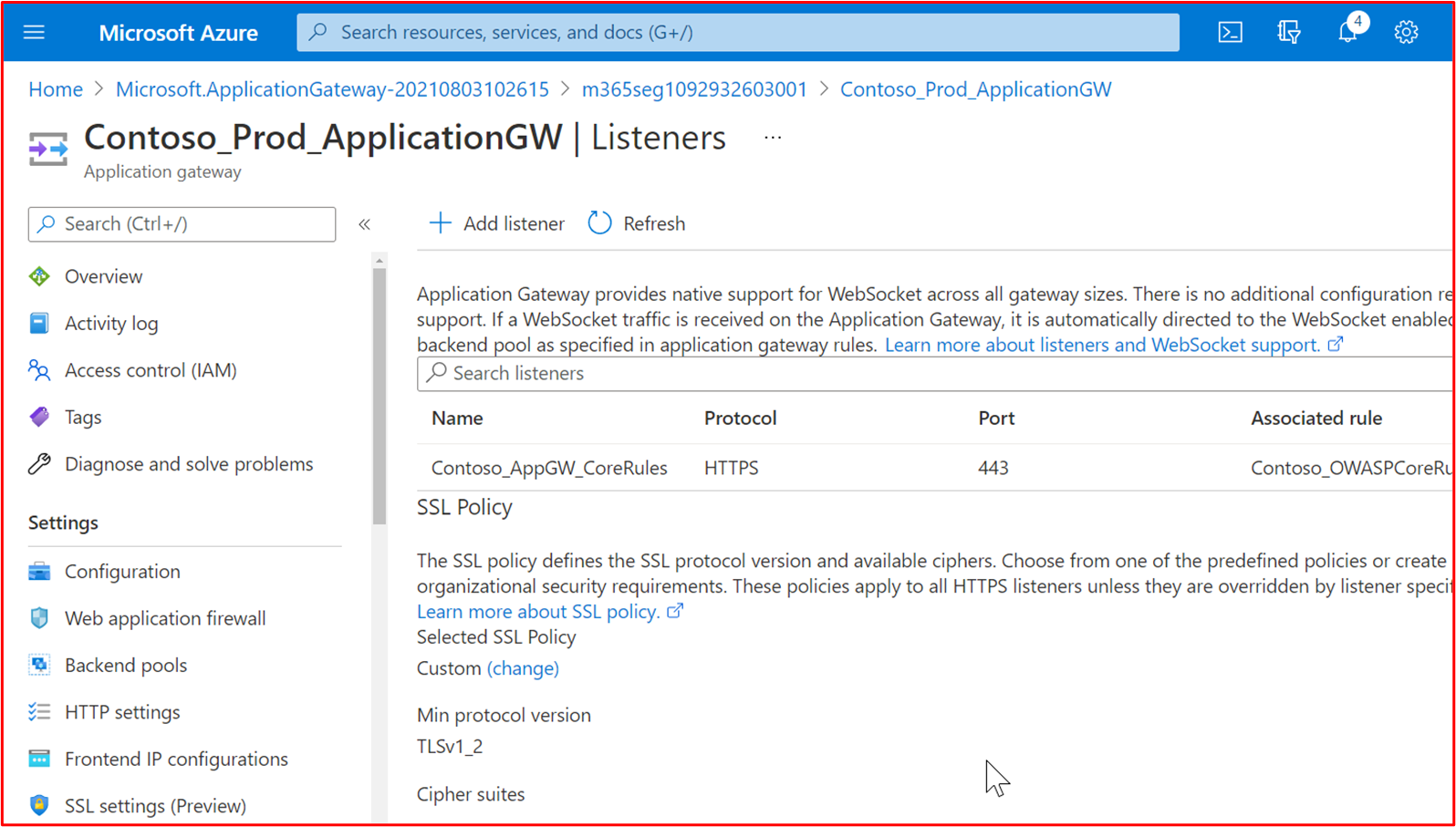

The below screenshot shows the incoming web connections using this WAF.

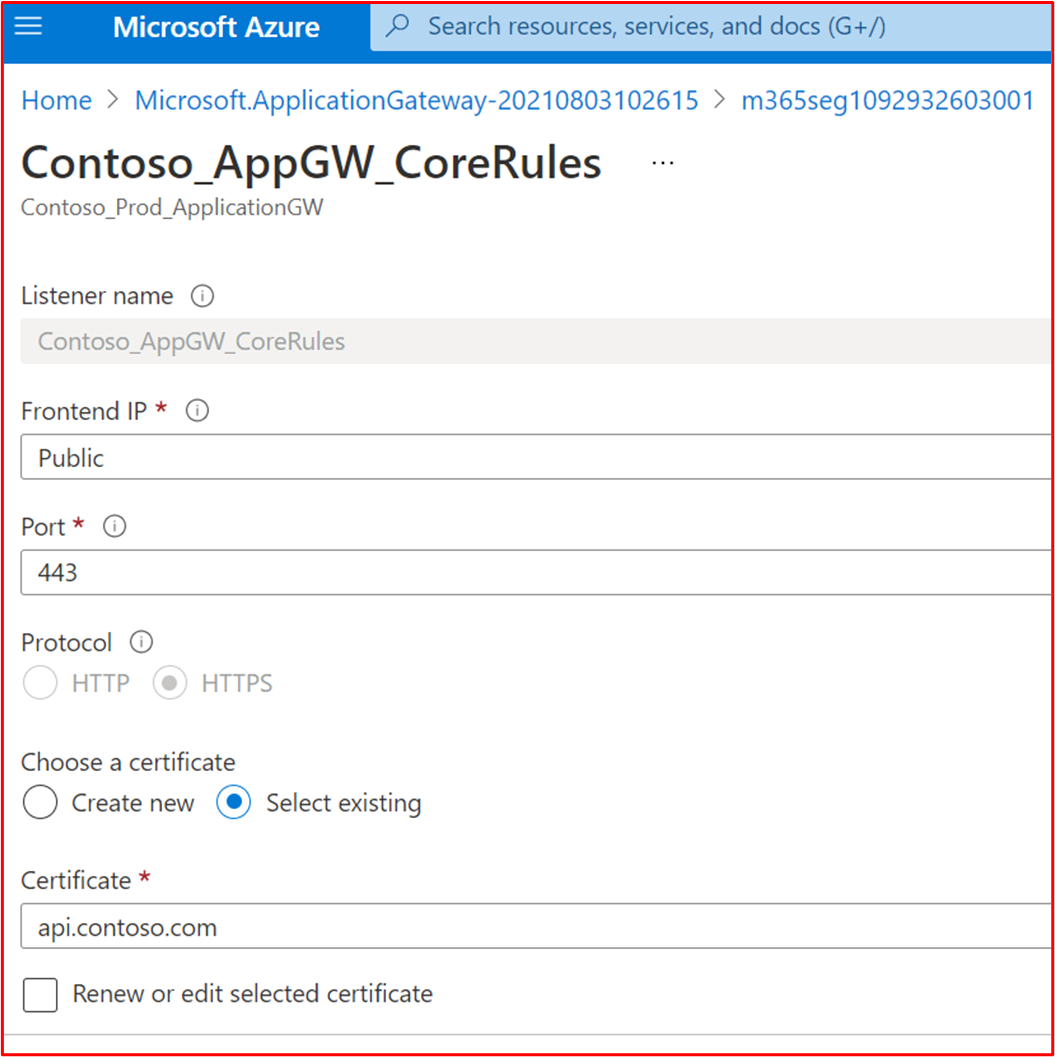

The following screenshot shows that Contoso_AppGW_CoreRules is used for the api.contoso.com service.

Control 27: Provide demonstrable evidence that the WAF supports SSL offloading.

Intent: The ability to configure the WAF to support SSL offloading is important; otherwise, the WAF will be unable to inspect HTTPS traffic. Since these environments need to support HTTPS traffic, this is a critical function that allows the WAF to identify and stop malicious payloads within HTTPS traffic.

Example Evidence Guidelines: Provide configuration evidence via a configuration export or screenshots which shows that SSL Offloading is supported and configured.

Example Evidence: Within Azure Application Gateway, configuring an SSL listener enables SSL offloading; see the Overview of TLS termination and end to end TLS with Application Gateway page on Microsoft Docs. The following screenshot shows this configured for the Contoso Production Azure Application Gateway.

Control 28: Provide demonstrable evidence that the WAF protects against some or all of the following classes of vulnerabilities as per the OWASP Core Rule Set (3.0 or 3.1):

- protocol and encoding issues,

- header injection, request smuggling, and response splitting,

- file and path traversal attacks,

- remote file inclusion (RFI) attacks,

- remote code execution attacks,

- PHP-injection attacks,

- cross-site scripting attacks,

- SQL-injection attacks,

- session-fixation attacks.

Intent: WAFs need to be configured to identify attack payloads for common classes of vulnerabilities. This control intends to ensure that adequate detection of vulnerability classes is covered by leveraging the OWASP Core Rule Set.

Example Evidence Guidelines: Provide configuration evidence via a configuration export or screenshots demonstrating that most of the vulnerability classes identified above are covered by the scanning.
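When working from a configuration export, the enabled rule groups can be mapped back to the vulnerability classes listed in Control 28. The following is a minimal sketch, assuming the OWASP CRS 3.x rule group names (which Azure's WAF managed rule sets also use) and a hypothetical list of enabled groups transcribed from an export. It doesn't account for individual rules disabled within a group.

```python
from typing import Iterable, List, Tuple

# Vulnerability classes from Control 28, keyed by the OWASP CRS 3.x rule group addressing each.
CRS_GROUP_TO_CLASS = {
    "REQUEST-920-PROTOCOL-ENFORCEMENT": "protocol and encoding issues",
    "REQUEST-921-PROTOCOL-ATTACK": "header injection, request smuggling, and response splitting",
    "REQUEST-930-APPLICATION-ATTACK-LFI": "file and path traversal attacks",
    "REQUEST-931-APPLICATION-ATTACK-RFI": "remote file inclusion (RFI) attacks",
    "REQUEST-932-APPLICATION-ATTACK-RCE": "remote code execution attacks",
    "REQUEST-933-APPLICATION-ATTACK-PHP": "PHP-injection attacks",
    "REQUEST-941-APPLICATION-ATTACK-XSS": "cross-site scripting attacks",
    "REQUEST-942-APPLICATION-ATTACK-SQLI": "SQL-injection attacks",
    "REQUEST-943-APPLICATION-ATTACK-SESSION-FIXATION": "session-fixation attacks",
}


def coverage_report(enabled_groups: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split the Control 28 classes into (covered, missing) lists."""
    enabled = set(enabled_groups)
    covered = [cls for grp, cls in CRS_GROUP_TO_CLASS.items() if grp in enabled]
    missing = [cls for grp, cls in CRS_GROUP_TO_CLASS.items() if grp not in enabled]
    return covered, missing


# Hypothetical export with the PHP rule group disabled.
enabled = [g for g in CRS_GROUP_TO_CLASS if g != "REQUEST-933-APPLICATION-ATTACK-PHP"]
covered, missing = coverage_report(enabled)
print(f"{len(covered)} of {len(CRS_GROUP_TO_CLASS)} classes covered; missing: {missing}")
# 8 of 9 classes covered; missing: ['PHP-injection attacks']
```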

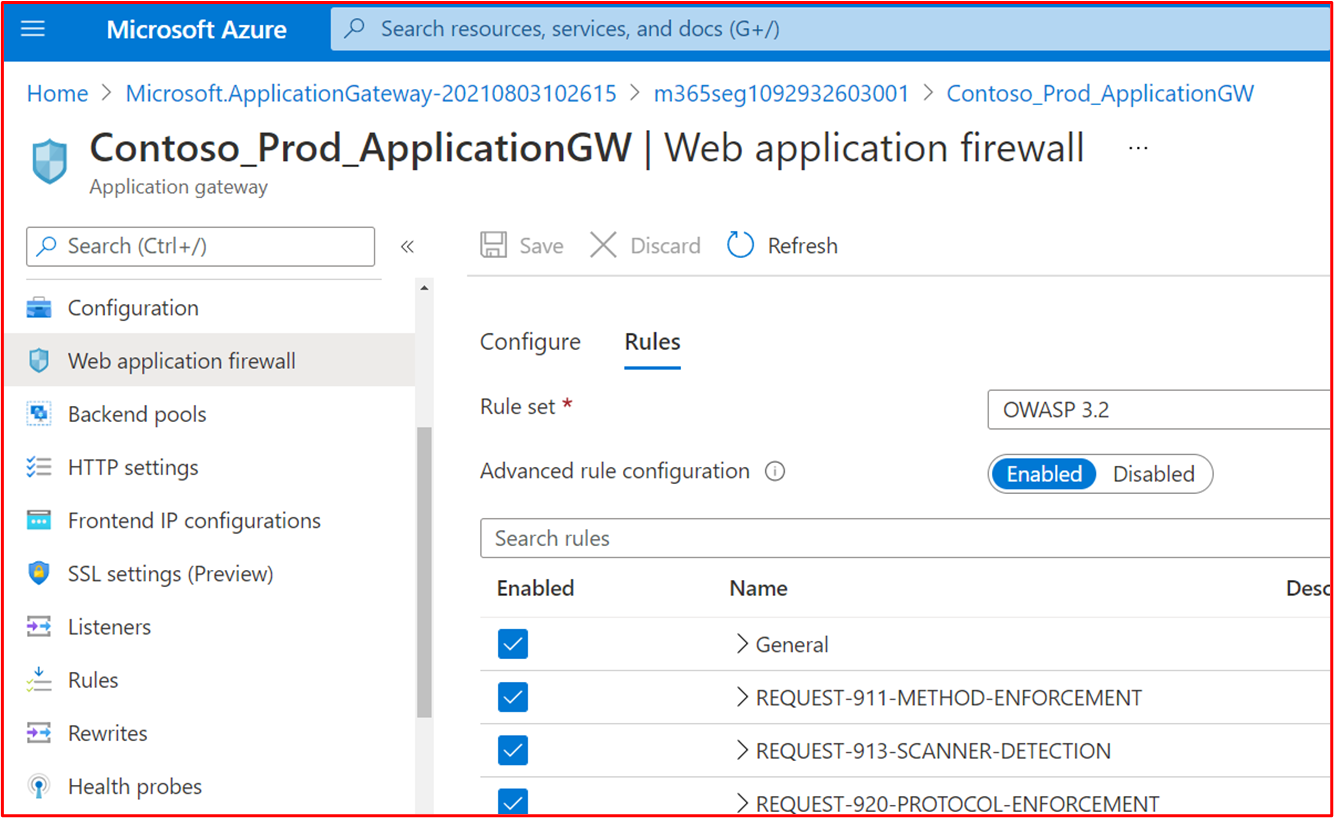

Example Evidence: The below screenshot shows that the Contoso Production Azure Application Gateway WAF policy is configured to scan against the OWASP Core Rule Set Version 3.2.

Change Control

An established and understood change control process is essential to ensure that all changes go through a structured, repeatable process. By ensuring all changes go through a structured process, organizations can ensure changes are effectively managed, peer reviewed, and adequately tested before being signed off. This not only helps to minimize the risk of system outages, but also helps to minimize the risk of potential security incidents caused by improper changes.

Control 29: Provide policy documentation that governs change control processes.

Intent: To maintain a secure environment and secure application, a robust change control process must be established so that all infrastructure and code changes are carried out with strong oversight and defined processes. This ensures that changes are documented, that their security impact has been considered, and so on. The intent is for the change control process to be documented so that a secure and consistent approach is taken to all changes within both the environment and application development practices.

Example Evidence Guidelines: The documented change control policies/procedures should be shared with the Certification Analysts.

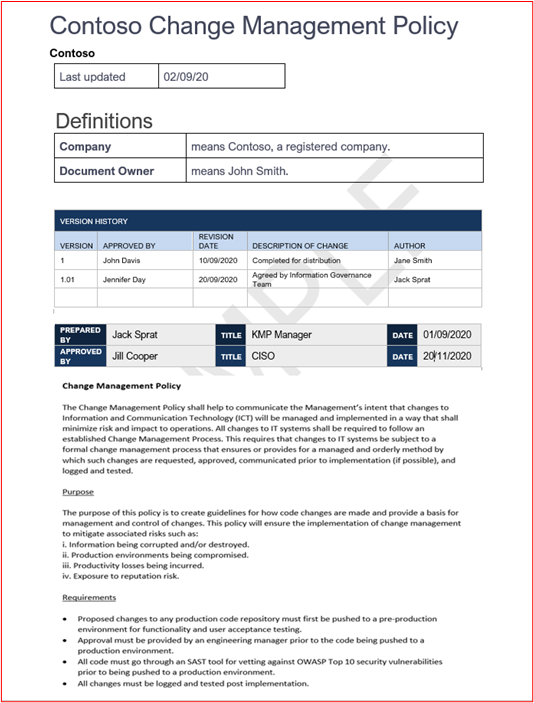

Example Evidence: Below shows the start of an example change management policy. Please supply your full policies and procedures as part of the assessment.

Note: This screenshot shows a policy/process document; the expectation is for ISVs to share the actual supporting policy/procedure documentation and not simply provide a screenshot.

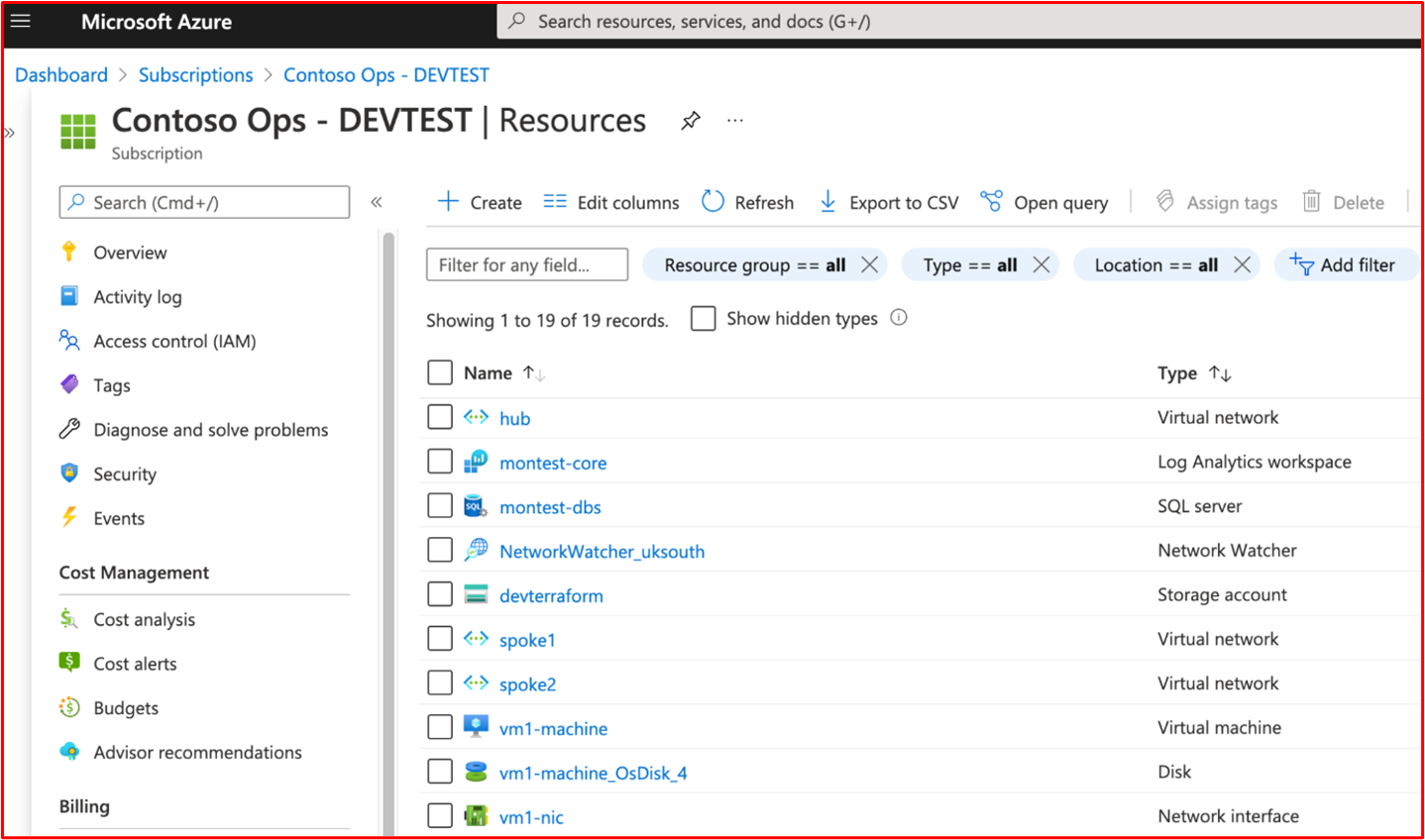

Control 30: Provide demonstrable evidence that development and test environments enforce separation of duties from the production environment.

Intent: Most organizations' development/test environments aren't configured with the same rigor as production environments and are therefore less secure. Additionally, testing shouldn't be carried out within the production environment, as this can introduce security issues or be detrimental to service delivery for customers. By maintaining separate environments that enforce a separation of duties, organizations can ensure changes are applied to the correct environments, thereby reducing the risk of a change intended for the development/test environment being applied to production by mistake.

Example Evidence Guidelines: Screenshots could be provided which demonstrate different environments being used for development / test environments and production environments. Typically, you would have different people / teams with access to each environment, or where this isn't possible, the environments would utilize different authorization services to ensure users can't mistakenly log into the wrong environment to apply changes.

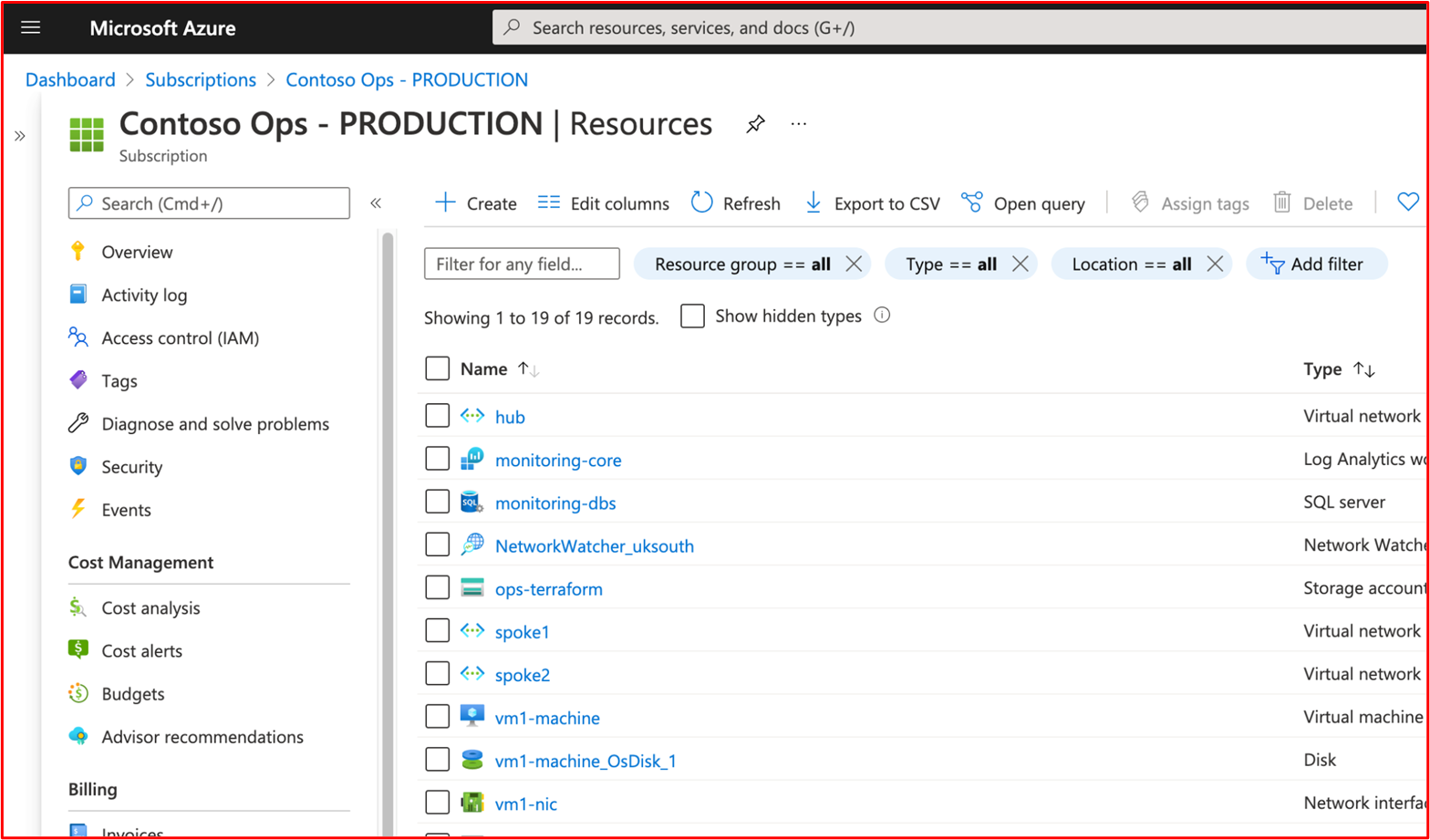

Example Evidence: The following screenshot shows an Azure subscription for Contoso's TEST environment.

This next screenshot shows a separate Azure subscription for Contoso's 'PRODUCTION' environment.

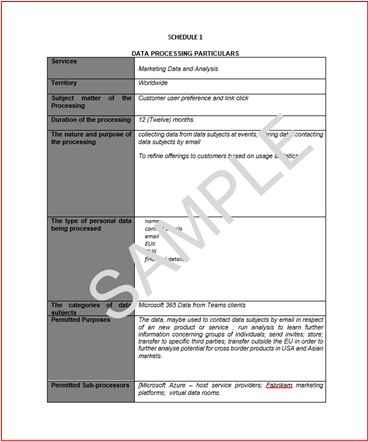

Control 31: Provide demonstrable evidence that sensitive production data isn't used within the development or test environments.

Intent: As already discussed above, organizations often don't implement security measures in development/test environments with the same rigor as in the production environment. Therefore, using sensitive production data in these development/test environments increases the risk of a compromise, and organizations must avoid using live/sensitive data within them.

Note: You can use live data in development/test environments, provided the development/test environment is included within the scope of the assessment so its security can be assessed against the Microsoft 365 Certification controls.

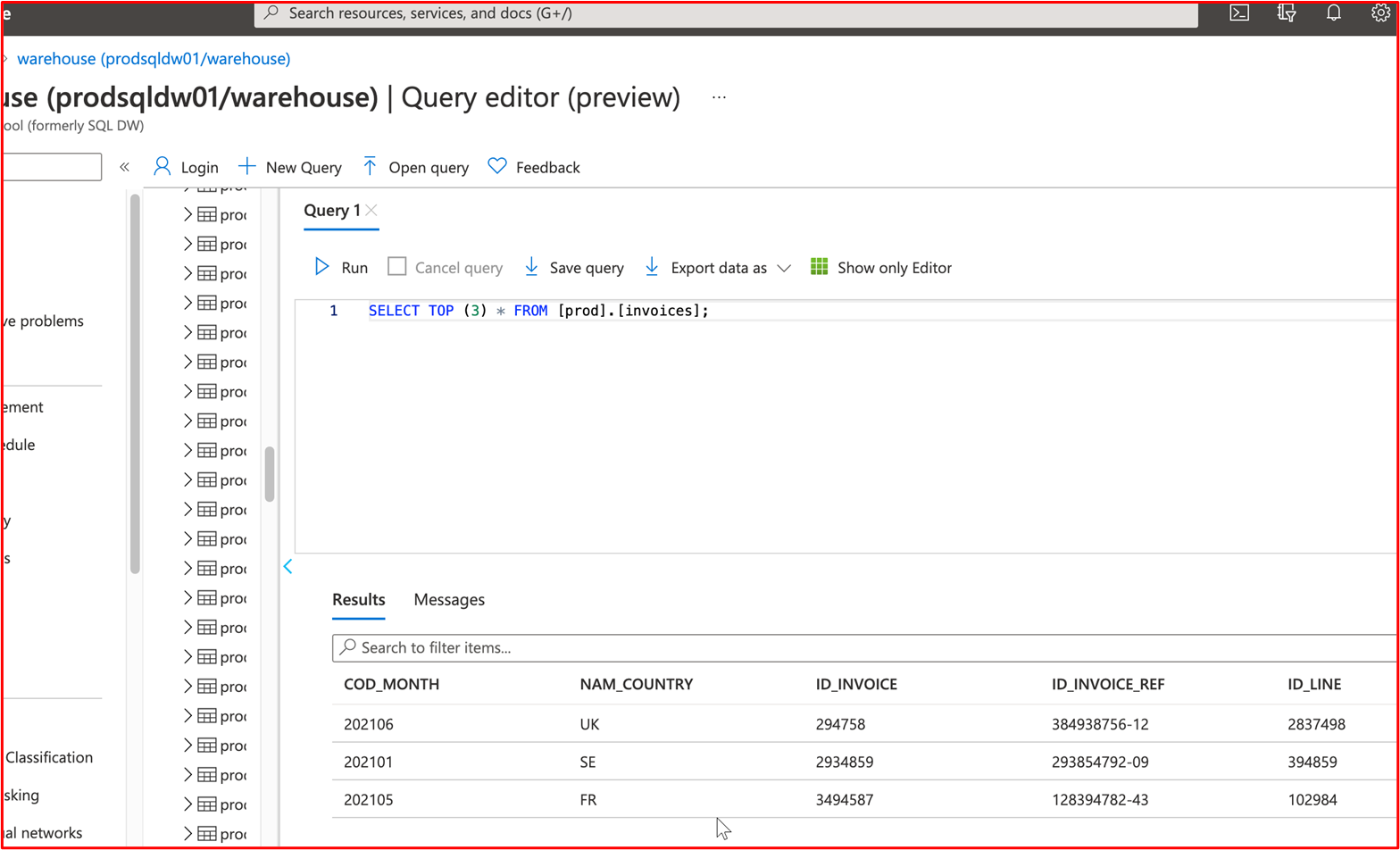

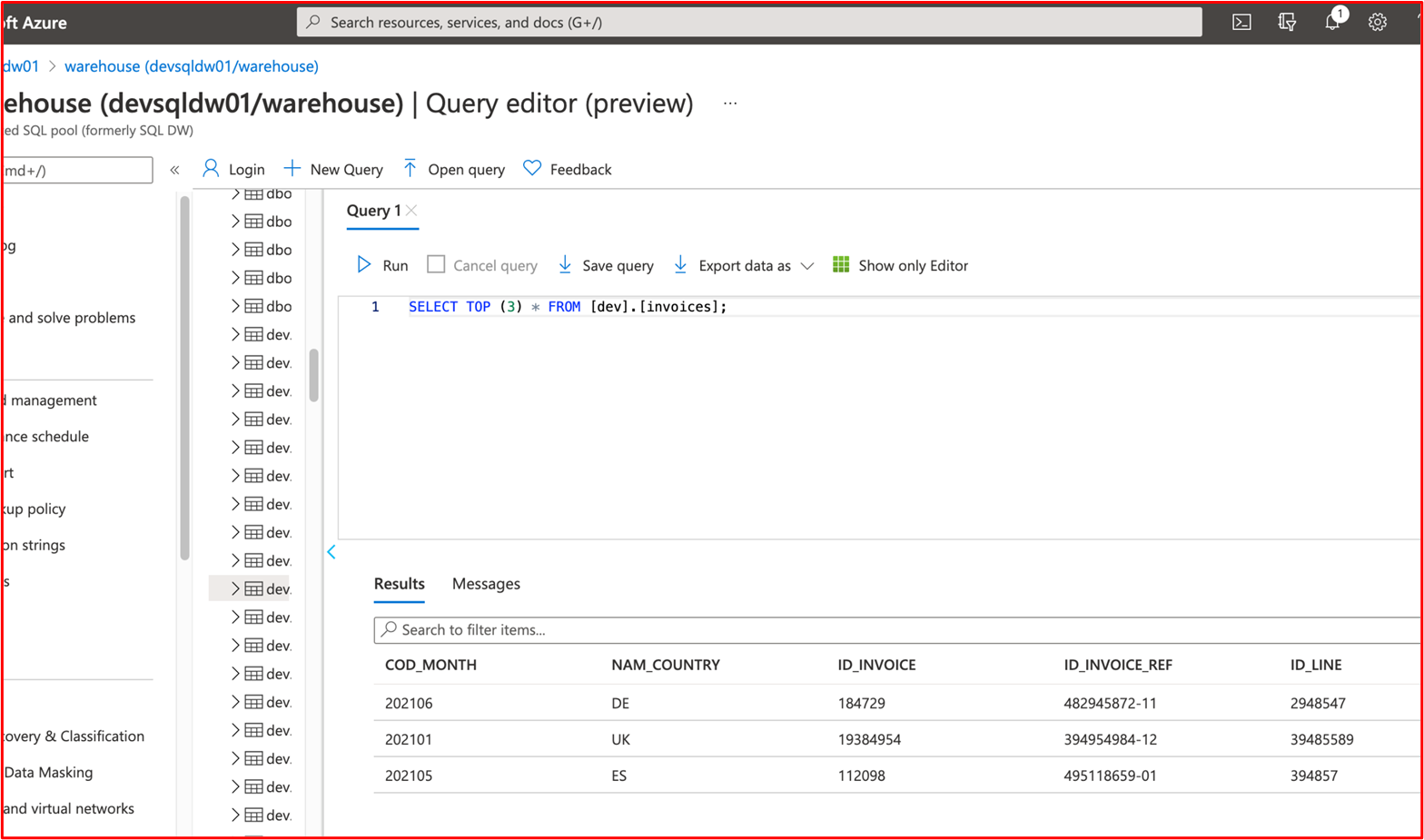

Example Evidence Guidelines: Evidence can be provided by sharing screenshots of the output of the same SQL query against a production database (redact any sensitive information) and the development/test database. The output of the same commands should produce different data sets. Where files are being stored, viewing the contents of the folders within both environments should also demonstrate different data sets.
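The comparison described above can be scripted so that the same query runs against both environments and any identical records are counted. The following is a minimal sketch using Python's built-in `sqlite3` module with in-memory databases standing in for real connections; the `customers` table, its columns, and the sample rows are hypothetical. It only compares the sampled rows returned by the query, not the entire data sets.

```python
import sqlite3
from typing import List, Set, Tuple

# Same query run against both environments; table and column names are hypothetical.
QUERY = "SELECT id, name, email FROM customers ORDER BY id LIMIT 20"


def sample_rows(conn: sqlite3.Connection) -> Set[Tuple]:
    """Run the shared query and return its rows as a set for comparison."""
    return set(conn.execute(QUERY).fetchall())


def make_demo_db(rows: List[Tuple]) -> sqlite3.Connection:
    """Build an in-memory stand-in for a real database connection."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


prod = make_demo_db([(1, "Example Customer", "customer@example.com")])
dev = make_demo_db([(1, "Test User", "test.user@example.test")])

shared = sample_rows(prod) & sample_rows(dev)
print(f"{len(shared)} identical rows in the sample")  # 0 identical rows in the sample
```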

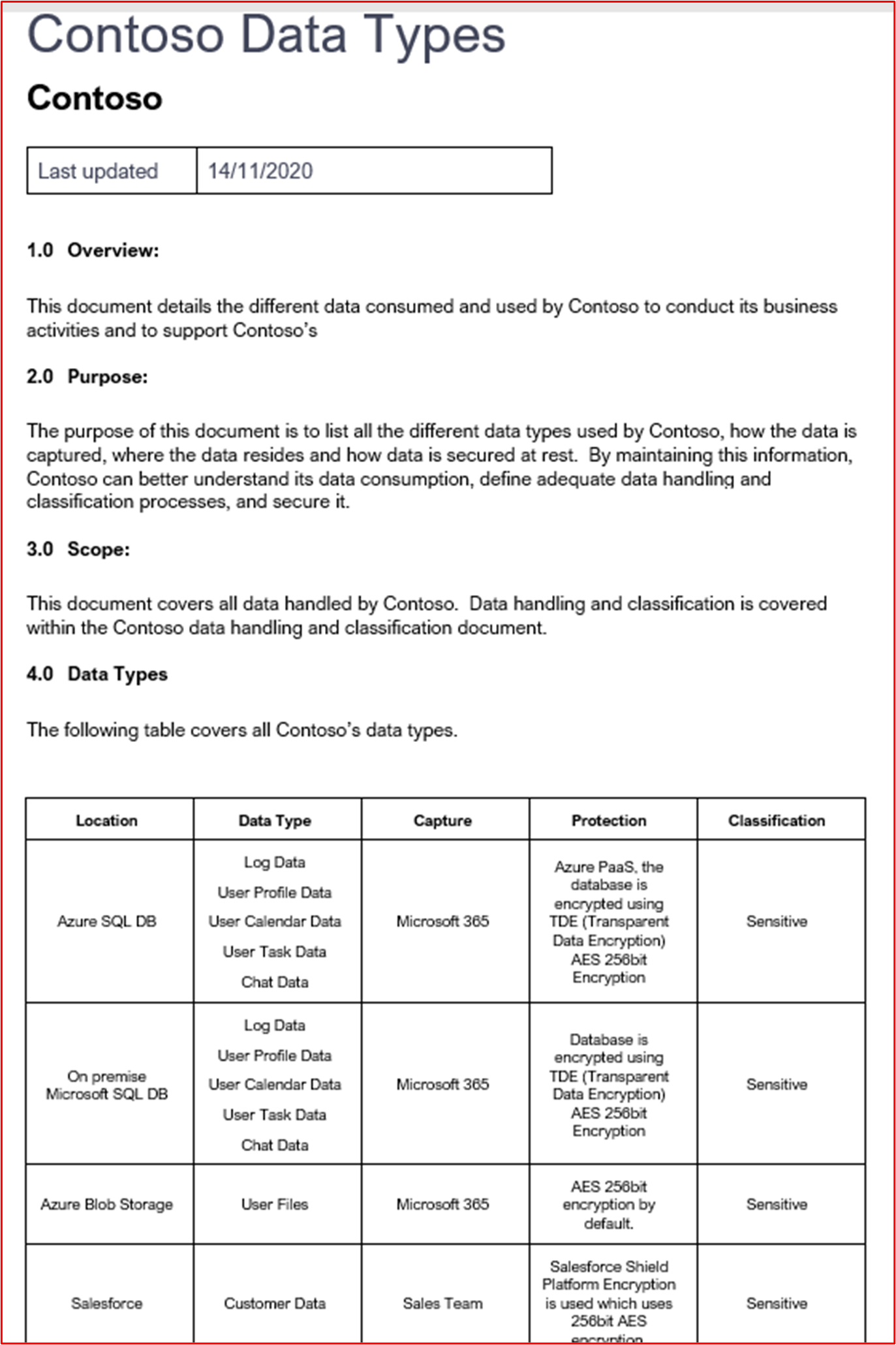

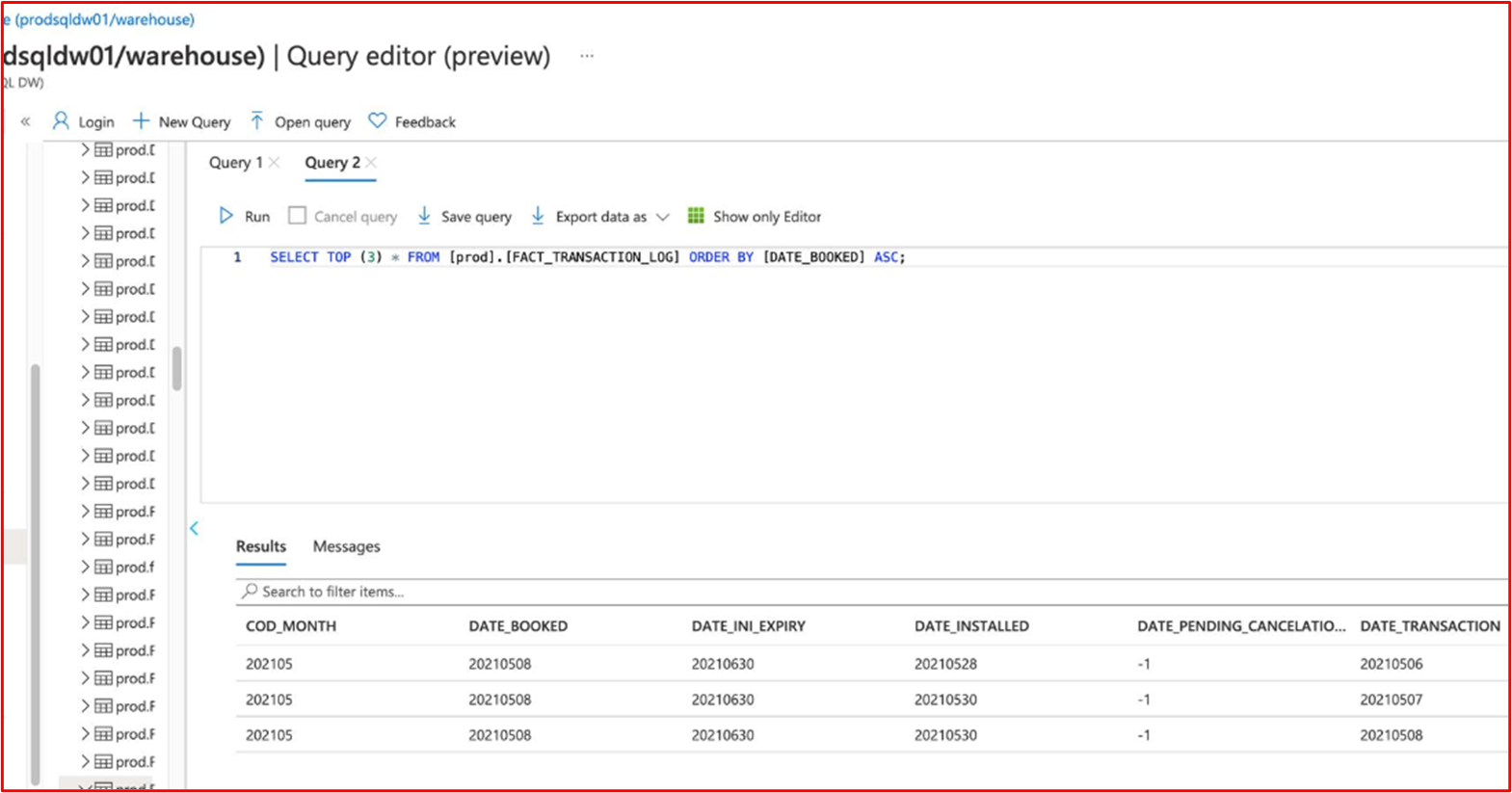

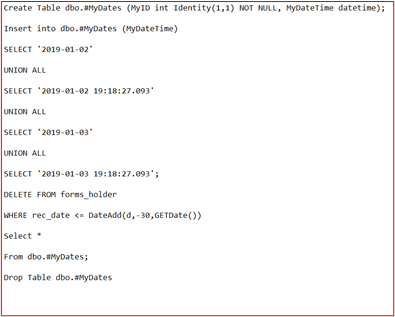

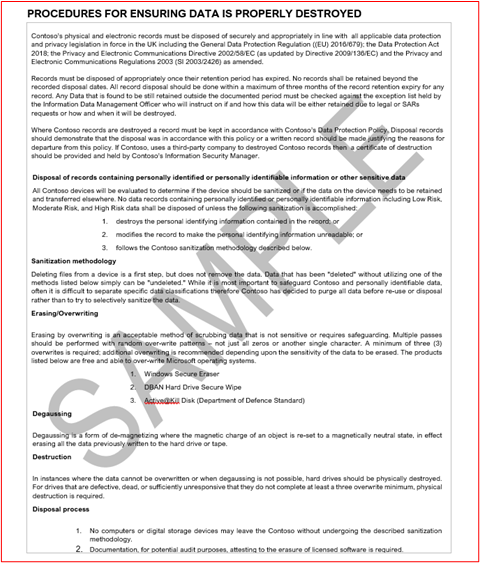

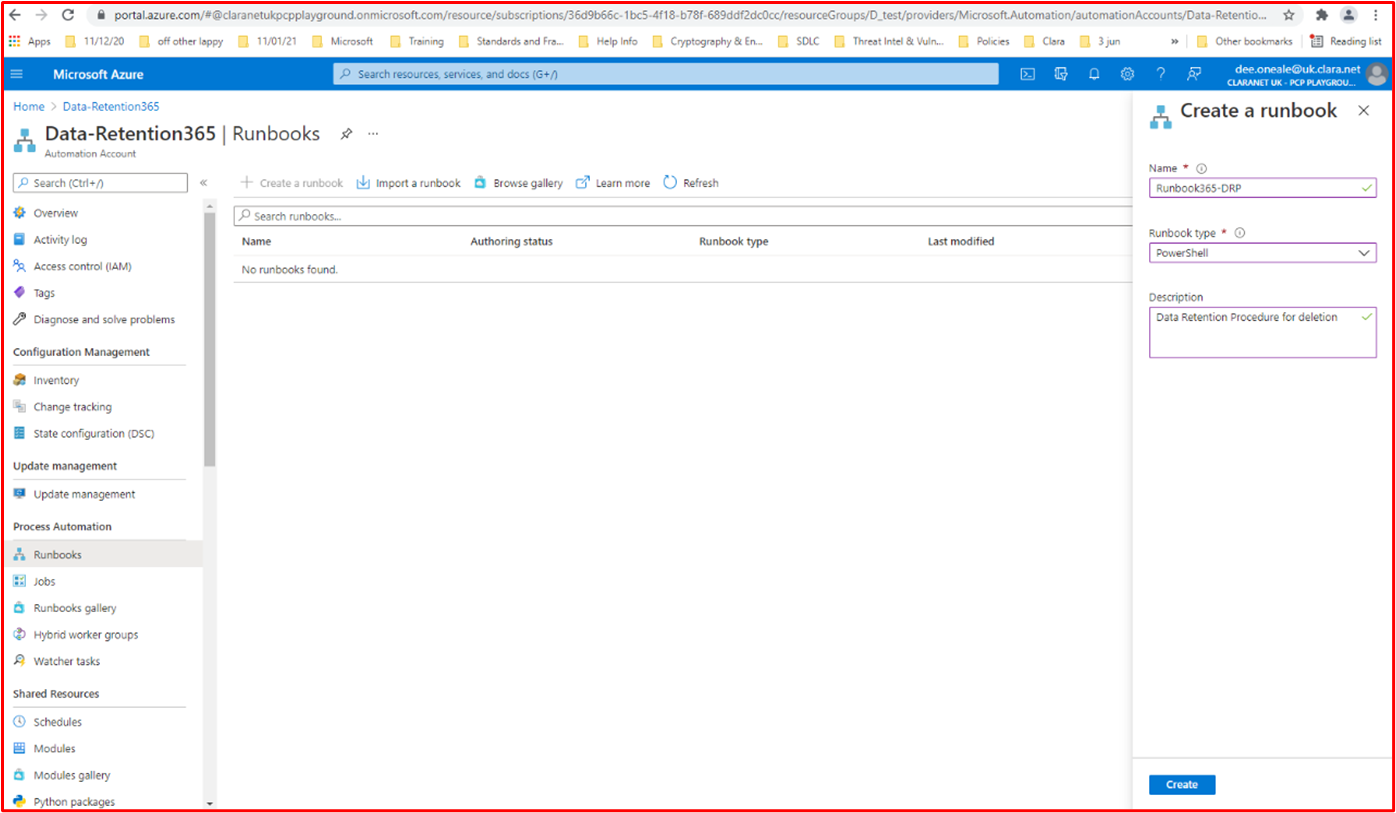

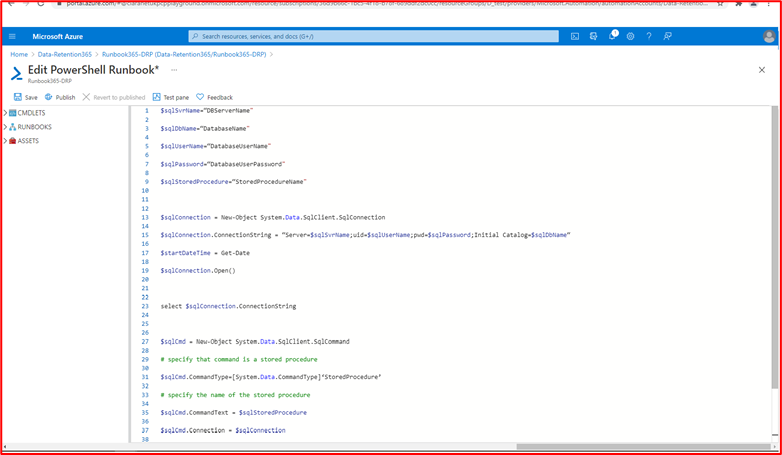

Example Evidence: The following screenshot shows the top 3 records (for evidence submission, please provide top 20) from the Production Database.

The next screenshot shows the same query from the Development Database, showing different records.

This demonstrates that the data sets are different.

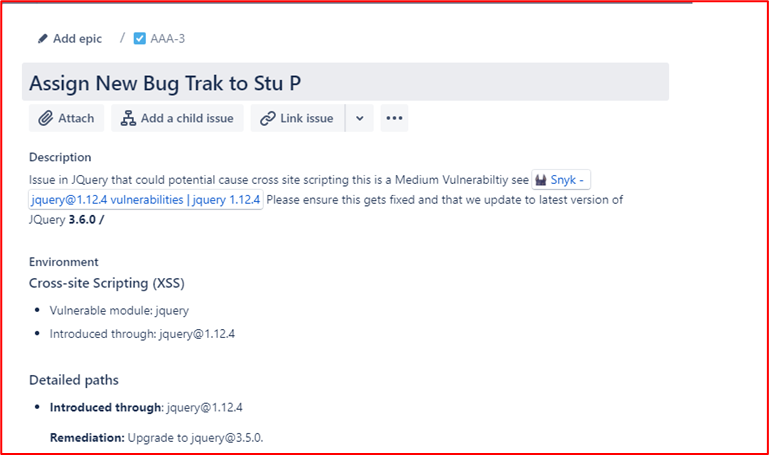

Control 32: Provide demonstrable evidence that documented change requests contain the impact of the change, details of back-out procedures, and details of testing to be carried out.

Intent: The intent of this control is to ensure thought has gone into the change being requested. The impact of the change on the security of the system/environment needs to be considered and clearly documented, back-out procedures need to be documented to aid recovery should something go wrong, and details of the testing needed to validate that the change was successful also need to be considered and documented.

Example Evidence Guidelines: Evidence can be provided by exporting a sample of change requests, providing paper change requests, or providing screenshots of the change requests showing these three details held within the change request.
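If change requests are exported from a ticketing system, the sample can be checked for the three required details before submission. The following is a minimal sketch; the `ChangeRequest` fields and the ticket contents are hypothetical stand-ins for whatever fields your change control system uses.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChangeRequest:
    ticket_id: str
    impact: str = ""             # impact of the change, including security impact
    backout_procedure: str = ""  # how to roll back if the change fails
    test_plan: str = ""          # testing that validates the change


REQUIRED_FIELDS = ("impact", "backout_procedure", "test_plan")


def missing_details(change: ChangeRequest) -> List[str]:
    """Return the required fields that are empty or whitespace-only."""
    return [name for name in REQUIRED_FIELDS if not getattr(change, name).strip()]


change = ChangeRequest(
    "CHG-1042",
    impact="Adds output encoding to the search page; no downtime expected.",
    backout_procedure="Redeploy the previous release tag.",
)
print(missing_details(change))  # ['test_plan']
```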

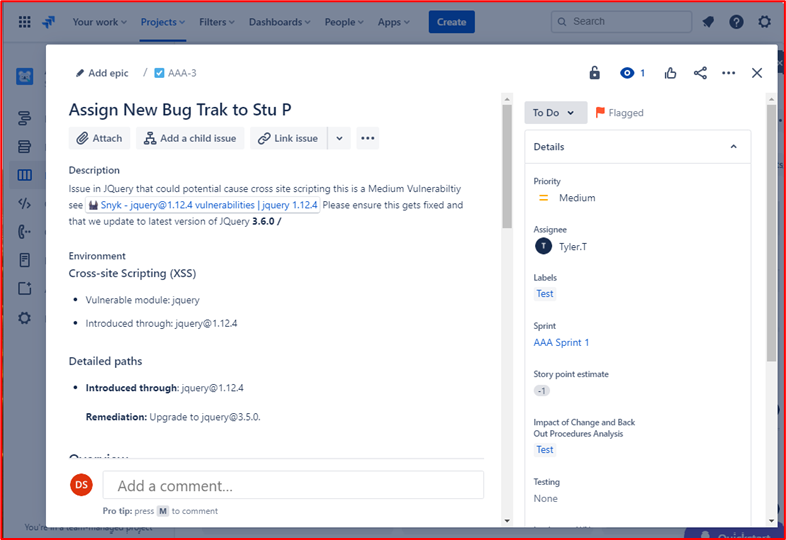

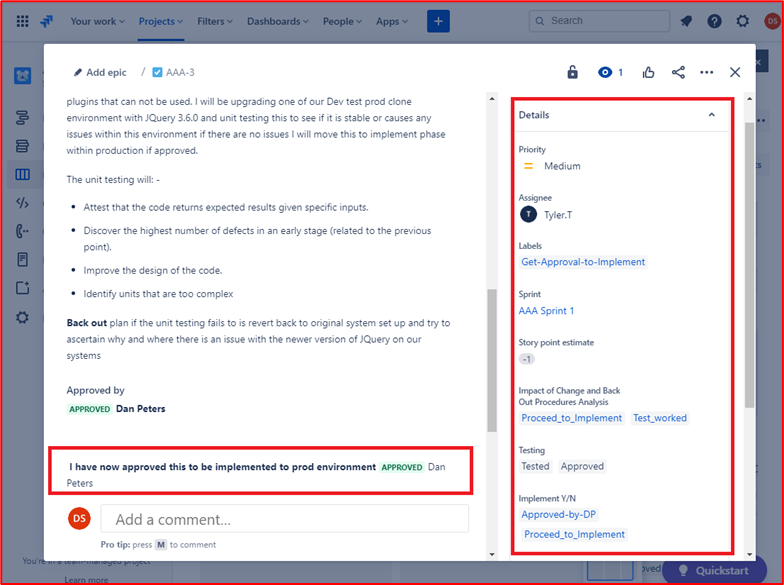

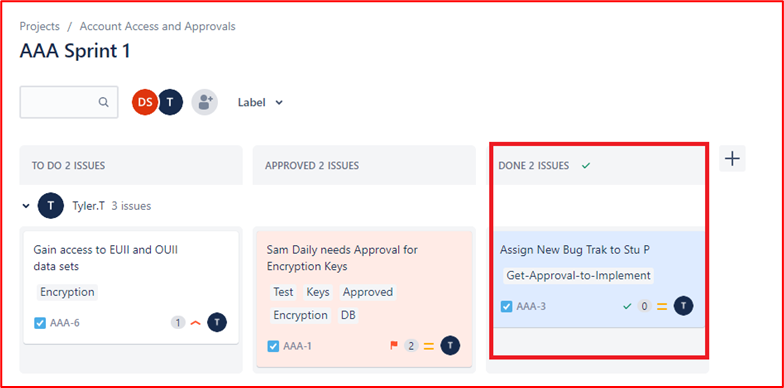

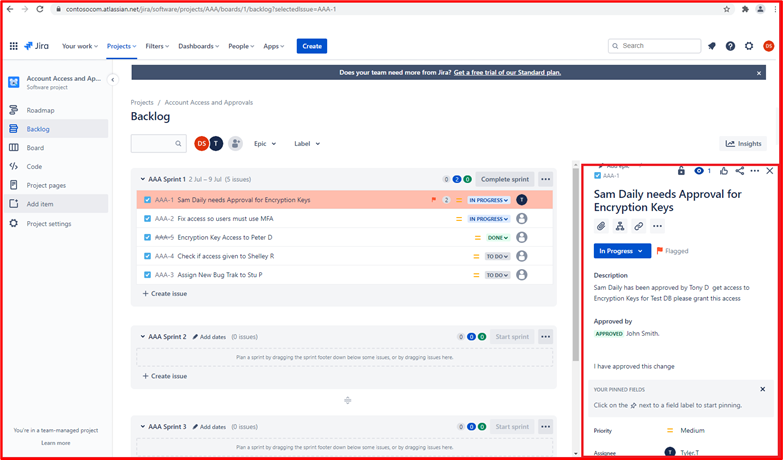

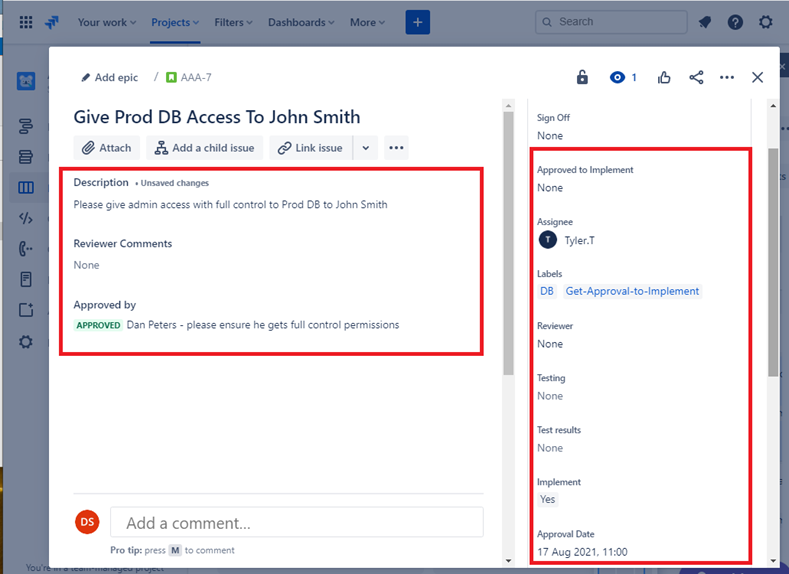

Example Evidence: The image below shows a new cross-site scripting (XSS) vulnerability being assigned and documented as a change request.

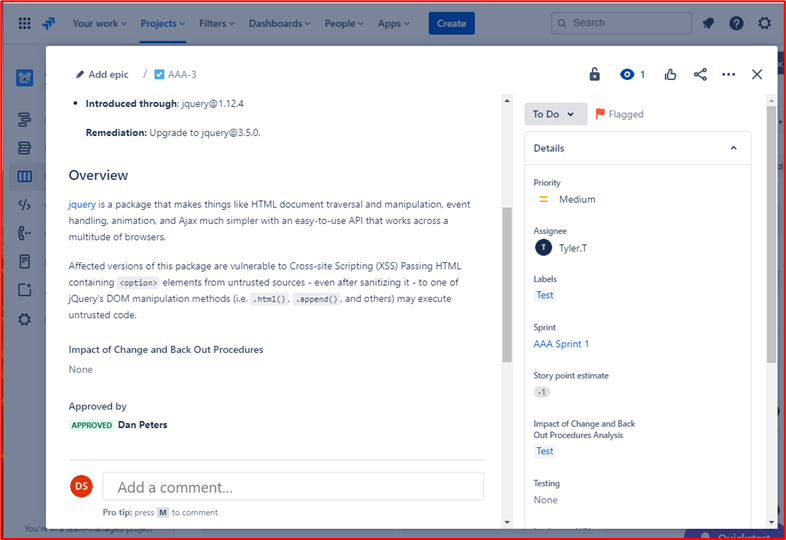

The below tickets show the information that has been set or added to the ticket on its journey to being resolved.

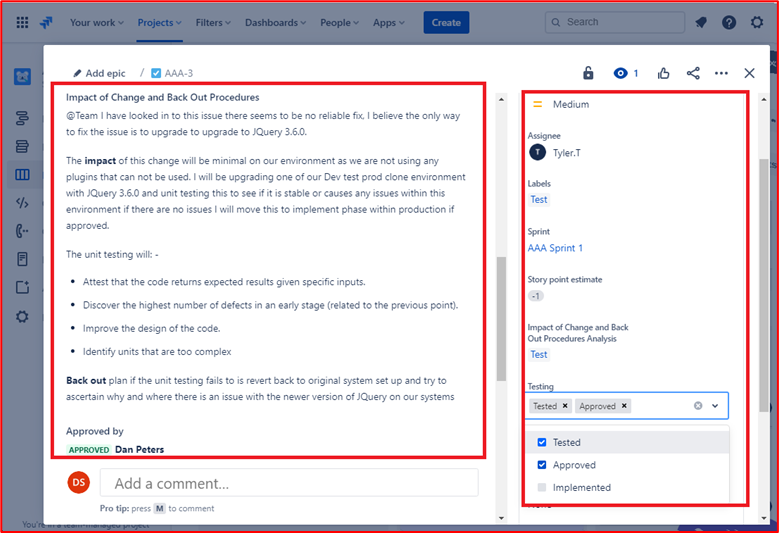

The two tickets below show the impact of the change to the system and any back out procedures which may be needed in the event of an issue. You can see impact of changes and back out procedures have gone through an approval process and have been approved for testing.

On the left of the screen, you can see that testing the changes has been approved, on the right you see that the changes have now been approved and tested.

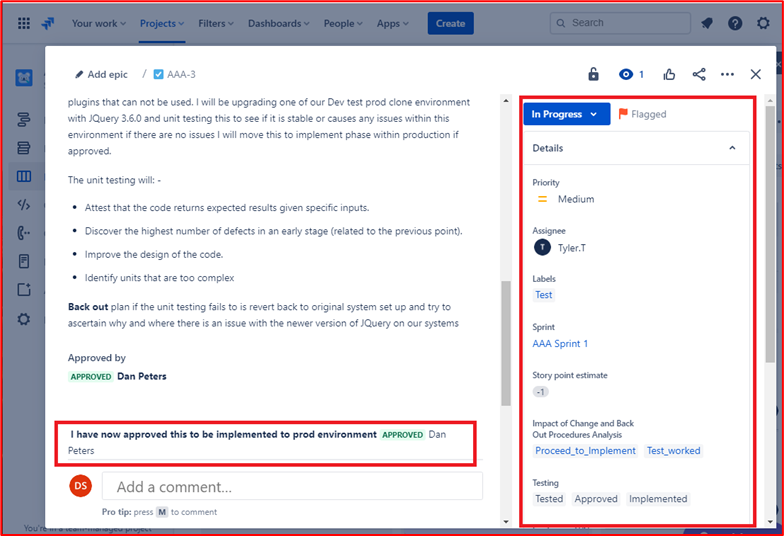

Note that throughout the process, the person doing the work, the person reporting it, and the person approving the work are different people.

The ticket above shows that the changes have now been approved for implementation in the production environment. The right-hand box shows that the test was successful and that the changes have now been implemented in the production environment.

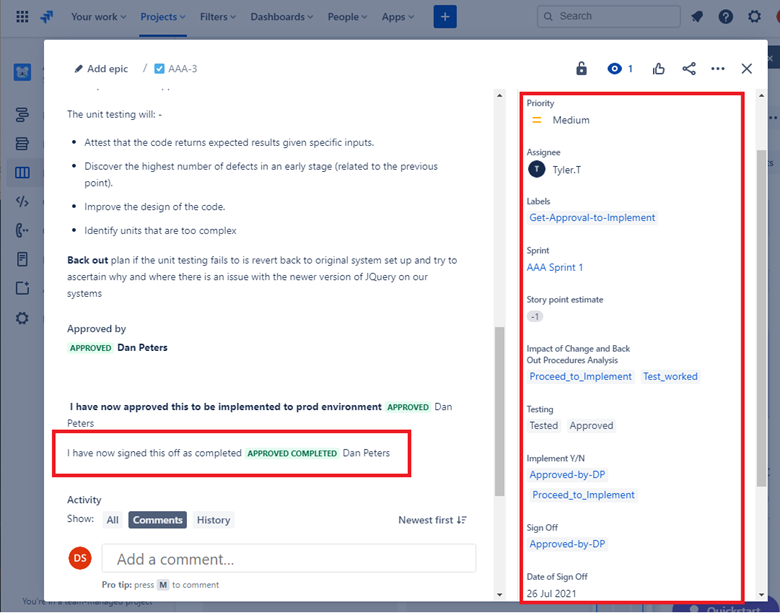

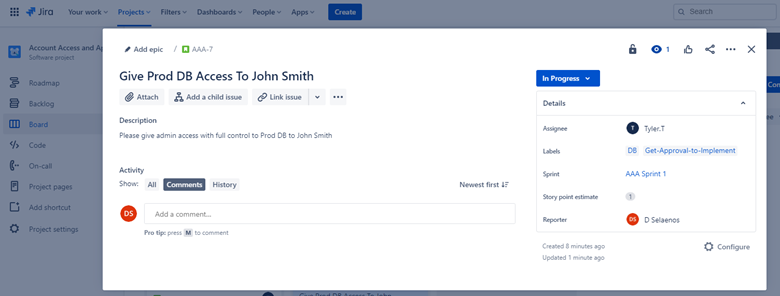

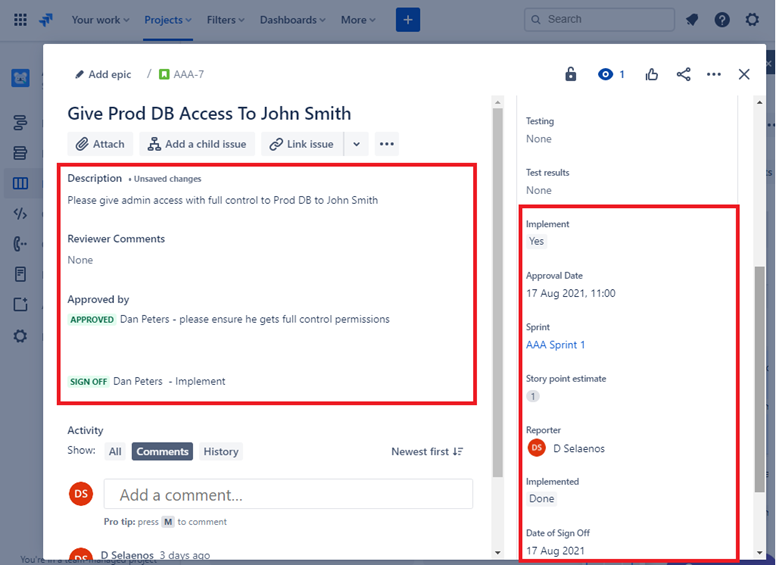

Control 33: Provide demonstrable evidence that change requests undergo an authorization and sign-off process.

Intent: A process must be implemented that forbids changes from being carried out without proper authorization and sign-off. The change needs to be authorized before being implemented and signed off once complete. This ensures that change requests have been properly reviewed and that someone in authority has signed off the change.

Example Evidence Guidelines: Evidence can be provided by exporting a sample of change requests, providing paper change requests, or providing screenshots of the change requests showing the change has been authorized prior to implementation and signed off after completion.

Example Evidence: The screenshot below shows an example Jira ticket in which the change must be authorized before being implemented, and approved by someone other than the developer/requester. You can see that the changes here are approved by someone with authority. On the right, the change has been signed off by DP once complete.

In the ticket below you can see the change has been signed off once complete and shows the job completed and closed.

Secure Software Development/Deployment

Organizations involved in software development activities often face competing priorities between security and TTM (Time to Market) pressures; however, implementing security-related activities throughout the software development lifecycle (SDLC) can save not only money but also time. When security is left as an afterthought, issues are usually only identified during the test phase of the SDLC, when they can be more time consuming and costly to fix. The intent of this security section is to ensure secure software development practices are followed to reduce the risk of coding flaws being introduced into the developed software. Additionally, this section includes some controls to aid in the secure deployment of software.

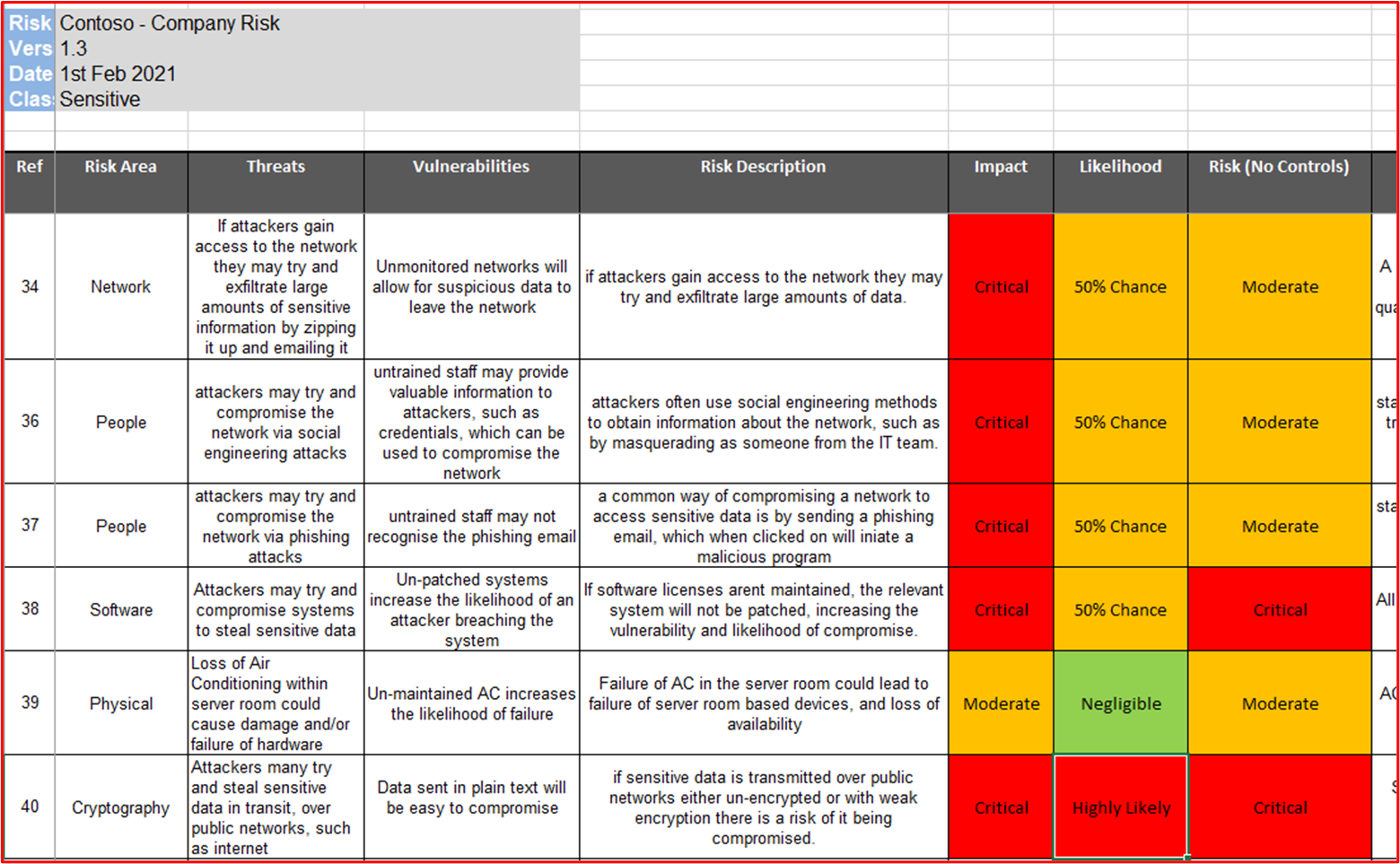

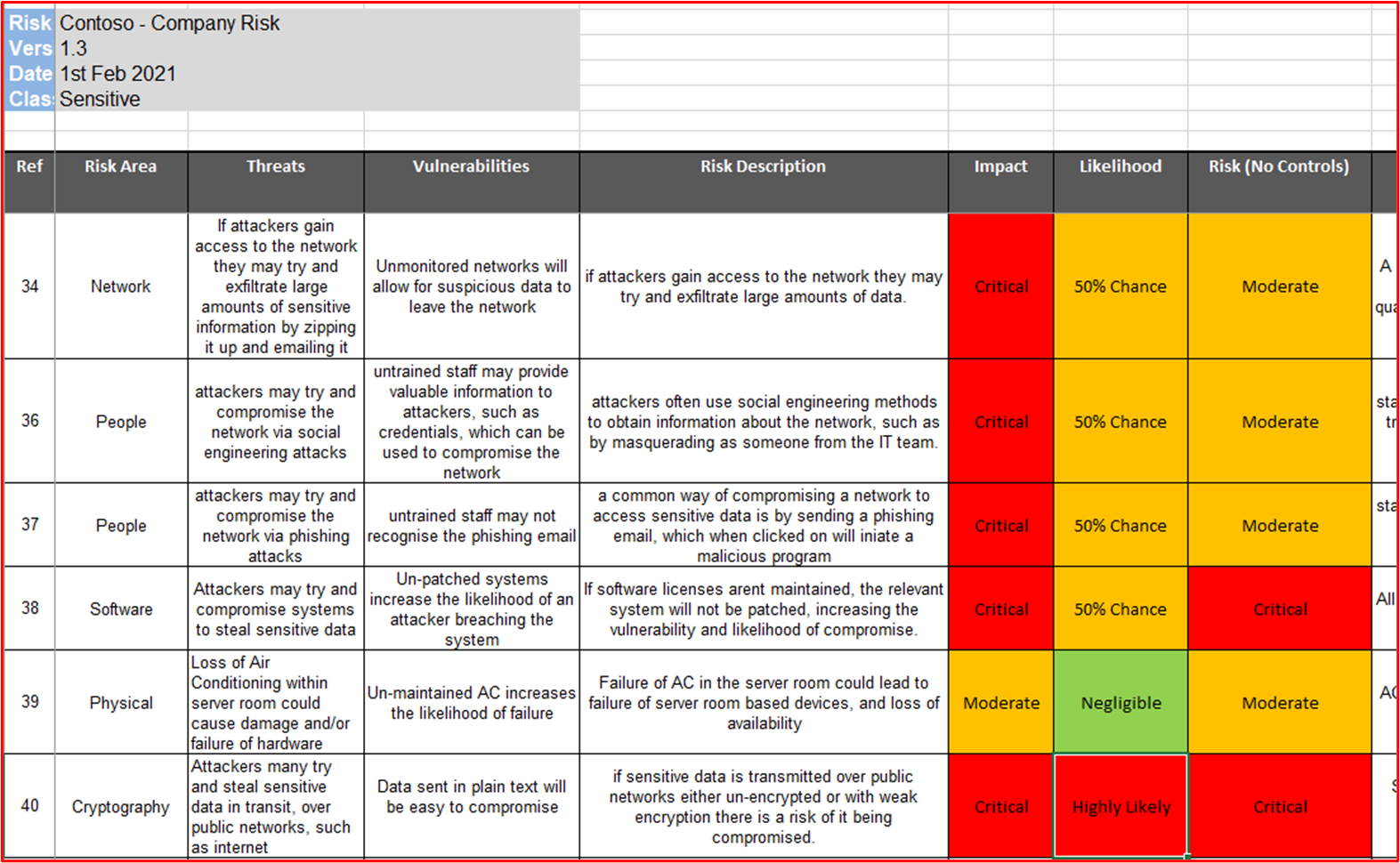

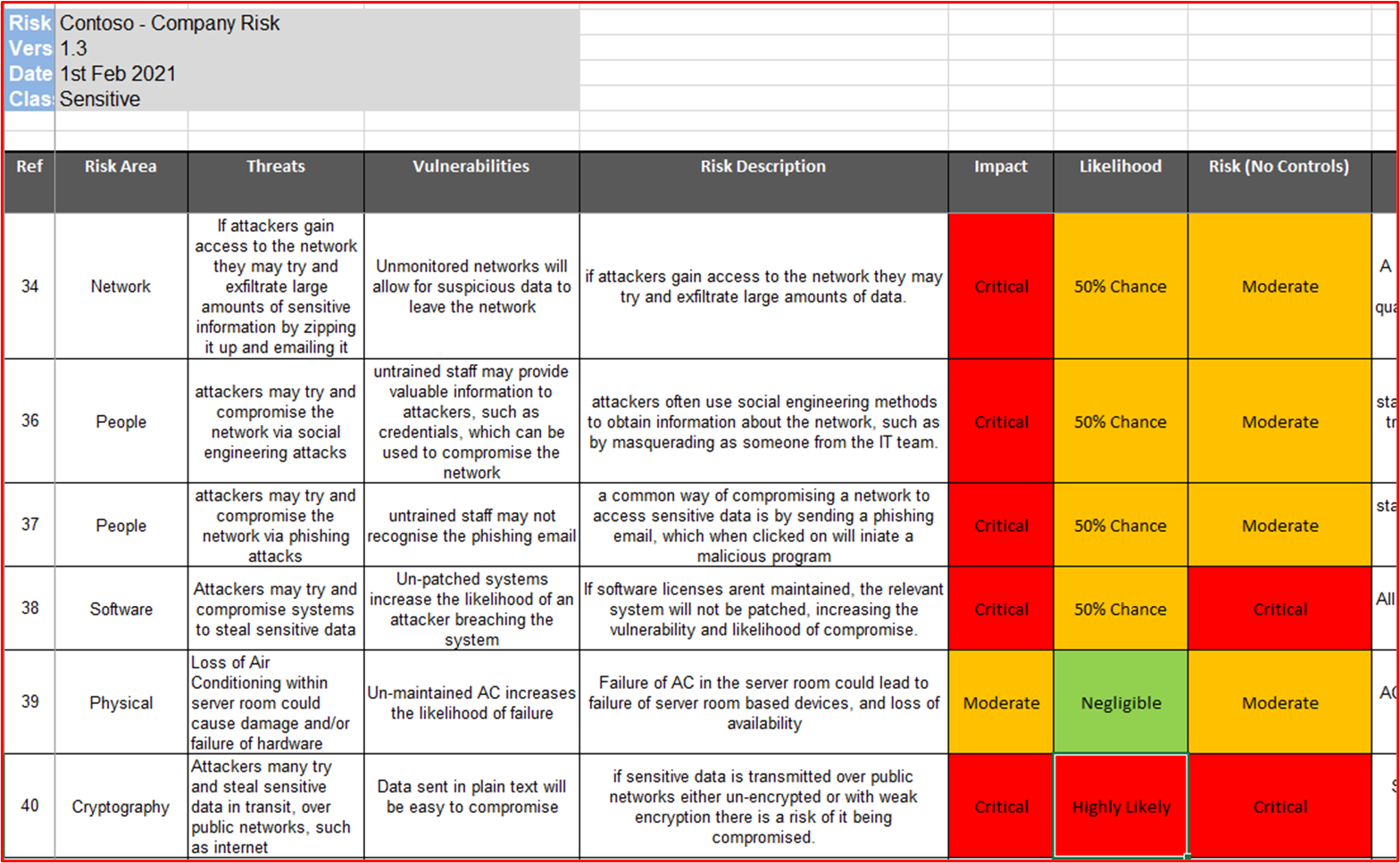

Control 34: Provide policies and procedures that support secure software development and deployment, including secure coding best practice guidance against common vulnerability classes such as, OWASP Top 10 or SANS Top 25 CWE.

Intent: Organizations need to do everything in their power to ensure software is securely developed and free from vulnerabilities. To achieve this, a robust secure software development lifecycle (SDLC) and secure coding best practices should be established to promote secure coding techniques and secure development throughout the whole software development process. The intent is to reduce the number and severity of vulnerabilities in the software.

Example Evidence Guidelines: Supply the documented SDLC and/or support documentation which demonstrates that a secure development life cycle is in use and that guidance is provided for all developers to promote secure coding best practice. Take a look at OWASP in SDLC and the OWASP Software Assurance Maturity Model (SAMM).

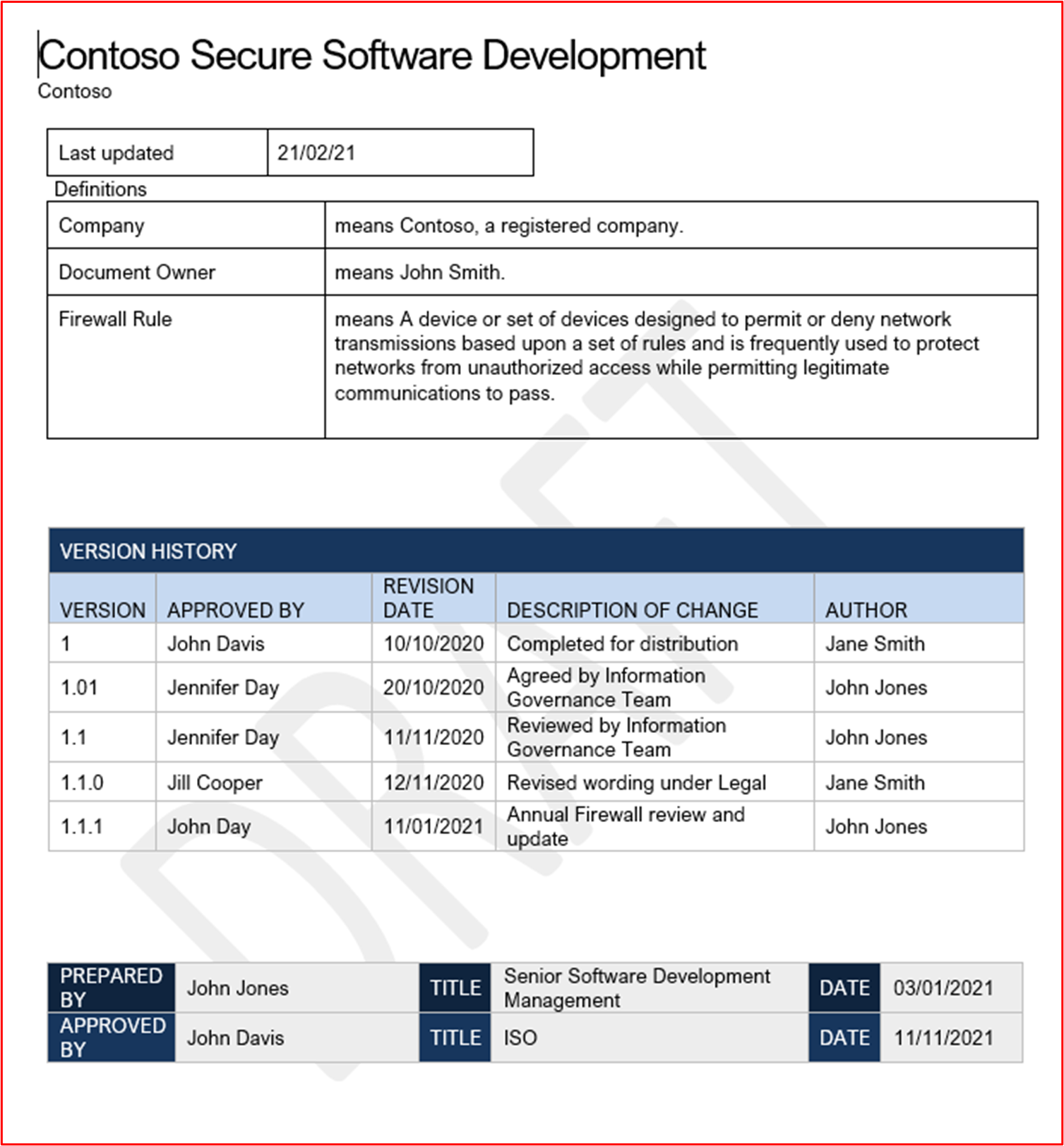

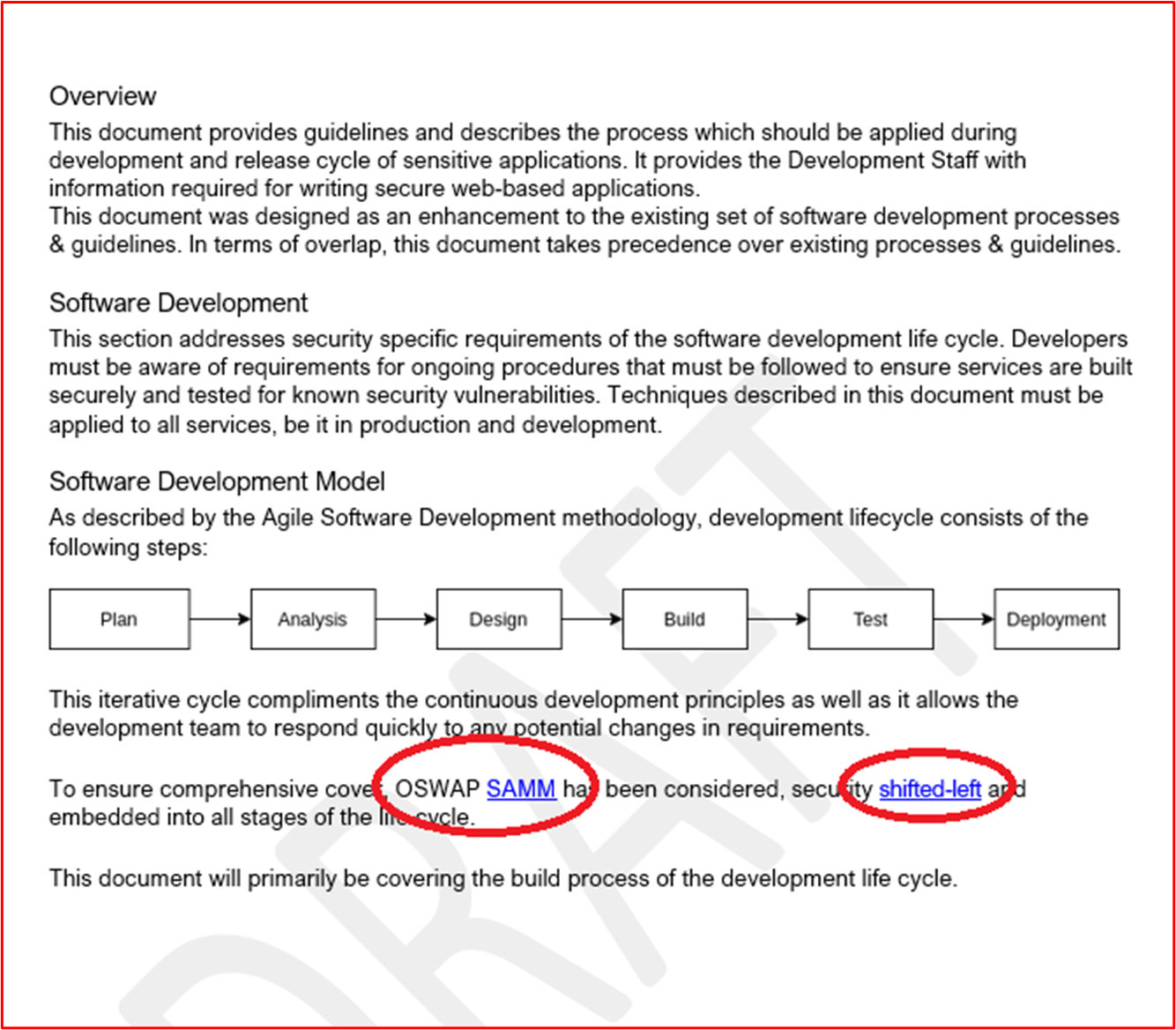

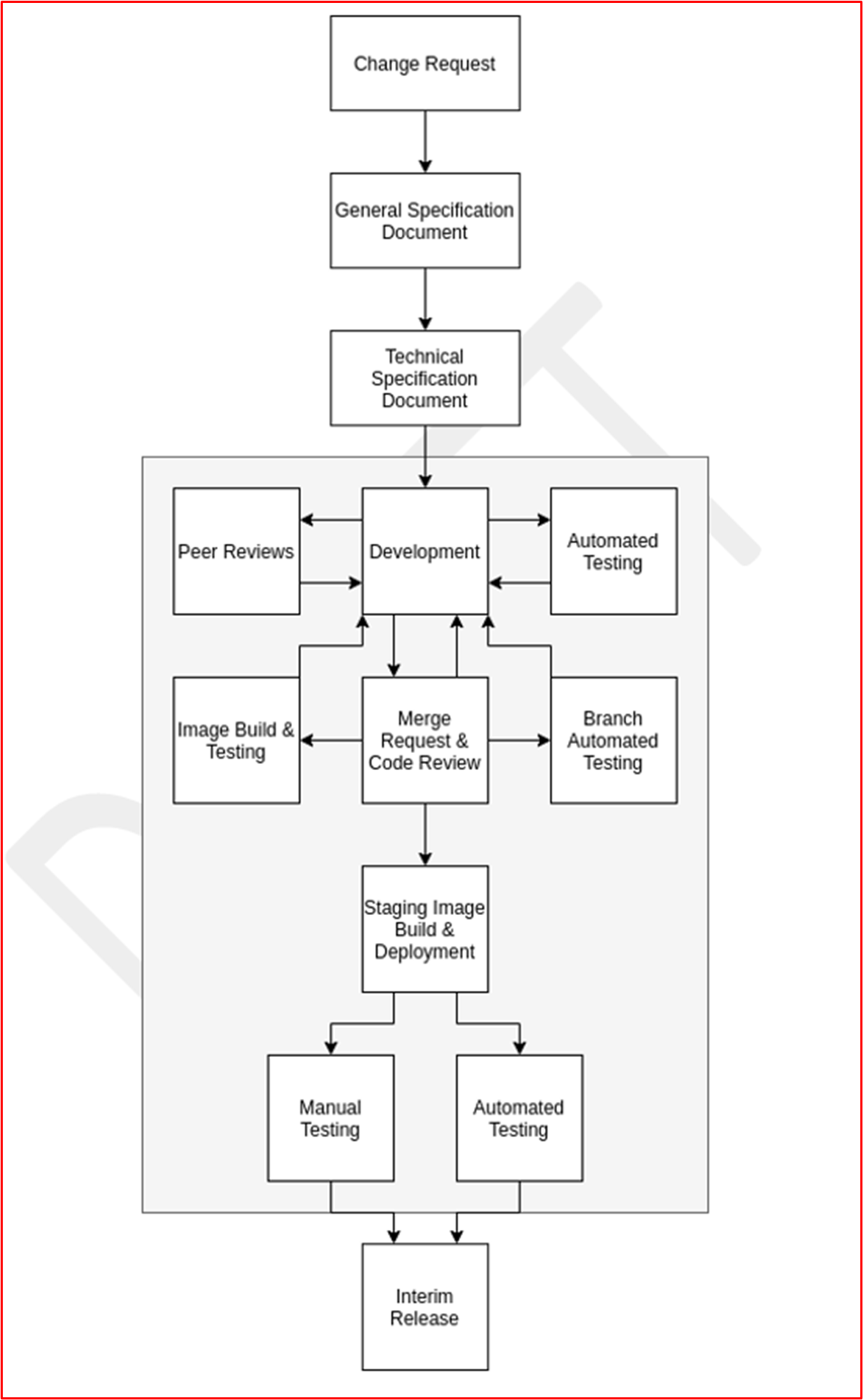

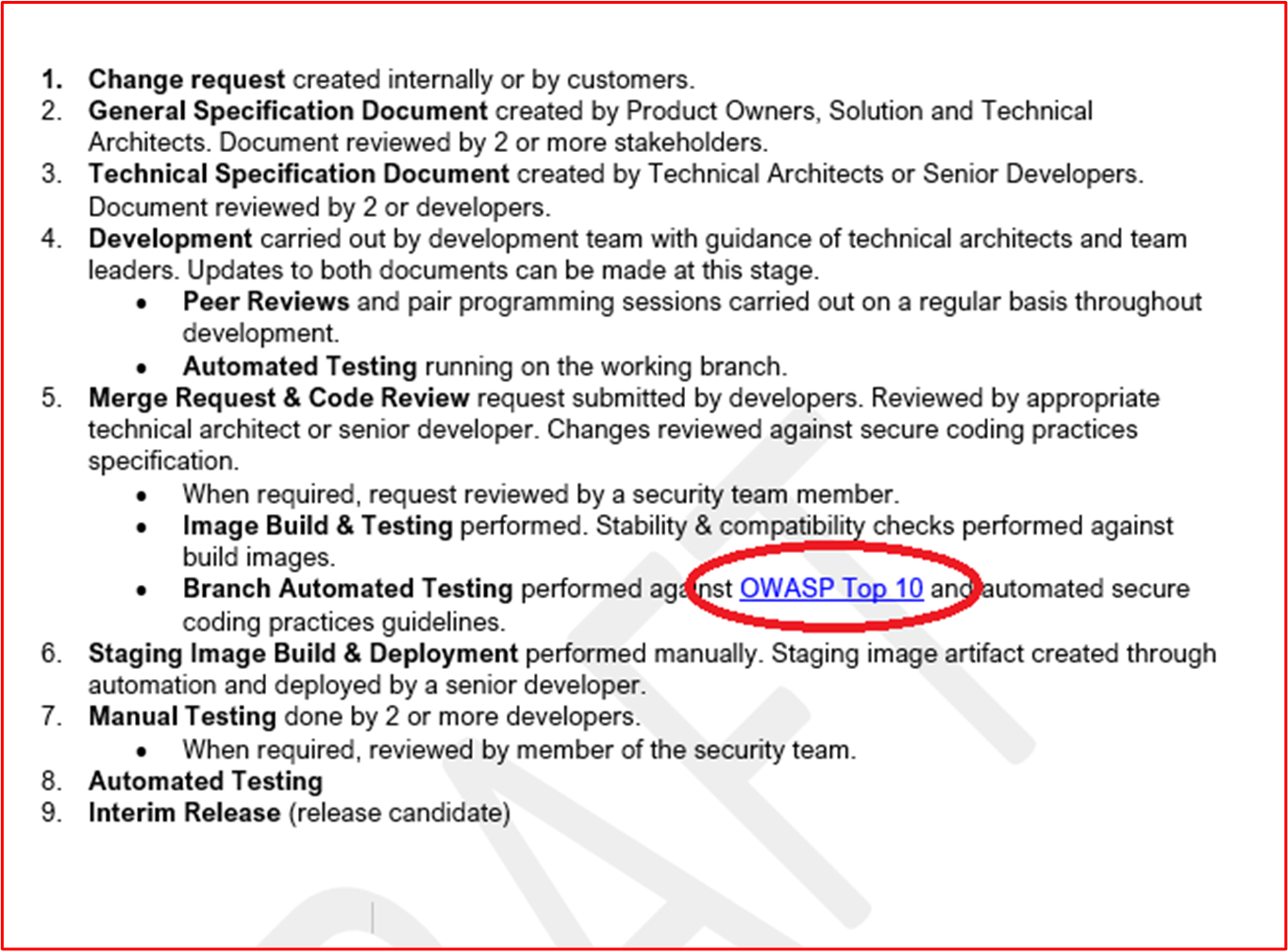

Example Evidence: The following is an extract from Contoso's Secure Software Development Procedure, which demonstrates secure development and coding practices.

Note: These screenshots show the secure software development document; the expectation is for ISVs to share the actual supporting documentation and not simply provide a screenshot.

Control 35: Provide demonstrable evidence that code changes undergo a review and authorization process by a second reviewer.

Intent: The intent of this control is for another developer to review the code to help identify any coding mistakes that could introduce a vulnerability into the software. Authorization should be established to ensure code reviews are carried out, testing is done, etc., prior to deployment. The authorization step can validate that the correct processes, which underpin the SDLC defined above, have been followed.

Example Evidence Guidelines: Provide evidence that code undergoes a peer review and must be authorized before it can be applied to the production environment. This evidence may be via an export of change tickets demonstrating that code reviews have been carried out and the changes authorized, or it could be through code review software such as Crucible (https://www.atlassian.com/software/crucible).

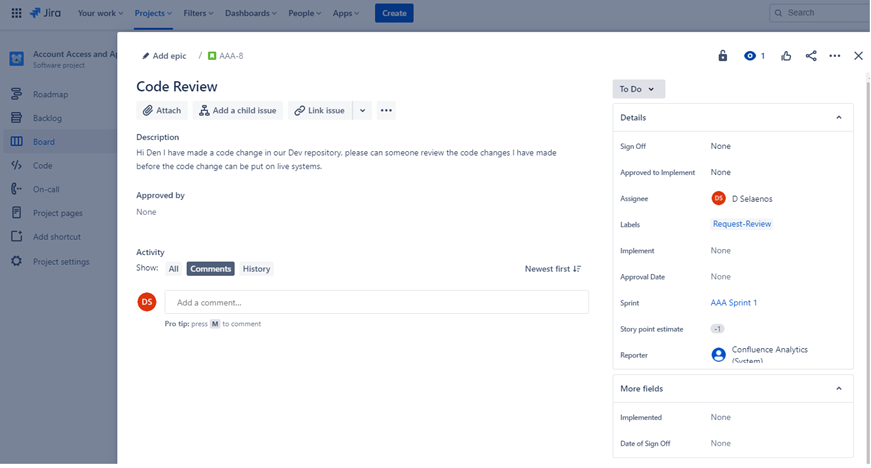

Example Evidence

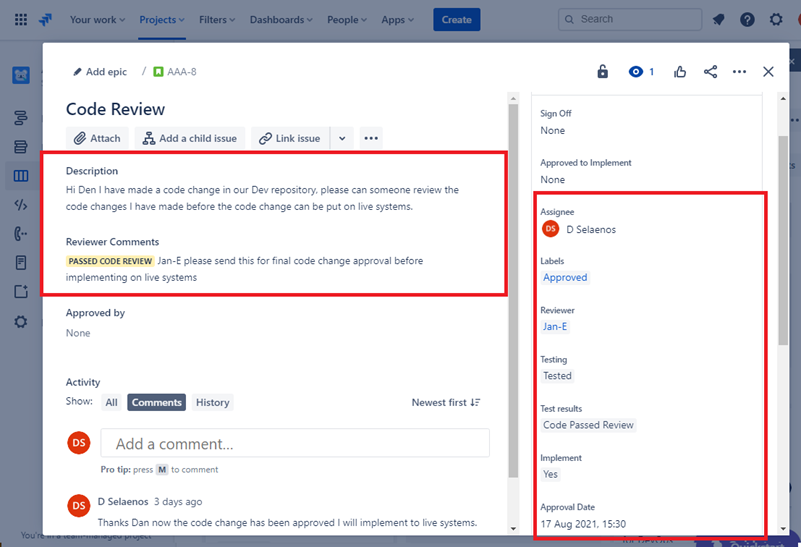

Below is a ticket that shows code changes undergo a review and authorization process by someone other than the original developer. It shows that a code review has been requested by the assignee and will be assigned to someone else for the code review.

The image below shows that the code review was assigned to someone other than the original developer as shown by the highlighted section on the right-hand side of the image below. On the left-hand side you can see that the code has been reviewed and given a 'PASSED CODE REVIEW' status by the code reviewer.

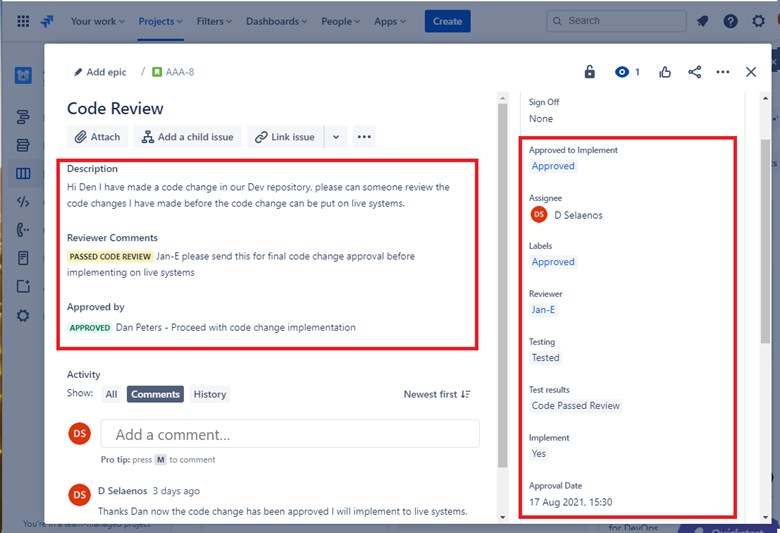

The ticket must now get approval by a manager before the changes can be put onto live production systems.

The image above shows that the reviewed code has been given approval to be implemented on the live production systems.

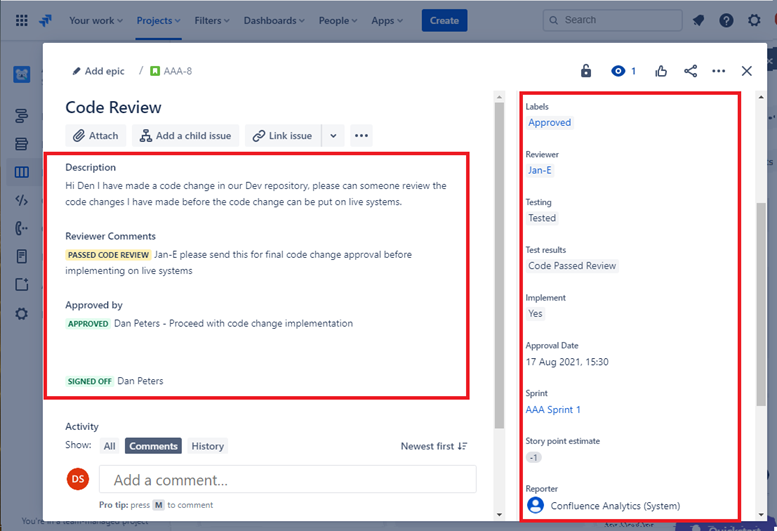

Once the code changes are complete, the job is signed off, as shown in the image above.

Note that throughout the process there are three people involved: the original developer of the code, the code reviewer, and a manager who gives approval and sign-off. To meet the criteria for this control, your tickets are expected to follow this process, with a minimum of three people involved in the change control process for your code reviews.
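The three-person requirement can be checked across an exported sample of tickets before submission. The following is a minimal sketch; the ticket fields (`developer`, `reviewer`, `approver`) and ticket IDs are hypothetical, and names are compared case-insensitively so that the same person entered with different capitalization is still caught.

```python
from typing import Dict, List

ROLES = ("developer", "reviewer", "approver")


def tickets_failing_sod(tickets: List[Dict[str, str]]) -> List[str]:
    """Return IDs of tickets where the developer, reviewer and approver aren't three different people."""
    failing = []
    for ticket in tickets:
        people = {ticket[role].strip().lower() for role in ROLES}
        if len(people) < len(ROLES):
            failing.append(ticket["id"])
    return failing


# Hypothetical ticket export.
tickets = [
    {"id": "DEV-101", "developer": "Alice", "reviewer": "Bob", "approver": "Carol"},
    {"id": "DEV-102", "developer": "Alice", "reviewer": "alice", "approver": "Carol"},
]
print(tickets_failing_sod(tickets))  # ['DEV-102']
```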

Control 36: Provide demonstrable evidence that developers undergo secure software development training annually.

Intent: Coding best practices and techniques exist for all programming languages to ensure code is securely developed. External training courses are designed to teach developers the different classes of software vulnerabilities and the coding techniques that can be used to avoid introducing these vulnerabilities into software. The intent of this control is to teach these techniques to all developers and, by repeating the training yearly, to ensure these techniques aren't forgotten and newer techniques are learned.

Example Evidence Guidelines: Provide evidence by way of certificates if training is carried out by an external training company, or by providing screenshots of training diaries or other artifacts that demonstrate developers have attended training. If training is carried out using internal resources, also provide evidence of the training material.
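To confirm the evidence covers every developer, completion dates from certificates or training records can be checked against a one-year window. The following is a minimal sketch that treats "annually" as 365 days before a chosen reporting date (an assumption; your policy may define the window differently), using hypothetical names and dates.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional


def overdue_developers(last_completed: Dict[str, Optional[date]], as_of: date,
                       max_age_days: int = 365) -> List[str]:
    """Return developers with no training record, or whose last completion is older than max_age_days."""
    cutoff = as_of - timedelta(days=max_age_days)
    return sorted(dev for dev, completed in last_completed.items()
                  if completed is None or completed < cutoff)


# Hypothetical completion dates taken from training certificates.
records = {"alice": date(2021, 3, 1), "bob": date(2019, 11, 20), "carol": None}
print(overdue_developers(records, as_of=date(2021, 6, 30)))  # ['bob', 'carol']
```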

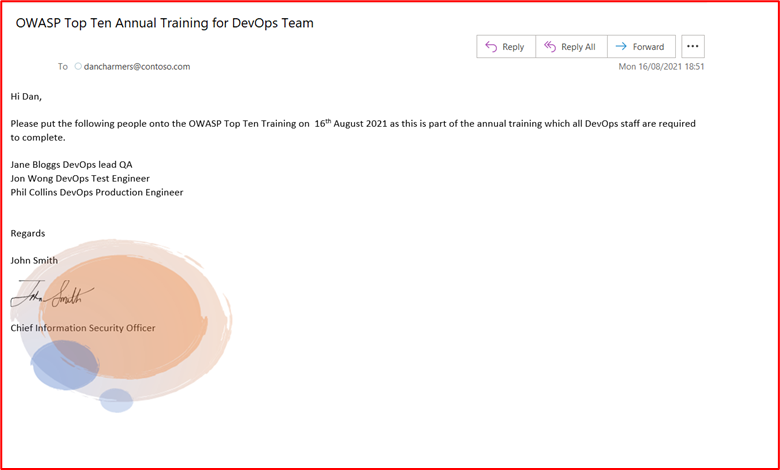

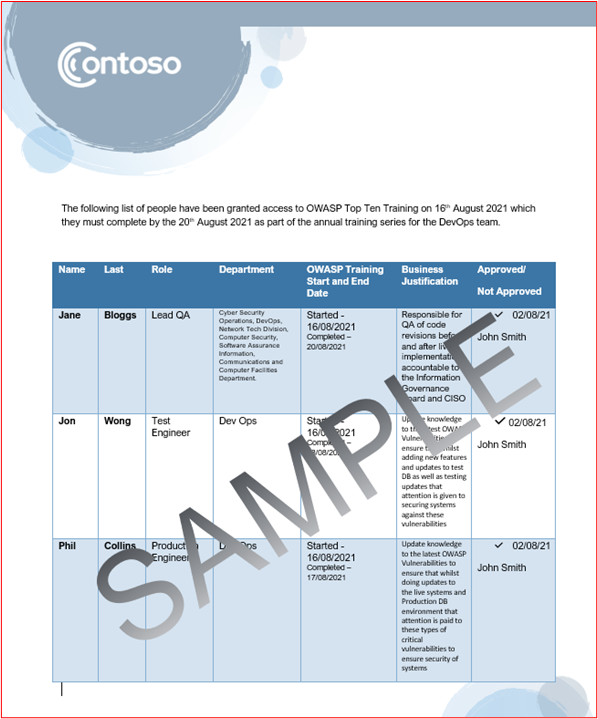

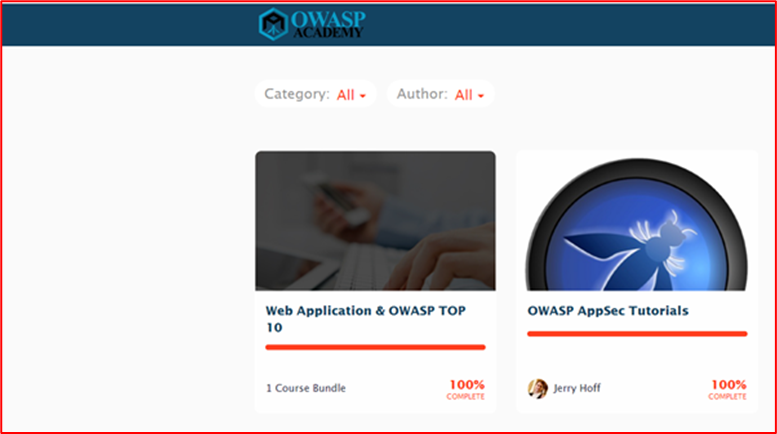

Example Evidence: Below is the email requesting that staff in the DevOps team be enrolled in the annual OWASP Top Ten training.

The below shows that training has been requested with business justification and approval. This is then followed by screenshots taken from the training and a completion record showing that the person has finished the annual training.

Control 37: Provide demonstrable evidence that code repositories are secured with multi-factor authentication (MFA).

Intent: If an activity group can access and modify a software code base, they could introduce vulnerabilities, backdoors, or malicious code into the code base and therefore into the application. There have already been several instances of this, probably the most publicized being the NotPetya ransomware attack, which reportedly spread through a compromised update to Ukrainian tax software called M.E.Doc (see What is NotPetya).

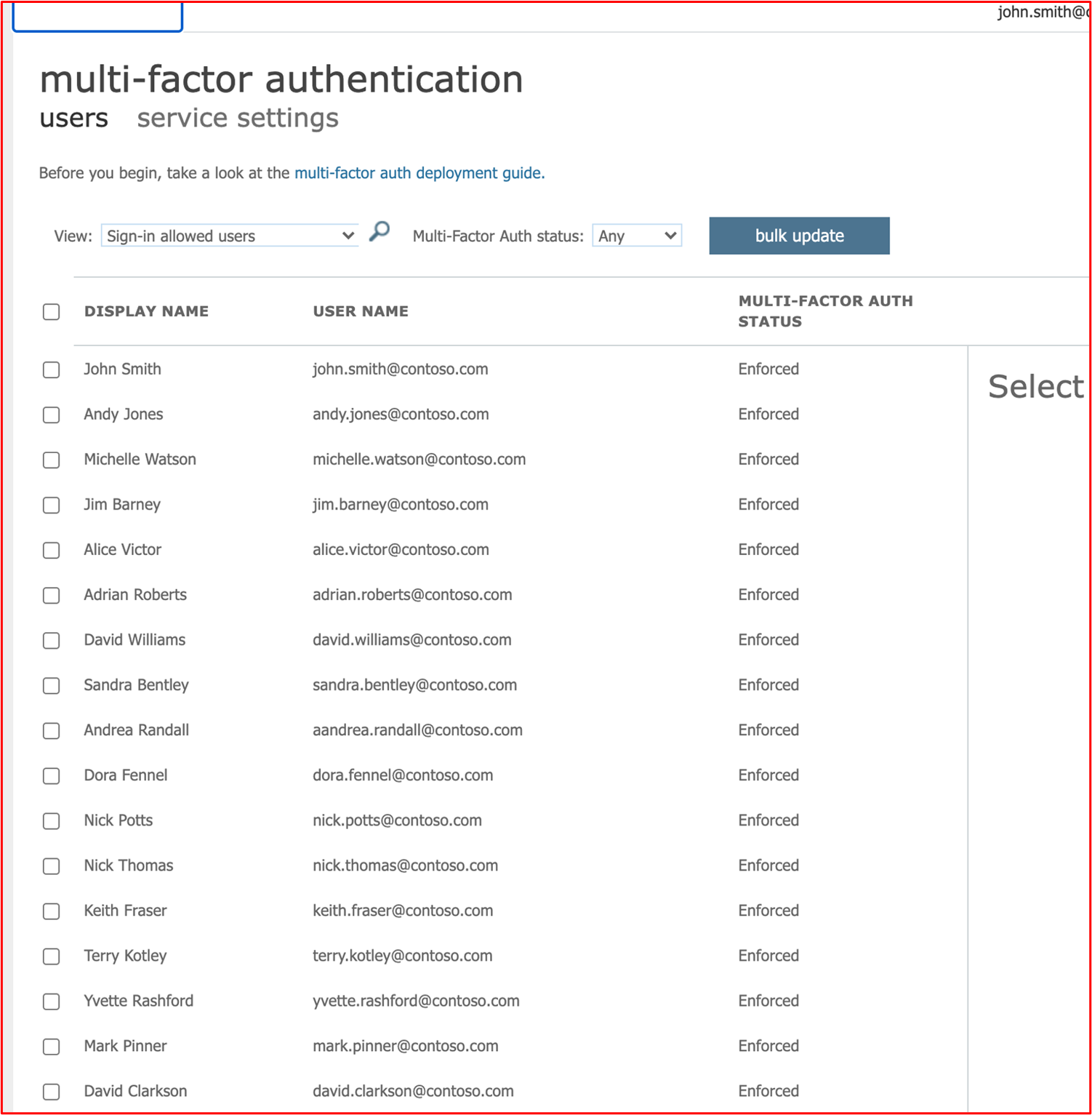

Example Evidence Guidelines: Provide evidence by way of screenshots from the code repository that ALL users have MFA enabled.
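Alongside the screenshots, a user export can be scanned for accounts without MFA. The following is a minimal sketch, assuming an export shaped like GitLab's Users API response as seen by an administrator, where each user object carries a `two_factor_enabled` field; confirm the field name against your GitLab version. The usernames are hypothetical.

```python
import json
from typing import Dict, List

# Hypothetical export in the shape of GitLab's Users API response for an administrator.
users_json = """[
  {"username": "alice", "two_factor_enabled": true},
  {"username": "bob", "two_factor_enabled": false},
  {"username": "carol", "two_factor_enabled": true}
]"""


def users_without_mfa(users: List[Dict]) -> List[str]:
    """Return usernames that don't have two-factor authentication enabled."""
    return [user["username"] for user in users if not user.get("two_factor_enabled", False)]


print(users_without_mfa(json.loads(users_json)))  # ['bob']
```

A missing field is treated as "not enabled", so incomplete exports err on the side of flagging a user.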

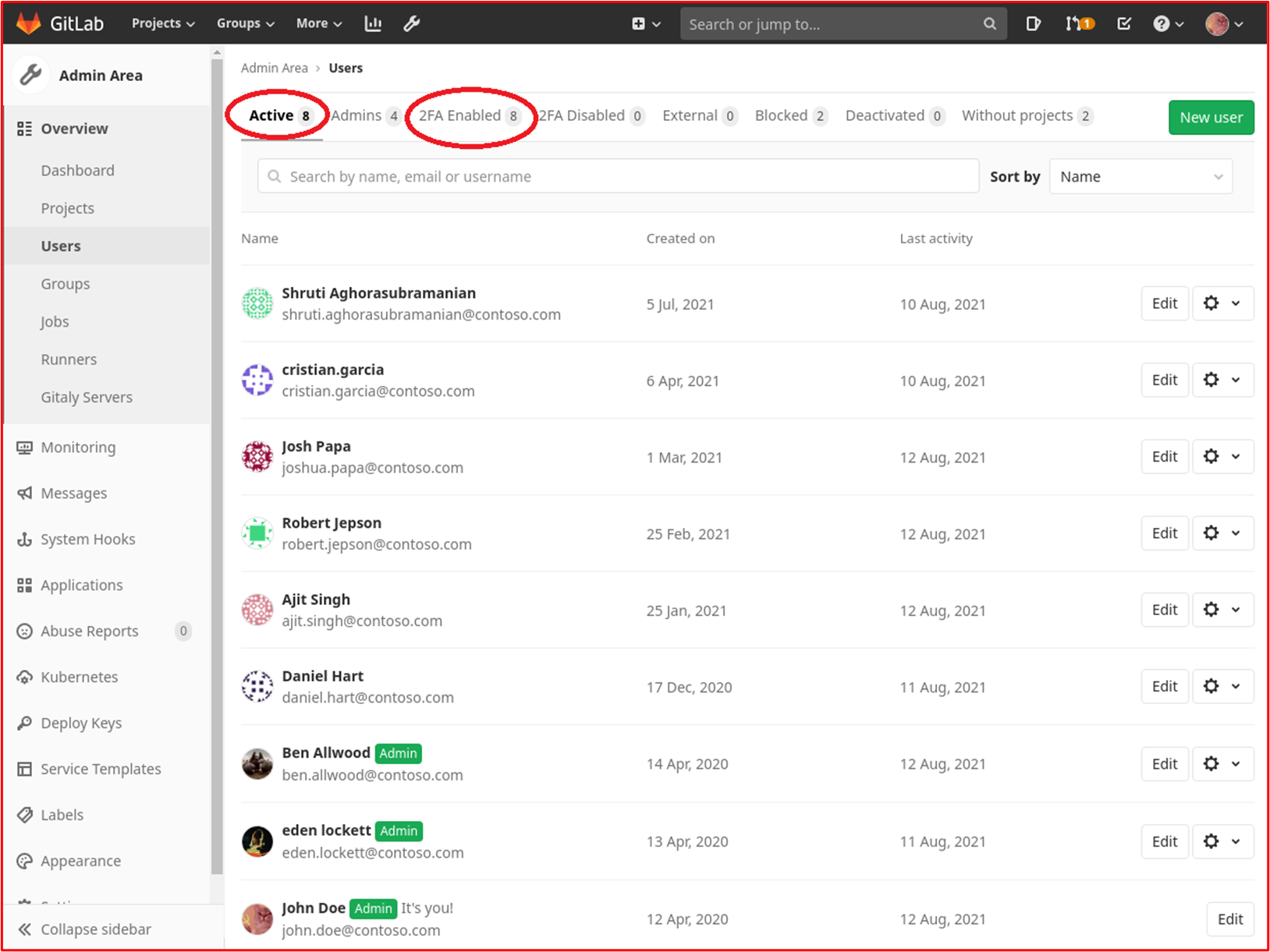

Example Evidence: The following screenshot shows that MFA is enabled for all 8 GitLab users.

Control 38: Provide demonstrable evidence that access controls are in place to secure code repositories.

Intent: Following on from the previous control, access controls should be implemented to limit access to only the individual users working on particular projects. By limiting access, you limit the risk of unauthorized changes being carried out and insecure code changes being introduced. A least-privilege approach should be taken to protect the code repository.

Example Evidence Guidelines: Provide evidence by way of screenshots from the code repository that access is restricted to individuals needed, including different privileges.
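A least-privilege review of a repository can compare current project members against an approved access list. The following is a minimal sketch using GitLab's project role names (Guest through Owner) ranked by their numeric access levels; the approved list, the member list, and the usernames are hypothetical.

```python
from typing import Dict, List

# GitLab project roles used in this example, ranked by access level (lowest to highest).
ROLE_LEVEL = {"Guest": 10, "Reporter": 20, "Developer": 30, "Maintainer": 40, "Owner": 50}


def access_exceptions(members: Dict[str, str], approved: Dict[str, str]) -> List[str]:
    """Flag members who aren't on the approved list or hold a higher role than approved."""
    exceptions = []
    for user, role in members.items():
        allowed = approved.get(user)
        if allowed is None:
            exceptions.append(f"{user}: not approved for this project")
        elif ROLE_LEVEL[role] > ROLE_LEVEL[allowed]:
            exceptions.append(f"{user}: has {role}, approved for {allowed}")
    return exceptions


# Hypothetical members of a project and the access approved for it.
members = {"alice": "Maintainer", "bob": "Developer", "dave": "Developer"}
approved = {"alice": "Maintainer", "bob": "Reporter"}
for line in access_exceptions(members, approved):
    print(line)
# bob: has Developer, approved for Reporter
# dave: not approved for this project
```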

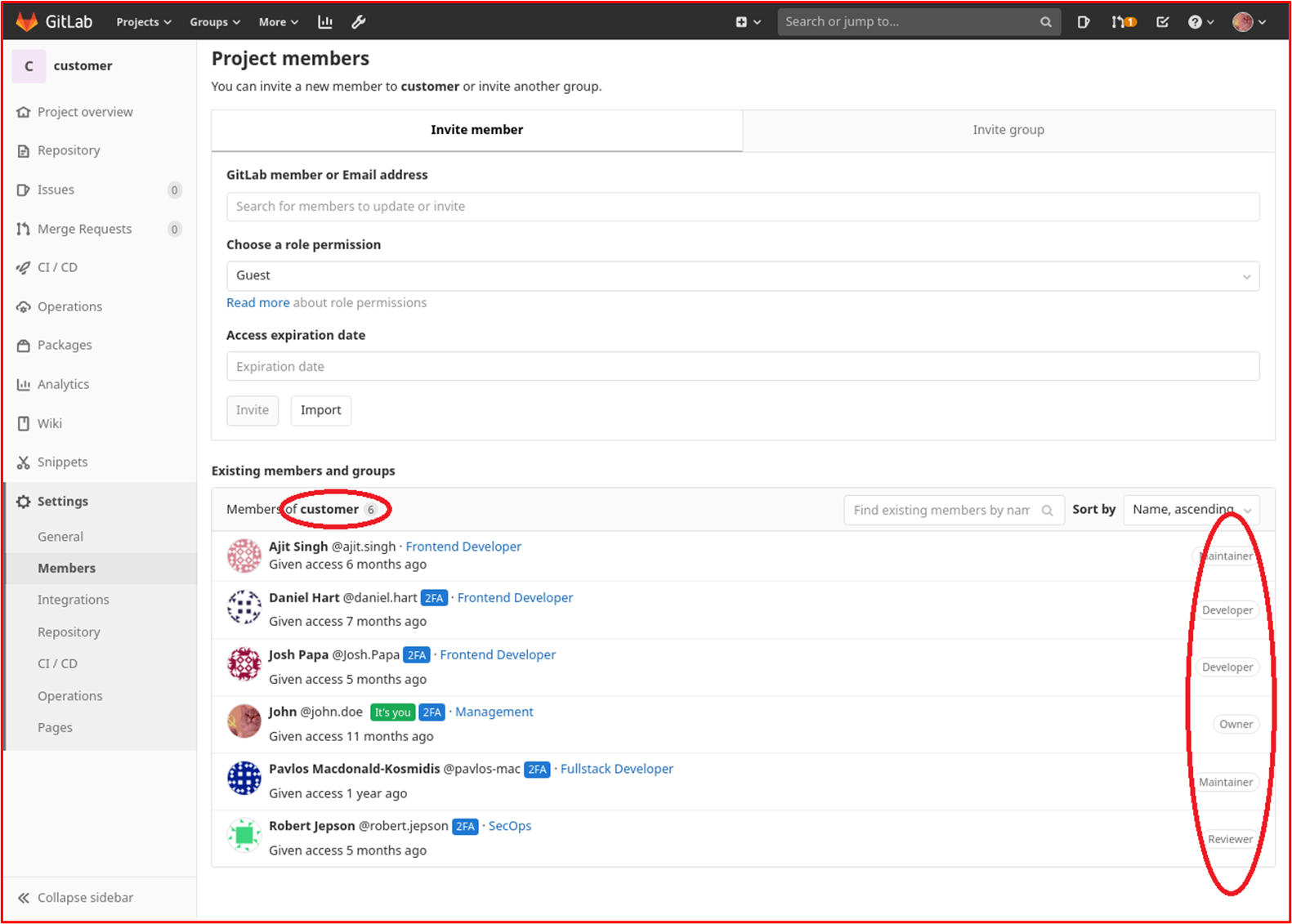

Example Evidence: The following screenshot shows members of the "Customers" project in GitLab, which is the Contoso "Customer Portal". As can be seen in the screenshot, users have different "Roles" to limit access to the project.

Account Management

Secure account management practices are important as user accounts form the basis of allowing access to information systems, system environments and data. User accounts need to be properly secured as a compromise of the user's credentials can provide not only a foothold into the environment and access to sensitive data but may also provide administrative control over the entire environment or key systems if the user's credentials have administrative privileges.

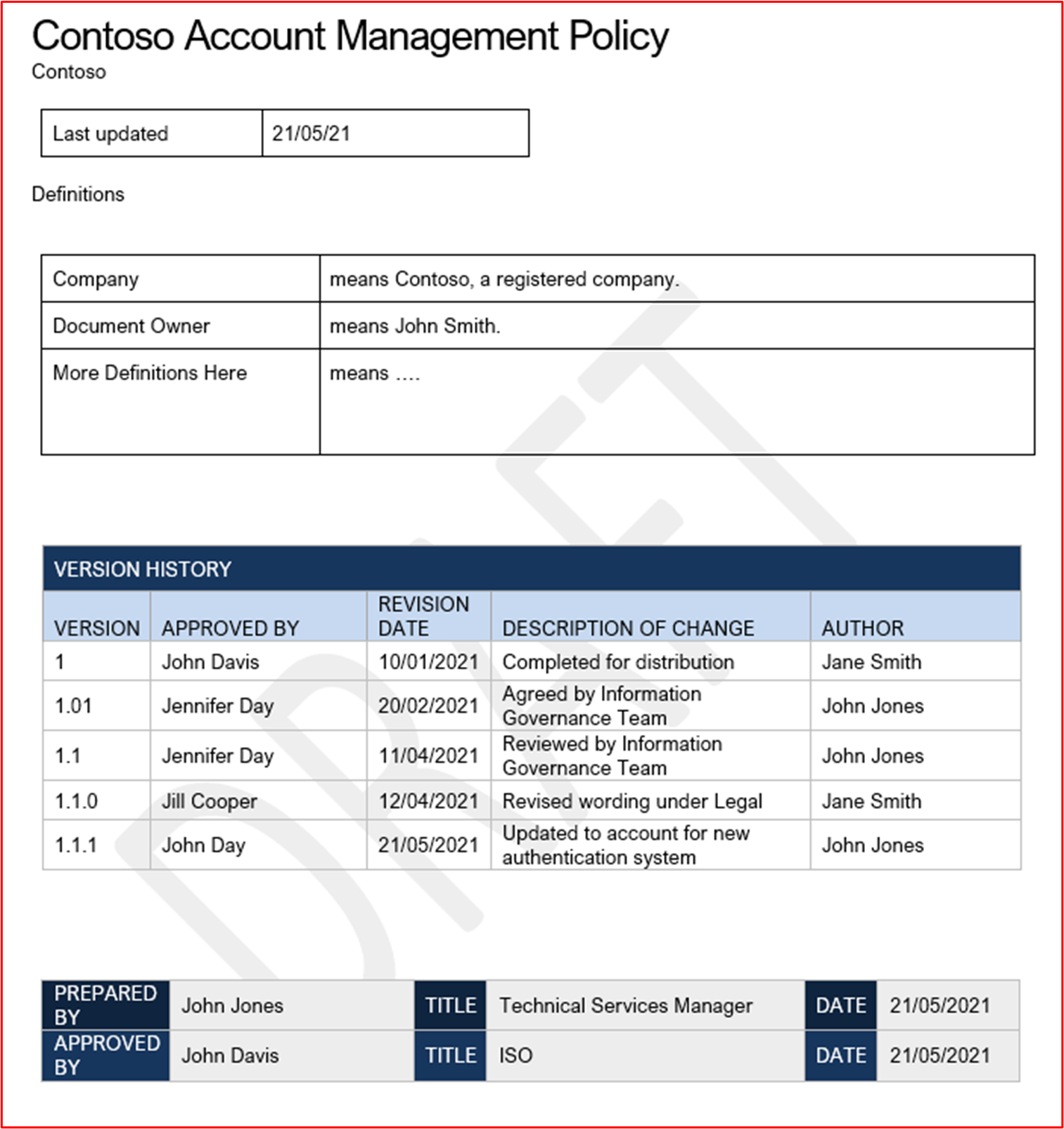

Control 39: Provide policy documentation that governs account management practices and procedures.

Intent: User accounts continue to be targeted by activity groups and are often the source of a data compromise. By configuring overly permissive accounts, organizations not only increase the pool of 'privileged' accounts that an activity group can use to perform a data breach, but also increase the risk of successful exploitation of vulnerabilities that require specific privileges to succeed.

BeyondTrust produces a "Microsoft Vulnerabilities Report" each year, which analyzes Microsoft security vulnerabilities from the previous year and details the percentage of these vulnerabilities that rely on the user account having admin rights. According to a recent blog post, "New Microsoft Vulnerabilities Report Reveals a 48% YoY Increase in Vulnerabilities & How They Could Be Mitigated with Least Privilege", 90% of Critical vulnerabilities in Internet Explorer, 85% of Critical vulnerabilities in Microsoft Edge and 100% of Critical vulnerabilities in Microsoft Outlook would have been mitigated by removing admin rights. To support secure account management, organizations need to ensure that supporting policies and procedures promoting security best practices are in place and followed to mitigate these threats.

Example Evidence Guidelines: Supply the policy and procedure documents that cover your account management practices. At a minimum, the topics covered should align to the controls within the Microsoft 365 Certification.

Example Evidence: The following screenshot shows an example Account Management Policy for Contoso.

Note: This screenshot shows a policy/process document; the expectation is for ISVs to share the actual supporting policy/procedure documentation and not simply provide a screenshot.

Control 40: Provide demonstrable evidence that default credentials are either disabled, removed, or changed across the sampled system components.

Intent: Although this is becoming less common, there are still instances where activity groups can leverage default, well-documented user credentials to compromise production system components. A popular example of this is Dell iDRAC (Integrated Dell Remote Access Controller). This system can be used to remotely manage a Dell server, which means an activity group could use it to gain control over the server's operating system. The default credential of root::calvin is documented and can often be used by activity groups to gain access to systems used by organizations. The intent of this control is to ensure these default credentials are either disabled, removed, or changed.

Example Evidence Guidelines: There are various ways in which evidence can be collected to support this control. Screenshots of configured users across all system components can help; for example, screenshots of the Linux /etc/shadow and /etc/passwd files will help to demonstrate whether accounts have been disabled. Note that the /etc/shadow file is needed to demonstrate that accounts are truly disabled, by showing that the password hash starts with an invalid character such as '!', which indicates that the password is unusable. The advice would be to display only the first few characters of the password hash and redact the rest. Another option is a screen-sharing session in which the assessor manually tries the default credentials; for example, in the Dell iDRAC case discussed above, the assessor would need to try to authenticate against all Dell iDRAC interfaces using the default credentials.
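To illustrate the /etc/shadow check and the redaction advice, here is a minimal Python sketch. It assumes you have copied the file's contents into a string (reading /etc/shadow itself requires root). It treats a password field that begins with '!' or '*' as unusable and keeps only the first three characters of each field. The function name and the three-character default are illustrative choices, not part of the certification requirements.

```python
# Minimal sketch: summarize whether each /etc/shadow entry has a usable
# password, showing only a short prefix of the password field as evidence.
# Assumes the standard colon-separated shadow(5) layout (name:password:...).


def summarize_shadow(shadow_text: str, visible_chars: int = 3) -> list[tuple[str, str, str]]:
    """Return (account, status, redacted password field) for each entry."""
    results = []
    for line in shadow_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, pw_field = line.split(":")[:2]
        if pw_field.startswith(("!", "*")):
            status = "password unusable"  # locked, or no valid hash
        elif pw_field == "":
            status = "empty password field (review)"
        else:
            status = "password usable"
        # Keep only the first few characters so the full hash is never shared.
        redacted = pw_field[:visible_chars]
        if len(pw_field) > visible_chars:
            redacted += "[REDACTED]"
        results.append((name, status, redacted))
    return results


if __name__ == "__main__":
    sample = (
        "root:!:19000:0:99999:7:::\n"
        "daemon:*:19000:0:99999:7:::\n"
        "alice:$6$saltsalt$abcdefghijk:19000:0:99999:7:::\n"
    )
    for row in summarize_shadow(sample):
        print(row)
```

A table like this can sit alongside the screenshot to show at a glance which default accounts are locked. It does not replace the screenshot itself.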

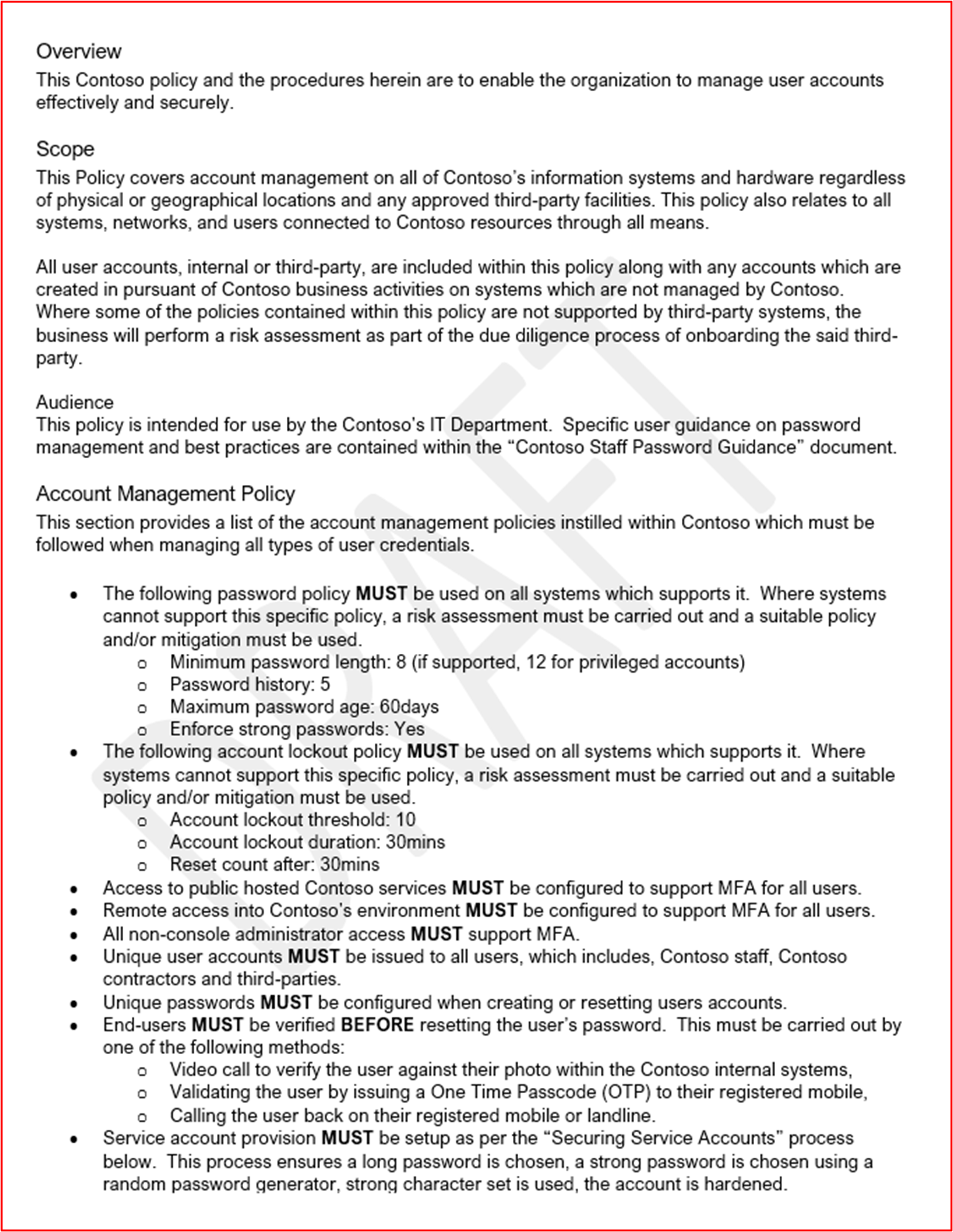

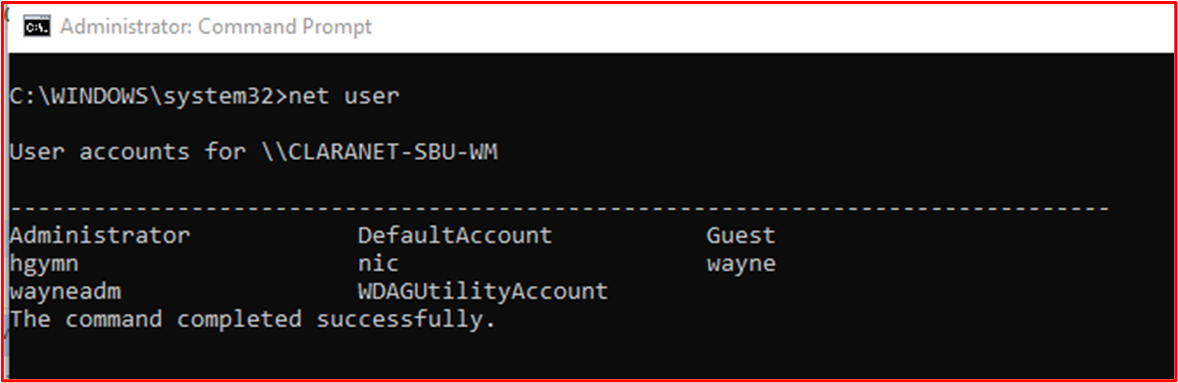

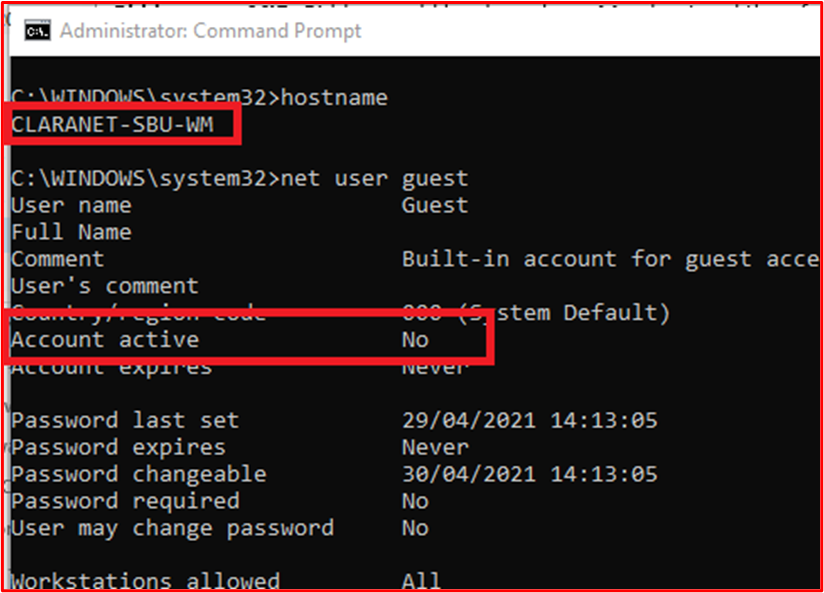

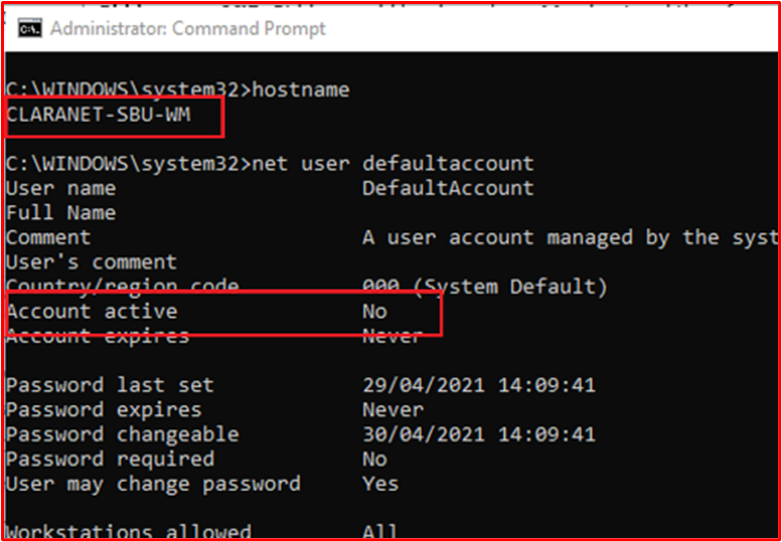

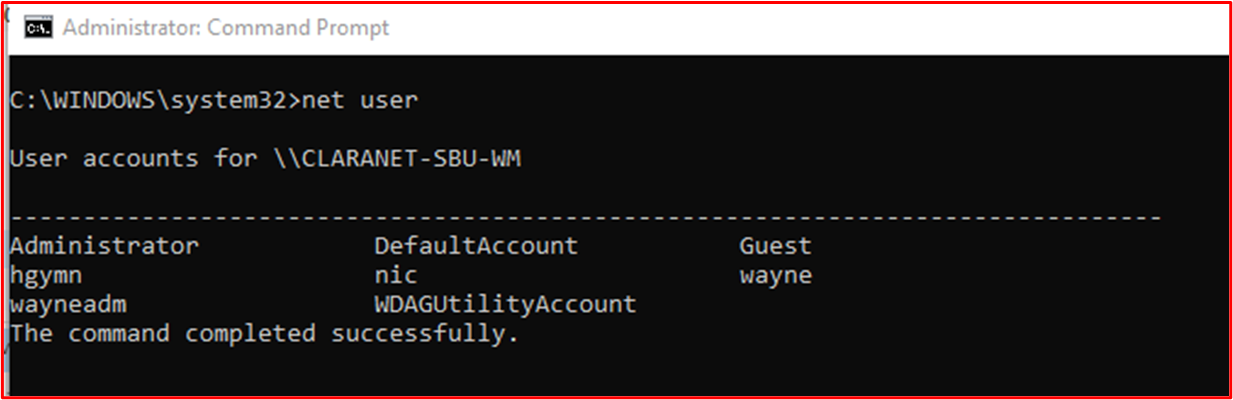

Example Evidence: The following screenshot shows user accounts configured for the in-scope system component "CLARANET-SBU-WM". This shows several default accounts (Administrator, DefaultAccount and Guest); however, the following screenshots show that these accounts are disabled.

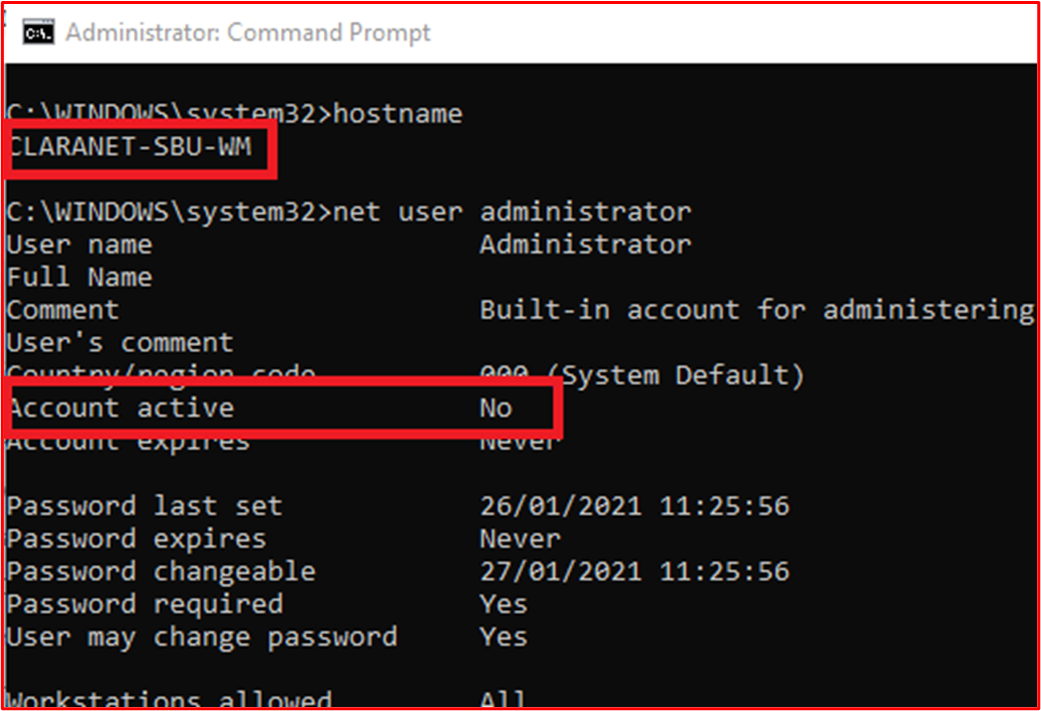

This next screenshot shows the Administrator account is disabled on the in-scope system component "CLARANET-SBU-WM".

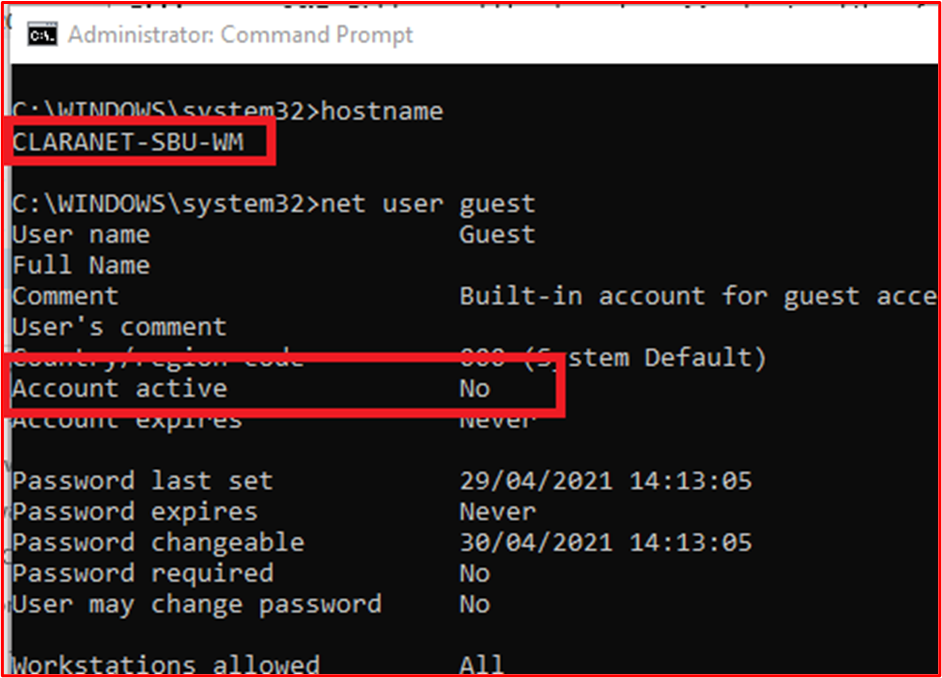

This next screenshot shows the Guest account is disabled on the in-scope system component "CLARANET-SBU-WM".

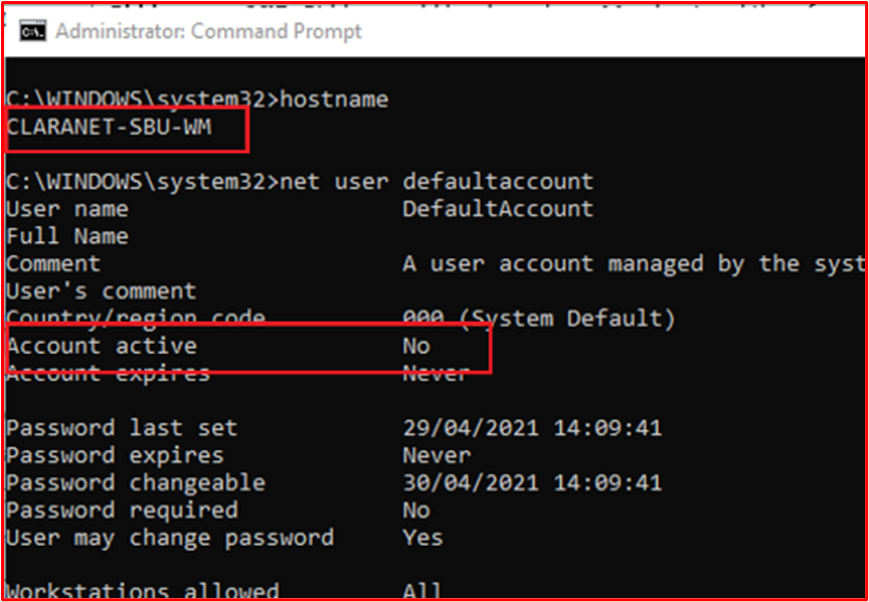

This next screenshot shows that the DefaultAccount is disabled on the in-scope system component "CLARANET-SBU-WM".

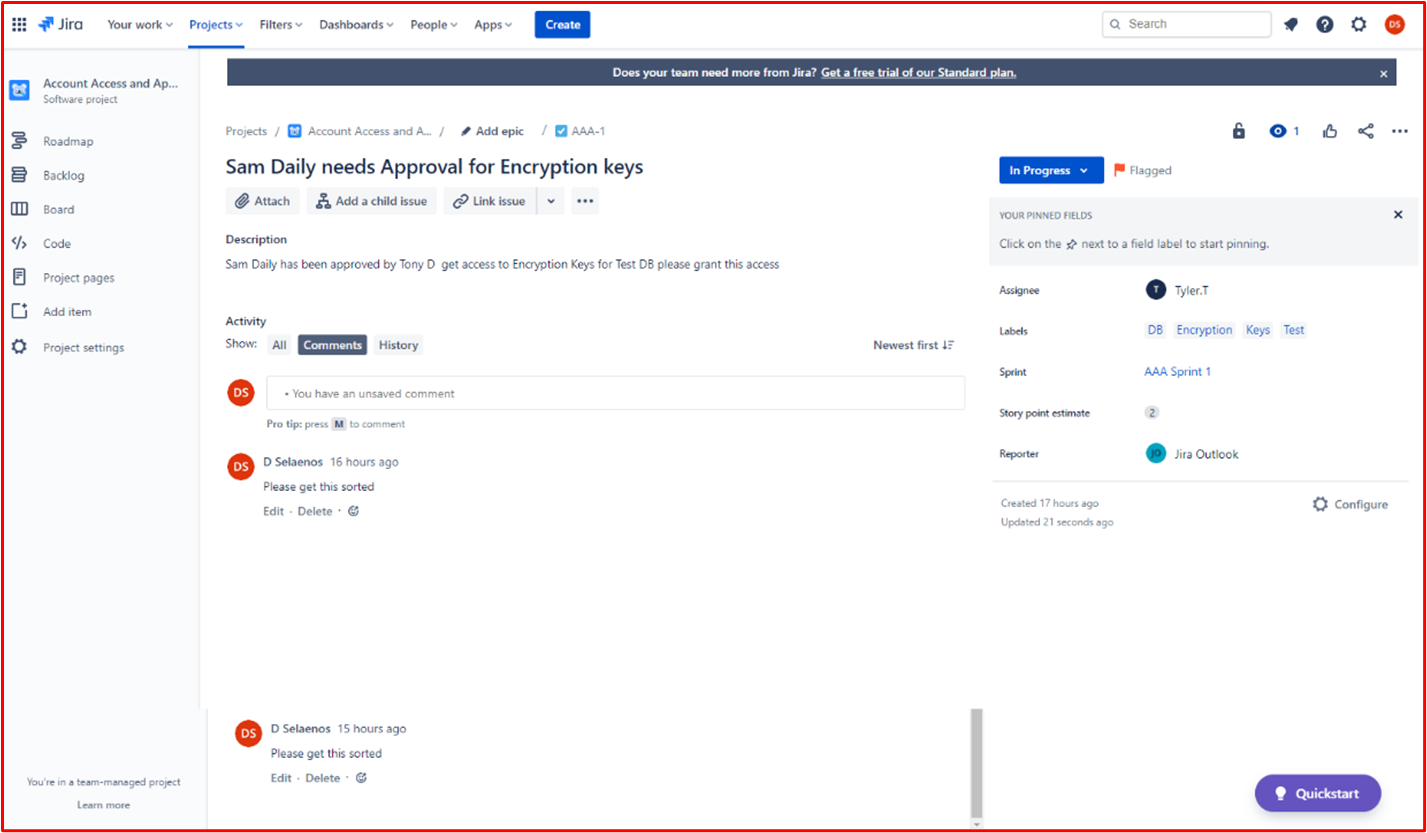

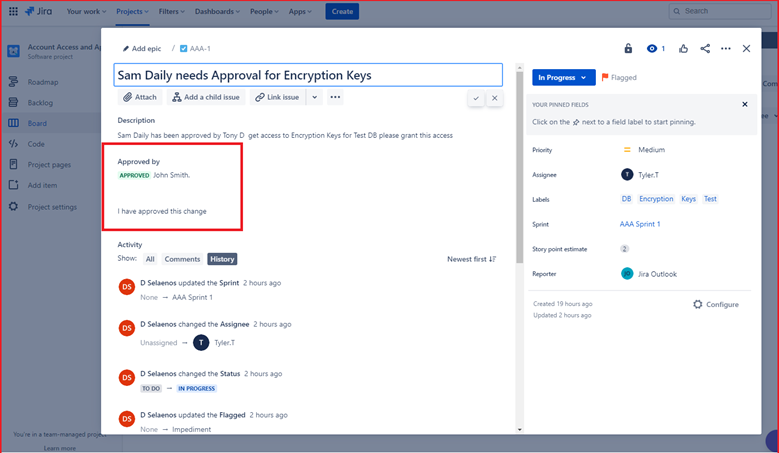

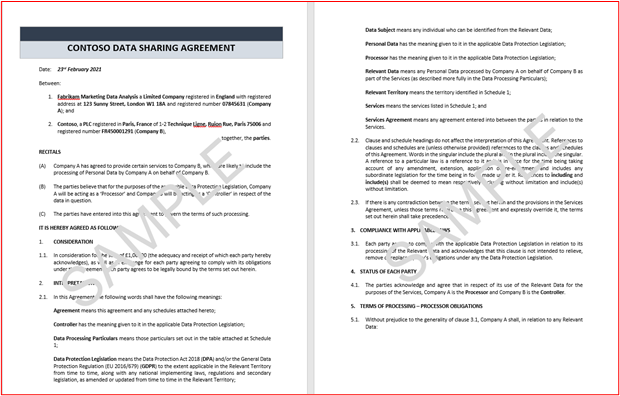

Control 41: Provide demonstrable evidence that account creation, modification and deletion goes through an established approval process.

Intent: The intent is to have an established process to ensure that all account management activities are approved. This ensures that account privileges adhere to the principle of least privilege and that account management activities can be properly reviewed and tracked.

Example Evidence Guidelines: Evidence would typically be in the form of change request tickets, ITSM (IT Service Management) requests or paperwork showing requests for accounts to be created, modified, or deleted have gone through an approval process.

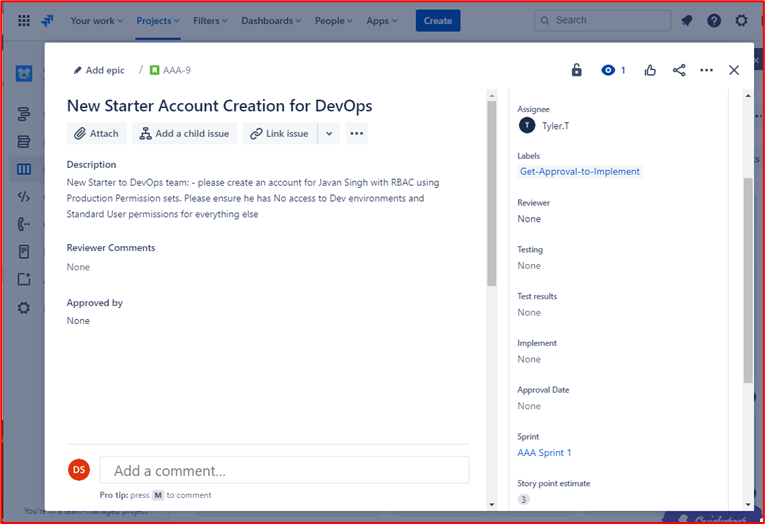

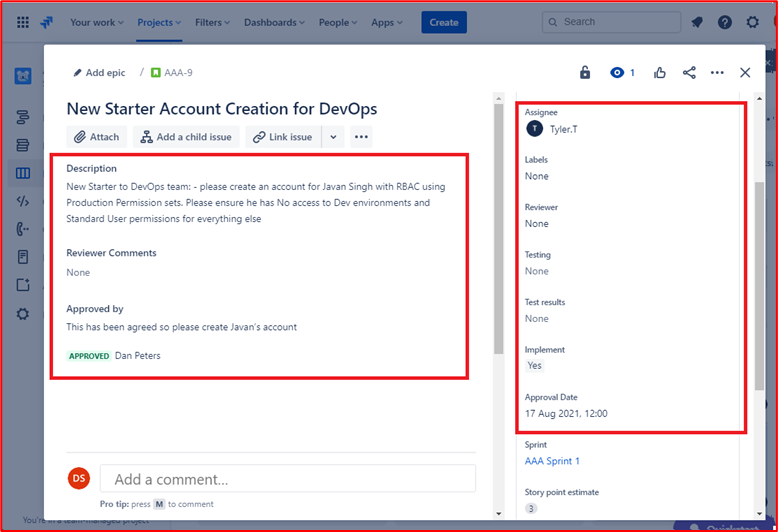

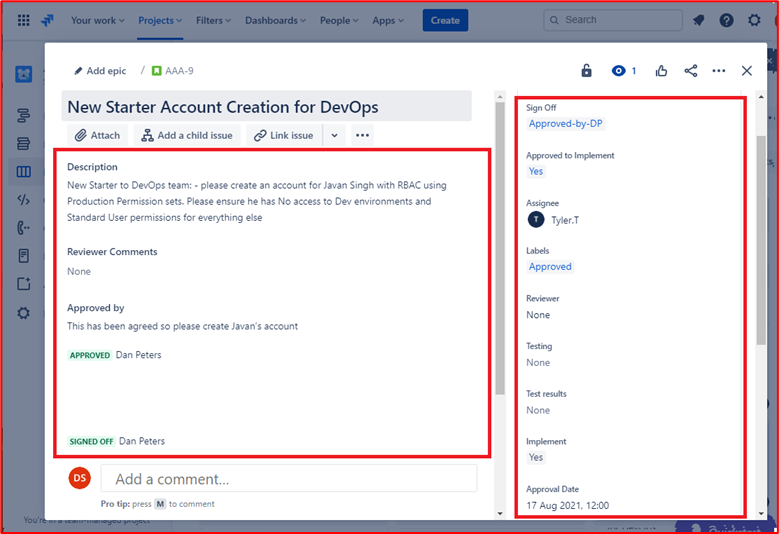

Example Evidence: The images below show account creation for a new starter to the DevOps team. The new starter requires role-based access control settings based on production environment permissions, with no access to the dev environment and standard non-privileged access to everything else.

The account creation has gone through the approval process and, once the account was created, through the sign-off process, after which the ticket was closed.

Control 42: Provide demonstrable evidence that a process is in place to either disable or delete accounts not used within 3 months.

Intent: Inactive accounts can become compromised in two main ways. They may be targeted in brute-force attacks, which can go unnoticed because the user isn't trying to sign in to the account. Alternatively, they may be exposed through a password database breach, where a user's reused password is available within a username/password dump on the Internet. Unused accounts should be disabled or removed to reduce the attack surface available to an activity group for account compromise. Such accounts may arise from a leavers process not being carried out properly, a staff member going on long-term sick leave, or a staff member going on maternity/paternity leave. By implementing a quarterly process to identify these accounts, organizations can minimize the attack surface.

Example Evidence Guidelines: Evidence should be two-fold. Firstly, a screenshot or file export showing the "last sign in" of all user accounts within the in-scope environment. This may include local accounts as well as accounts within a centralized directory service, such as Microsoft Entra ID. This will demonstrate that no accounts that have been inactive for more than 3 months remain enabled. Secondly, evidence of the quarterly review process. This may be documentary evidence of the task being completed within ADO (Azure DevOps) or JIRA tickets, or paper records that have been signed off.
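The quarterly check can be sketched in Python, assuming the last sign-in data has been exported to CSV. The column names (user, enabled, last_sign_in) are hypothetical, so map them to whatever your directory export actually produces. The sketch uses 90 days as an approximation of "3 months".

```python
# Minimal sketch: list enabled accounts with no sign-in within 90 days.
# Assumes a CSV export with hypothetical columns: user, enabled, last_sign_in
# (ISO 8601 date/time, blank if the account has never signed in).
import csv
import io
from datetime import datetime, timedelta, timezone

INACTIVITY_LIMIT = timedelta(days=90)  # approximation of "3 months"


def stale_enabled_accounts(csv_text: str, now: datetime) -> list[str]:
    if now.tzinfo is None:
        now = now.replace(tzinfo=timezone.utc)
    stale = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["enabled"].strip().lower() != "true":
            continue  # already disabled, nothing to action
        last = row["last_sign_in"].strip()
        if not last:
            stale.append(row["user"])  # never signed in: needs review
            continue
        last_dt = datetime.fromisoformat(last)
        if last_dt.tzinfo is None:
            last_dt = last_dt.replace(tzinfo=timezone.utc)  # assume UTC
        if now - last_dt > INACTIVITY_LIMIT:
            stale.append(row["user"])
    return stale


if __name__ == "__main__":
    export = (
        "user,enabled,last_sign_in\n"
        "alice,true,2024-05-20\n"
        "bob,true,2023-12-01\n"
        "carol,false,2023-01-01\n"
    )
    print(stale_enabled_accounts(export, datetime(2024, 6, 1, tzinfo=timezone.utc)))
```

On Python versions earlier than 3.11, `datetime.fromisoformat` doesn't accept a trailing "Z". Exports that use that suffix would need it replaced with "+00:00" first. The script's output, together with the ticket recording the review, covers both halves of the evidence described above.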

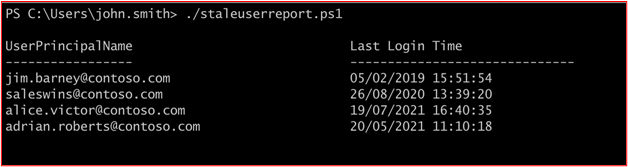

Example Evidence: This first screenshot shows the output of the script which is executed quarterly to view the last sign in attribute for users within Microsoft Entra ID.

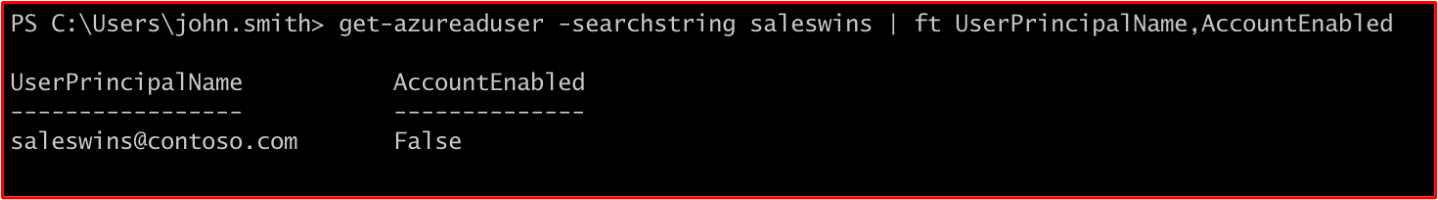

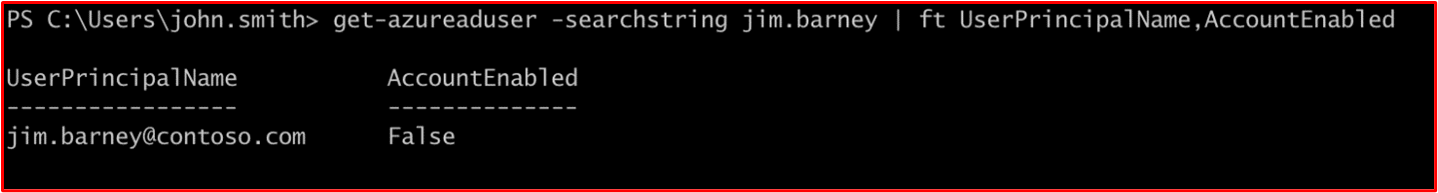

As can be seen in the above screenshot, two users are shown as not having signed in for some time. The following two screenshots show that these two users are disabled.

Control 43: Provide demonstrable evidence that a strong password policy or other suitable mitigations to protect user credentials are in place. The following should be used as a minimum guideline:

Minimum password length of 8 characters

Account lockout threshold of no more than 10 attempts

Password history of a minimum of 5 passwords

Enforcement of the use of strong passwords

Intent: As already discussed, user credentials are often the target of attack by activity groups attempting to gain access to an organization's environment. The intent of a strong password policy is to try to force users into picking strong passwords to mitigate the chances of activity groups being able to brute force them. The intention of adding the "or other suitable mitigations" is to recognize that organizations may implement other security measures to help protect user credentials based on industry developments such as "NIST Special Publication 800-63B".
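The minimum guideline for this control can be expressed as a simple check. In this minimal Python sketch, the PasswordPolicy fields are hypothetical and would need to be filled in from wherever your settings actually live, such as a GPO export, a PAM configuration or a SaaS admin portal. A lockout threshold of 0 is treated as a gap, following the Windows convention that 0 means accounts are never locked out.

```python
# Minimal sketch: compare password settings with the Control 43 minimums.
# The PasswordPolicy fields are hypothetical; fill them from your own config.
from dataclasses import dataclass


@dataclass
class PasswordPolicy:
    min_length: int
    lockout_threshold: int  # failed attempts before lockout; 0 = never (Windows)
    history_count: int      # number of previous passwords remembered
    complexity_enforced: bool


def policy_gaps(policy: PasswordPolicy) -> list[str]:
    gaps = []
    if policy.min_length < 8:
        gaps.append(f"minimum length {policy.min_length} < 8")
    if not 1 <= policy.lockout_threshold <= 10:
        gaps.append(f"lockout threshold {policy.lockout_threshold} not in 1-10")
    if policy.history_count < 5:
        gaps.append(f"password history {policy.history_count} < 5")
    if not policy.complexity_enforced:
        gaps.append("strong password use not enforced")
    return gaps


if __name__ == "__main__":
    print(policy_gaps(PasswordPolicy(14, 5, 24, True)))
```

An empty result means the policy meets the listed minimums. A non-empty result isn't automatically a failure, because the control also allows "other suitable mitigations". Each gap would, however, need to be justified.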

Example Evidence Guidelines: Evidence to demonstrate a strong password policy may be a screenshot of an organization's Group Policy Object or Local Security Policy "Account Policies > Password Policy" and "Account Policies > Account Lockout Policy" settings. The evidence depends on the technologies being used. For example, for Linux it could be the /etc/pam.d/common-password config file, and for Bitbucket the "Authentication Policies" section within the Admin Portal (https://support.atlassian.com/security-and-access-policies/docs/manage-your-password-policy/).
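For the Linux case, this minimal Python sketch pulls the minlen= and remember= options out of the password lines of a Debian/Ubuntu-style /etc/pam.d/common-password. It assumes those options are set on modules such as pam_pwquality, pam_unix or pam_pwhistory. Account lockout (for example pam_faillock's deny= option) is typically configured in the auth stack or /etc/security/faillock.conf instead, and isn't covered here.

```python
# Minimal sketch: read minlen= and remember= from PAM "password" lines.
# Assumes a Debian/Ubuntu-style /etc/pam.d/common-password.
import re

OPTION_RE = re.compile(r"(?<![\w-])(minlen|remember)=(\d+)")


def pam_password_settings(config_text: str) -> dict[str, int]:
    settings: dict[str, int] = {}
    for raw in config_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        # A leading "-" means "skip if the module is missing"; the type follows it.
        if not line.lstrip("-").startswith("password"):
            continue  # only the password management group is relevant here
        for key, value in OPTION_RE.findall(line):
            # If several modules set the same option, keep the strictest value.
            settings[key] = max(settings.get(key, 0), int(value))
    return settings


if __name__ == "__main__":
    sample = (
        "password requisite pam_pwquality.so retry=3 minlen=12\n"
        "password [success=1 default=ignore] pam_unix.so obscure yescrypt remember=5\n"
    )
    print(pam_password_settings(sample))
```

pam_pwquality's credit options (dcredit, ucredit and similar) can allow passwords shorter than minlen. Those settings are therefore worth checking alongside it.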

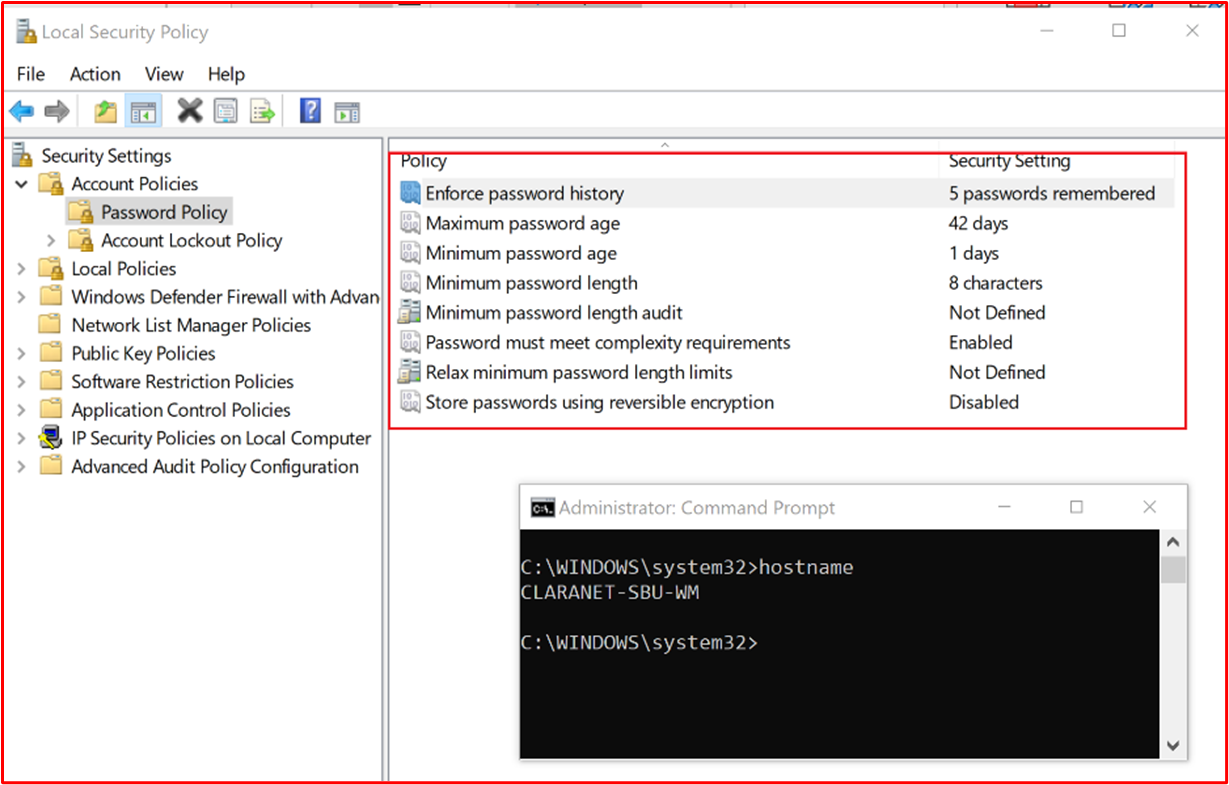

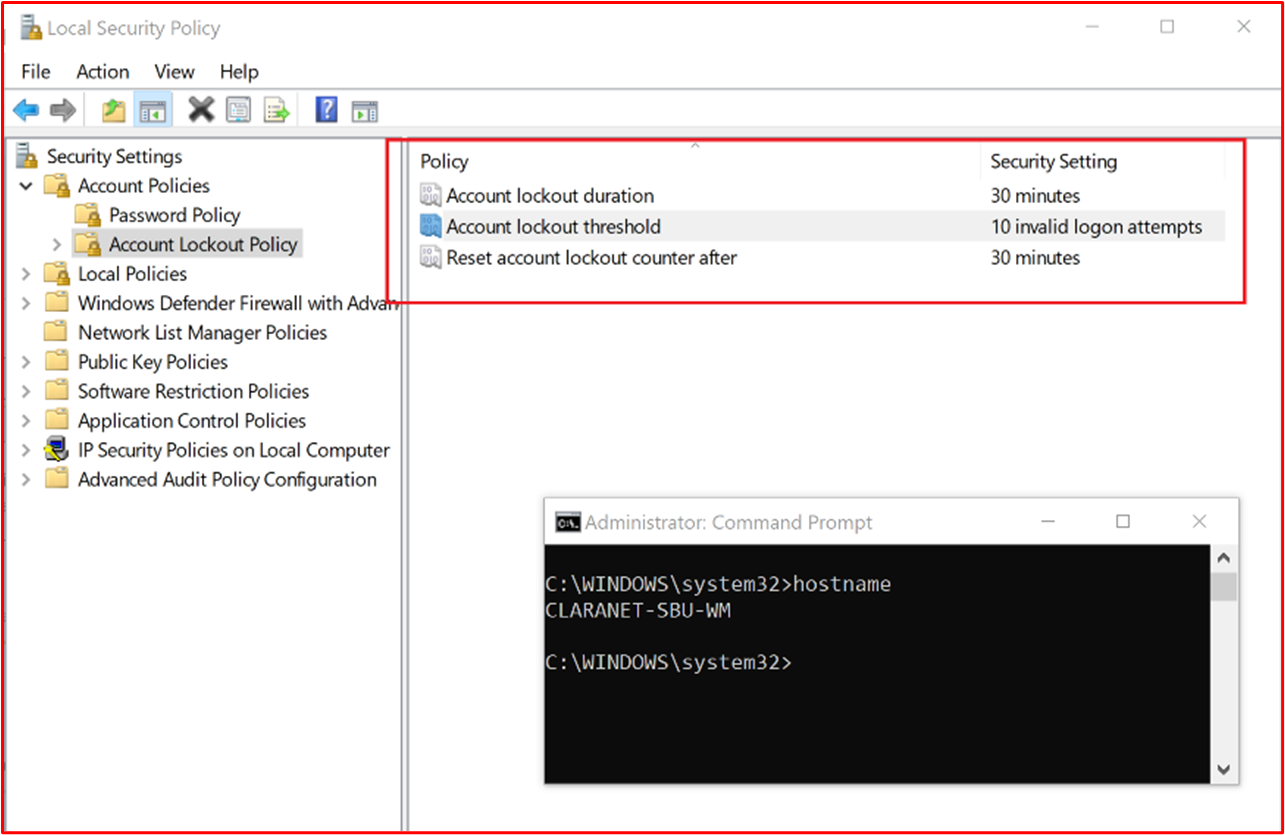

Example Evidence: The evidence below shows the password policy configured within the "Local Security Policy" of the in-scope system component "CLARANET-SBU-WM".

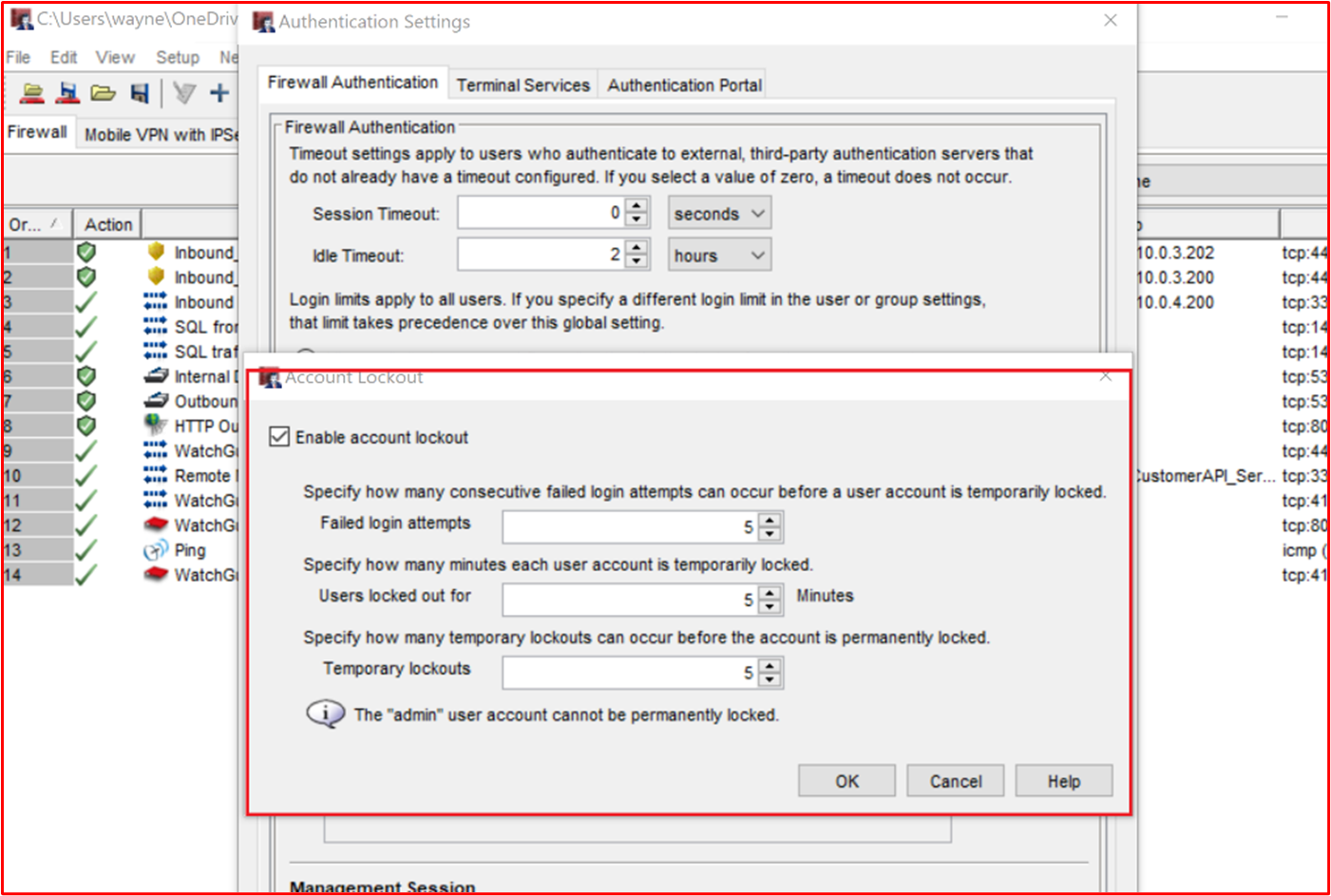

The screenshot below shows Account Lockout settings for a WatchGuard Firewall.

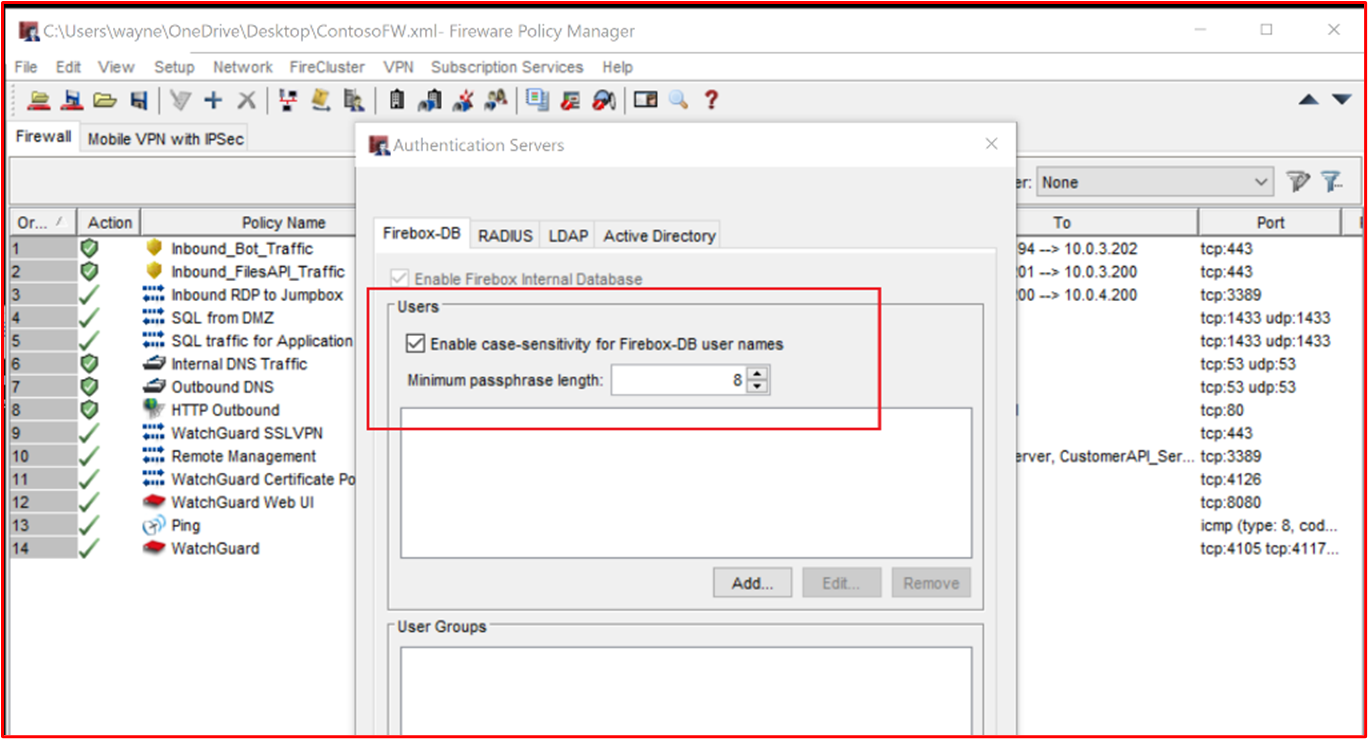

Below is an example of the minimum passphrase length setting for the WatchGuard Firewall.

Control 44: Provide demonstrable evidence that unique user accounts are issued to all users.

Intent: The intent of this control is accountability. By issuing users with their own unique user accounts, users will be accountable for their actions as user activity can be tracked to an individual user.

Example Evidence Guidelines: Evidence would be by way of screenshots showing configured user accounts across the in-scope system components which may include servers, code repositories, cloud management platforms, Active Directory, Firewalls, etc.
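To support this evidence, an account inventory can be checked for accounts that aren't tied to exactly one named person. This minimal Python sketch assumes a hypothetical export of (system, account, owner) rows. In that export, a shared account appears with more than one owner, and an unassigned account has a blank owner.

```python
# Minimal sketch: flag accounts that aren't assigned to exactly one person.
# Assumes a hypothetical inventory of (system, account, owner) rows; a blank
# owner means the account hasn't been assigned to anyone.
from collections import defaultdict


def non_unique_accounts(rows: list[tuple[str, str, str]]) -> dict[tuple[str, str], list[str]]:
    owners: dict[tuple[str, str], set[str]] = defaultdict(set)
    for system, account, owner in rows:
        named = owners[(system, account)]  # defaultdict creates an empty set on first use
        if owner.strip():
            named.add(owner.strip())
    # Shared (several owners) or unassigned (no owner) accounts break accountability.
    return {key: sorted(who) for key, who in owners.items() if len(who) != 1}


if __name__ == "__main__":
    inventory = [
        ("fw01", "admin", "Alice"),
        ("fw01", "admin", "Bob"),
        ("srv01", "alice", "Alice"),
    ]
    print(non_unique_accounts(inventory))
```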

Example Evidence: The following screenshot shows user accounts configured for the in-scope system component "CLARANET-SBU-WM".

This next screenshot shows the Administrator account is disabled on the in-scope system component "CLARANET-SBU-WM".

This next screenshot shows the Guest account is disabled on the in-scope system component "CLARANET-SBU-WM".

This next screenshot shows that the DefaultAccount is disabled on the in-scope system component "CLARANET-SBU-WM".

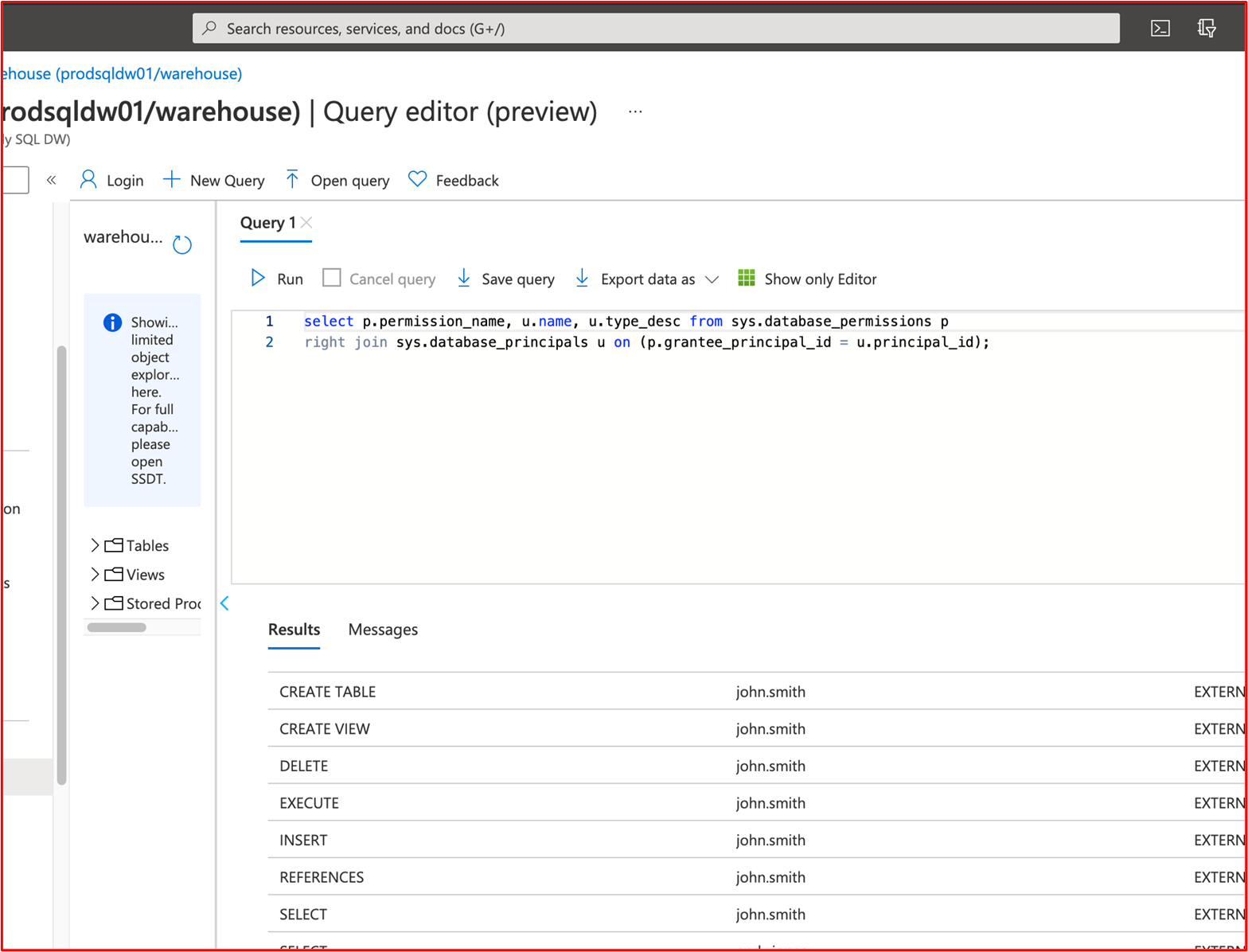

Control 45: Provide demonstrable evidence that least privilege principles are being followed within the environment.

Intent: Users should only be provided with the privileges necessary to fulfill their job function. This limits the risk of a user intentionally or unintentionally accessing data they shouldn't, or carrying out a malicious act. Following this principle also reduces the potential attack surface (that is, privileged accounts) that a malicious activity group can target.

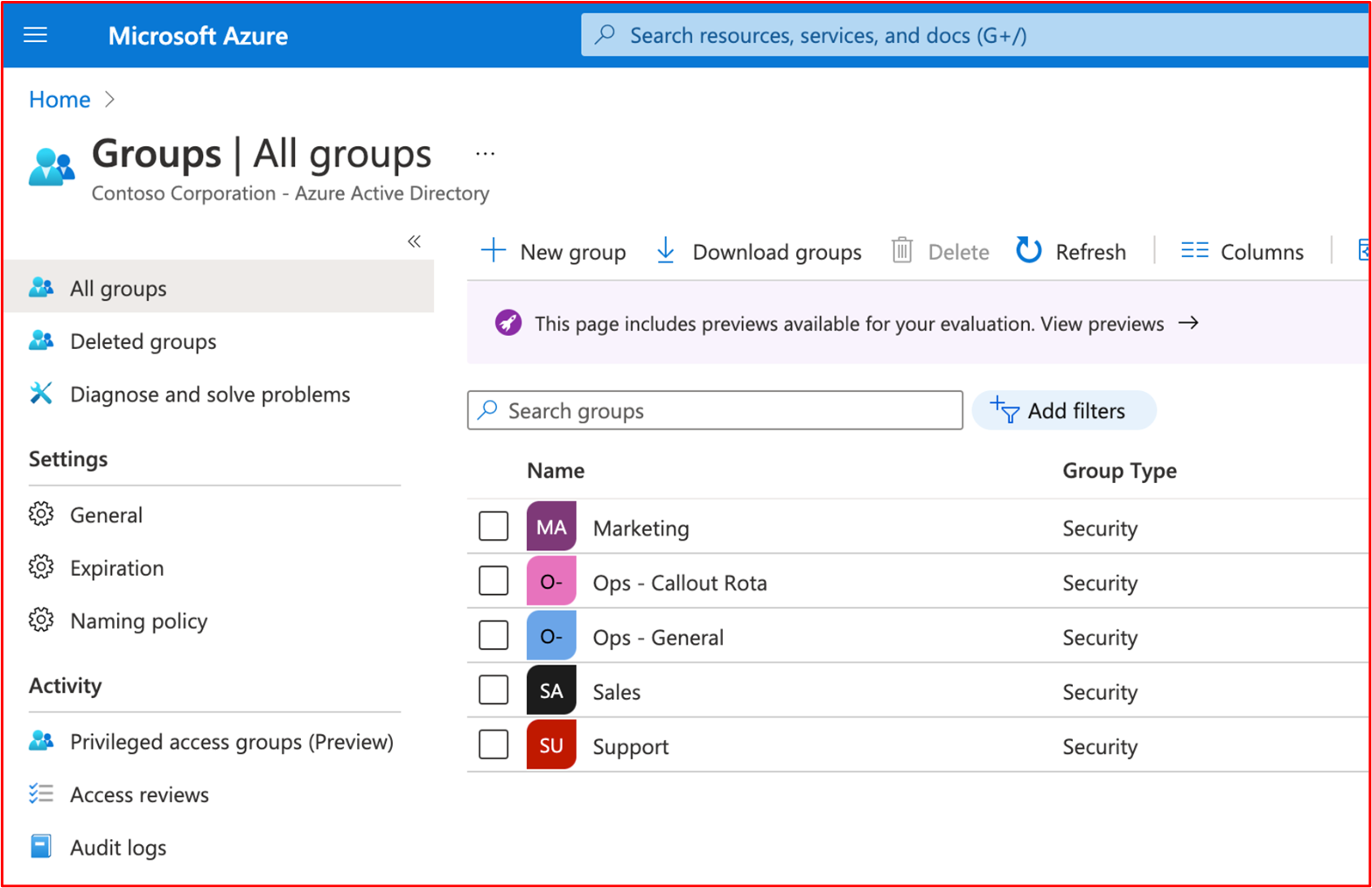

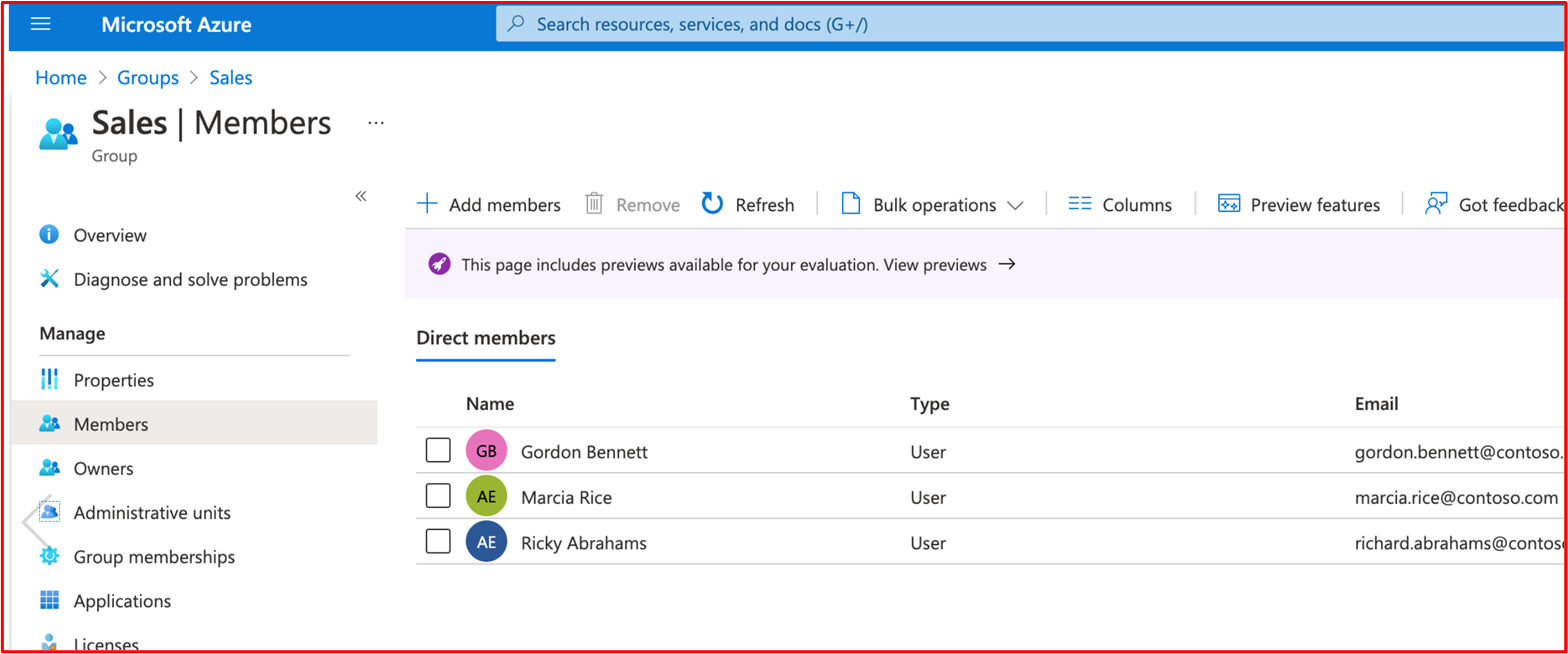

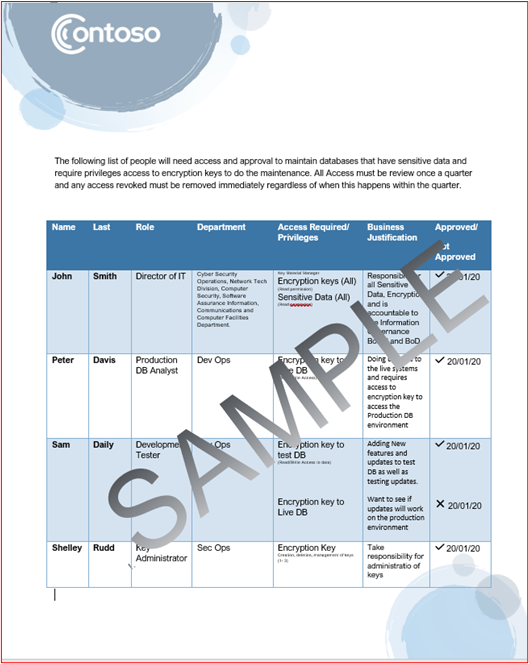

Example Evidence Guidelines: Most organizations use groups to assign privileges based on teams within the organization. Evidence could be screenshots showing the various privileged groups, each containing only user accounts from the teams that require those privileges. This would usually be backed up by supporting policies/processes that define each group, the privileges it requires and the business justification. A hierarchy of team members should also be provided so that group membership can be validated as correctly configured.

For example, within Azure, membership of the Owners group should be limited; this should be documented, and only a limited number of people should be assigned to that group. Another example is restricting the ability to make code changes to a limited number of staff. A group may be set up with this privilege, containing only the staff members deemed to need it. This should be documented so that the certification analyst can cross-reference the document with the configured groups, and so on.
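The cross-referencing described above can be sketched in Python as a comparison between documented and configured membership. Both inputs are assumed to be plain dictionaries that map a group name to a set of member names. How they're exported (from Microsoft Entra ID, Active Directory, GitLab and so on) is left open.

```python
# Minimal sketch: compare documented group membership with configured membership.
# Both inputs are assumed dicts of group name -> set of member names.


def membership_drift(documented: dict[str, set[str]],
                     configured: dict[str, set[str]]) -> dict[str, dict[str, list[str]]]:
    report = {}
    for group in sorted(documented.keys() | configured.keys()):
        expected = documented.get(group, set())
        actual = configured.get(group, set())
        unexpected = sorted(actual - expected)  # privileges with no documented justification
        missing = sorted(expected - actual)     # documented but not configured
        if unexpected or missing:
            report[group] = {"unexpected": unexpected, "missing": missing}
    return report


if __name__ == "__main__":
    documented = {"Owners": {"alice"}, "CodeWriters": {"bob", "carol"}}
    configured = {"Owners": {"alice", "dave"}, "CodeWriters": {"bob", "carol"}}
    print(membership_drift(documented, configured))
```

Groups that exist in the configuration but not in the documentation are reported with all their members as unexpected. These are often exactly the groups an analyst would ask about.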

Example Evidence: The following screenshot shows that the environment is configured with groups assigned according to job function.

The following screenshot shows that users are allocated to groups based upon their job function.

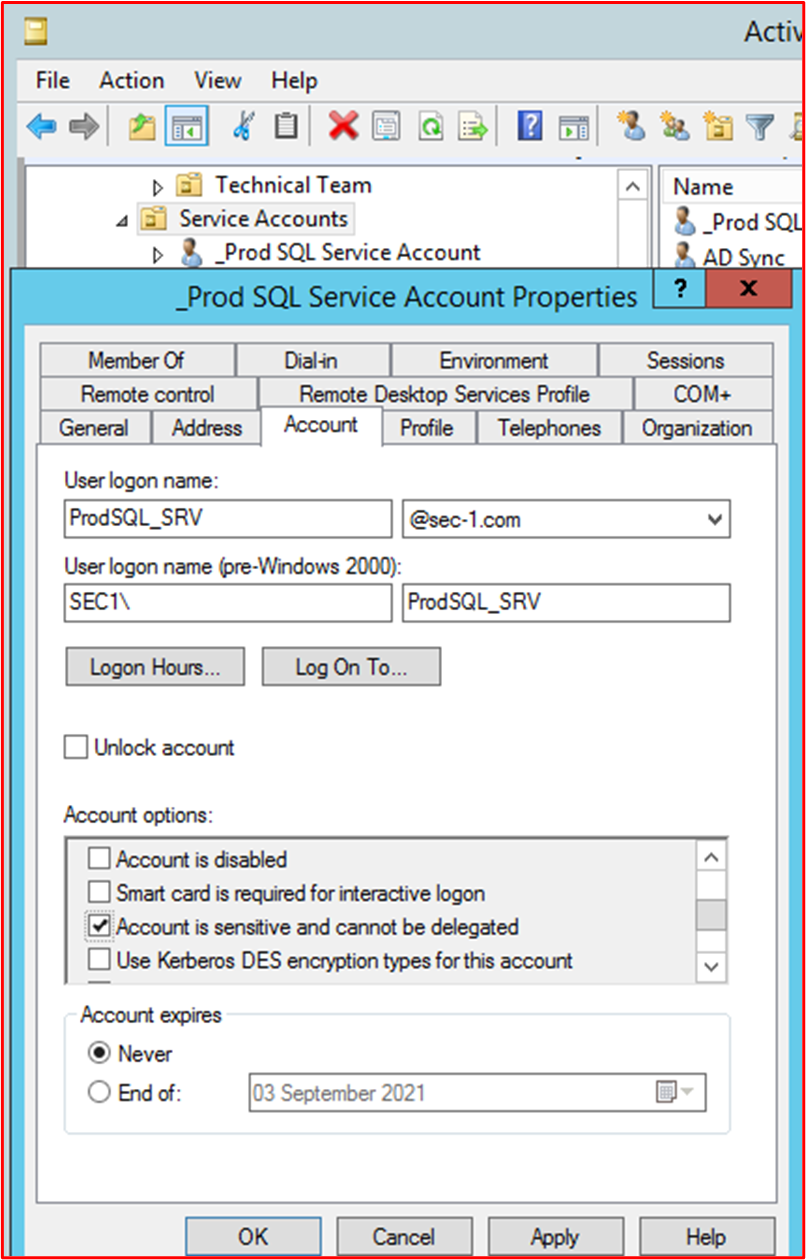

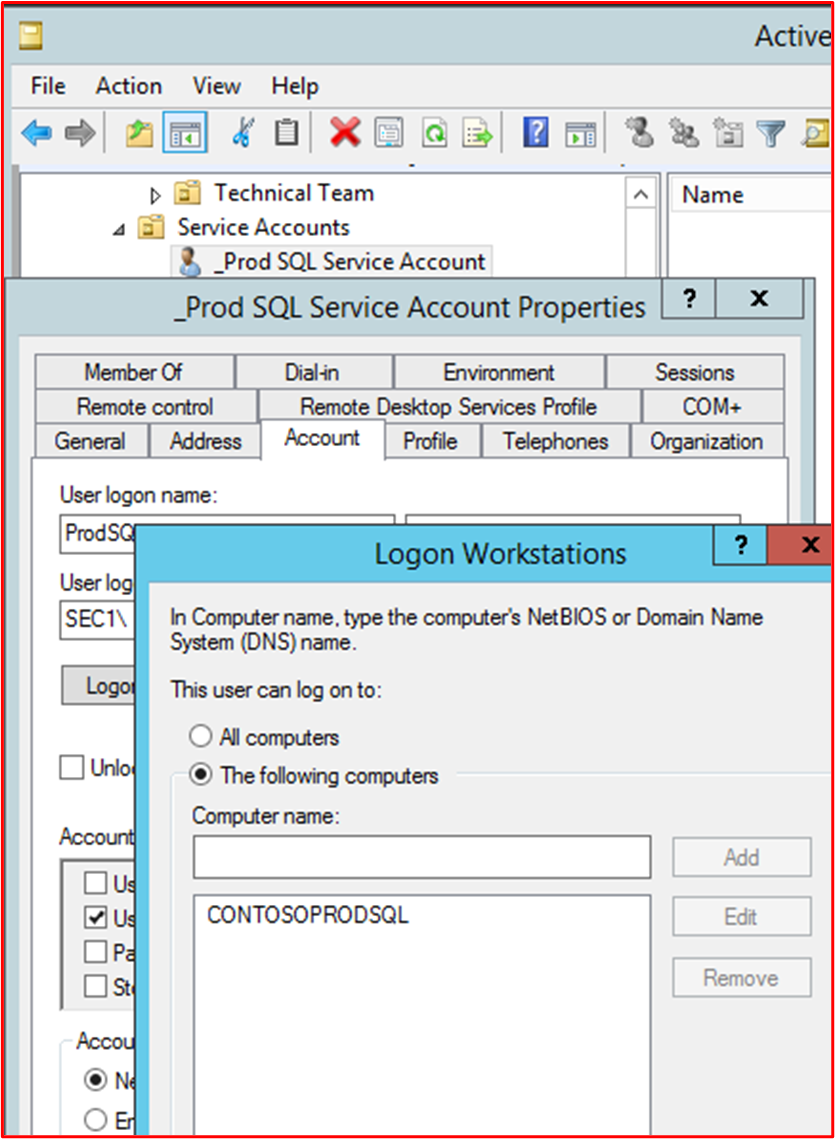

Control 46: Provide demonstrable evidence that a process is in place to secure or harden service accounts and the process is being followed.

Intent: Service accounts are often targeted by activity groups because they're often configured with elevated privileges. These accounts may not follow the standard password policies, because expiring service account passwords often breaks functionality. They may therefore be configured with weak passwords, or with passwords that are reused within the organization. Another potential issue, particularly within a Windows environment, is that the operating system may cache the password hash. This can be a big problem in either of two cases. If the service account is configured within a directory service, it can be used to gain access across multiple systems with whatever privileges it has been granted. If the service account is local, it's likely that the same account and password are used across multiple systems within the environment. These problems can lead to an activity group gaining access to more systems within the environment, and to further elevation of privilege and/or lateral movement. The intent, therefore, is to ensure that service accounts are properly hardened and secured. This helps to protect them from being taken over by an activity group and limits the risk should one of these service accounts be compromised.

Example Evidence Guidelines: There are many guides on the Internet to help harden service accounts. Evidence can be in the form of screenshots that demonstrate how the organization has implemented secure hardening of the account. A few examples (the expectation is that multiple techniques would be used) include:

Restricting the accounts to a set of computers within Active Directory,

Setting the account so that interactive sign-in isn't permitted,

Setting an extremely complex password,

For Active Directory, enabling the "Account is sensitive and cannot be delegated" flag.

These techniques are discussed in the following article: "Segmentation and Shared Active Directory for a Cardholder Data Environment".
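Some of these settings can be checked from Active Directory attributes. In this minimal Python sketch, the userAccountControl bit 0x100000 (NOT_DELEGATED) corresponds to "Account is sensitive and cannot be delegated". The bit 0x80000 (TRUSTED_FOR_DELEGATION) indicates unconstrained delegation, and the userWorkstations attribute holds the "Log On To" computer restriction. The inputs are assumed to come from an LDAP or PowerShell export. Interactive sign-in restrictions are set through User Rights Assignment rather than these attributes, so they need separate evidence.

```python
# Minimal sketch: review delegation and computer restrictions on an AD service
# account. Inputs are assumed to come from an LDAP/PowerShell export.
NOT_DELEGATED = 0x100000          # "Account is sensitive and cannot be delegated"
TRUSTED_FOR_DELEGATION = 0x80000  # unconstrained Kerberos delegation


def service_account_findings(user_account_control: int, user_workstations: str) -> list[str]:
    findings = []
    if not user_account_control & NOT_DELEGATED:
        findings.append("'Account is sensitive and cannot be delegated' is not set")
    if user_account_control & TRUSTED_FOR_DELEGATION:
        findings.append("account is trusted for unconstrained delegation")
    if not user_workstations.strip():
        findings.append("no 'Log On To' computer restriction (userWorkstations is empty)")
    return findings


if __name__ == "__main__":
    # 0x200 is NORMAL_ACCOUNT; 0x100000 adds the "sensitive" flag.
    print(service_account_findings(0x200 | 0x100000, "SQL01"))
```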

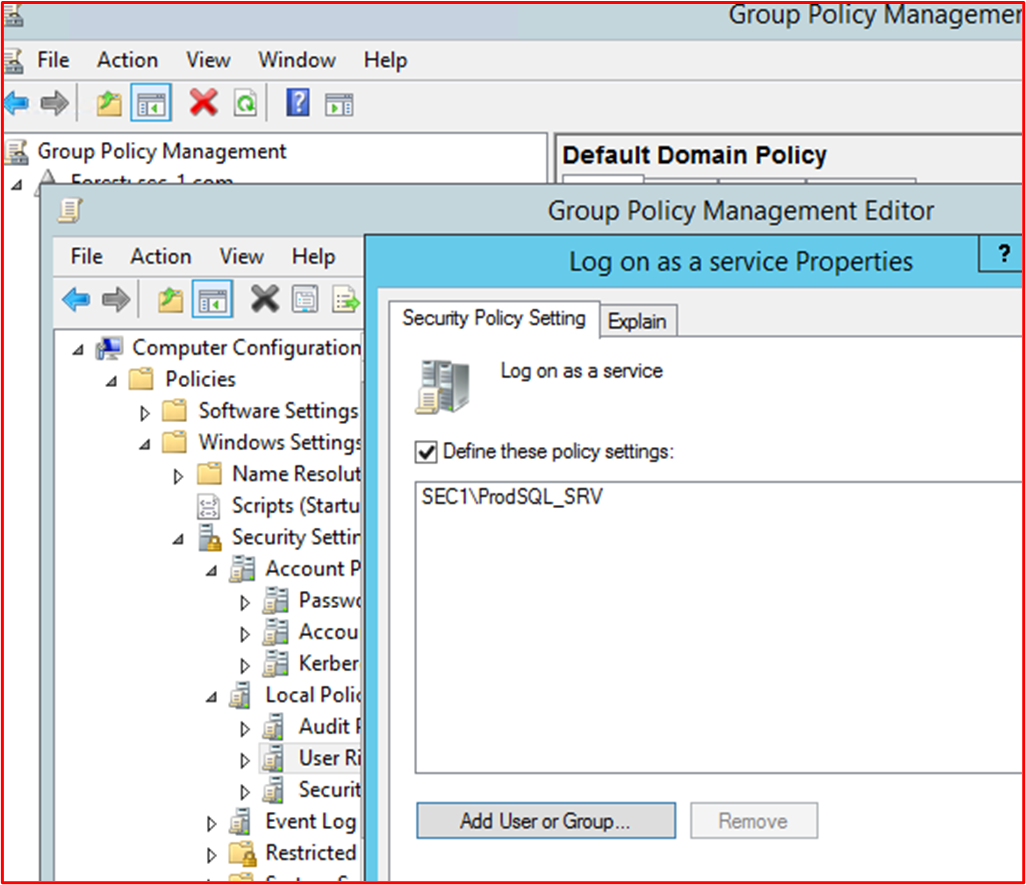

Example Evidence: There are multiple ways to harden a service account, and the right ones will depend on each individual environment. The mechanisms suitable for, and used in, your environment should be documented within the Account Management policy/procedure document referred to earlier, which will help when reviewing this evidence. Below are some of the mechanisms that may be employed:

The following screenshot shows the 'Account is sensitive and cannot be delegated' option is selected on the service account "_Prod SQL Service Account".

This next screenshot shows that the service account "_Prod SQL Service Account" is locked down to the SQL Server and can only sign in to that server.

This next screenshot shows that the service account "_Prod SQL Service Account" is only allowed to sign in as a service.

Control 47: Provide demonstrable evidence that MFA is configured for all remote access connections and all non-console administrative interfaces.

Terms defined as:

Remote Access – Typically, this refers to technologies used to access the supporting environment. For example, Remote Access IPSec VPN, SSL VPN or Jumpbox/Bastion Host.

Non-console Administrative Interfaces – Typically, this refers to administrative connections made over the network to system components. These could be over Remote Desktop, SSH or a web interface.

Intent: The intent of this control is to provide mitigations against brute-forcing of privileged accounts and of accounts with secure access into the environment. With multi-factor authentication (MFA) in place, an account whose password has been compromised should still be protected against a successful sign-in, because the MFA mechanism should remain secure. This helps to ensure that all access and administrative actions are only carried out by authorized and trusted staff members.

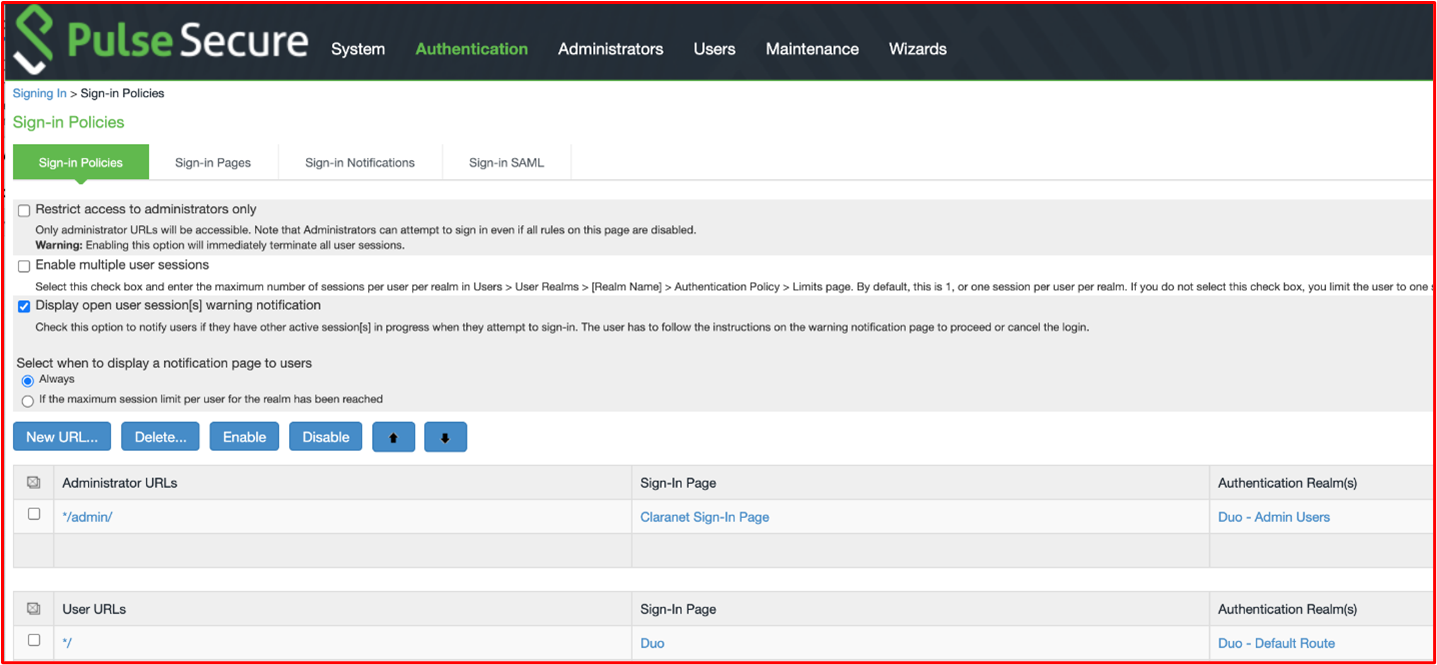

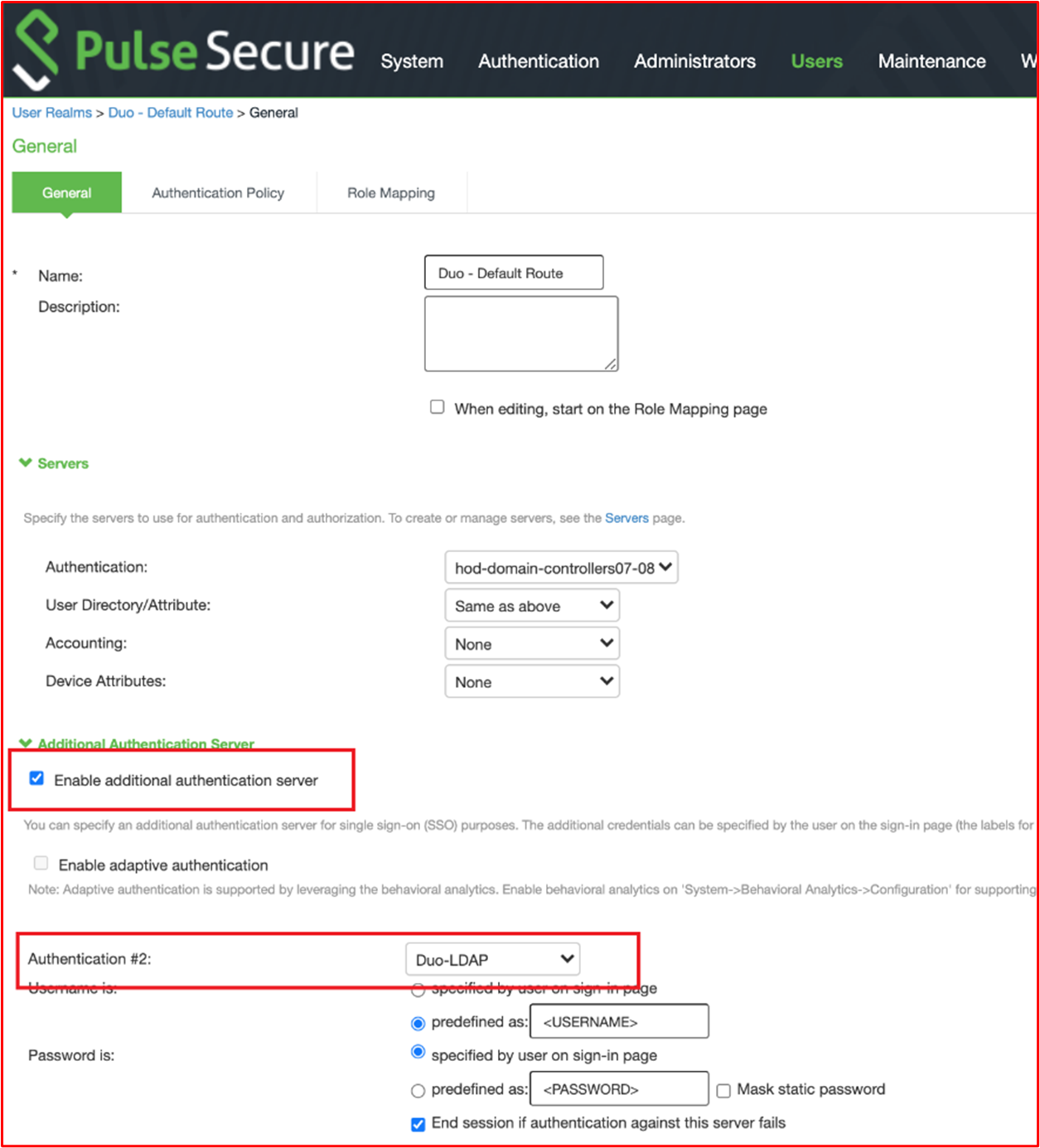

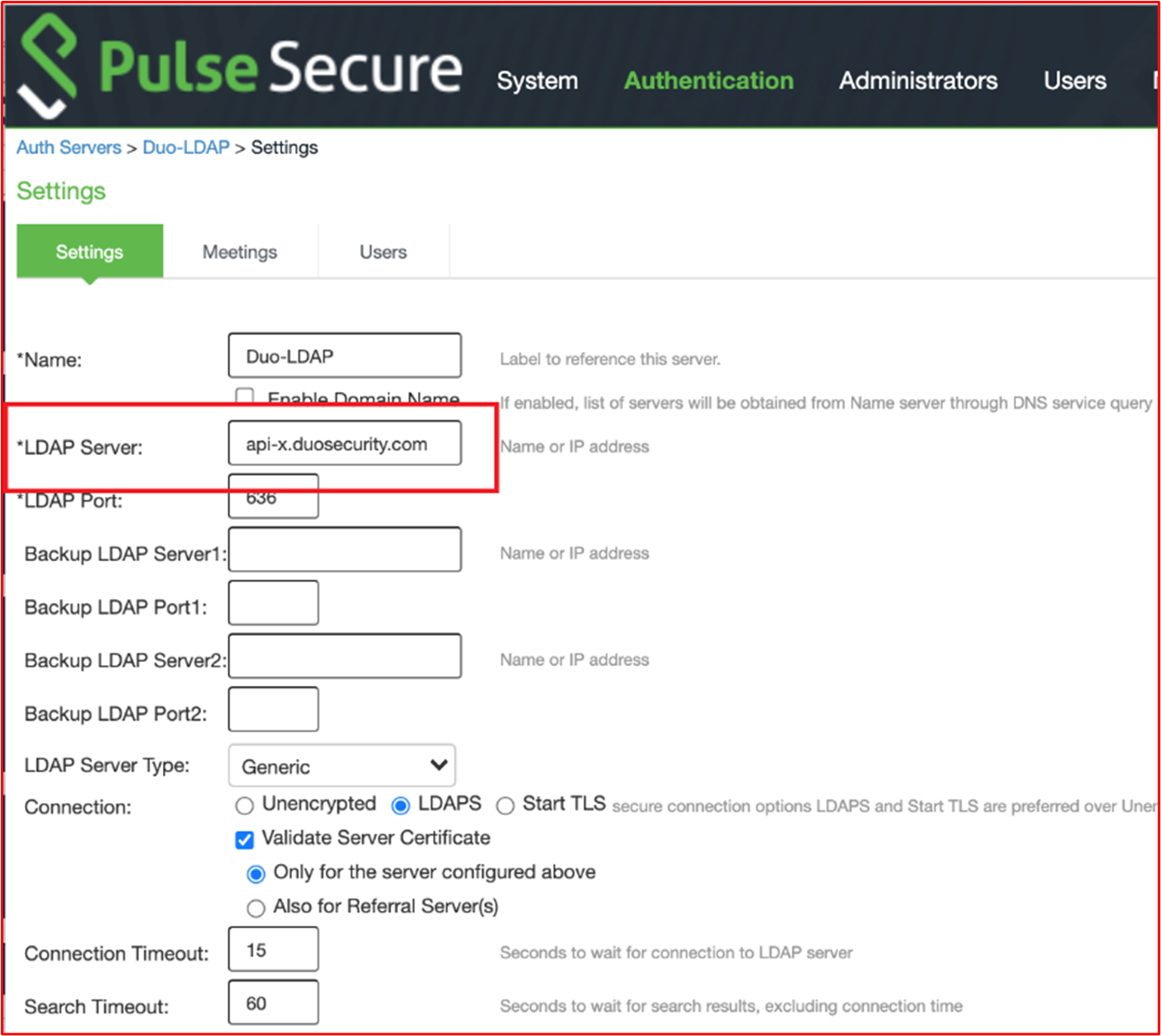

Example Evidence Guidelines: Evidence needs to show that MFA is enabled on all technologies that fit into the above categories. This may be through a screenshot showing that MFA is enabled at the system level. By system level, we mean evidence that MFA is enabled for all users, not just an example of a single account with MFA enabled. Where the technology relies on a separate MFA solution for authentication, the evidence needs to demonstrate that this solution is enabled and in use. For example, where the technology is set up for RADIUS authentication pointing to an MFA provider, you also need to evidence that the RADIUS server it points to is an MFA solution and that accounts are configured to use it.
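To show coverage for all users rather than a single example, an identity provider's user export can be summarized. This minimal Python sketch assumes the export has already been reduced to (user, MFA enabled) pairs. It doesn't cover the RADIUS case, where the MFA provider's own configuration still needs to be evidenced.

```python
# Minimal sketch: summarize MFA coverage across all users in an export.
# Assumes the export has been reduced to (user, mfa_enabled) pairs.


def mfa_coverage(users: list[tuple[str, bool]]) -> tuple[float, list[str]]:
    """Return (percentage of users with MFA, sorted users without MFA)."""
    if not users:
        raise ValueError("no users supplied")
    missing = sorted(name for name, enabled in users if not enabled)
    percentage = 100.0 * (len(users) - len(missing)) / len(users)
    return percentage, missing


if __name__ == "__main__":
    print(mfa_coverage([("alice", True), ("bob", True), ("carol", False)]))
```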

Example Evidence 1: The following screenshots show the authentication realms configured on Pulse Secure, which is used for remote access into the environment. Authentication is backed by the Duo SaaS service for MFA support.

This screenshot demonstrates that an additional authentication server is enabled which is pointing to "Duo-LDAP" for the 'Duo - Default Route' authentication realm.

This final screenshot shows the configuration for the Duo-LDAP authentication server which demonstrates that this is pointing to the Duo SaaS service for MFA.

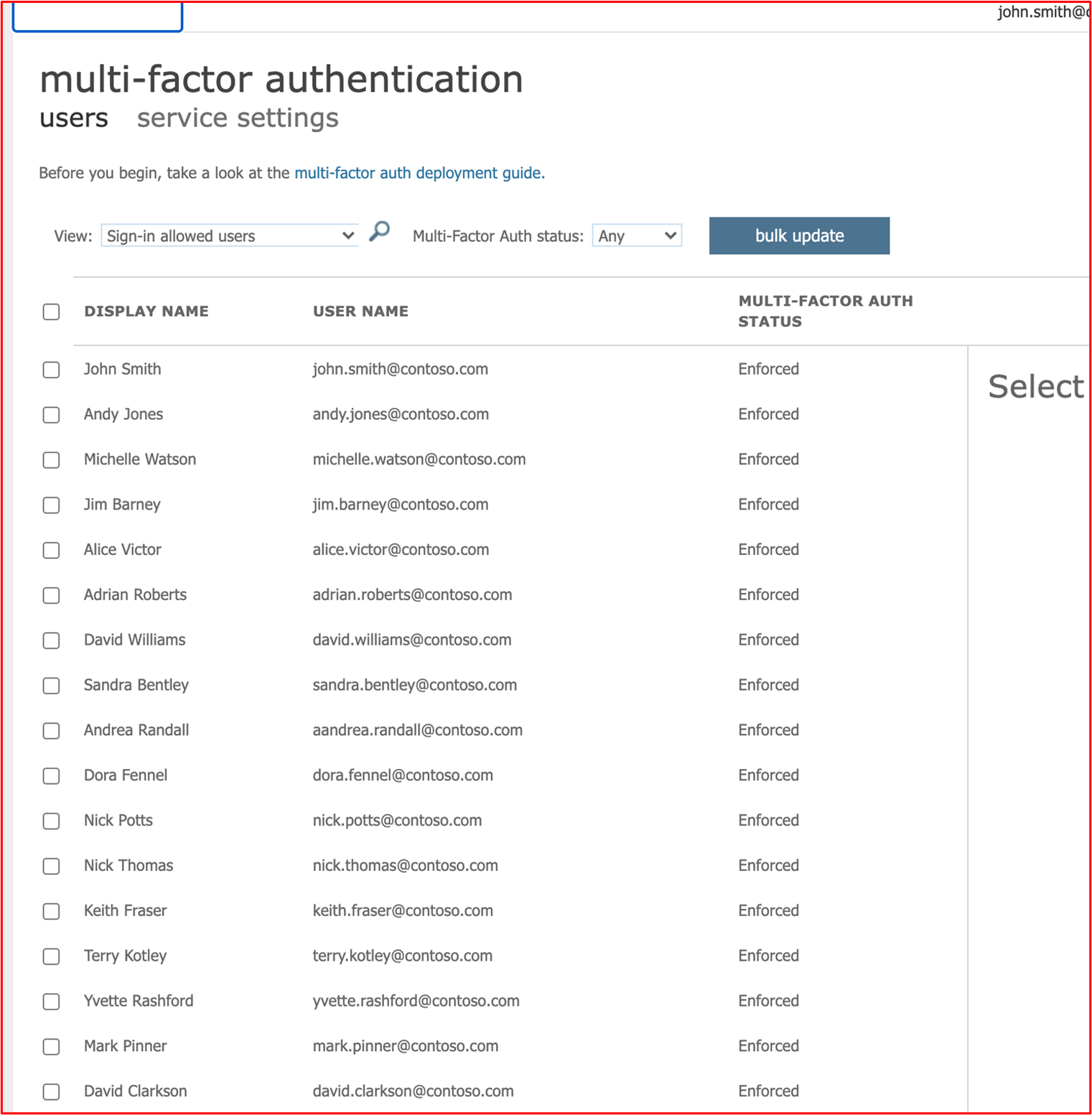

Example Evidence 2: The following screenshots show that all Azure users have MFA enabled.

Note: You'll need to provide evidence for all non-console connections to demonstrate that MFA is enabled for them. For example, if you use RDP or SSH to connect to servers or other system components (such as firewalls), evidence is needed for those connections too.

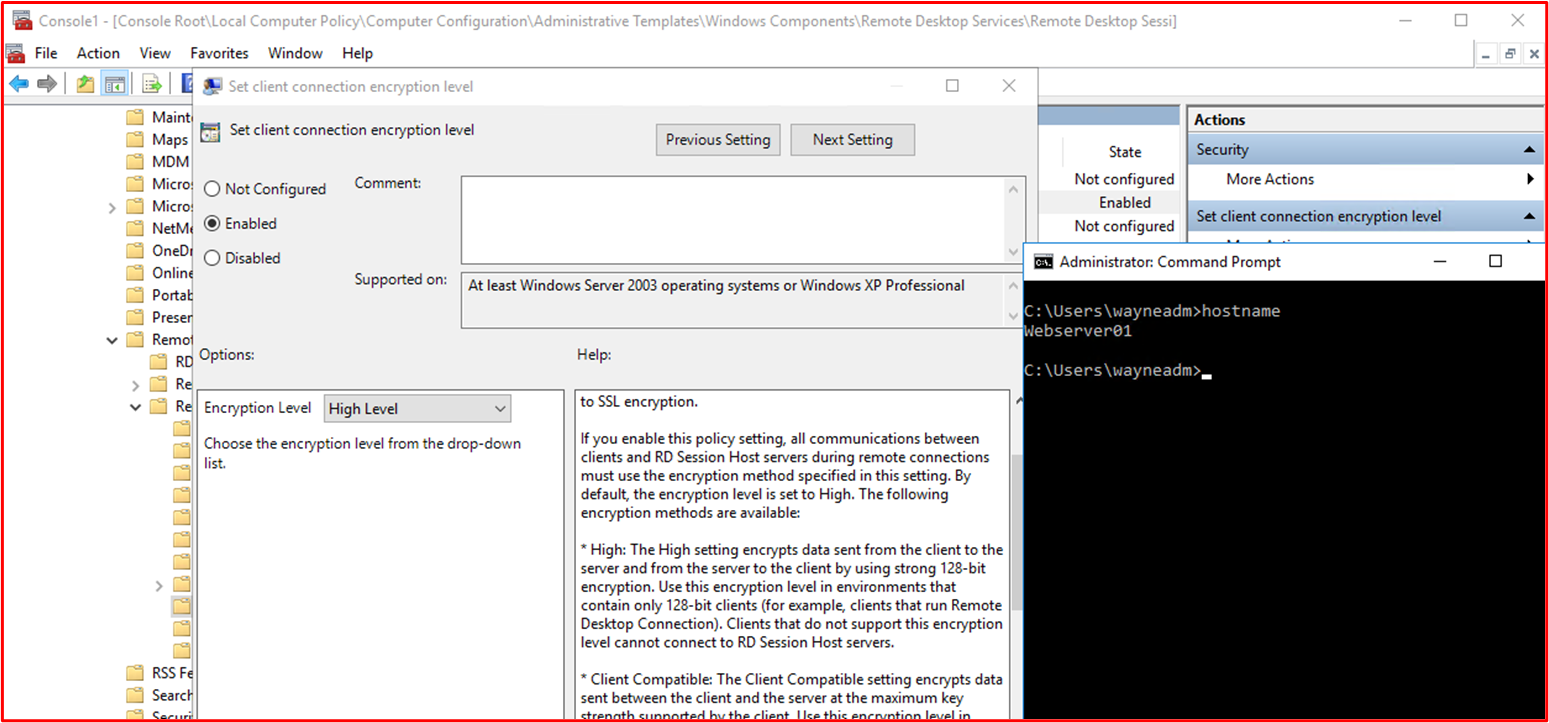

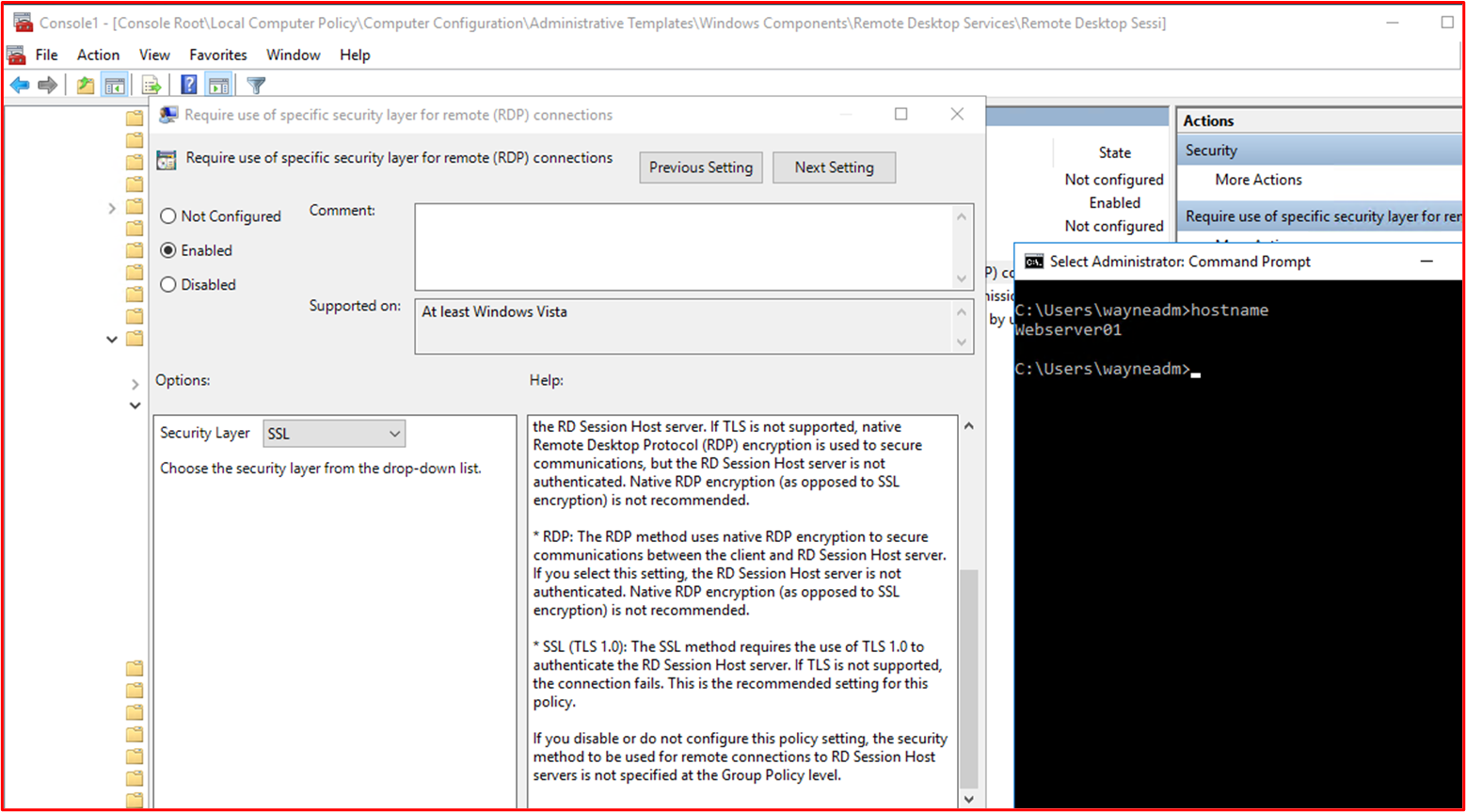

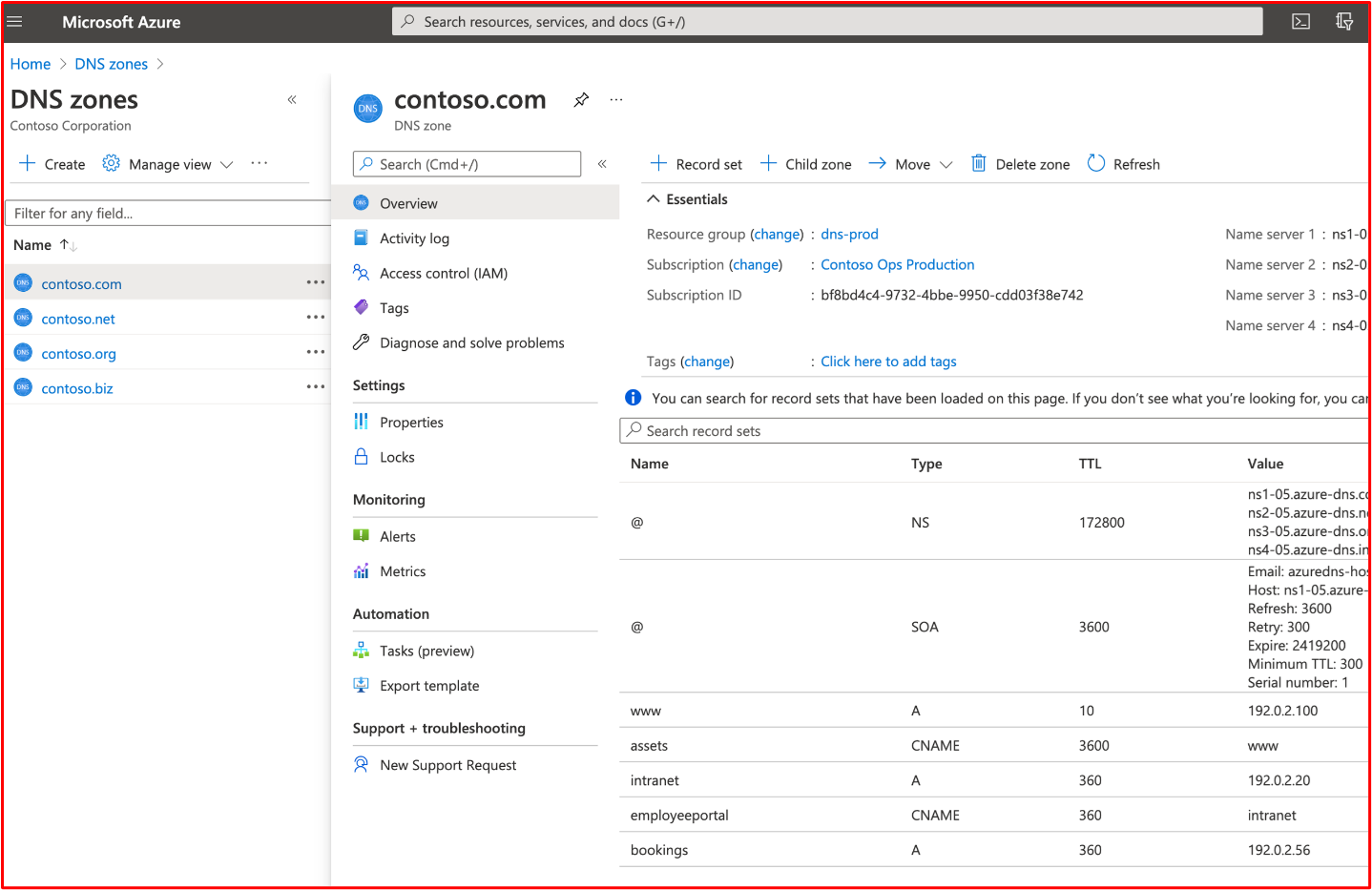

Control 48: Provide demonstrable evidence that strong encryption is configured for all remote access connections and all non-console administrative interfaces, including access to any code repositories and cloud management interfaces.

Terms defined as:

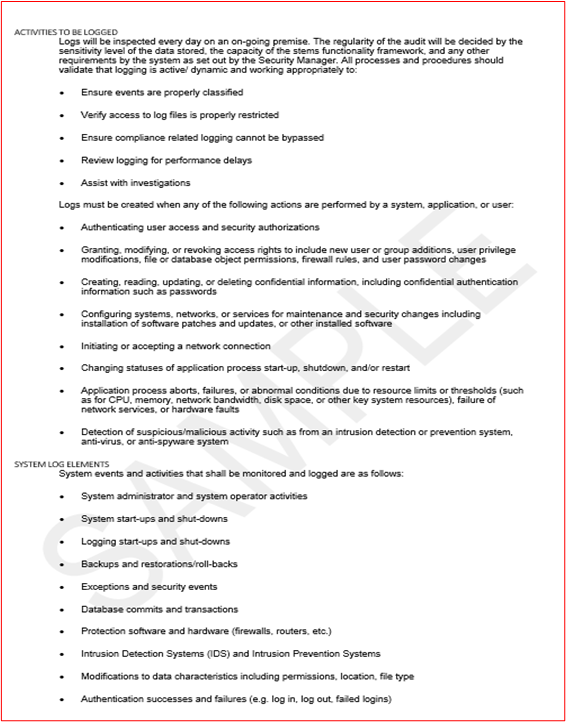

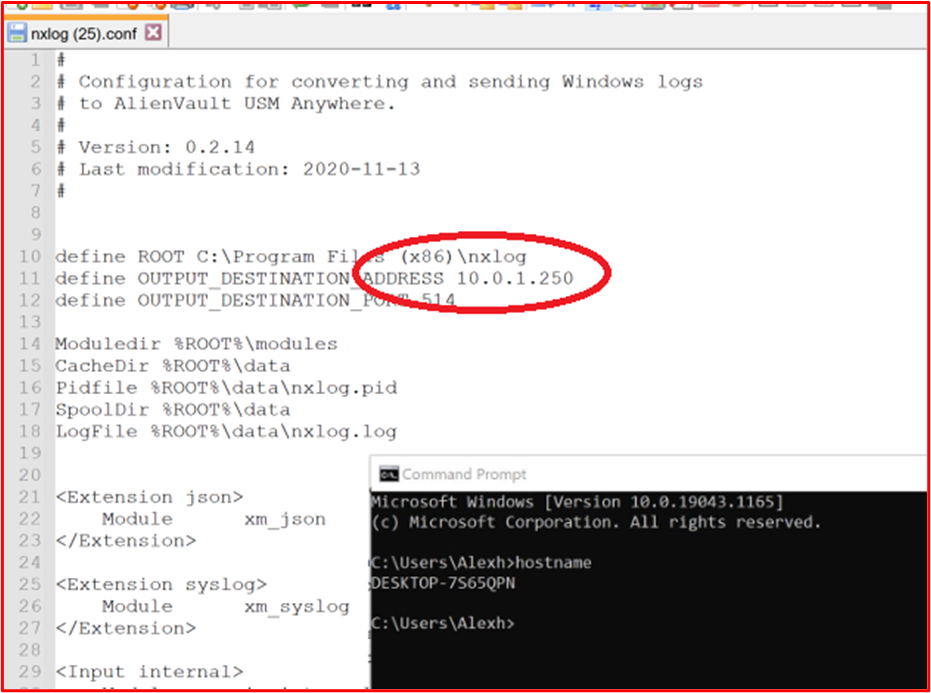

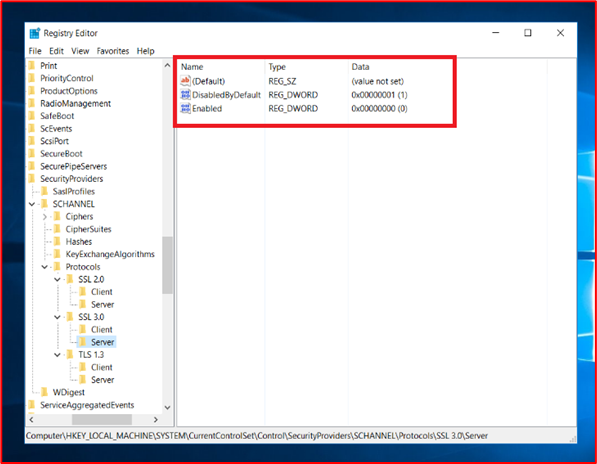

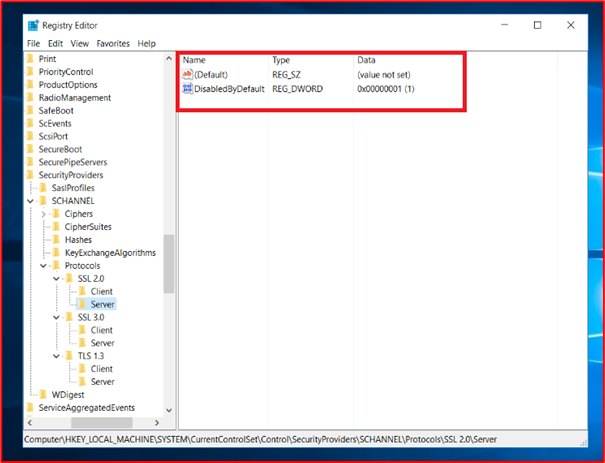

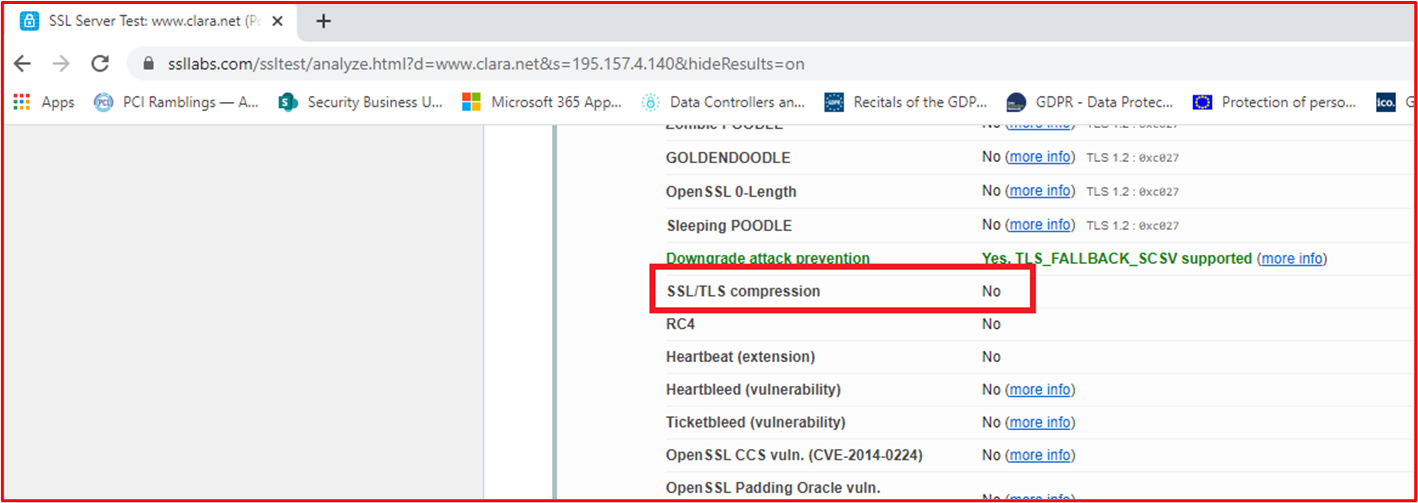

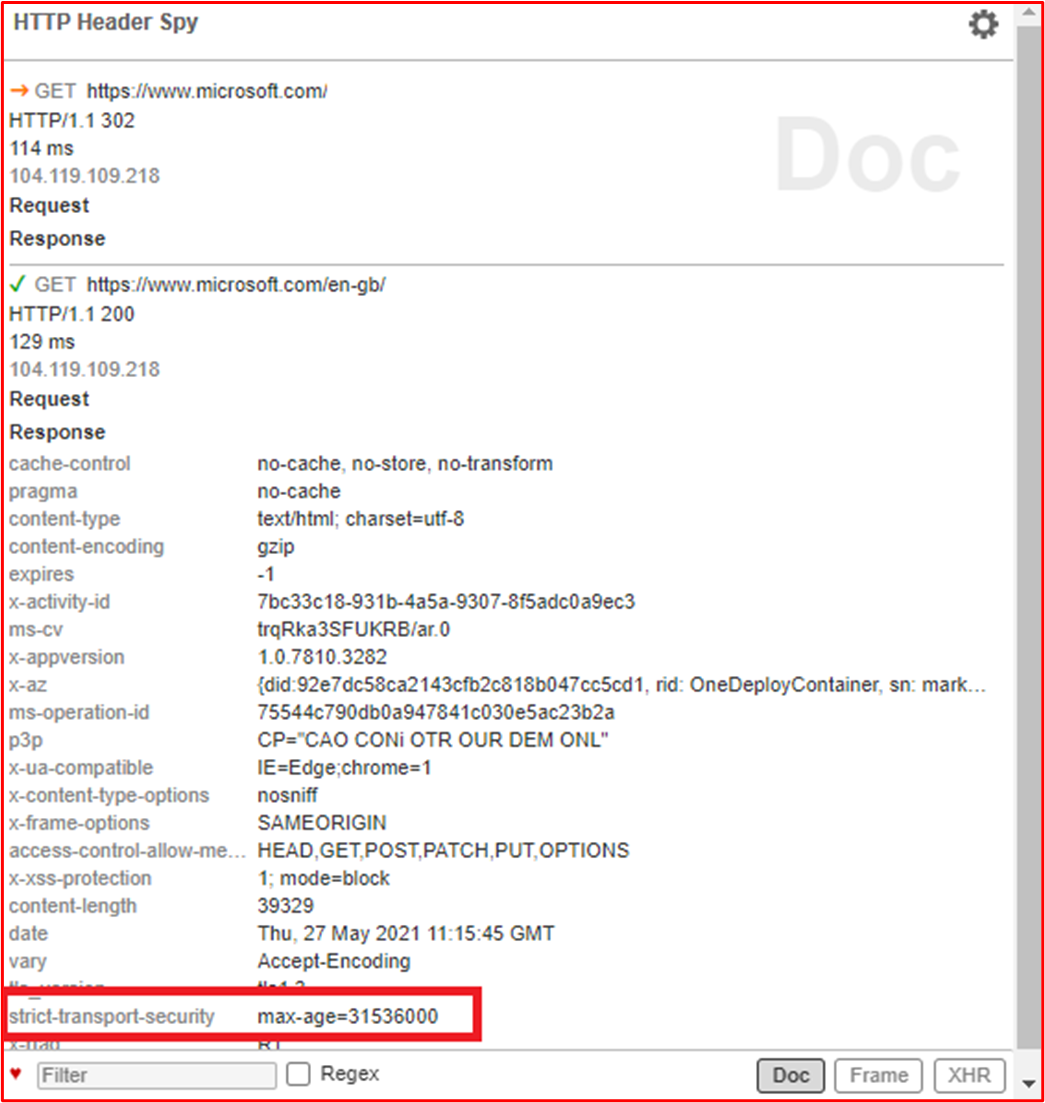

Code Repositories – The code base of the app needs to be protected against malicious modification which could introduce malware into the app. MFA needs to be configured on the code repository.